Fei Chen

Fusing Visuo-Tactile Perception into Kernelized Synergies for Robust Grasping and Fine Manipulation of Non-rigid Objects

Sep 15, 2021

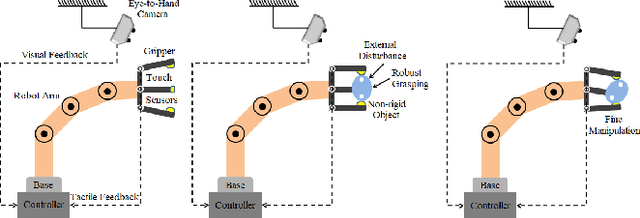

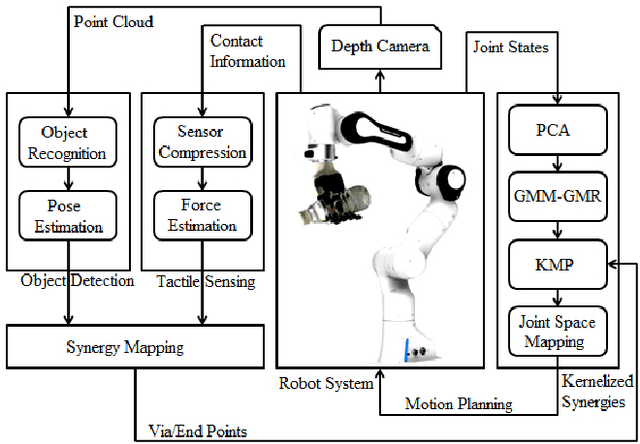

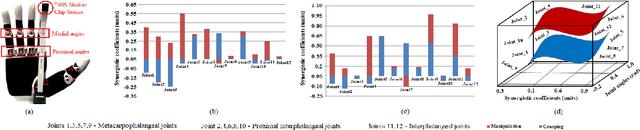

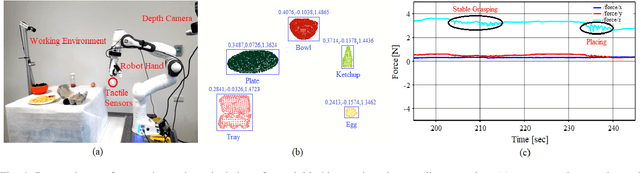

Abstract: Handling non-rigid objects with robot hands necessitates a framework that incorporates not only human-level dexterity and cognition but also multi-sensory information and system dynamics for robust and fine interactions. In this research, our previously developed kernelized synergies framework, inspired by the human behaviour of reusing the same subspace for grasping and manipulation, is augmented with visuo-tactile perception for autonomous and flexible adaptation to unknown objects. To detect objects and estimate their poses, a simplified visual pipeline using the RANSAC algorithm with Euclidean clustering and an SVM classifier is exploited. To modulate interaction efforts while grasping and manipulating non-rigid objects, tactile feedback from a T40S shokac chip sensor, which generates 3D force information, is incorporated. Moreover, different kernel functions are examined in the kernelized synergies framework to evaluate its performance and potential with respect to task reproducibility, execution, generalization and synergistic re-usability. Experiments performed with a robot arm-hand system validate the capability and usability of the upgraded framework for stably grasping and dexterously manipulating non-rigid objects.

LV-BERT: Exploiting Layer Variety for BERT

Jun 25, 2021

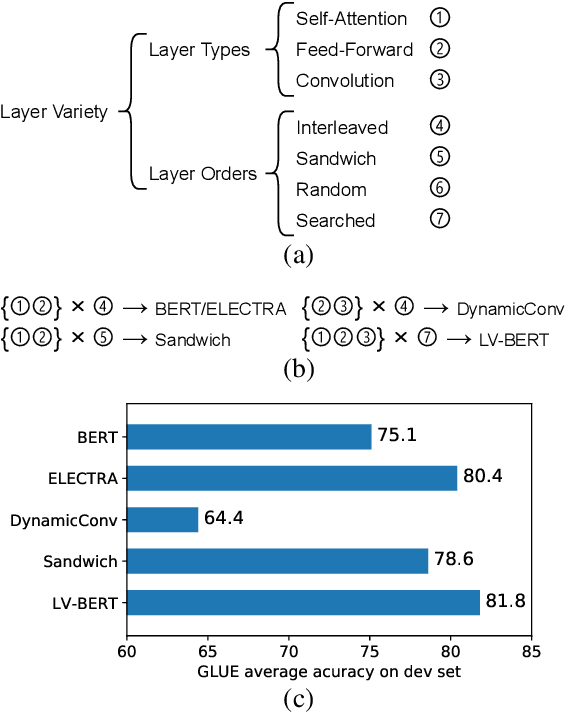

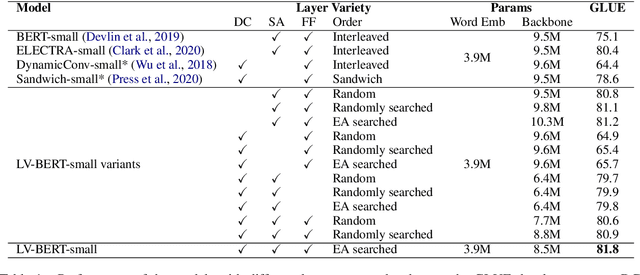

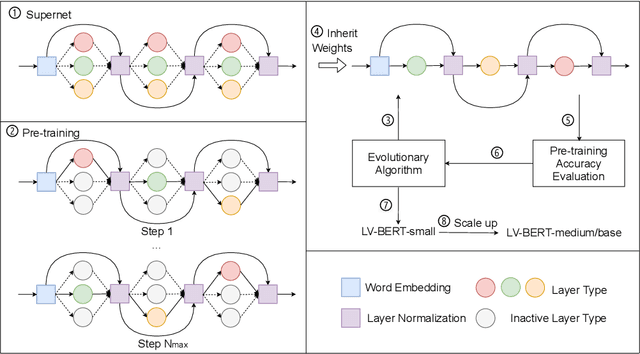

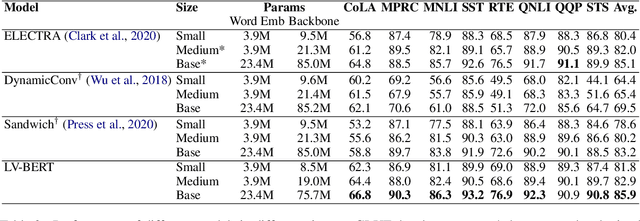

Abstract: Modern pre-trained language models are mostly built upon backbones stacking self-attention and feed-forward layers in an interleaved order. In this paper, beyond this stereotyped layer pattern, we aim to improve pre-trained models by exploiting layer variety from two aspects: the layer type set and the layer order. Specifically, besides the original self-attention and feed-forward layers, we introduce convolution into the layer type set, which is experimentally found to be beneficial to pre-trained models. Furthermore, beyond the original interleaved order, we explore more layer orders to discover more powerful architectures. However, the introduced layer variety leads to a large architecture space of more than billions of candidates, while training a single candidate model from scratch already requires a huge computation cost, making it unaffordable to search such a space by directly training large numbers of candidate models. To solve this problem, we first pre-train a supernet from which the weights of all candidate models can be inherited, and then adopt an evolutionary algorithm guided by pre-training accuracy to find the optimal architecture. Extensive experiments show that the LV-BERT model obtained by our method outperforms BERT and its variants on various downstream tasks. For example, LV-BERT-small achieves 79.8 on the GLUE testing set, 1.8 higher than the strong baseline ELECTRA-small.

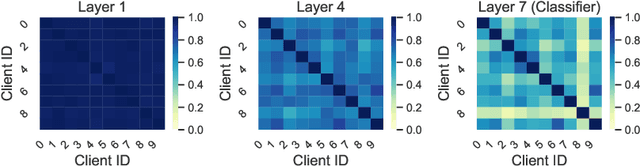

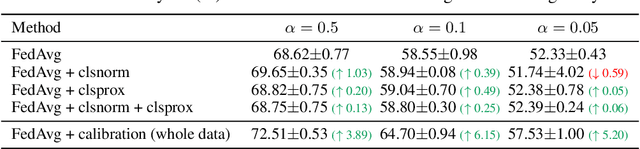

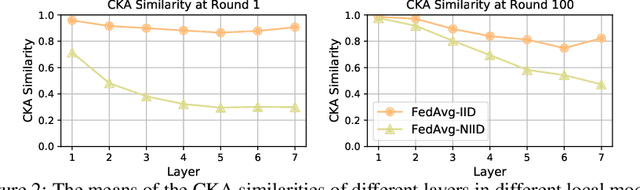

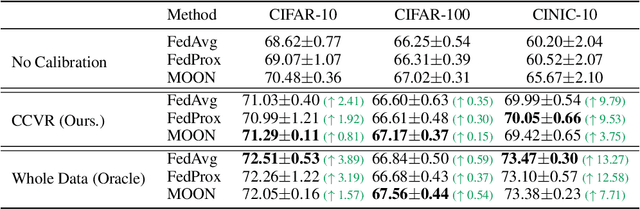

No Fear of Heterogeneity: Classifier Calibration for Federated Learning with Non-IID Data

Jun 09, 2021

Abstract: A central challenge in training classification models in real-world federated systems is learning with non-IID data. To cope with this, most existing works enforce regularization in local optimization or improve the model aggregation scheme at the server. Other works share public datasets or synthesized samples to supplement the training of under-represented classes, or introduce a certain level of personalization. Though effective, they lack a deep understanding of how data heterogeneity affects each layer of a deep classification model. In this paper, we bridge this gap by performing an experimental analysis of the representations learned by different layers. Our observations are surprising: (1) there exists a greater bias in the classifier than in other layers, and (2) the classification performance can be significantly improved by post-calibrating the classifier after federated training. Motivated by the above findings, we propose a novel and simple algorithm called Classifier Calibration with Virtual Representations (CCVR), which adjusts the classifier using virtual representations sampled from an approximated Gaussian mixture model. Experimental results demonstrate that CCVR achieves state-of-the-art performance on popular federated learning benchmarks including CIFAR-10, CIFAR-100, and CINIC-10. We hope that our simple yet effective method can shed some light on future research on federated learning with non-IID data.
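The sampling step behind "virtual representations" can be illustrated with a minimal sketch. It assumes each class is summarised by a feature mean and a per-dimension standard deviation (a diagonal Gaussian, chosen here only for brevity); the function name, the statistics, and the sample counts are hypothetical and are not taken from the paper.

```python
import random


def sample_virtual_features(class_stats, per_class, seed=0):
    """Draw labelled feature vectors from one diagonal Gaussian per class.

    class_stats maps label -> (mean, std), both lists of floats (assumed inputs).
    """
    rng = random.Random(seed)
    data = []
    for label, (mean, std) in class_stats.items():
        for _ in range(per_class):
            feature = [rng.gauss(m, s) for m, s in zip(mean, std)]
            data.append((feature, label))
    return data


# Hypothetical two-class statistics in a 3-dimensional feature space.
stats = {
    0: ([0.0, 1.0, -1.0], [0.1, 0.1, 0.1]),
    1: ([2.0, -1.0, 0.5], [0.2, 0.2, 0.2]),
}
virtual = sample_virtual_features(stats, per_class=5)
```

The resulting `(feature, label)` pairs would then serve as the training set for re-fitting only the final classifier layer; that calibration step is omitted here.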

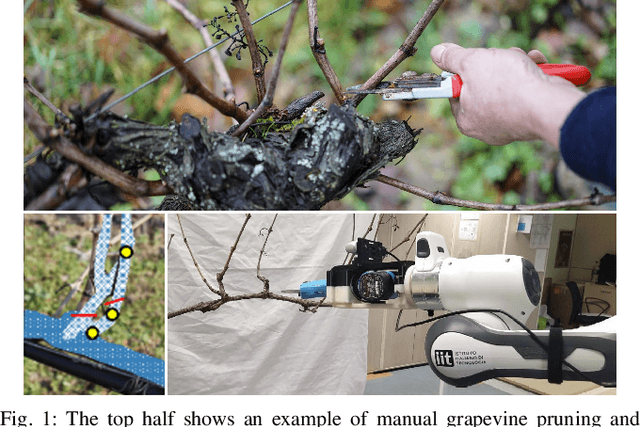

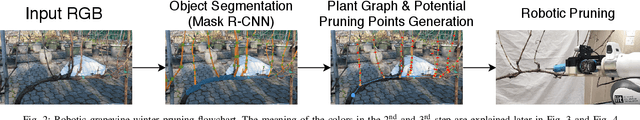

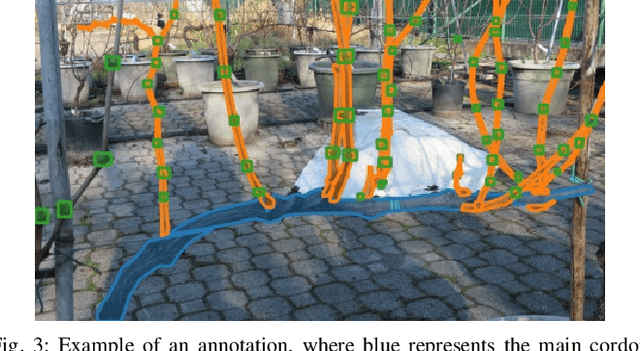

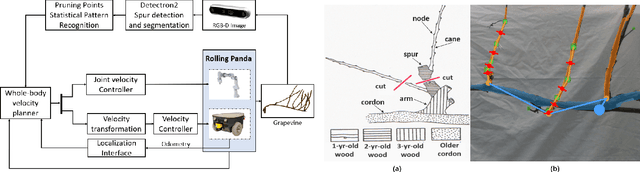

Grapevine Winter Pruning Automation: On Potential Pruning Points Detection through 2D Plant Modeling using Grapevine Segmentation

Jun 08, 2021

Abstract: Grapevine winter pruning is a complex task that requires skilled workers to execute it correctly. The complexity of this task is also the reason why it is time-consuming. Considering that this operation takes about 80-120 hours/ha to complete, and is therefore even more critical in large vineyards, an automated system can help to speed up the process. To this end, this paper presents a novel multidisciplinary approach that tackles this challenging task. First, object segmentation is performed on grapevine images and used to create a representative model of the grapevine plants. Second, a set of potential pruning points is generated from this plant representation. We describe (a) a methodology for data acquisition and annotation, (b) a neural network fine-tuning for grapevine segmentation, (c) an image-processing-based method for creating the representative model of grapevines, starting from the inferred segmentation, and (d) potential pruning point detection and localization, based on the plant model, which is a simplification of the grapevine structure. With this approach, we are able to identify a significant set of potential pruning points on the canes that can be used, with further selection, to derive the final set of real pruning points.

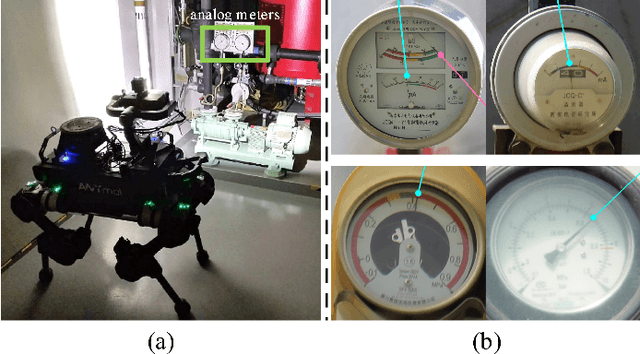

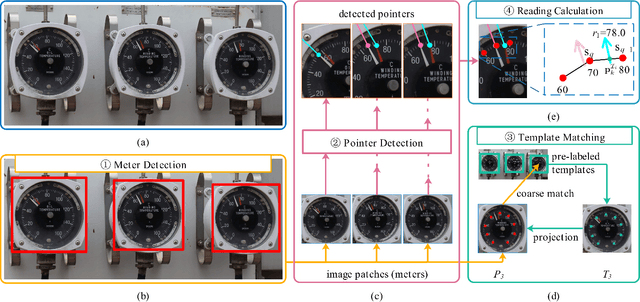

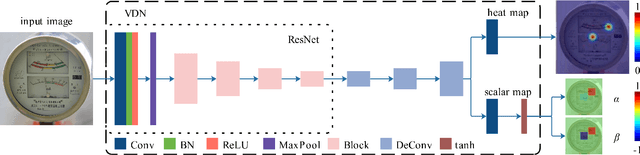

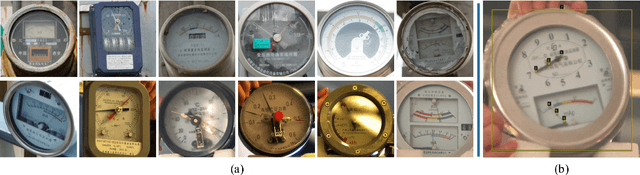

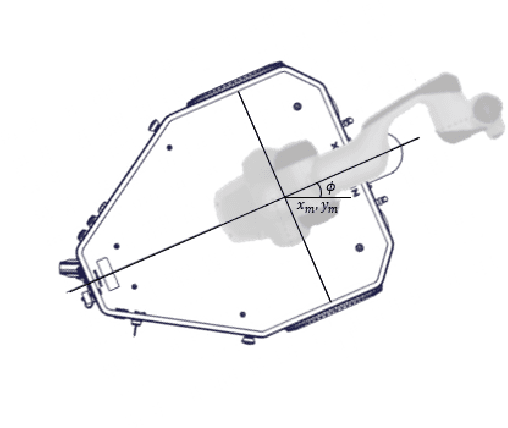

Vector Detection Network: An Application Study on Robots Reading Analog Meters in the Wild

May 30, 2021

Abstract: Analog meters equipped with one or multiple pointers are widely used to monitor the status of vital devices at industrial sites for safety reasons. Reading these legacy meters autonomously remains an open problem, since estimating pointer origin and direction under the imaging damping factors imposed in the wild can be challenging. Nevertheless, high accuracy, flexibility, and real-time performance are demanded. In this work, we propose the Vector Detection Network (VDN) to detect analog meters' pointers given their images, eliminating the barriers to autonomously reading such meters using intelligent agents like robots. We treat the pointer as a two-dimensional vector whose initial point coincides with the tip and whose direction is along tail-to-tip. The network estimates a confidence map, wherein the peak pixels are treated as the vectors' initial points, along with a two-layer scalar map, whose pixel values at each peak form the scalar components in the directions of the coordinate axes. We established the Pointer-10K dataset, composed of real-world analog meter images, to evaluate our approach because no similar dataset is currently available. Experiments on the dataset demonstrate that our method generalizes well to various meters, is robust to harsh imaging factors, and runs in real time.
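The decoding step described in this abstract (peaks of a confidence map, plus two scalar components read at each peak) can be sketched as follows. The confidence threshold, the 8-neighbour local-maximum rule, and all names are assumptions made for illustration; the abstract does not specify the actual post-processing.

```python
def decode_vectors(conf, vx, vy, threshold=0.5):
    """Return (row, col, dx, dy) for every local peak of conf above threshold.

    conf, vx, vy are equally sized 2D lists: the confidence map and the two
    layers of the scalar map (x and y components).
    """
    height, width = len(conf), len(conf[0])
    found = []
    for r in range(height):
        for c in range(width):
            value = conf[r][c]
            if value < threshold:
                continue
            # Collect the up-to-8 surrounding pixels, clipped at the borders.
            neighbours = [conf[rr][cc]
                          for rr in range(max(0, r - 1), min(height, r + 2))
                          for cc in range(max(0, c - 1), min(width, c + 2))
                          if (rr, cc) != (r, c)]
            if all(value > n for n in neighbours):
                found.append((r, c, vx[r][c], vy[r][c]))
    return found


# Hypothetical 3x3 network outputs with a single pointer.
conf = [[0.1, 0.1, 0.1],
        [0.1, 0.9, 0.1],
        [0.1, 0.1, 0.1]]
vx = [[0.0, 0.0, 0.0],
      [0.0, 0.6, 0.0],
      [0.0, 0.0, 0.0]]
vy = [[0.0, 0.0, 0.0],
      [0.0, -0.8, 0.0],
      [0.0, 0.0, 0.0]]
pointers = decode_vectors(conf, vx, vy)
```

Each returned tuple gives the initial point `(row, col)` and the direction components `(dx, dy)` of one detected pointer.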

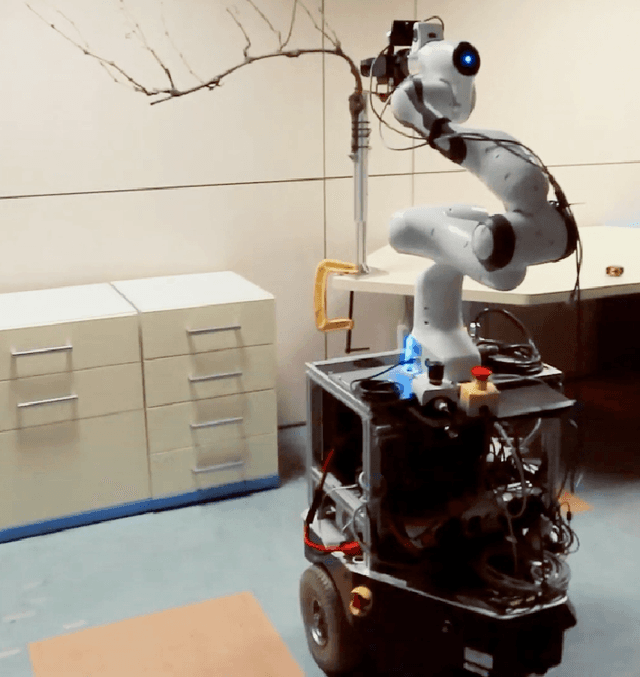

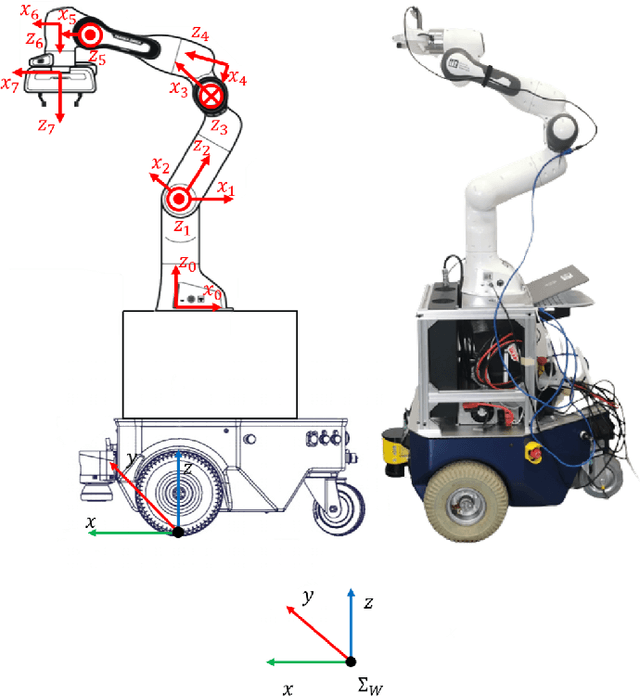

Whole-Body Control on Non-holonomic Mobile Manipulation for Grapevine Winter Pruning Automation

May 22, 2021

Abstract: Mobile manipulators, which combine mobility and manipulability, are increasingly being used in various unstructured application scenarios in the field, e.g. vineyards. The coordinated motion of the mobile base and manipulator is therefore essential to overall performance. In this paper, we explore a whole-body motion controller for a robot composed of a 2-DoF non-holonomic wheeled mobile base and a 7-DoF manipulator (non-holonomic wheeled mobile manipulator, NWMM). This robotic platform is designed to efficiently undertake complex grapevine pruning tasks. In the control framework, a task-priority coordinated motion of the NWMM is guaranteed. Lower-priority tasks are projected into the null space of the top-priority tasks so that higher-priority tasks are completed without interruption from lower-priority tasks. The proposed controller was evaluated in a grapevine spur pruning experiment scenario.
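The null-space projection mentioned in this abstract can be illustrated with a minimal sketch. It assumes a single scalar top-priority task with a one-row Jacobian `j1`, so that the pseudo-inverse reduces to `j1ᵀ / (j1 · j1)`. The function names and all numbers are hypothetical and do not come from the paper's controller.

```python
def null_space_projector(j1):
    """Return N = I - pinv(J1) J1 for a single-row Jacobian J1 (list of floats)."""
    norm_sq = sum(v * v for v in j1)
    n = len(j1)
    return [[(1.0 if r == c else 0.0) - j1[r] * j1[c] / norm_sq
             for c in range(n)] for r in range(n)]


def prioritized_velocity(j1, xdot1, qdot2):
    """Joint velocity that achieves task 1 and follows qdot2 only in its null space."""
    norm_sq = sum(v * v for v in j1)
    primary = [v * xdot1 / norm_sq for v in j1]  # pinv(J1) * xdot1
    projector = null_space_projector(j1)
    secondary = [sum(projector[r][c] * qdot2[c] for c in range(len(j1)))
                 for r in range(len(j1))]
    return [p + s for p, s in zip(primary, secondary)]


j1 = [1.0, 2.0, 0.0]                      # hypothetical top-priority task Jacobian
qdot = prioritized_velocity(j1, xdot1=0.5, qdot2=[0.3, -0.1, 0.4])
task1_rate = sum(a * b for a, b in zip(j1, qdot))
```

Because the secondary motion is projected through `N`, `task1_rate` stays at the commanded 0.5 (up to rounding) regardless of the lower-priority velocity `qdot2`.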

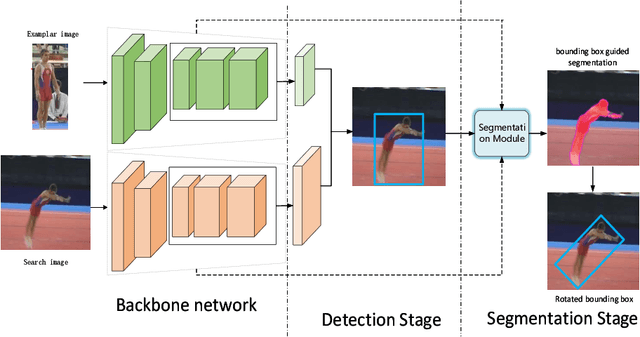

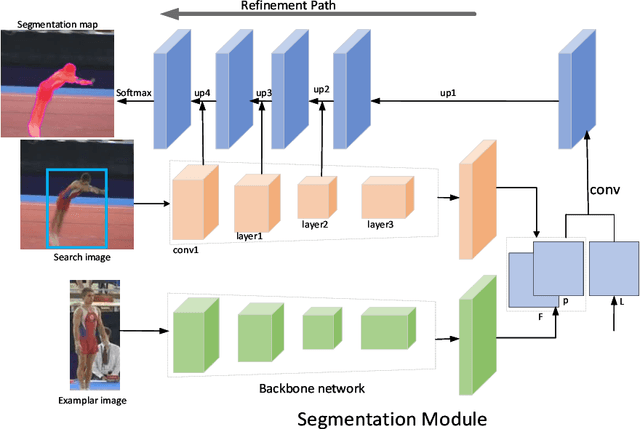

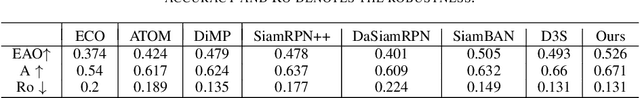

Two stages for visual object tracking

Apr 28, 2021

Abstract: Siamese-based trackers have achieved promising performance on visual object tracking tasks. Most existing Siamese-based trackers contain two separate branches for tracking: a classification branch and a bounding box regression branch. In addition, image segmentation provides an alternative way to obtain a more accurate target region. In this paper, we propose a novel two-stage tracker consisting of detection and segmentation. The detection stage locates the target using Siamese networks. The segmentation module then refines the coarse state estimate from the first stage to produce more accurate tracking results. We conduct experiments on four benchmarks. Our approach achieves state-of-the-art results, with an EAO of 52.6% on VOT2016, 51.3% on VOT2018, and 39.0% on VOT2019.

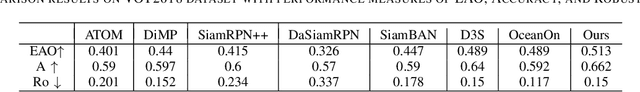

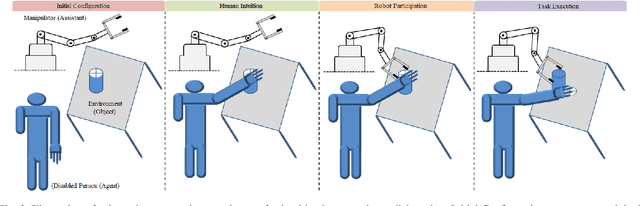

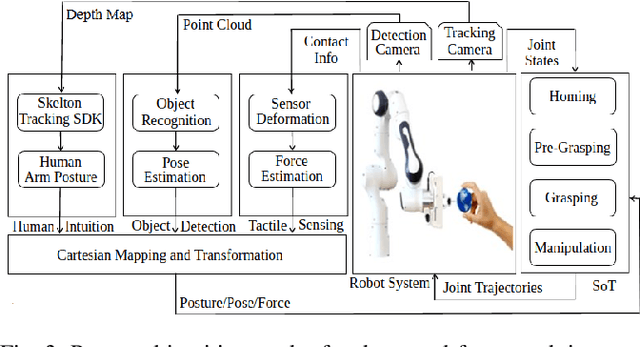

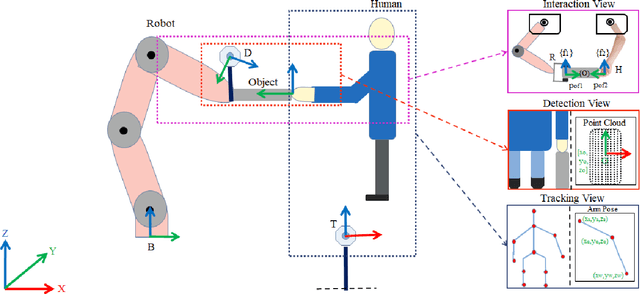

Formulating Intuitive Stack-of-Tasks with Visuo-Tactile Perception for Collaborative Human-Robot Fine Manipulation

Mar 09, 2021

Abstract: Enabling robots to work in close proximity with humans necessitates employing not only multi-sensory information for coordinated and autonomous interactions but also a control framework that ensures adaptive and flexible collaborative behavior. Such a control framework needs to integrate the accuracy and repeatability of robots with the cognitive ability and adaptability of humans for co-manipulation. In this regard, an intuitive stack of tasks (iSOT) formulation is proposed that defines the robot's actions based on human ergonomics and task progress. The framework is augmented with visuo-tactile perception for flexible interaction and autonomous adaptation. The visual information from depth cameras is used to monitor and estimate the object pose and human arm gesture, while the tactile feedback provides exploration skills for maintaining the desired contact to avoid slippage. Experiments conducted on a robot system in partnership with a human for assembly and disassembly tasks confirm the effectiveness and usability of the proposed framework.

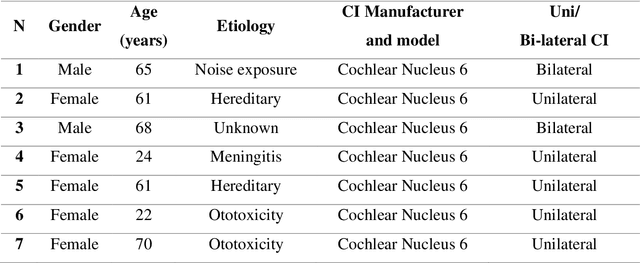

The effect of speech and noise levels on the quality perceived by cochlear implant and normal hearing listeners

Mar 03, 2021

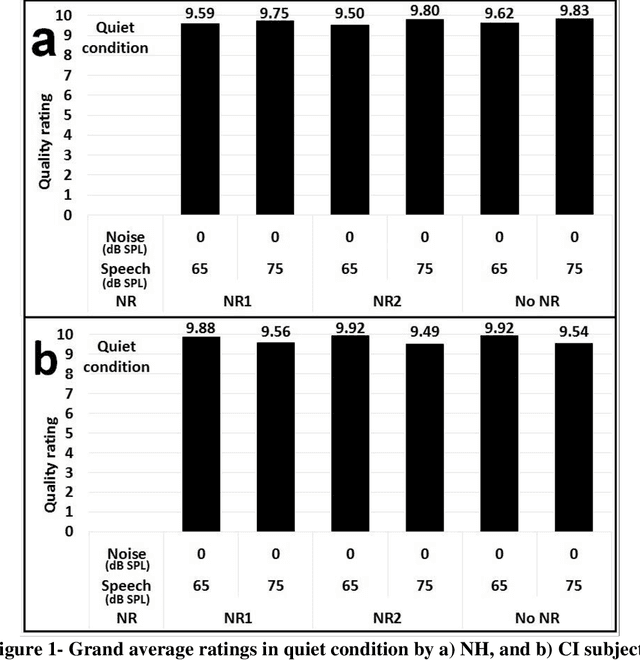

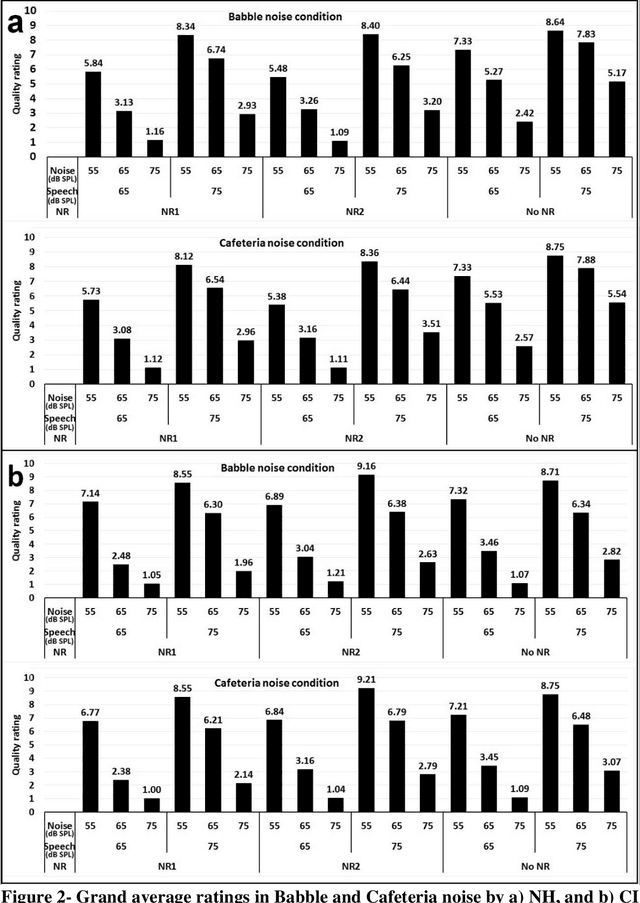

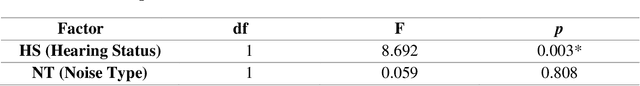

Abstract: Electrical hearing by cochlear implants (CIs) may be fundamentally different from acoustic hearing by normal-hearing (NH) listeners, presumably resulting in unequal speech quality perception in various noise environments. Noise reduction (NR) algorithms used in CIs reduce the noise in favor of signal-to-noise ratio (SNR), regardless of plausible accompanying distortions that may degrade speech quality perception. To gain a better understanding of CI speech quality perception, the present work aimed to investigate speech quality perception in diverse noise conditions, including the factors of speech/noise levels, type of noise, and distortions caused by NR models. Fifteen NH and seven CI subjects participated in this study. Speech sentences were set to two different levels (65 and 75 dB SPL). Two types of noise (Cafeteria and Babble) at three levels (55, 65, and 75 dB SPL) were used. Sentences were processed using two NR algorithms to investigate the perceptual sensitivity of CI and NH listeners to the distortion. All sentences processed with the combinations of these sets were presented to CI and NH listeners, who were asked to rate the sound quality of the speech as they perceived it. The effect of each factor on the perceived speech quality was investigated based on the group-averaged quality ratings of CI and NH listeners. Consistent with previous studies, CI listeners were not as sensitive as NH listeners to the distortion introduced by NR algorithms. Statistical analysis showed that the speech level has a significant effect on quality perception. At the same SNR, the quality of 65 dB speech was rated higher than that of 75 dB speech by CI users, but vice versa by NH listeners. Therefore, the present study showed that the perceived speech quality patterns differed between CI and NH listeners in terms of their sensitivity to distortion and speech level in complex listening environments.
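The "same SNR" comparison can be made concrete with a small worked example. It assumes SNR is taken as the speech level minus the noise level in dB, which the abstract does not state explicitly; the variable names are illustrative.

```python
from collections import defaultdict

speech_levels = [65, 75]      # dB SPL, from the abstract
noise_levels = [55, 65, 75]   # dB SPL, from the abstract

# Group every (speech, noise) pair by its SNR, assumed to be speech - noise in dB.
by_snr = defaultdict(list)
for speech in speech_levels:
    for noise in noise_levels:
        by_snr[speech - noise].append((speech, noise))

# Keep only the SNR values that occur at both speech levels.
shared = {snr: pairs for snr, pairs in by_snr.items() if len(pairs) > 1}
```

Under this assumption, only the 0 dB and 10 dB SNR conditions are available at both speech levels, so these are the conditions in which 65 dB and 75 dB speech can be compared at equal SNR.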

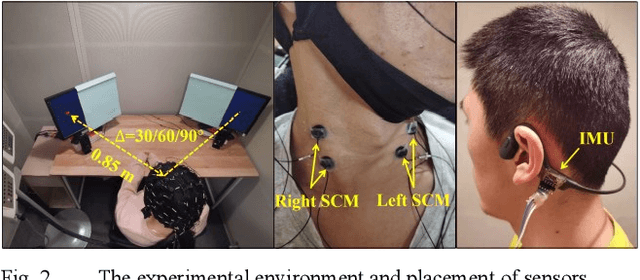

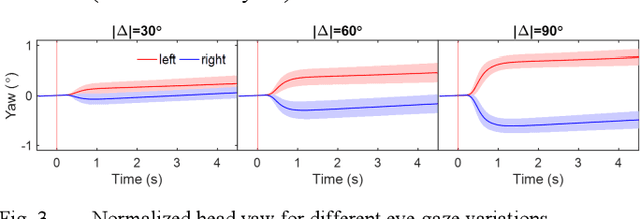

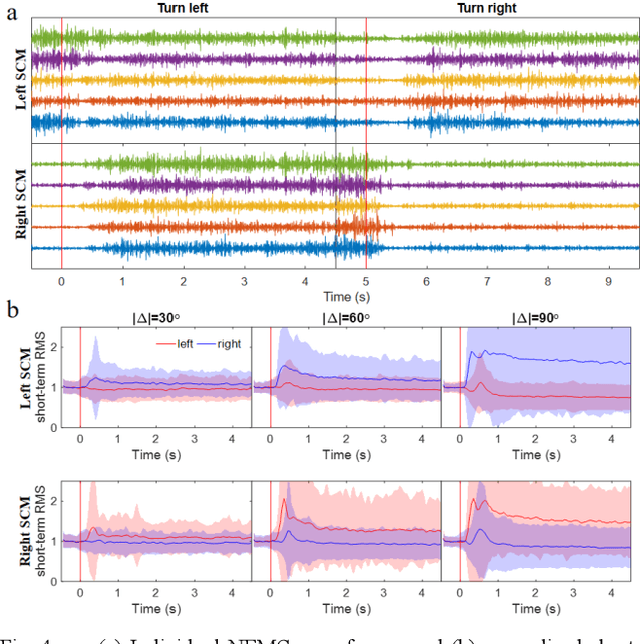

Eye-gaze Estimation with HEOG and Neck EMG using Deep Neural Networks

Mar 03, 2021

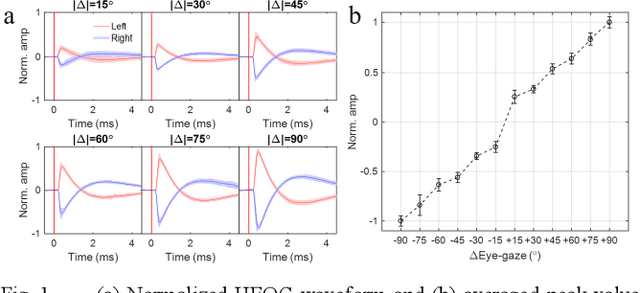

Abstract: Hearing-impaired listeners usually have trouble attending to the target talker in multi-talker scenes, even with hearing aids (HAs). The problem can be addressed with eye-gaze-steered HAs, which require listeners to direct their eye-gaze at the target. When the head rotates, eye-gaze is subject to both saccade and head rotation. However, existing methods of eye-gaze estimation did not work reliably, since the listener's eye-gaze strategy varies and measurements of the two behaviors were not properly combined. Besides, existing methods were based on hand-crafted features, which could overlook some important information. In this paper, a head-fixed and a head-free experiment were conducted. We used horizontal electrooculography (HEOG) and neck electromyography (NEMG), which separately measure saccade and head rotation, to jointly estimate eye-gaze. Besides a traditional classifier and hand-crafted features, deep neural networks (DNNs) were introduced to automatically extract features from intact waveforms. Evaluation results showed that when the input was HEOG with an inertial measurement unit, the best performance of our proposed DNN classifiers reached 93.3%; and when HEOG was combined with NEMG, the accuracy reached 72.6%, higher than that with HEOG (about 71.0%) or NEMG (about 35.7%) alone. These results indicate the feasibility of estimating eye-gaze with HEOG and NEMG.
