Decentralized, Communication- and Coordination-free Learning in Structured Matching Markets

Jun 06, 2022 · Chinmay Maheshwari, Eric Mazumdar, Shankar Sastry

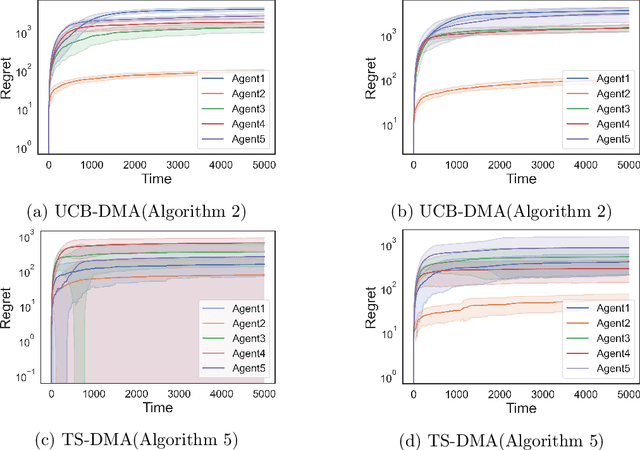

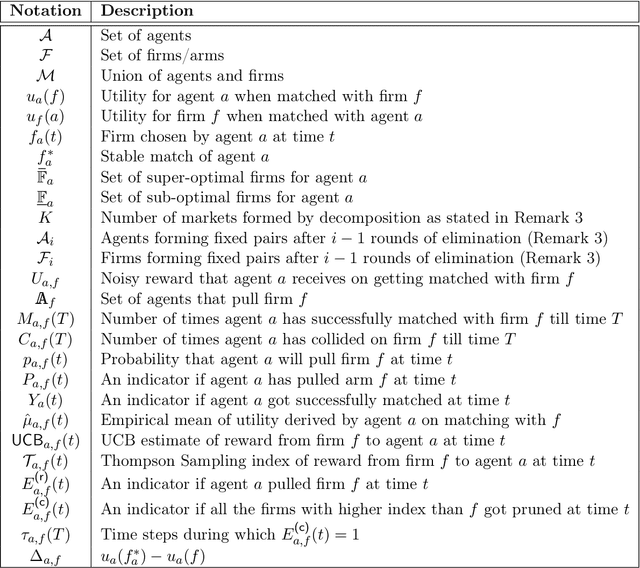

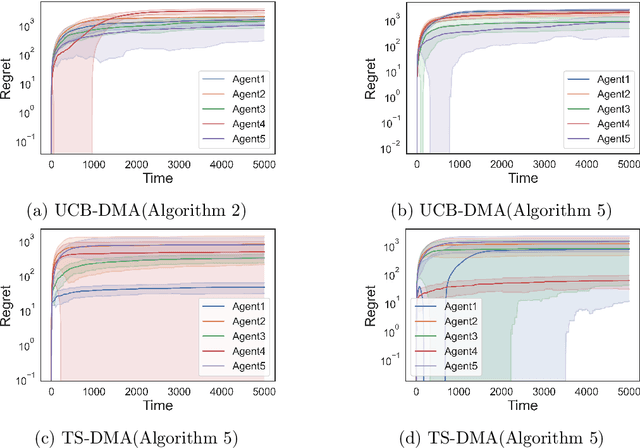

We study the problem of online learning in competitive settings in the context of two-sided matching markets. In particular, one side of the market, the agents, must learn about their preferences over the other side, the firms, through repeated interaction while competing with other agents for successful matches. We propose a class of decentralized, communication- and coordination-free algorithms that agents can use to reach their stable match in structured matching markets. In contrast to prior works, the proposed algorithms make decisions based solely on an agent's own history of play and require no foreknowledge of the firms' preferences. Our algorithms are constructed by separating the statistical problem of learning one's preferences from noisy observations and the problem of competing for firms. We show that under realistic structural assumptions on the underlying preferences of the agents and firms, the proposed algorithms incur a regret which grows at most logarithmically in the time horizon. Our results show that, in the case of matching markets, competition need not drastically affect the performance of decentralized, communication- and coordination-free online learning algorithms.

Who Leads and Who Follows in Strategic Classification?

Jun 23, 2021 · Tijana Zrnic, Eric Mazumdar, S. Shankar Sastry, Michael I. Jordan

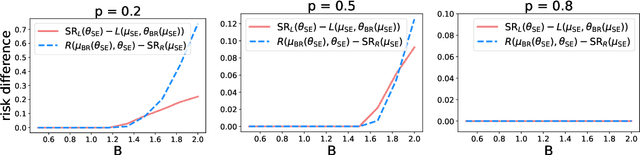

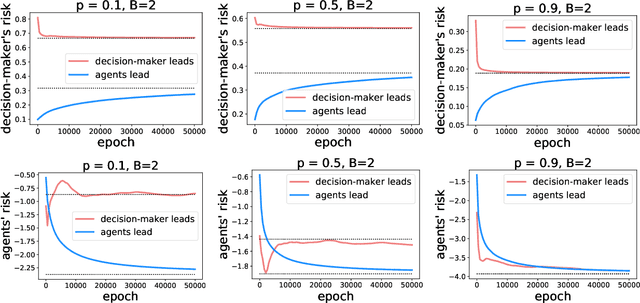

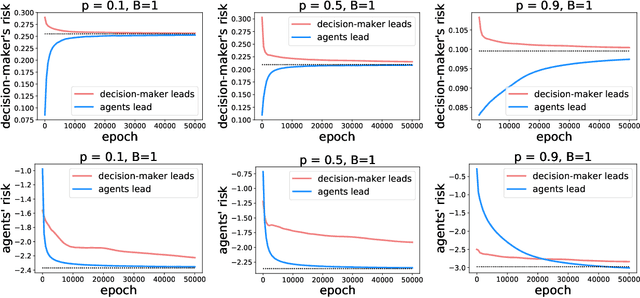

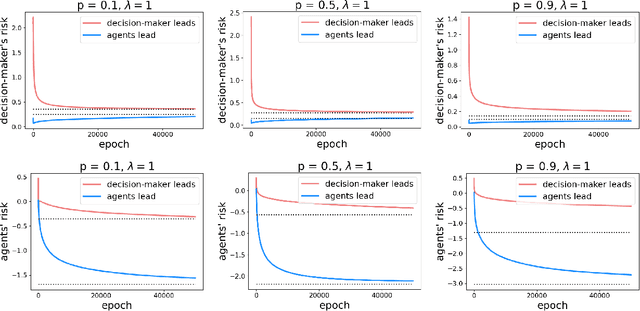

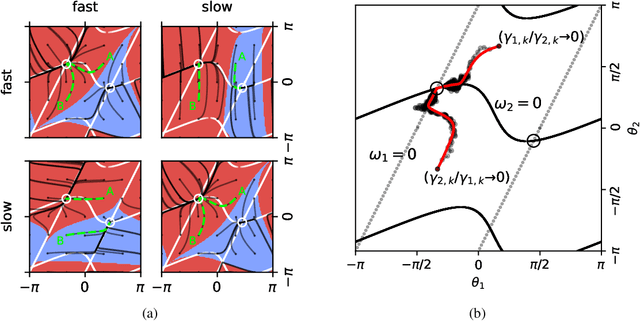

As predictive models are deployed into the real world, they must increasingly contend with strategic behavior. A growing body of work on strategic classification treats this problem as a Stackelberg game: the decision-maker "leads" in the game by deploying a model, and the strategic agents "follow" by playing their best response to the deployed model. Importantly, in this framing, the burden of learning is placed solely on the decision-maker, while the agents' best responses are implicitly treated as instantaneous. In this work, we argue that the order of play in strategic classification is fundamentally determined by the relative frequencies at which the decision-maker and the agents adapt to each other's actions. In particular, by generalizing the standard model to allow both players to learn over time, we show that a decision-maker that makes updates faster than the agents can reverse the order of play, meaning that the agents lead and the decision-maker follows. We observe in standard learning settings that such a role reversal can be desirable for both the decision-maker and the strategic agents. Finally, we show that a decision-maker with the freedom to choose their update frequency can induce learning dynamics that converge to Stackelberg equilibria with either order of play.

Zeroth-Order Methods for Convex-Concave Minmax Problems: Applications to Decision-Dependent Risk Minimization

Jun 16, 2021 · Chinmay Maheshwari, Chih-Yuan Chiu, Eric Mazumdar, S. Shankar Sastry, Lillian J. Ratliff

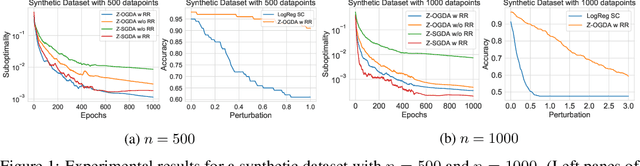

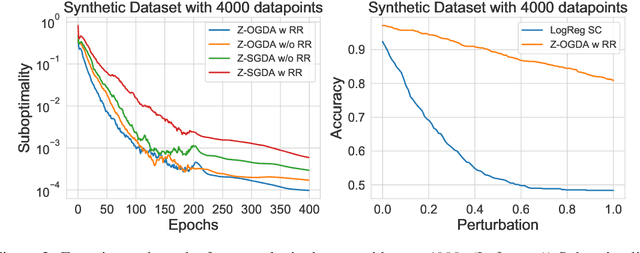

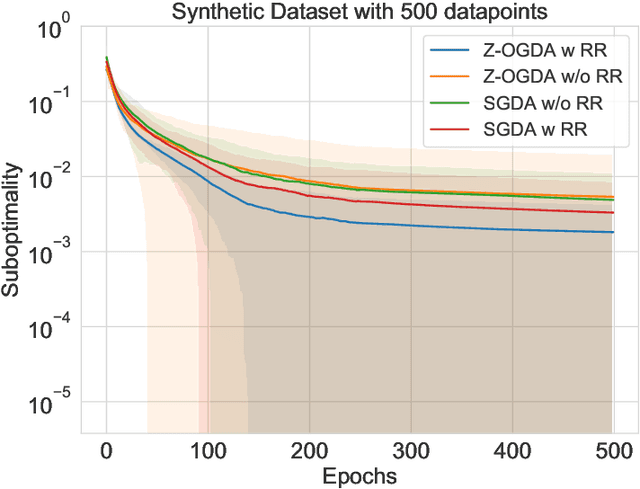

Min-max optimization is emerging as a key framework for analyzing problems of robustness to strategically and adversarially generated data. We propose a random reshuffling-based, gradient-free Optimistic Gradient Descent-Ascent algorithm for solving convex-concave min-max problems with finite-sum structure. We prove that the algorithm enjoys the same convergence rate as that of zeroth-order algorithms for convex minimization problems. We further specialize the algorithm to solve distributionally robust, decision-dependent learning problems, where gradient information is not readily available. Through illustrative simulations, we observe that our proposed approach learns models that are simultaneously robust against adversarial distribution shifts and strategic decisions from the data sources, and outperforms existing methods from the strategic classification literature.
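As a rough illustration of the ingredients named in this abstract, the sketch below runs an optimistic descent-ascent update with random reshuffling on a toy finite-sum saddle problem, using only function evaluations. Everything concrete here is an assumption made for illustration and not taken from the paper: the quadratic components, the coordinate-wise central-difference gradient estimate (the paper's estimator may differ), the step size, and the helper names `central_diff`, `make_component`, and `zo_ogda`.

```python
import random

def central_diff(f, x, y, delta=1e-4):
    """Gradient-free estimate of (df/dx, df/dy) from four function values."""
    gx = (f(x + delta, y) - f(x - delta, y)) / (2 * delta)
    gy = (f(x, y + delta) - f(x, y - delta)) / (2 * delta)
    return gx, gy

def make_component(b):
    # Toy component (assumption): convex in x, concave in y, saddle at (0, 0).
    return lambda x, y: 0.5 * x * x + b * x * y - 0.5 * y * y

def zo_ogda(components, x, y, step=0.05, epochs=200, seed=0):
    """Zeroth-order optimistic gradient descent-ascent with random reshuffling."""
    rng = random.Random(seed)
    prev_gx, prev_gy = 0.0, 0.0
    order = list(range(len(components)))
    for _ in range(epochs):
        rng.shuffle(order)  # random reshuffling: each component once per epoch
        for i in order:
            gx, gy = central_diff(components[i], x, y)
            # Optimistic step: 2 * current estimate - previous estimate.
            x -= step * (2 * gx - prev_gx)  # descent in x
            y += step * (2 * gy - prev_gy)  # ascent in y
            prev_gx, prev_gy = gx, gy
    return x, y

components = [make_component(b) for b in (0.5, 1.0, 1.5)]
x_final, y_final = zo_ogda(components, x=1.0, y=-1.0)
print(x_final, y_final)
```

On this toy problem every component shares the saddle point at the origin, so the iterates approach (0, 0); real decision-dependent problems would not be this benign.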

Fast Distributionally Robust Learning with Variance Reduced Min-Max Optimization

Apr 27, 2021 · Yaodong Yu, Tianyi Lin, Eric Mazumdar, Michael I. Jordan

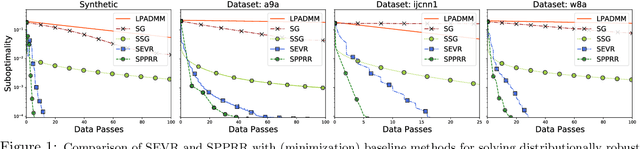

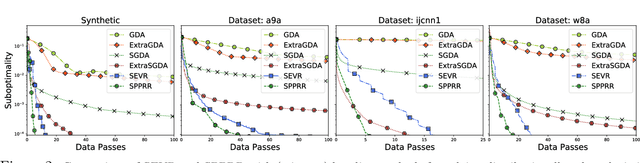

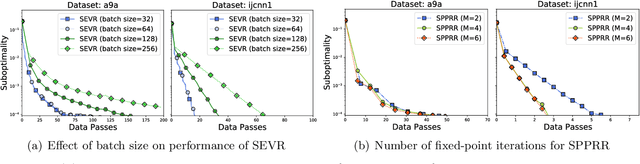

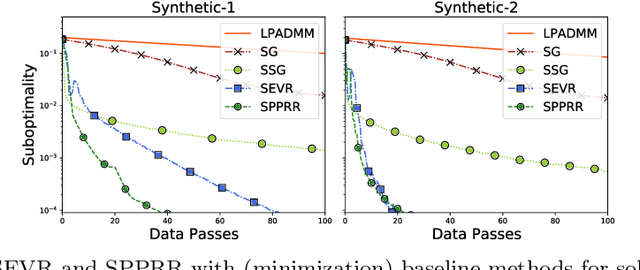

Distributionally robust supervised learning (DRSL) is emerging as a key paradigm for building reliable machine learning systems for real-world applications -- reflecting the need for classifiers and predictive models that are robust to the distribution shifts that arise from phenomena such as selection bias or nonstationarity. Existing algorithms for solving Wasserstein DRSL -- one of the most popular DRSL frameworks based around robustness to perturbations in the Wasserstein distance -- involve solving complex subproblems or fail to make use of stochastic gradients, limiting their use in large-scale machine learning problems. We revisit Wasserstein DRSL through the lens of min-max optimization and derive scalable and efficiently implementable stochastic extra-gradient algorithms which provably achieve faster convergence rates than existing approaches. We demonstrate their effectiveness on synthetic and real data when compared to existing DRSL approaches. Key to our results is the use of variance reduction and random reshuffling to accelerate stochastic min-max optimization, the analysis of which may be of independent interest.

Expert Selection in High-Dimensional Markov Decision Processes

Oct 26, 2020 · Vicenc Rubies-Royo, Eric Mazumdar, Roy Dong, Claire Tomlin, S. Shankar Sastry

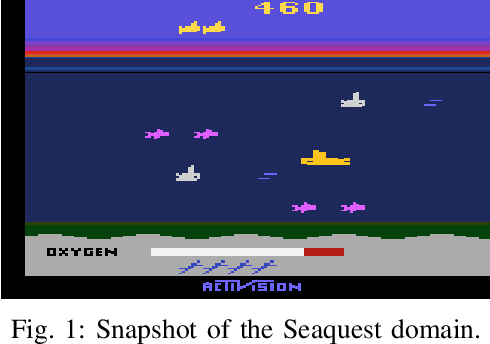

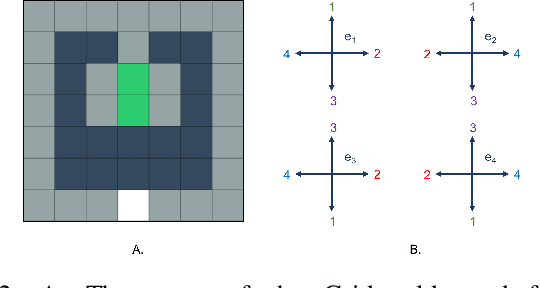

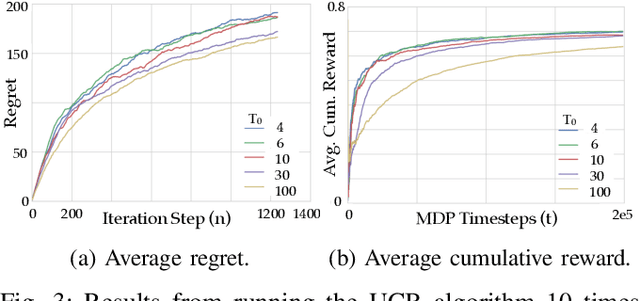

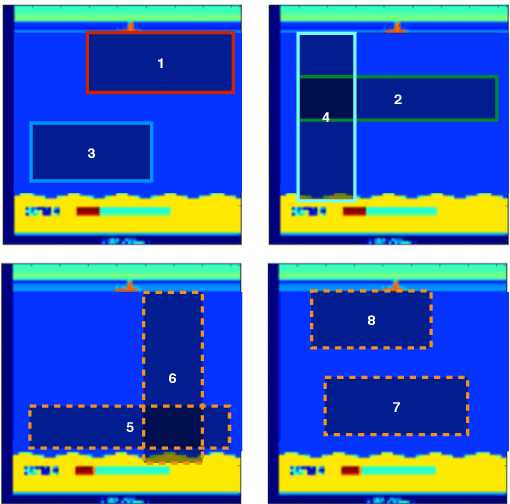

In this work we present a multi-armed bandit framework for online expert selection in Markov decision processes and demonstrate its use in high-dimensional settings. Our method takes a set of candidate expert policies and switches between them to rapidly identify the best performing expert using a variant of the classical upper confidence bound algorithm, thus ensuring low regret in the overall performance of the system. This is useful in applications where several expert policies may be available, and one needs to be selected at run-time for the underlying environment.
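The selection loop described above can be sketched with the textbook UCB1 index. This is only a minimal illustration under stated assumptions: the paper uses a variant of the upper confidence bound algorithm whose details are not given here, and the Bernoulli "experts" below are hypothetical stand-ins for rolling out a policy and observing its episode return. The names `ucb_expert_selection` and `make_expert` are likewise invented for this example.

```python
import math
import random

def ucb_expert_selection(experts, rounds, seed=0):
    """Pick one expert per episode with UCB1; return pull counts and mean returns."""
    rng = random.Random(seed)
    counts = [0] * len(experts)
    means = [0.0] * len(experts)
    for t in range(1, rounds + 1):
        if t <= len(experts):
            k = t - 1  # try every expert once before using the index
        else:
            k = max(
                range(len(experts)),
                key=lambda i: means[i] + math.sqrt(2 * math.log(t) / counts[i]),
            )
        ret = experts[k](rng)  # run expert k for one episode
        counts[k] += 1
        means[k] += (ret - means[k]) / counts[k]  # incremental average
    return counts, means

def make_expert(p):
    # Hypothetical stand-in for a policy rollout: Bernoulli episode return.
    return lambda rng: 1.0 if rng.random() < p else 0.0

experts = [make_expert(p) for p in (0.2, 0.5, 0.8)]
counts, means = ucb_expert_selection(experts, rounds=5000)
print(counts)
```

The exploration bonus shrinks as an expert is tried more often, so most episodes end up assigned to the expert with the highest average return.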

Technical Report: Adaptive Control for Linearizable Systems Using On-Policy Reinforcement Learning

Apr 06, 2020 · Tyler Westenbroek, Eric Mazumdar, David Fridovich-Keil, Valmik Prabhu, Claire J. Tomlin, S. Shankar Sastry

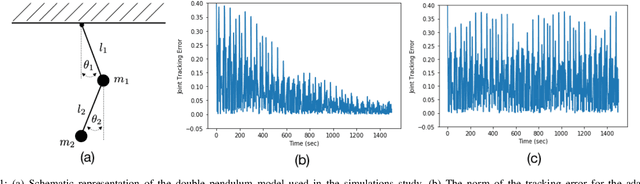

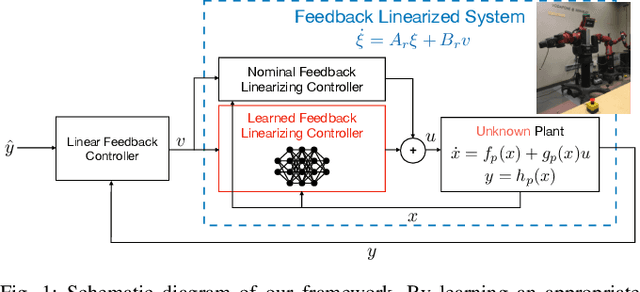

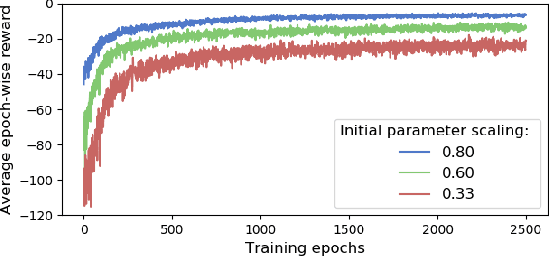

This paper proposes a framework for adaptively learning a feedback linearization-based tracking controller for an unknown system using discrete-time model-free policy-gradient parameter update rules. The primary advantage of the scheme over standard model-reference adaptive control techniques is that it does not require the learned inverse model to be invertible at all instants of time. This enables the use of general function approximators to approximate the linearizing controller for the system without having to worry about singularities. However, the discrete-time and stochastic nature of these algorithms precludes the direct application of standard machinery from the adaptive control literature to provide deterministic stability proofs for the system. Nevertheless, we leverage these techniques alongside tools from the stochastic approximation literature to demonstrate that with high probability the tracking and parameter errors concentrate near zero when a certain persistence of excitation condition is satisfied. A simulated example of a double pendulum demonstrates the utility of the proposed theory.

On Thompson Sampling with Langevin Algorithms

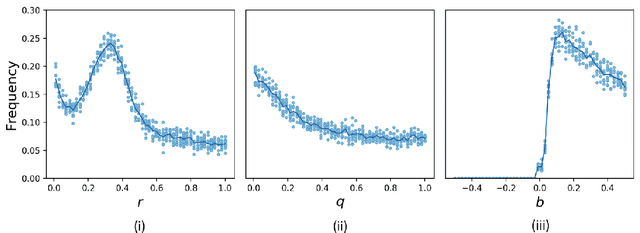

Feb 23, 2020 · Eric Mazumdar, Aldo Pacchiano, Yi-an Ma, Peter L. Bartlett, Michael I. Jordan

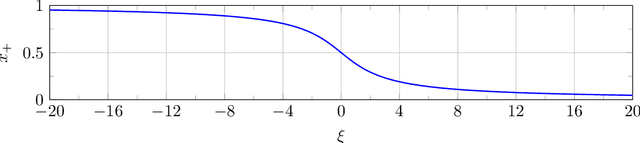

Thompson sampling is a methodology for multi-armed bandit problems that is known to enjoy favorable performance in both theory and practice. It does, however, have a significant limitation computationally, arising from the need for samples from posterior distributions at every iteration. We propose two Markov Chain Monte Carlo (MCMC) methods tailored to Thompson sampling to address this issue. We construct quickly converging Langevin algorithms to generate approximate samples that have accuracy guarantees, and we leverage novel posterior concentration rates to analyze the regret of the resulting approximate Thompson sampling algorithm. Further, we specify the necessary hyper-parameters for the MCMC procedure to guarantee optimal instance-dependent frequentist regret while having low computational complexity. In particular, our algorithms take advantage of both posterior concentration and a sample reuse mechanism to ensure that only a constant number of iterations and a constant amount of data is needed in each round. The resulting approximate Thompson sampling algorithm has logarithmic regret and its computational complexity does not scale with the time horizon of the algorithm.
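To make the idea of replacing exact posterior draws with a few Langevin steps concrete, here is a minimal sketch for a Gaussian bandit. It is not the paper's algorithm: the N(0, 1) prior, unit-variance rewards, exact (rather than stochastic) gradients computed from running sums, the step-size rule, and the function names are all assumptions chosen to keep the example short. The only feature borrowed from the abstract is warm-starting each chain from the previous round's sample so that a constant number of steps per round suffices.

```python
import math
import random

def langevin_sample(theta, total, n, step_scale=0.5, n_steps=20, rng=random):
    """Unadjusted Langevin steps targeting the posterior of one arm's mean.

    Model (an assumption): N(0, 1) prior and unit-variance Gaussian rewards,
    so grad log posterior(theta) = -theta + (total - n * theta).
    """
    precision = 1.0 + n
    h = step_scale / precision  # step size shrinks as data accumulates
    for _ in range(n_steps):
        grad = -theta + (total - n * theta)
        theta += h * grad + math.sqrt(2 * h) * rng.gauss(0.0, 1.0)
    return theta

def approximate_thompson(true_means, rounds, seed=0):
    """Thompson sampling with Langevin draws in place of exact posterior samples."""
    rng = random.Random(seed)
    k = len(true_means)
    totals, counts, thetas = [0.0] * k, [0] * k, [0.0] * k
    for _ in range(rounds):
        # Warm-start each chain from the previous round's sample.
        thetas = [
            langevin_sample(thetas[i], totals[i], counts[i], rng=rng)
            for i in range(k)
        ]
        arm = max(range(k), key=lambda i: thetas[i])
        reward = rng.gauss(true_means[arm], 1.0)
        totals[arm] += reward
        counts[arm] += 1
    return counts

counts = approximate_thompson([0.0, 0.5, 1.0], rounds=2000)
print(counts)
```

Because the per-round work is a fixed number of Langevin steps on running sums, the cost of each round in this sketch does not grow with the number of rounds played.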

Feedback Linearization for Unknown Systems via Reinforcement Learning

Oct 29, 2019 · Tyler Westenbroek, David Fridovich-Keil, Eric Mazumdar, Shreyas Arora, Valmik Prabhu, S. Shankar Sastry, Claire J. Tomlin

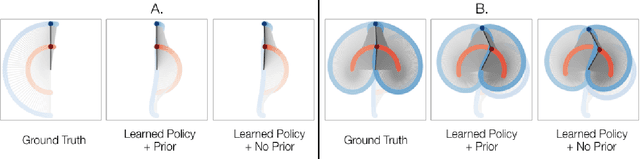

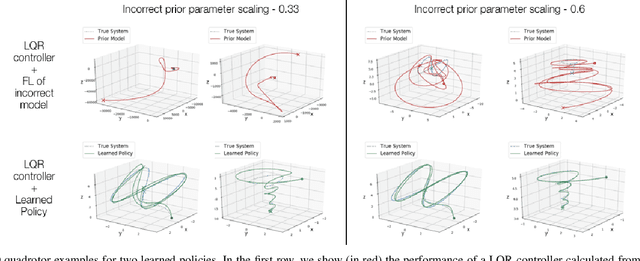

We present a novel approach to control design for nonlinear systems, which leverages reinforcement learning techniques to learn a linearizing controller for a physical plant with unknown dynamics. Feedback linearization is a technique from nonlinear control which renders the input-output dynamics of a nonlinear plant *linear* under application of an appropriate feedback controller. Once a linearizing controller has been constructed, desired output trajectories for the nonlinear plant can be tracked using a variety of linear control techniques. A single learned policy then serves to track arbitrary desired reference signals provided by a higher-level planner. We present theoretical results which provide conditions under which the learning problem has a unique solution which exactly linearizes the plant. We demonstrate the performance of our approach on two simulated problems and a physical robotic platform. For the simulated environments, we observe that the learned feedback linearizing policies can achieve arbitrary tracking of reference trajectories for a fully actuated double pendulum and a 14-dimensional quadrotor. In hardware, we demonstrate that our approach significantly improves tracking performance on a 7-DOF Baxter robot after less than two hours of training.

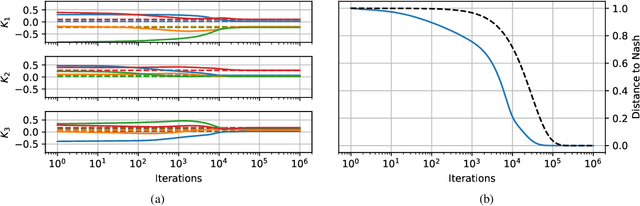

Policy-Gradient Algorithms Have No Guarantees of Convergence in Continuous Action and State Multi-Agent Settings

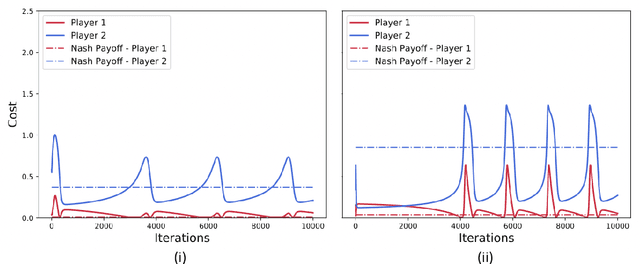

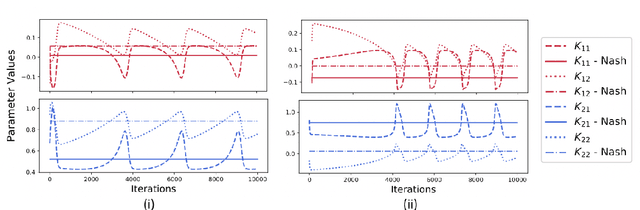

Jul 08, 2019 · Eric Mazumdar, Lillian J. Ratliff, Michael I. Jordan, S. Shankar Sastry

We show by counterexample that policy-gradient algorithms have no guarantees of even local convergence to Nash equilibria in continuous action and state space multi-agent settings. To do so, we analyze gradient-play in $N$-player general-sum linear quadratic games. In such games the state and action spaces are continuous and the unique global Nash equilibrium can be found by solving coupled Riccati equations. Further, gradient-play in LQ games is equivalent to multi-agent policy gradient. We first prove that the only critical point of the gradient dynamics in these games is the unique global Nash equilibrium. We then give sufficient conditions under which policy gradient will avoid the Nash equilibrium, and generate a large number of general-sum linear quadratic games that satisfy these conditions. The existence of such games indicates that one of the most popular approaches to solving reinforcement learning problems in the classic reinforcement learning setting has no guarantee of convergence in multi-agent settings. Further, the ease with which we can generate these counterexamples suggests that such situations are not mere edge cases and are in fact quite common.

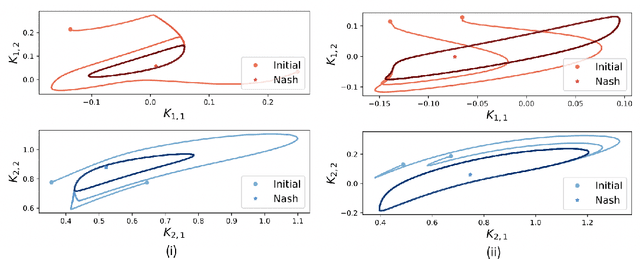

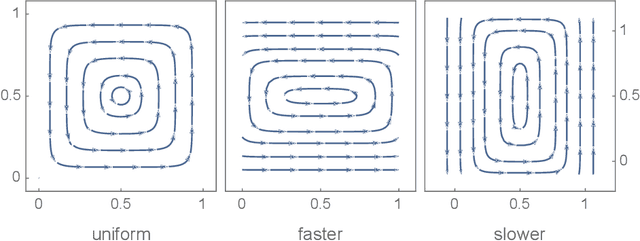

Convergence Analysis of Gradient-Based Learning with Non-Uniform Learning Rates in Non-Cooperative Multi-Agent Settings

May 30, 2019 · Benjamin Chasnov, Lillian J. Ratliff, Eric Mazumdar, Samuel A. Burden

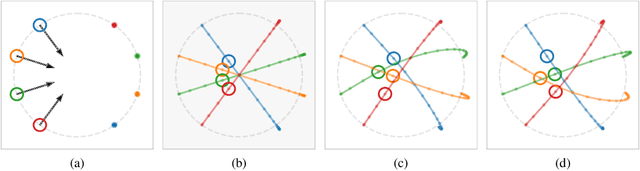

Considering a class of gradient-based multi-agent learning algorithms in non-cooperative settings, we provide local convergence guarantees to a neighborhood of a stable local Nash equilibrium. In particular, we consider continuous games where agents learn in (i) deterministic settings with oracle access to their gradient and (ii) stochastic settings with an unbiased estimator of their gradient. Utilizing the minimum and maximum singular values of the game Jacobian, we provide finite-time convergence guarantees in the deterministic case. On the other hand, in the stochastic case, we provide concentration bounds guaranteeing that with high probability agents will converge to a neighborhood of a stable local Nash equilibrium in finite time. Unlike other works in this vein, we also study the effects of non-uniform learning rates on the learning dynamics and convergence rates. We find that, much like preconditioning in optimization, non-uniform learning rates cause a distortion in the vector field which can, in turn, change the rate of convergence and the shape of the region of attraction. The analysis is supported by numerical examples that illustrate different aspects of the theory. We conclude with a discussion of the results and open questions.
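The effect of non-uniform learning rates on convergence speed can be seen in a small deterministic example. The two-player quadratic game, the starting point, and the learning rates below are assumptions chosen for illustration and do not come from the paper; the function name `gradient_play` is also hypothetical. Each player follows the gradient of its own cost with its own rate, and the sketch counts the steps needed to get within a tolerance of the Nash equilibrium at the origin.

```python
def gradient_play(rates, z0=(1.0, 1.0), tol=1e-6, max_steps=10_000):
    """Simultaneous gradient play; return the number of steps to reach tol."""
    x, y = z0
    g1, g2 = rates
    for step in range(max_steps):
        if (x * x + y * y) ** 0.5 < tol:
            return step
        # Toy game (assumption): player 1 minimizes f1 = x^2 + x*y over x,
        # player 2 minimizes f2 = y^2 - x*y over y.
        dx = 2 * x + y  # d f1 / d x
        dy = 2 * y - x  # d f2 / d y
        x, y = x - g1 * dx, y - g2 * dy
    return max_steps

steps_uniform = gradient_play((0.1, 0.1))
steps_nonuniform = gradient_play((0.1, 0.4))
print(steps_uniform, steps_nonuniform)
```

In this particular game the non-uniform pair reaches the tolerance in fewer steps than the uniform pair, but that is a property of the chosen rates and game; other rate choices can slow convergence or destabilize the dynamics.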
