Ekram Hossain

Cross-reality Location Privacy Protection in 6G-enabled Vehicular Metaverses: An LLM-enhanced Hybrid Generative Diffusion Model-based Approach

Jan 18, 2026

Abstract:The emergence of 6G-enabled vehicular metaverses enables Autonomous Vehicles (AVs) to operate across physical and virtual spaces through space-air-ground-sea integrated networks. The AVs can deploy AI agents powered by large AI models as personalized assistants on edge servers to support intelligent driving decision making and enhanced on-board experiences. However, such cross-reality interactions may cause serious location privacy risks, as adversaries can infer AV trajectories by correlating the location reported when AVs request LBS in reality with the location of the edge servers on which their corresponding AI agents are deployed in virtuality. To address this challenge, we design a cross-reality location privacy protection framework based on hybrid actions, including continuous location perturbation in reality and discrete privacy-aware AI agent migration in virtuality. In this framework, a new privacy metric, termed cross-reality location entropy, is proposed to effectively quantify the privacy levels of AVs. Based on this metric, we formulate an optimization problem to optimize the hybrid action, focusing on achieving a balance between location protection, service latency reduction, and quality of service maintenance. To solve the complex mixed-integer problem, we develop a novel LLM-enhanced Hybrid Diffusion Proximal Policy Optimization (LHDPPO) algorithm, which integrates LLM-driven informative reward design to enhance environment understanding with double Generative Diffusion Models-based policy exploration to handle high-dimensional action spaces, thereby enabling reliable determination of optimal hybrid actions. Extensive experiments on real-world datasets demonstrate that the proposed framework effectively mitigates cross-reality location privacy leakage for AVs while maintaining strong user immersion within 6G-enabled vehicular metaverse scenarios.

Generalization Analysis and Method for Domain Generalization for a Family of Recurrent Neural Networks

Jan 13, 2026

Abstract:Deep learning (DL) has driven broad advances across scientific and engineering domains. Despite its success, DL models often exhibit limited interpretability and generalization, which can undermine trust, especially in safety-critical deployments. As a result, there is growing interest in (i) analyzing interpretability and generalization and (ii) developing models that perform robustly under data distributions different from those seen during training (i.e., domain generalization). However, the theoretical analysis of DL remains incomplete. For example, many generalization analyses assume independent samples, an assumption that is violated in sequential data with temporal correlations. Motivated by these limitations, this paper proposes a method to analyze interpretability and out-of-domain (OOD) generalization for a family of recurrent neural networks (RNNs). Specifically, the evolution of a trained RNN's states is modeled as an unknown, discrete-time, nonlinear closed-loop feedback system. Using Koopman operator theory, these nonlinear dynamics are approximated with a linear operator, enabling interpretability. Spectral analysis is then used to quantify the worst-case impact of domain shifts on the generalization error. Building on this analysis, a domain generalization method is proposed that reduces the OOD generalization error and improves the robustness to distribution shifts. Finally, the proposed analysis and domain generalization approach are validated on practical temporal pattern-learning tasks.

Beamforming Control in RIS-Aided Wireless Communications: A Predictive Physics-Based Approach

Aug 23, 2025

Abstract:Integrating reconfigurable intelligent surfaces (RIS) into wireless communication systems is a promising approach for enhancing coverage and data rates by intelligently redirecting signals, through a process known as beamforming. However, the process of RIS beamforming (or passive beamforming) control is associated with multiple latency-inducing factors. As a result, by the time the beamforming is effectively updated, the channel conditions may have already changed. For example, the low update rate of localization systems becomes a critical limitation, as a mobile UE's position may change significantly between two consecutive measurements. To address this issue, this work proposes a practical and scalable physics-based solution that is effective across a wide range of UE movement models. Specifically, we propose a kinematic observer and predictor to enable proactive RIS control. From low-rate position estimates provided by a localizer, the kinematic observer infers the UE's speed and acceleration. These motion parameters are then used by a predictor to estimate the UE's future positions at a higher rate, allowing the RIS to adjust promptly and compensate for inherent delays in both the RIS control and localization systems. Numerical results validate the effectiveness of the proposed approach, demonstrating real-time RIS adjustments with low computational complexity, even in scenarios involving rapid UE movement.
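The observer-then-predictor idea described in this abstract can be illustrated with a minimal sketch. It is not the authors' observer: it assumes 2-D positions, evenly spaced localizer samples, and plain finite differences, and the names `estimate_motion` and `predict_positions` are hypothetical.

```python
from typing import List, Tuple

Vec = Tuple[float, float]  # assumed 2-D position (x, y) in metres


def estimate_motion(p0: Vec, p1: Vec, p2: Vec, dt: float) -> Tuple[Vec, Vec]:
    """Estimate velocity and acceleration at the newest sample p2.

    p0, p1, p2 are three consecutive low-rate position estimates spaced
    dt seconds apart. Backward finite differences are used, which are
    exact when the true motion has constant acceleration.
    """
    acc = tuple((c2 - 2.0 * c1 + c0) / dt**2 for c0, c1, c2 in zip(p0, p1, p2))
    vel = tuple((3.0 * c2 - 4.0 * c1 + c0) / (2.0 * dt)
                for c0, c1, c2 in zip(p0, p1, p2))
    return vel, acc


def predict_positions(p: Vec, vel: Vec, acc: Vec,
                      horizon: float, steps: int) -> List[Vec]:
    """Predict `steps` future positions evenly spread over `horizon` seconds.

    Uses the constant-acceleration model p + v*t + 0.5*a*t^2, so the
    predictions arrive at a higher rate than the localizer updates.
    """
    preds = []
    for k in range(1, steps + 1):
        t = horizon * k / steps
        preds.append(tuple(c + v * t + 0.5 * a * t * t
                           for c, v, a in zip(p, vel, acc)))
    return preds
```

With a localizer period of one second, calling `predict_positions(p2, vel, acc, horizon=1.0, steps=4)` would yield four intermediate position estimates before the next measurement arrives. A real observer would also need to filter measurement noise, which finite differences amplify.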

Convergence-Privacy-Fairness Trade-Off in Personalized Federated Learning

Jun 17, 2025

Abstract:Personalized federated learning (PFL), e.g., the renowned Ditto, strikes a balance between personalization and generalization by conducting federated learning (FL) to guide personalized learning (PL). While FL is unaffected by personalized model training, in Ditto, PL depends on the outcome of the FL. However, the clients' concern about their privacy and consequent perturbation of their local models can affect the convergence and (performance) fairness of PL. This paper presents a PFL scheme, called DP-Ditto, which is a non-trivial extension of Ditto under the protection of differential privacy (DP), and analyzes the trade-off among its privacy guarantee, model convergence, and performance distribution fairness. We also analyze the convergence upper bound of the personalized models under DP-Ditto and derive the optimal number of global aggregations given a privacy budget. Further, we analyze the performance fairness of the personalized models, and reveal the feasibility of optimizing DP-Ditto jointly for convergence and fairness. Experiments validate our analysis and demonstrate that DP-Ditto can surpass the DP-perturbed versions of the state-of-the-art PFL models, such as FedAMP, pFedMe, APPLE, and FedALA, by over 32.71% in fairness and 9.66% in accuracy.
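The abstract does not say which perturbation mechanism DP-Ditto uses. As a generic illustration of how a client might perturb a local model update under DP, the sketch below applies the standard clip-then-add-Gaussian-noise recipe. The function names and the `noise_multiplier` parameter are assumptions for illustration, not taken from the paper.

```python
import math
import random
from typing import List


def clip_update(update: List[float], clip_norm: float) -> List[float]:
    """Scale the update so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]


def privatize_update(update: List[float], clip_norm: float,
                     noise_multiplier: float,
                     rng: random.Random) -> List[float]:
    """Clip the update, then add i.i.d. Gaussian noise to each coordinate.

    The noise standard deviation is noise_multiplier * clip_norm
    (an assumed parameterization). A larger multiplier gives stronger
    privacy and a noisier update.
    """
    clipped = clip_update(update, clip_norm)
    sigma = noise_multiplier * clip_norm
    return [u + rng.gauss(0.0, sigma) for u in clipped]
```

Clipping bounds each client's influence on the aggregate, which is what lets the noise scale be tied to a privacy guarantee. The added noise is also the source of the convergence and fairness degradation that the abstract analyzes.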

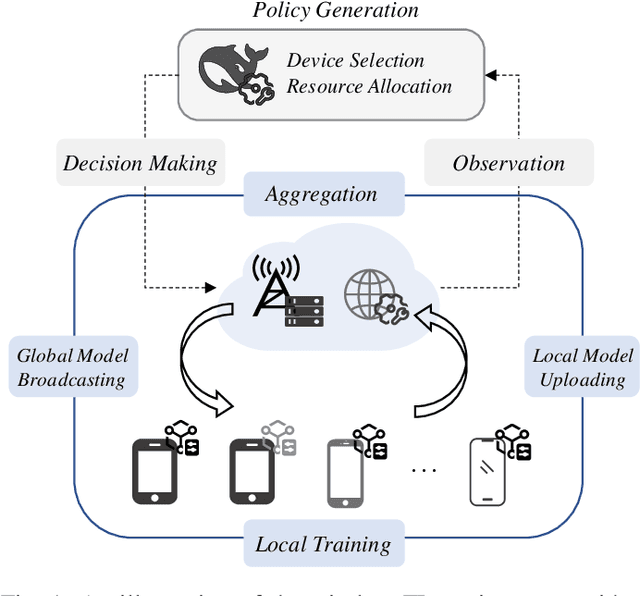

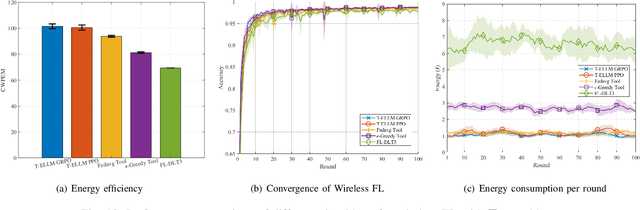

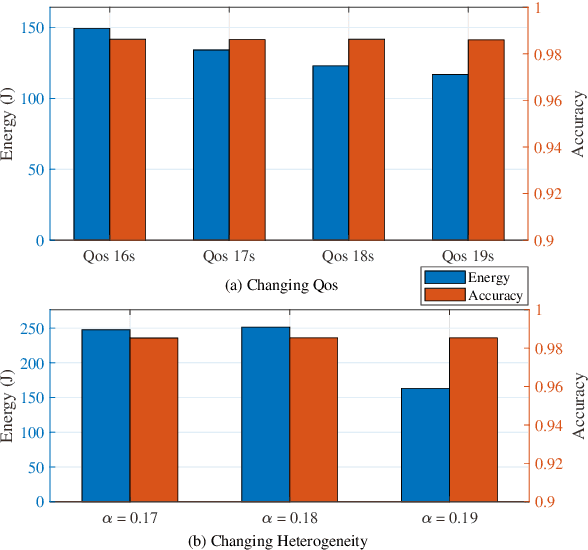

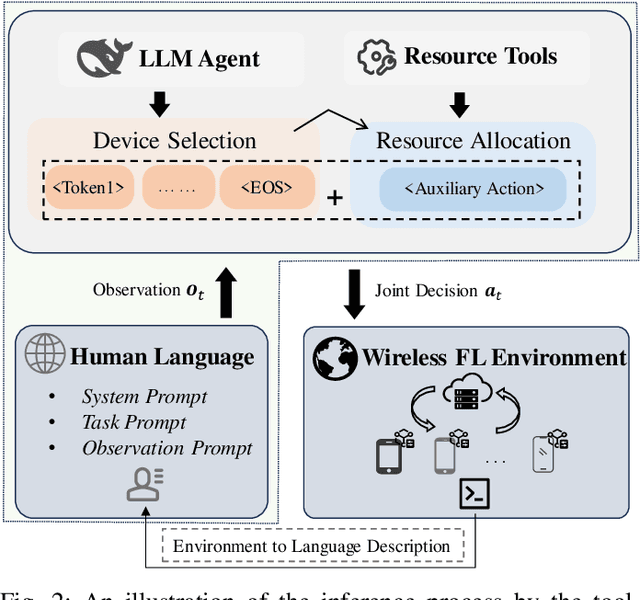

Tool-Aided Evolutionary LLM for Generative Policy Toward Efficient Resource Management in Wireless Federated Learning

May 16, 2025

Abstract:Federated Learning (FL) enables distributed model training across edge devices in a privacy-friendly manner. However, its efficiency heavily depends on effective device selection and high-dimensional resource allocation in dynamic and heterogeneous wireless environments. Conventional methods demand a confluence of domain-specific expertise, extensive hyperparameter tuning, and/or heavy interaction cost. This paper proposes a Tool-aided Evolutionary Large Language Model (T-ELLM) framework to generate a qualified policy for device selection in a wireless FL environment. Unlike conventional optimization methods, T-ELLM leverages natural language-based scenario prompts to enhance generalization across varying network conditions. The framework decouples the joint optimization problem mathematically, enabling tractable learning of device selection policies while delegating resource allocation to convex optimization tools. To improve adaptability, T-ELLM integrates a sample-efficient, model-based virtual learning environment that captures the relationship between device selection and learning performance, facilitating subsequent group relative policy optimization. This concerted approach reduces reliance on real-world interactions, minimizing communication overhead while maintaining high-fidelity decision-making. Theoretical analysis proves that the discrepancy between virtual and real environments is bounded, ensuring the advantage function learned in the virtual environment maintains a provably small deviation from real-world conditions. Experimental results demonstrate that T-ELLM outperforms benchmark methods in energy efficiency and exhibits robust adaptability to environmental changes.

Koopman-Based Generalization of Deep Reinforcement Learning With Application to Wireless Communications

Mar 04, 2025

Abstract:Deep Reinforcement Learning (DRL) is a key machine learning technology driving progress across various scientific and engineering fields, including wireless communication. However, its limited interpretability and generalizability remain major challenges. In supervised learning, generalizability is commonly evaluated through the generalization error using information-theoretic methods. In DRL, the training data is sequential and not independent and identically distributed (i.i.d.), rendering traditional information-theoretic methods unsuitable for generalizability analysis. To address this challenge, this paper proposes a novel analytical method for evaluating the generalizability of DRL. Specifically, we first model the evolution of states and actions in trained DRL algorithms as unknown discrete, stochastic, and nonlinear dynamical functions. Then, we employ a data-driven identification method, the Koopman operator, to approximate these functions, and propose two interpretable representations. Based on these interpretable representations, we develop a rigorous mathematical approach to evaluate the generalizability of DRL algorithms. This approach is formulated using the spectral feature analysis of the Koopman operator, leveraging the $H_\infty$ norm. Finally, we apply this generalization analysis to compare the soft actor-critic method, widely recognized as a robust DRL approach, against the proximal policy optimization algorithm for an unmanned aerial vehicle-assisted mmWave wireless communication scenario.
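The data-driven identification step can be illustrated with a minimal sketch. It assumes the simplest finite-dimensional Koopman approximation, a least-squares fit with the state itself as the observable (as in dynamic mode decomposition), and uses NumPy. The function names are hypothetical, and the paper's representations and $H_\infty$-norm analysis are not reproduced here.

```python
import numpy as np


def fit_linear_operator(states: np.ndarray) -> np.ndarray:
    """Fit K so that states[k + 1] is approximately K @ states[k].

    states has shape (T, n): T successive snapshots of an n-dimensional
    state. The fit is the least-squares solution K = Y X^+, where X holds
    the snapshots 0..T-2 and Y the snapshots 1..T-1 as columns.
    """
    X = states[:-1].T  # shape (n, T - 1)
    Y = states[1:].T   # shape (n, T - 1)
    return Y @ np.linalg.pinv(X)


def spectral_radius(K: np.ndarray) -> float:
    """Largest eigenvalue magnitude of K.

    A value below 1 means trajectories of the fitted linear model decay.
    """
    return float(np.max(np.abs(np.linalg.eigvals(K))))
```

For a nonlinear system, the snapshots would first be lifted through a dictionary of observables before the fit. The identity observable used here is only adequate when the dynamics are close to linear.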

Next-Generation Wireless: Tracking the Evolutionary Path of 6G Mobile Communication

Jan 24, 2025

Abstract:The mobile communications industry moves at lightning speed, and discussions about 6G technologies have already begun. In this article, we intend to take the readers on a journey to discover 6G and its evolutionary stages. The journey starts with an overview of the technical constraints of 5G prompting the need for a new generation of mobile systems. The initial discussion is followed by an examination of the 6G vision, use cases, technical requirements, technology enablers, and potential network architecture. We conclude the discussion by reviewing the transformative opportunities of this technology in society, businesses and industries, along with the anticipated technological limitations of the network that will drive the development of further mobile generations. Our goal is to deliver a friendly, informative, precise, and engaging narrative that could give readers a panoramic overview of this important topic.

Multi-Task Semantic Communication With Graph Attention-Based Feature Correlation Extraction

Jan 02, 2025

Abstract:Multi-task semantic communication can serve multiple learning tasks using a shared encoder model. Existing models have overlooked the intricate relationships between features extracted during the encoding process of tasks. This paper adds a new graph attention inter-block (GAI) module to the encoder/transmitter of a multi-task semantic communication system. Unlike existing techniques, the module enriches the features for multiple tasks by embedding the intermediate outputs of encoding in the features. The key idea is that we interpret the outputs of the intermediate feature extraction blocks of the encoder as the nodes of a graph to capture the correlations of the intermediate features. Another important aspect is that we refine the node representation using a graph attention mechanism to extract the correlations and a multi-layer perceptron network to associate the node representations with different tasks. Consequently, the intermediate features are weighted and embedded into the features transmitted for executing multiple tasks at the receiver. Experiments demonstrate that the proposed model surpasses the most competitive and publicly available models by 11.4% on the CityScapes 2Task dataset and outperforms the established state-of-the-art by 3.97% on the NYU V2 3Task dataset when the bandwidth ratio of the communication channel (i.e., compression level for transmission over the channel) is as constrained as 1/12.

Distributed Traffic Control in Complex Dynamic Roadblocks: A Multi-Agent Deep RL Approach

Dec 31, 2024

Abstract:Autonomous Vehicles (AVs) represent a transformative advancement in the transportation industry. These vehicles have sophisticated sensors, advanced algorithms, and powerful computing systems that allow them to navigate and operate without direct human intervention. However, AVs' systems still get overwhelmed when they encounter a complex dynamic change in the environment resulting from an accident or a roadblock for maintenance. The advanced features of Sixth Generation (6G) technology are set to offer strong support to AVs, enabling real-time data exchange and management of complex driving maneuvers. This paper proposes a Multi-Agent Reinforcement Learning (MARL) framework to improve AVs' decision-making in dynamic and complex Intelligent Transportation Systems (ITS) utilizing 6G-V2X communication. The primary objective is to enable AVs to avoid roadblocks efficiently by changing lanes while maintaining optimal traffic flow and maximizing the mean harmonic speed. To ensure realistic operations, key constraints such as minimum vehicle speed, roadblock count, and lane change frequency are integrated. We train and test the proposed MARL model with two traffic simulation scenarios using the SUMO and TraCI interface. Through extensive simulations, we demonstrate that the proposed model adapts to various traffic conditions and achieves efficient and robust traffic flow management. The trained model effectively navigates dynamic roadblocks, promoting improved traffic efficiency in AV operations with more than 70% efficiency over other benchmark solutions.

Science-Informed Deep Learning (ScIDL) With Applications to Wireless Communications

Jun 29, 2024

Abstract:Given the extensive and growing capabilities offered by deep learning (DL), more researchers are turning to DL to address complex challenges in next-generation (xG) communications. However, despite its progress, DL also reveals several limitations that are becoming increasingly evident. One significant issue is its lack of interpretability, which is especially critical for safety-sensitive applications. Another significant consideration is that DL may not comply with the constraints set by physics laws or given security standards, which are essential for reliable DL. Additionally, DL models often struggle outside their training data distributions, which is known as poor generalization. Moreover, there is a scarcity of theoretical guidance on designing DL algorithms. These challenges have prompted the emergence of a burgeoning field known as science-informed DL (ScIDL). ScIDL aims to integrate existing scientific knowledge with DL techniques to develop more powerful algorithms. The core objective of this article is to provide a brief tutorial on ScIDL that illustrates its building blocks and distinguishes it from conventional DL. Furthermore, we discuss both recent applications of ScIDL and potential future research directions in the field of wireless communications.
