David Vazquez

Towards good validation metrics for generative models in offline model-based optimisation

Nov 19, 2022

Abstract: In this work we propose a principled evaluation framework for model-based optimisation to measure how well a generative model can extrapolate. We achieve this by interpreting the training and validation splits as draws from their respective 'truncated' ground truth distributions, where examples in the validation set contain scores much larger than those in the training set. Model selection is performed on the validation set for some prescribed validation metric. A major research question, however, is determining which validation metric correlates best with the expected value of generated candidates with respect to the ground truth oracle; work towards answering this question can translate to large economic gains since it is expensive to evaluate the ground truth oracle in the real world. We compare various validation metrics for generative adversarial networks using our framework. We also discuss limitations of our framework with respect to existing datasets and how progress can be made to mitigate them.

Flaky Performances when Pretraining on Relational Databases

Nov 09, 2022

Abstract: We explore the downstream task performance of graph neural network (GNN) self-supervised learning (SSL) methods trained on subgraphs extracted from relational databases (RDBs). Intuitively, this joint use of SSL and GNNs should make it possible to leverage more of the available data, which could translate to better results. However, we found that naively porting contrastive SSL techniques can cause "negative transfer": linear evaluation on fixed representations from a pretrained model performs worse than on representations from the randomly-initialized model. Based on the conjecture that contrastive SSL conflicts with the message passing layers of the GNN, we propose InfoNode: a contrastive loss aiming to maximize the mutual information between a node's initial- and final-layer representation. The primary empirical results support our conjecture and the effectiveness of InfoNode.

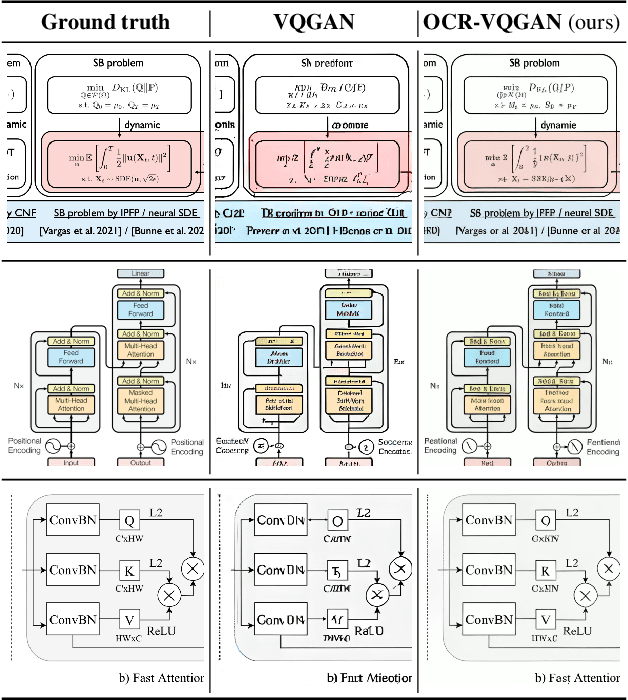

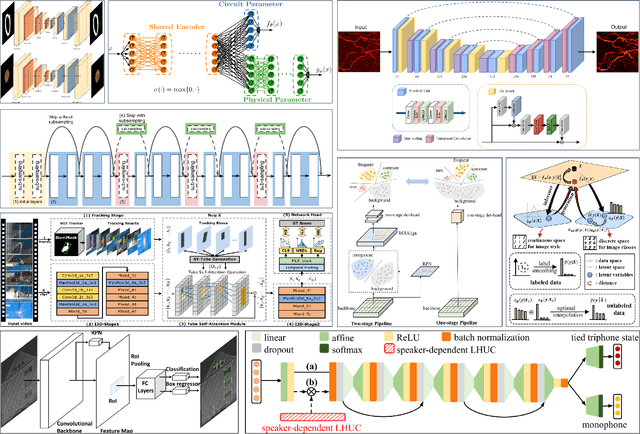

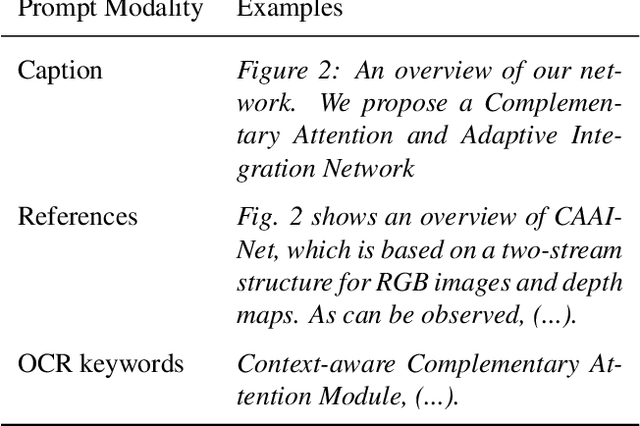

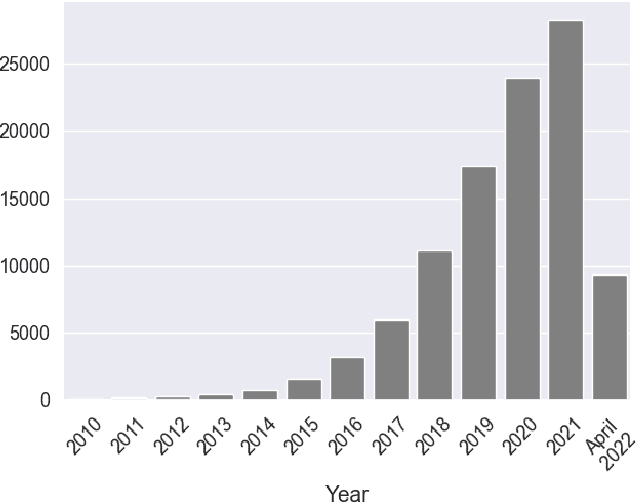

OCR-VQGAN: Taming Text-within-Image Generation

Oct 19, 2022

Abstract: Synthetic image generation has recently experienced significant improvements in domains such as natural image or art generation. However, the problem of figure and diagram generation remains unexplored. A challenging aspect of generating figures and diagrams is effectively rendering readable text within the images. To alleviate this problem, we present OCR-VQGAN, an image encoder and decoder that leverages OCR pre-trained features to optimize a text perceptual loss, encouraging the architecture to preserve high-fidelity text and diagram structure. To explore our approach, we introduce the Paper2Fig100k dataset, with over 100k images of figures and texts from research papers. The figures show architecture diagrams and methodologies of articles available at arXiv.org from fields like artificial intelligence and computer vision. Figures usually include text and discrete objects, e.g., boxes in a diagram, with lines and arrows that connect them. We demonstrate the effectiveness of OCR-VQGAN by conducting several experiments on the task of figure reconstruction. Additionally, we explore the qualitative and quantitative impact of weighting different perceptual metrics in the overall loss function. We release code, models, and dataset at https://github.com/joanrod/ocr-vqgan.

CADet: Fully Self-Supervised Anomaly Detection With Contrastive Learning

Oct 04, 2022

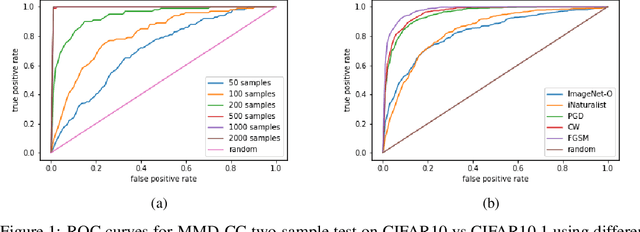

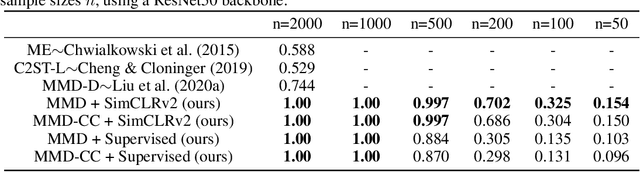

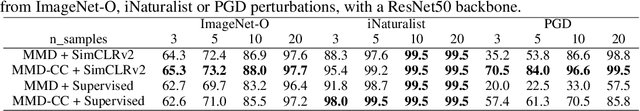

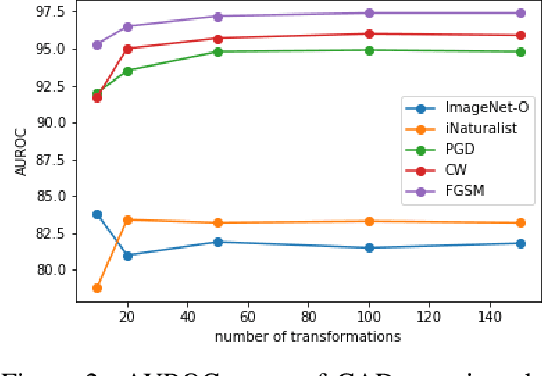

Abstract: Handling out-of-distribution (OOD) samples has become a major stake in the real-world deployment of machine learning systems. This work explores the application of self-supervised contrastive learning to the simultaneous detection of two types of OOD samples: unseen classes and adversarial perturbations. Since in practice the distribution of such samples is not known in advance, we do not assume access to OOD examples. We show that similarity functions trained with contrastive learning can be leveraged with the maximum mean discrepancy (MMD) two-sample test to verify whether two independent sets of samples are drawn from the same distribution. Inspired by this approach, we introduce CADet (Contrastive Anomaly Detection), a method based on image augmentations to perform anomaly detection on single samples. CADet compares favorably to adversarial detection methods to detect adversarially perturbed samples on ImageNet. Simultaneously, it achieves comparable performance to unseen label detection methods on two challenging benchmarks: ImageNet-O and iNaturalist. CADet is fully self-supervised and requires neither labels for in-distribution samples nor access to OOD examples.
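
As a rough illustration of the MMD two-sample test mentioned in the abstract, the sketch below computes a biased estimate of the squared MMD and a permutation-based p-value. It is not the CADet implementation: plain cosine similarity on raw vectors stands in for a similarity function learned with contrastive training, and the function names (`cosine_similarity`, `mmd_squared`, `permutation_p_value`) and the default of 200 permutations are assumptions made for this example.

```python
import math
import random


def cosine_similarity(u, v):
    """Stand-in kernel (assumption): cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def mmd_squared(xs, ys, kernel):
    """Biased estimate of the squared maximum mean discrepancy."""
    def mean_kernel(a, b):
        return sum(kernel(p, q) for p in a for q in b) / (len(a) * len(b))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)


def permutation_p_value(xs, ys, kernel, n_permutations=200, seed=0):
    """Estimate a p-value by reshuffling the pooled samples.

    Under the null hypothesis (same distribution), the observed statistic
    should look like the statistics obtained from random re-splits.
    """
    rng = random.Random(seed)
    observed = mmd_squared(xs, ys, kernel)
    pooled = list(xs) + list(ys)
    at_least_as_large = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        stat = mmd_squared(pooled[:len(xs)], pooled[len(xs):], kernel)
        if stat >= observed:
            at_least_as_large += 1
    # Add-one correction keeps the estimate strictly positive.
    return (at_least_as_large + 1) / (n_permutations + 1)
```

A small p-value suggests the two sets were drawn from different distributions; in the setting the abstract describes, the kernel would be a learned similarity applied to image representations rather than cosine similarity on raw vectors.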

Constraining Representations Yields Models That Know What They Don't Know

Aug 30, 2022

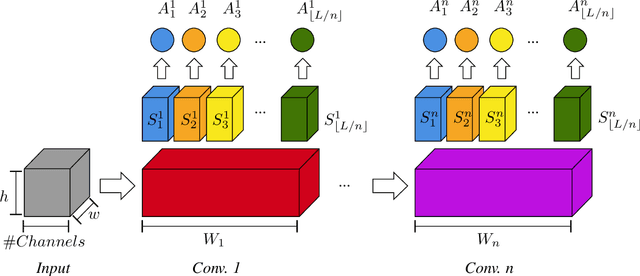

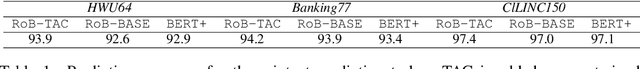

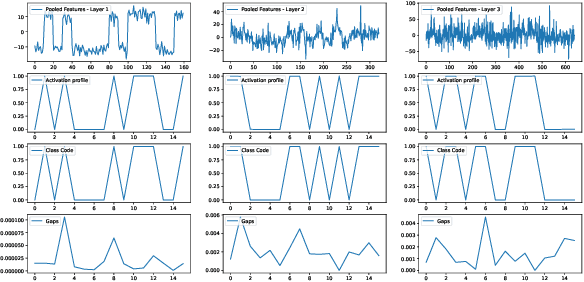

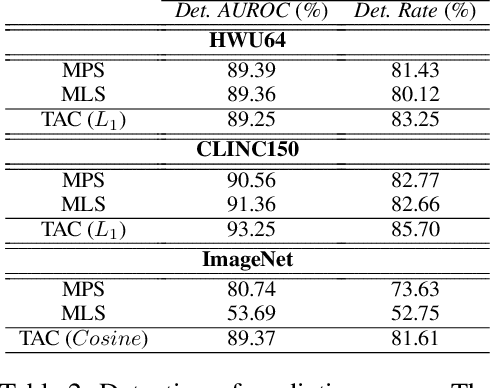

Abstract: A well-known failure mode of neural networks corresponds to high confidence erroneous predictions, especially for data that somehow differs from the training distribution. Such an unsafe behaviour limits their applicability. To counter that, we show that models offering accurate confidence levels can be defined via adding constraints in their internal representations. That is, we encode class labels as fixed unique binary vectors, or class codes, and use those to enforce class-dependent activation patterns throughout the model. Resulting predictors are dubbed Total Activation Classifiers (TAC), and TAC is used as an additional component to a base classifier to indicate how reliable a prediction is. Given a data instance, TAC slices intermediate representations into disjoint sets and reduces such slices into scalars, yielding activation profiles. During training, activation profiles are pushed towards the code assigned to a given training instance. At testing time, one can predict the class corresponding to the code that best matches the activation profile of an example. Empirically, we observe that the resemblance between activation patterns and their corresponding codes results in an inexpensive unsupervised approach for inducing discriminative confidence scores. Namely, we show that TAC is at least as good as state-of-the-art confidence scores extracted from existing models, while strictly improving the model's value on the rejection setting. TAC was also observed to work well on multiple types of architectures and data modalities.
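
The slice-and-reduce step and the code-matching step described in the abstract can be sketched as follows. This is a toy illustration under stated assumptions, not the authors' implementation: it uses a single flat feature vector, takes the mean of each slice as the reduction, and matches codes by squared Euclidean distance. The names `activation_profile` and `predict_from_codes` are hypothetical.

```python
def activation_profile(features, n_slices):
    """Split a flat feature vector into disjoint, equal-sized slices and
    reduce each slice to a scalar (here: its mean, an assumption)."""
    if len(features) % n_slices != 0:
        raise ValueError("feature length must be divisible by n_slices")
    size = len(features) // n_slices
    return [
        sum(features[i * size:(i + 1) * size]) / size
        for i in range(n_slices)
    ]


def predict_from_codes(profile, class_codes):
    """Return the label whose binary code is closest to the profile
    (squared Euclidean distance, an assumption)."""
    def distance(code):
        return sum((p - c) ** 2 for p, c in zip(profile, code))

    return min(class_codes, key=lambda label: distance(class_codes[label]))


# Toy usage: 8 activations, 4 slices, two classes with 4-bit codes.
features = [0.9, 0.8, 0.1, 0.0, 0.7, 0.9, 0.0, 0.1]
codes = {"cat": [1, 0, 1, 0], "dog": [0, 1, 0, 1]}
profile = activation_profile(features, n_slices=4)
print(predict_from_codes(profile, codes))
```

The distance to the best-matching code could also serve as a confidence signal, in the spirit of the rejection setting the abstract mentions, though how the scores are actually derived is not specified here.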

Workflow Discovery from Dialogues in the Low Data Regime

May 24, 2022

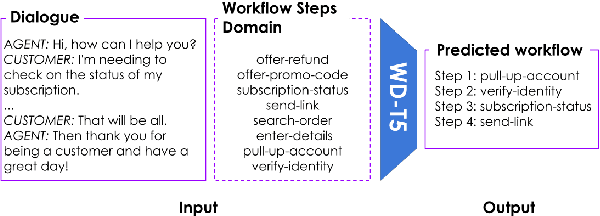

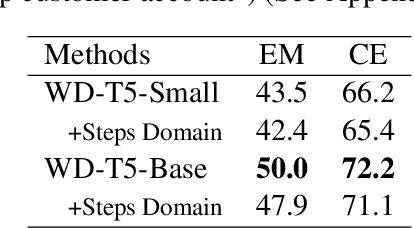

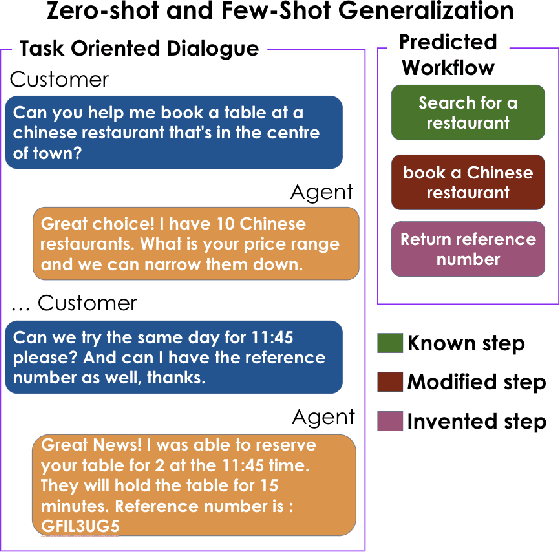

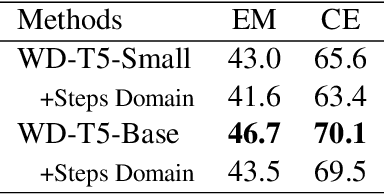

Abstract: Text-based dialogues are now widely used to solve real-world problems. In cases where solution strategies are already known, they can sometimes be codified into workflows and used to guide humans or artificial agents through the task of helping clients. We are interested in the situation where a formal workflow may not yet exist, but we wish to discover the action steps that have been taken to resolve problems. We examine a novel transformer-based approach for this situation and we present experiments where we summarize dialogues in the Action-Based Conversations Dataset (ABCD) with workflows. Since the ABCD dialogues were generated using known workflows to guide agents, we can evaluate our ability to extract such workflows using ground truth sequences of action steps, organized as workflows. We propose and evaluate an approach that conditions models on the set of allowable action steps and we show that using this strategy we can improve workflow discovery (WD) performance. Our conditioning approach also improves zero-shot and few-shot WD performance when transferring learned models to entirely new domains (i.e. the MultiWOZ setting). Further, a modified variant of our architecture achieves state-of-the-art performance on the related but different problems of Action State Tracking (AST) and Cascading Dialogue Success (CDS) on the ABCD.

Data Augmentation for Intent Classification with Off-the-shelf Large Language Models

Apr 05, 2022

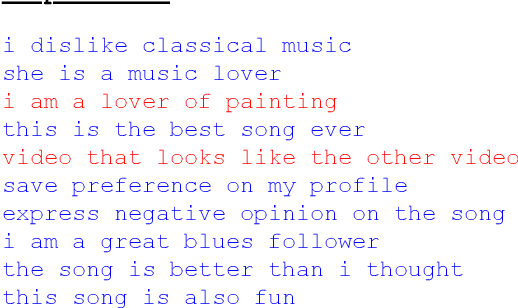

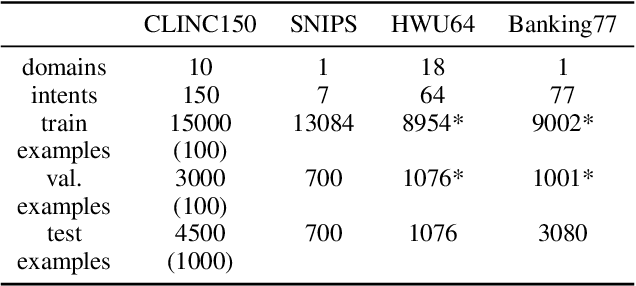

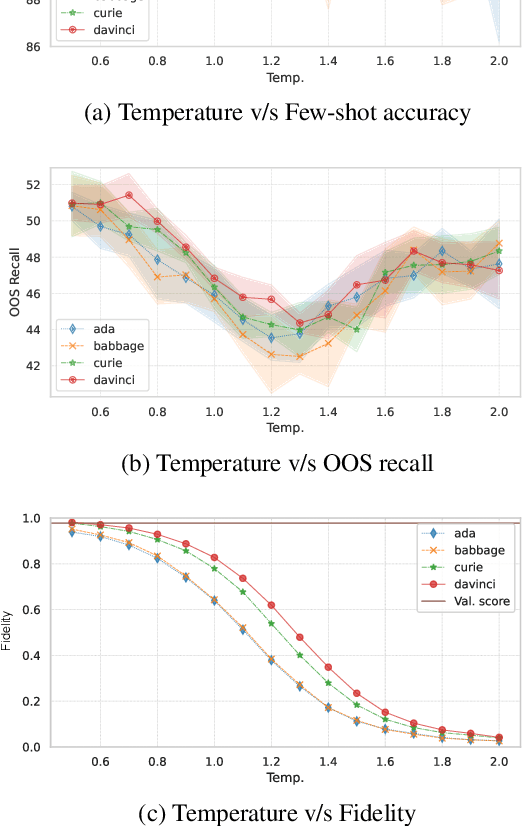

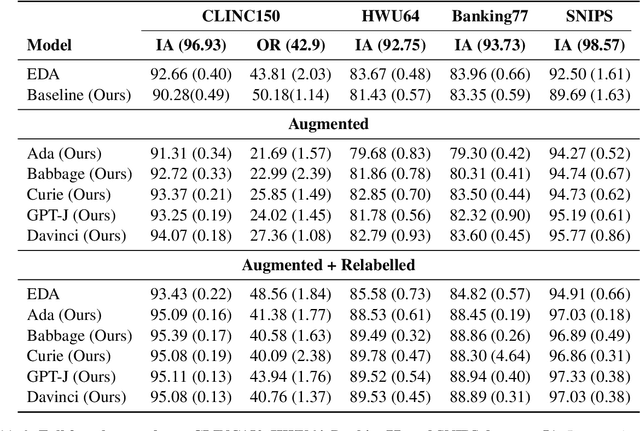

Abstract: Data augmentation is a widely employed technique to alleviate the problem of data scarcity. In this work, we propose a prompting-based approach to generate labelled training data for intent classification with off-the-shelf language models (LMs) such as GPT-3. An advantage of this method is that no task-specific LM fine-tuning for data generation is required; hence the method requires no hyper-parameter tuning and is applicable even when the available training data is very scarce. We evaluate the proposed method in a few-shot setting on four diverse intent classification tasks. We find that GPT-generated data significantly boosts the performance of intent classifiers when intents in consideration are sufficiently distinct from each other. In tasks with semantically close intents, we observe that the generated data is less helpful. Our analysis shows that this is because GPT often generates utterances that belong to a closely-related intent instead of the desired one. We present preliminary evidence that a prompting-based GPT classifier could be helpful in filtering the generated data to enhance its quality.

Challenges in leveraging GANs for few-shot data augmentation

Mar 30, 2022

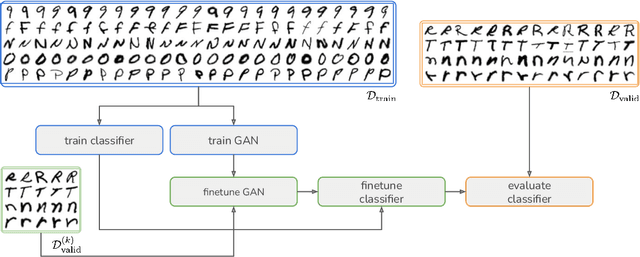

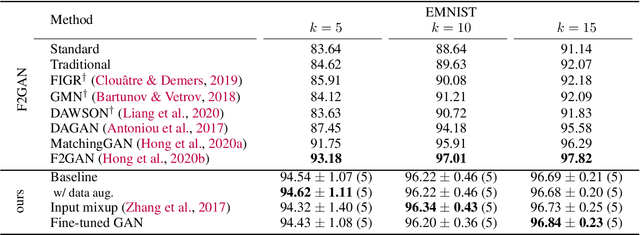

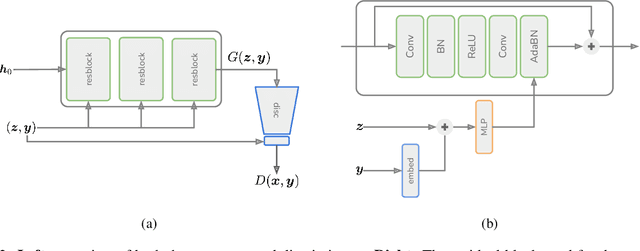

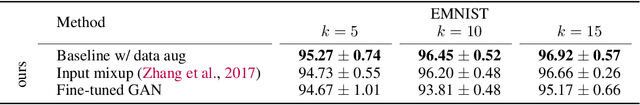

Abstract: In this paper, we explore the use of GAN-based few-shot data augmentation as a method to improve few-shot classification performance. We explore how a GAN can be fine-tuned for such a task (including in a class-incremental manner), and conduct a rigorous empirical investigation into how well these models can improve few-shot classification. We identify issues related to the difficulty of training such generative models under a purely supervised regime with very few examples, as well as issues regarding the evaluation protocols of existing works. We also find that in this regime, classification accuracy is highly sensitive to how the classes of the dataset are randomly split. Therefore, we propose a semi-supervised fine-tuning approach as a more pragmatic way forward to address these problems.

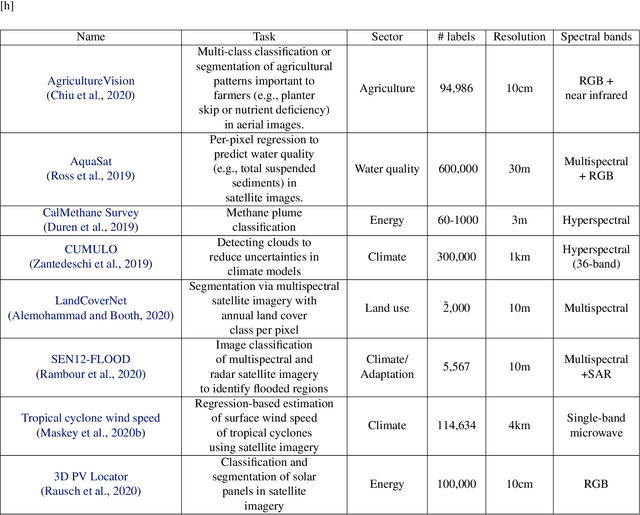

Toward Foundation Models for Earth Monitoring: Proposal for a Climate Change Benchmark

Dec 01, 2021

Abstract: Recent progress in self-supervision shows that pre-training large neural networks on vast amounts of unsupervised data can lead to impressive increases in generalisation for downstream tasks. Such models, recently coined as foundation models, have been transformational to the field of natural language processing. While similar models have also been trained on large corpora of images, they are not well suited for remote sensing data. To stimulate the development of foundation models for Earth monitoring, we propose to develop a new benchmark comprising a variety of downstream tasks related to climate change. We believe that this can lead to substantial improvements in many existing applications and facilitate the development of new applications. This proposal is also a call for collaboration with the aim of developing a better evaluation process to mitigate potential downsides of foundation models for Earth monitoring.

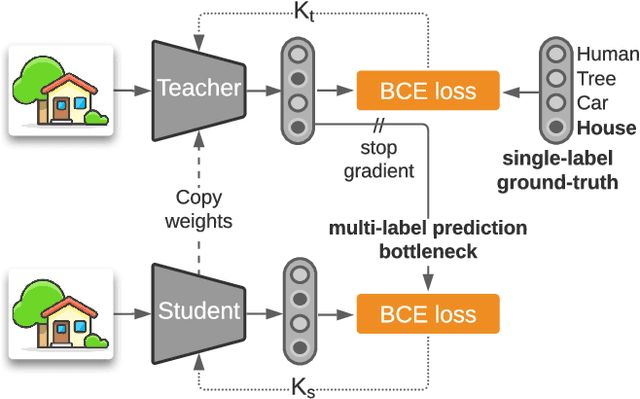

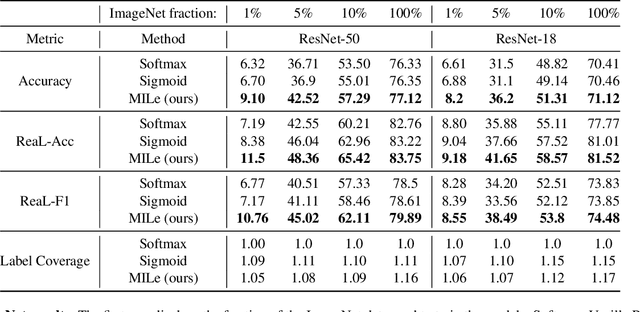

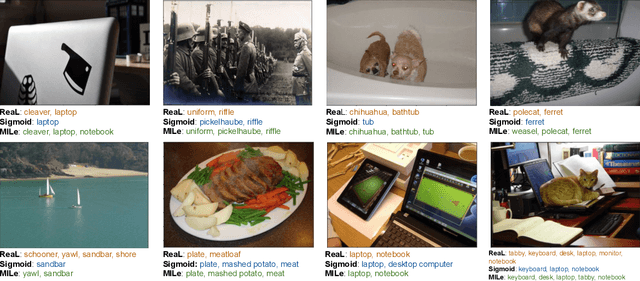

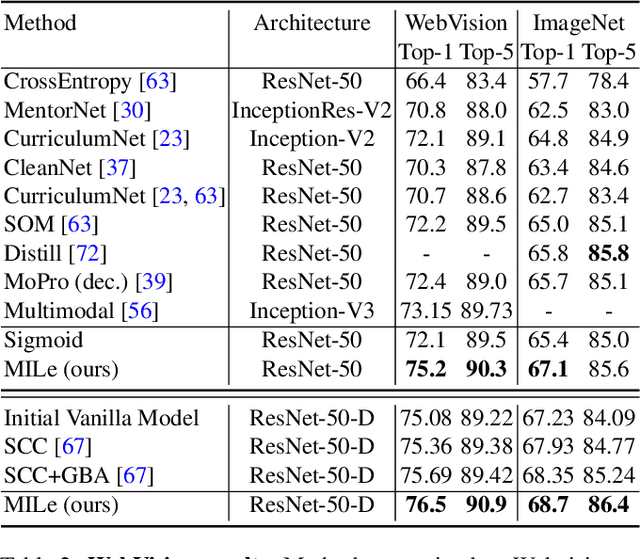

Multi-label Iterated Learning for Image Classification with Label Ambiguity

Nov 23, 2021

Abstract: Transfer learning from large-scale pre-trained models has become essential for many computer vision tasks. Recent studies have shown that datasets like ImageNet are weakly labeled since images with multiple object classes present are assigned a single label. This ambiguity biases models towards a single prediction, which could result in the suppression of classes that tend to co-occur in the data. Inspired by language emergence literature, we propose multi-label iterated learning (MILe) to incorporate the inductive biases of multi-label learning from single labels using the framework of iterated learning. MILe is a simple yet effective procedure that builds a multi-label description of the image by propagating binary predictions through successive generations of teacher and student networks with a learning bottleneck. Experiments show that our approach exhibits systematic benefits on ImageNet accuracy as well as ReaL F1 score, which indicates that MILe deals better with label ambiguity than the standard training procedure, even when fine-tuning from self-supervised weights. We also show that MILe is effective at reducing label noise, achieving state-of-the-art performance on real-world large-scale noisy data such as WebVision. Furthermore, MILe improves performance in class incremental settings such as IIRC and it is robust to distribution shifts. Code: https://github.com/rajeswar18/MILe