David Doermann

LineFormer: Rethinking Line Chart Data Extraction as Instance Segmentation

May 03, 2023

Abstract: Data extraction from line-chart images is an essential component of automated document understanding, as line charts are a ubiquitous data visualization format. However, the wide range of visual and structural variations in multi-line graphs makes them particularly challenging to parse automatically. Existing works are not robust to all these variations, either taking an all-chart unified approach or relying on auxiliary information such as legends for line data extraction. In this work, we propose LineFormer, a robust approach to line data extraction using instance segmentation. We achieve state-of-the-art performance on several benchmark synthetic and real chart datasets. Our implementation is available at https://github.com/TheJaeLal/LineFormer .

Progressive Multi-view Human Mesh Recovery with Self-Supervision

Dec 10, 2022

Abstract: To date, little attention has been given to multi-view 3D human mesh estimation, despite its real-life applicability (e.g., motion capture, sport analysis) and robustness to single-view ambiguities. Existing solutions typically generalize poorly to new settings, largely due to the limited diversity of image-mesh pairs in multi-view training data. To address this shortcoming, researchers have explored the use of synthetic images. But besides the usual impact of the visual gap between rendered and target data, synthetic-data-driven multi-view estimators also suffer from overfitting to the camera viewpoint distribution sampled during training, which usually differs from real-world distributions. Tackling both challenges, we propose a novel simulation-based training pipeline for multi-view human mesh recovery, which (a) relies on intermediate 2D representations that are more robust to the synthetic-to-real domain gap; (b) leverages learnable calibration and triangulation to adapt to more diversified camera setups; and (c) progressively aggregates multi-view information in a canonical 3D space to remove ambiguities in 2D representations. Through extensive benchmarking, we demonstrate the superiority of the proposed solution, especially for unseen in-the-wild scenarios.

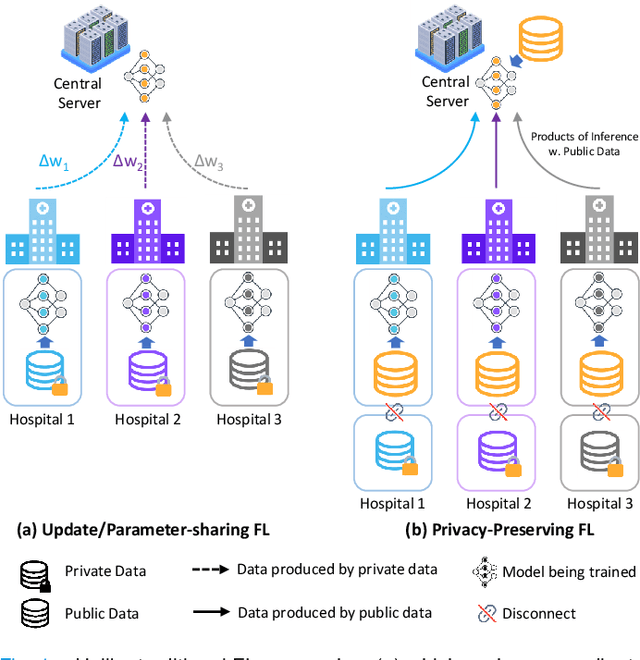

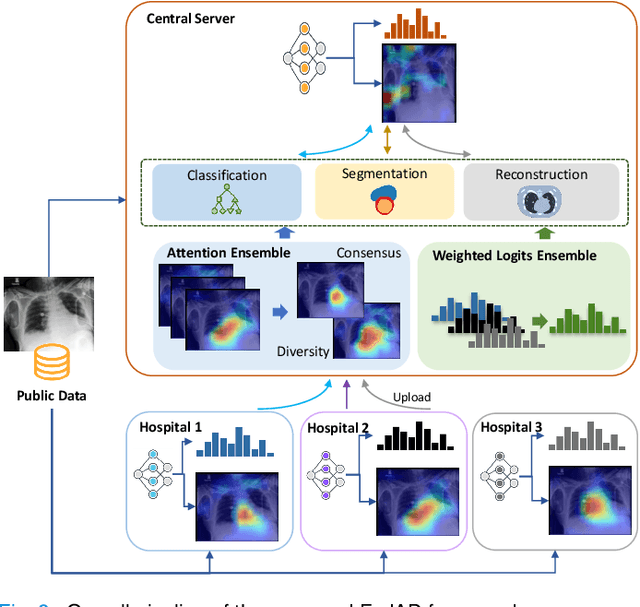

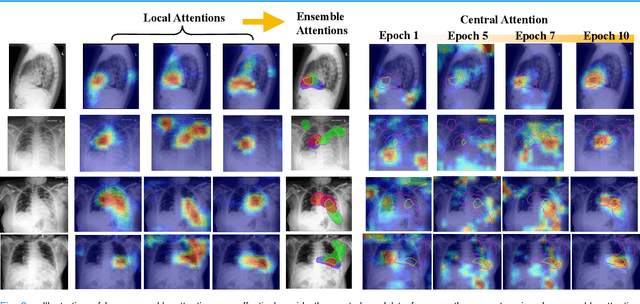

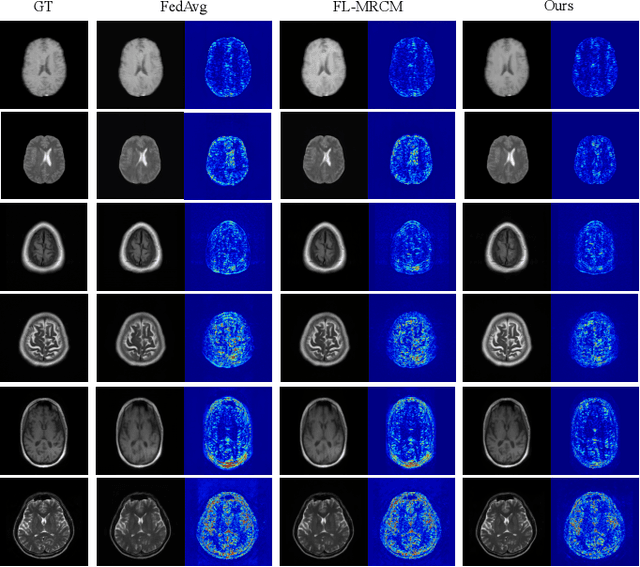

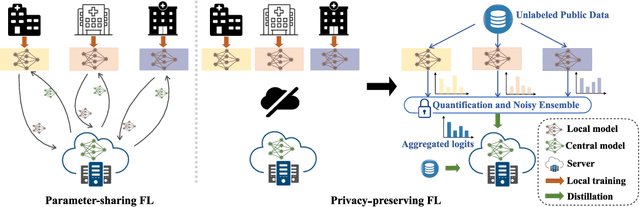

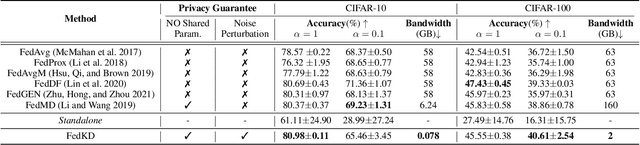

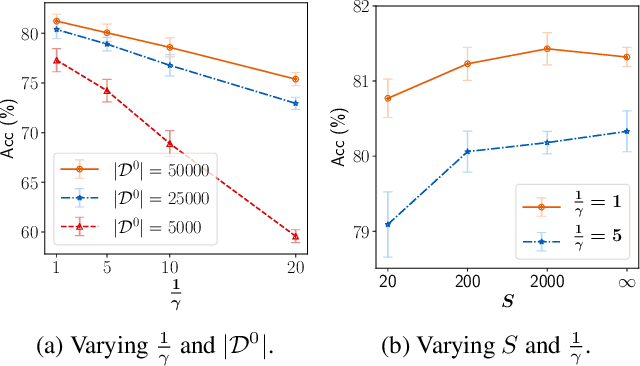

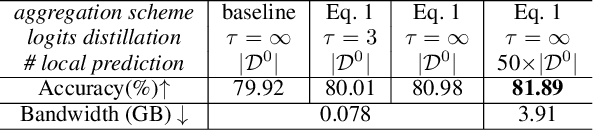

Federated Learning with Privacy-Preserving Ensemble Attention Distillation

Oct 16, 2022

Abstract: Federated Learning (FL) is a machine learning paradigm where many local nodes collaboratively train a central model while keeping the training data decentralized. This is particularly relevant for clinical applications, since patient data usually cannot be transferred out of medical facilities. Existing FL methods typically share model parameters or employ co-distillation to address the issue of unbalanced data distribution. However, they also require numerous rounds of synchronized communication and, more importantly, suffer from a privacy leakage risk. In this work, we propose a privacy-preserving FL framework that leverages unlabeled public data for one-way offline knowledge distillation. The central model is learned from local knowledge via ensemble attention distillation. Like existing FL approaches, our technique uses decentralized and heterogeneous local data, but more importantly, it significantly reduces the risk of privacy leakage. Based on extensive experiments on image classification, segmentation, and reconstruction tasks, we demonstrate that our method achieves very competitive performance with more robust privacy preservation.

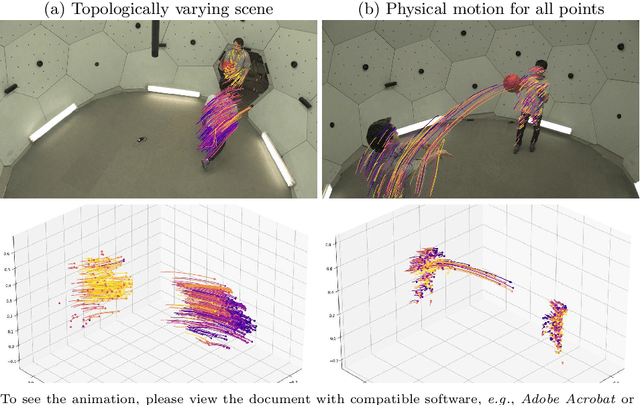

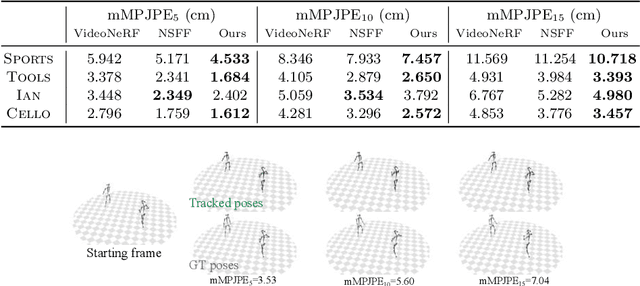

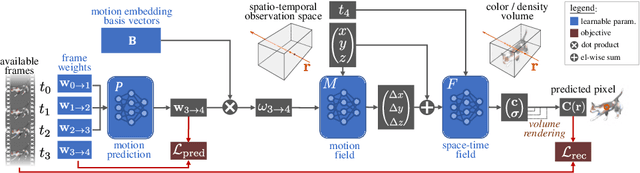

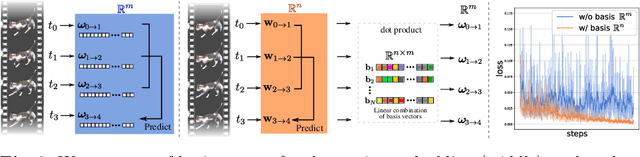

PREF: Predictability Regularized Neural Motion Fields

Sep 21, 2022

Abstract: Knowing the 3D motions in a dynamic scene is essential to many vision applications. Recent progress is mainly focused on estimating the activity of some specific elements like humans. In this paper, we leverage a neural motion field for estimating the motion of all points in a multiview setting. Modeling the motion from a dynamic scene with multiview data is challenging due to the ambiguities in points of similar color and points with time-varying color. We propose to regularize the estimated motion to be predictable. If the motion from previous frames is known, then the motion in the near future should be predictable. Therefore, we introduce a predictability regularization by first conditioning the estimated motion on latent embeddings, then by adopting a predictor network to enforce predictability on the embeddings. The proposed framework PREF (Predictability REgularized Fields) achieves results on par with or better than state-of-the-art neural motion field-based dynamic scene representation methods, while requiring no prior knowledge of the scene.

Preserving Privacy in Federated Learning with Ensemble Cross-Domain Knowledge Distillation

Sep 10, 2022

Abstract: Federated Learning (FL) is a machine learning paradigm where local nodes collaboratively train a central model while the training data remains decentralized. Existing FL methods typically share model parameters or employ co-distillation to address the issue of unbalanced data distribution. However, they suffer from communication bottlenecks. More importantly, they risk privacy leakage. In this work, we develop a privacy-preserving and communication-efficient method in an FL framework with one-shot offline knowledge distillation using unlabeled, cross-domain public data. We propose a quantized and noisy ensemble of local predictions from completely trained local models for stronger privacy guarantees without sacrificing accuracy. Based on extensive experiments on image classification and text classification tasks, we show that our privacy-preserving method outperforms baseline FL algorithms in both accuracy and communication efficiency.
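The idea of a quantized and noisy ensemble of local predictions can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `noisy_quantized_ensemble`, the uniform quantization grid, the Gaussian noise, the order of the two steps, and all parameter values are assumptions chosen only for illustration.

```python
import random


def noisy_quantized_ensemble(local_probs, levels=4, noise_scale=0.05, seed=0):
    """Combine per-node class probabilities for ONE public sample.

    local_probs: list of per-node probability lists, one entry per class.
    levels, noise_scale, seed: hypothetical knobs, not taken from the paper.
    """
    rng = random.Random(seed)
    num_nodes = len(local_probs)
    num_classes = len(local_probs[0])
    totals = [0.0] * num_classes
    for probs in local_probs:
        for c, p in enumerate(probs):
            # Assumption: coarse uniform quantization hides fine-grained detail.
            quantized = round(p * levels) / levels
            # Assumption: additive Gaussian noise on each quantized value.
            noise = rng.gauss(0.0, noise_scale) if noise_scale > 0 else 0.0
            totals[c] += quantized + noise
    # Average over nodes, keep values positive, and renormalize to sum to 1.
    averaged = [max(t / num_nodes, 1e-12) for t in totals]
    norm = sum(averaged)
    return [a / norm for a in averaged]


if __name__ == "__main__":
    # Three hypothetical local models scoring one unlabeled public sample.
    local = [[0.7, 0.2, 0.1], [0.6, 0.3, 0.1], [0.8, 0.1, 0.1]]
    print(noisy_quantized_ensemble(local))
```

In a one-shot distillation setting of the kind the abstract describes, soft labels like these would be computed once per public sample and used to train the central model, so the nodes would not need to exchange parameters over many rounds.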

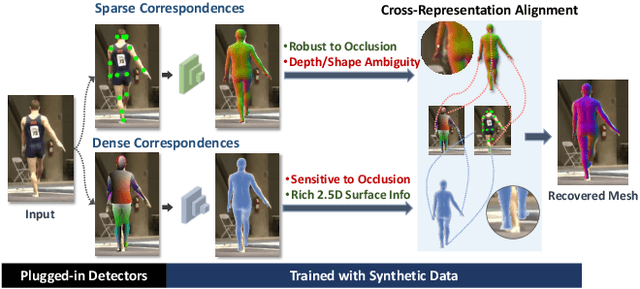

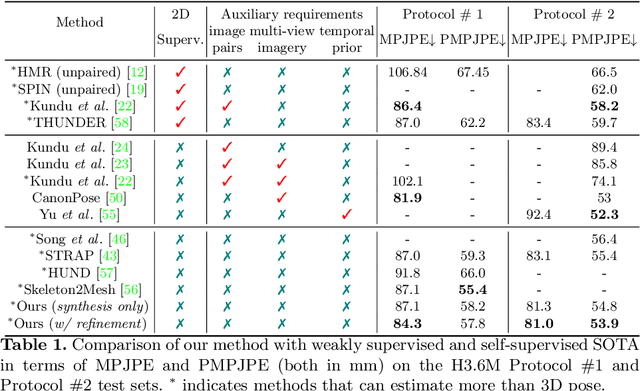

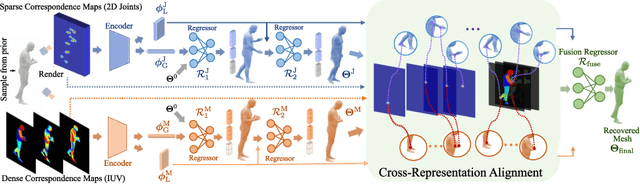

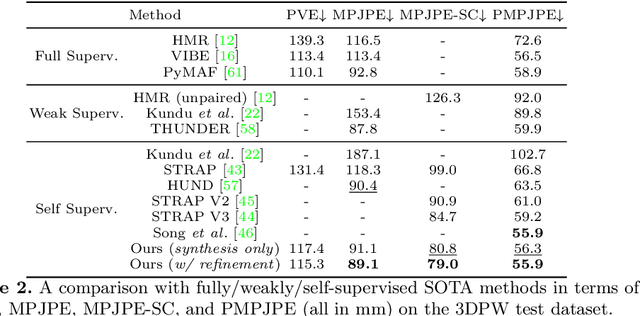

Self-supervised Human Mesh Recovery with Cross-Representation Alignment

Sep 10, 2022

Abstract: Fully supervised human mesh recovery methods are data-hungry and have poor generalizability due to the limited availability and diversity of 3D-annotated benchmark datasets. Recent progress in self-supervised human mesh recovery has been made using synthetic-data-driven training paradigms where the model is trained on synthetic pairs of 2D representations (e.g., 2D keypoints and segmentation masks) and 3D meshes. However, synthetic dense correspondence maps (i.e., IUV) have rarely been explored, since the domain gap between synthetic training data and real testing data is hard to address for 2D dense representations. To alleviate this domain gap on IUV, we propose cross-representation alignment, which utilizes the complementary information from the robust but sparse representation (2D keypoints). Specifically, the alignment errors between the initial mesh estimation and both 2D representations are forwarded into the regressor and dynamically corrected in the following mesh regression. This adaptive cross-representation alignment explicitly learns from the deviations and captures complementary information: robustness from the sparse representation and richness from the dense representation. We conduct extensive experiments on multiple standard benchmark datasets and demonstrate competitive results, helping take a step towards reducing the annotation effort needed to produce state-of-the-art models in human mesh estimation.

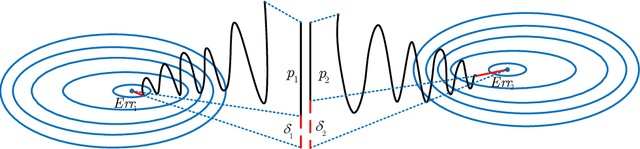

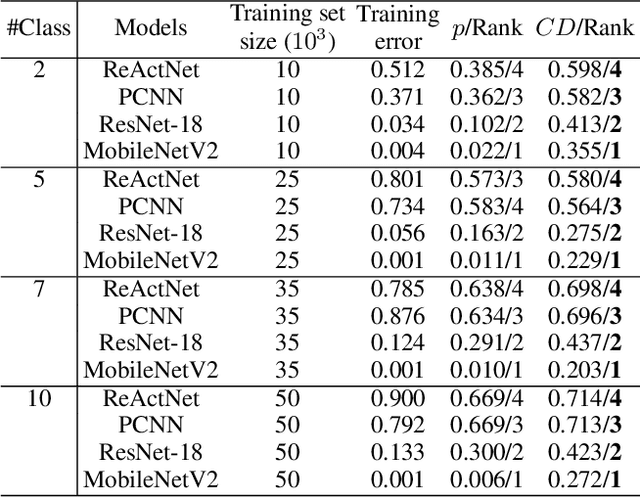

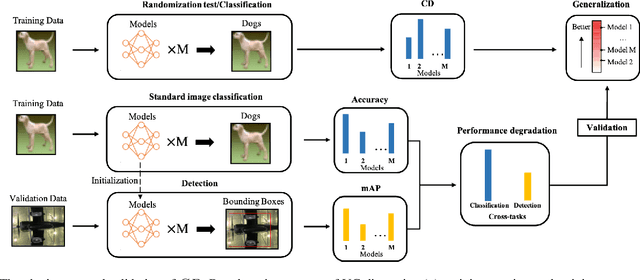

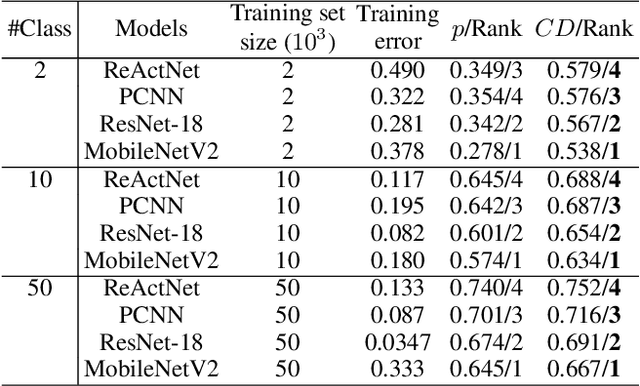

Confidence Dimension for Deep Learning based on Hoeffding Inequality and Relative Evaluation

Mar 17, 2022

Abstract: Research on the generalization ability of deep neural networks (DNNs) has recently attracted a great deal of attention. However, due to their complex architectures and large numbers of parameters, measuring the generalization ability of specific DNN models remains an open challenge. In this paper, we propose to use multiple factors to measure and rank the relative generalization of DNNs based on a new concept of confidence dimension (CD). Furthermore, we provide a feasible framework in our CD to theoretically calculate the upper bound of generalization based on the conventional Vapnik-Chervonenkis dimension (VC-dimension) and Hoeffding's inequality. Experimental results on image classification and object detection demonstrate that our CD can reflect the relative generalization ability of different DNNs. In addition to full-precision DNNs, we also analyze the generalization ability of binary neural networks (BNNs), which remains an unsolved problem. Our CD yields a consistent and reliable measure and ranking for both full-precision DNNs and BNNs on all the tasks.
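For context, the standard two-sided Hoeffding bound for a single fixed model with a loss bounded in [0, 1] says that, with probability at least 1 - delta, the gap between empirical and true risk is at most sqrt(ln(2/delta) / (2n)) over n independent samples. The sketch below only evaluates that textbook bound; it is not the paper's confidence dimension, and the function name `hoeffding_gap` is a hypothetical helper.

```python
import math


def hoeffding_gap(num_samples, delta):
    """Textbook Hoeffding bound on |empirical risk - true risk|.

    Holds with probability >= 1 - delta for ONE fixed model whose
    per-sample loss lies in [0, 1]; it does not cover model selection.
    """
    if num_samples <= 0:
        raise ValueError("num_samples must be positive")
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie strictly between 0 and 1")
    return math.sqrt(math.log(2.0 / delta) / (2.0 * num_samples))


if __name__ == "__main__":
    # The bound tightens as the evaluation set grows.
    for n in (1_000, 10_000, 100_000):
        print(n, round(hoeffding_gap(n, delta=0.05), 4))
```

Because the bound scales as 1/sqrt(n), quadrupling the number of samples halves the guaranteed gap.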

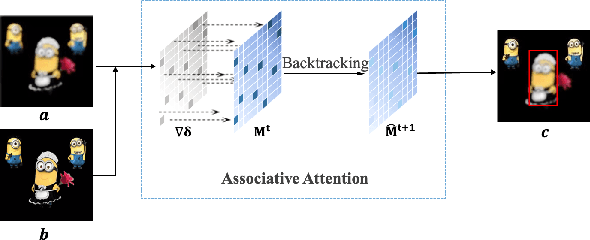

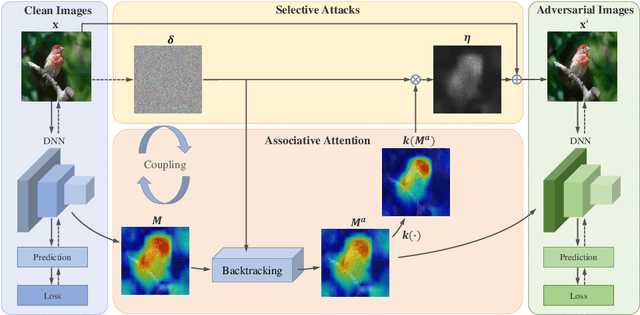

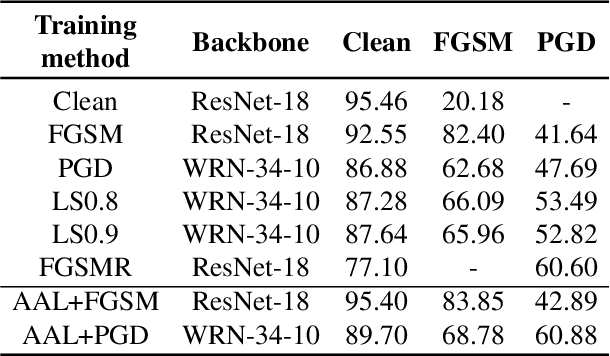

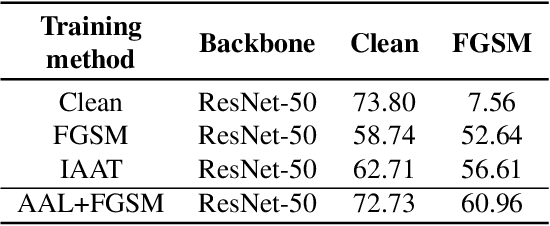

Associative Adversarial Learning Based on Selective Attack

Jan 04, 2022

Abstract: A human's attention can intuitively adapt to corrupted areas of an image by recalling a similar uncorrupted image they have previously seen. This observation motivates us to improve the attention of adversarial images by considering their clean counterparts. To accomplish this, we introduce Associative Adversarial Learning (AAL) into adversarial learning to guide a selective attack. We formulate the intrinsic relationship between attention and attack (perturbation) as a coupling optimization problem to improve their interaction. This leads to an attention backtracking algorithm that can effectively enhance the attention's adversarial robustness. Our method is generic and can be used to address a variety of tasks by simply choosing different kernels for the associative attention that select other regions for a specific attack. Experimental results show that the selective attack improves the model's performance. We show that our method improves the recognition accuracy of adversarial training on ImageNet by 8.32% compared with the baseline. It also increases object detection mAP on PascalVOC by 2.02% and recognition accuracy of few-shot learning on miniImageNet by 1.63%.

Learning Robot Swarm Tactics over Complex Adversarial Environments

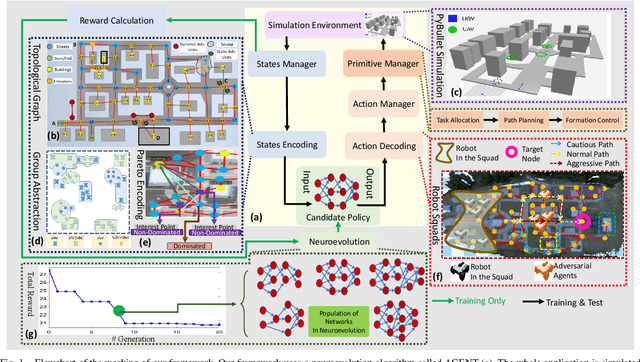

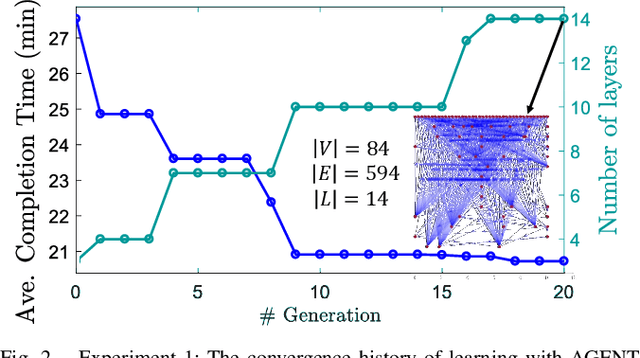

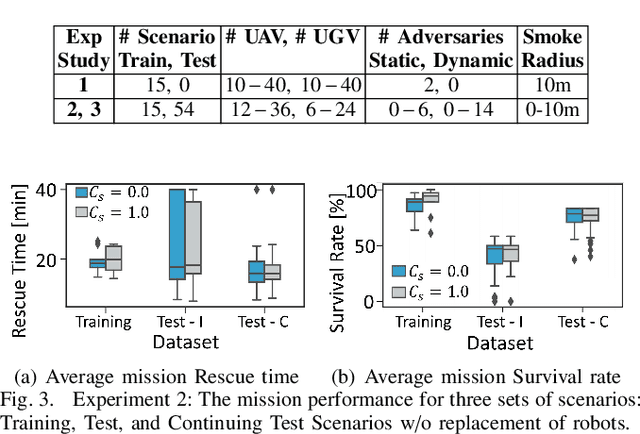

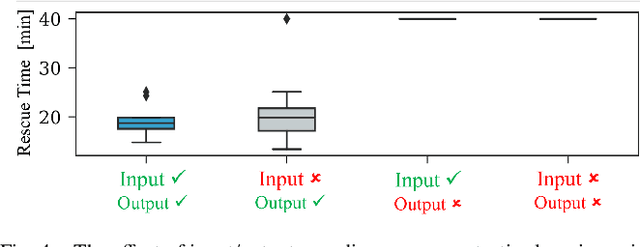

Sep 13, 2021

Abstract: To accomplish complex swarm robotic missions in the real world, one needs to plan and execute a combination of single robot behaviors, group primitives such as task allocation, path planning, and formation control, and mission-specific objectives such as target search and group coverage. Most such missions are designed manually by teams of robotics experts. Recent work in automated approaches to learning swarm behavior has been limited to individual primitives, with little work on learning complete missions. This paper presents a systematic approach to learning tactical mission-specific policies that compose primitives in a swarm to accomplish the mission efficiently, using neural networks with special input and output encoding. To learn swarm tactics in an adversarial environment, we employ a combination of 1) map-to-graph abstraction, 2) input/output encoding via Pareto filtering of points of interest and clustering of robots, and 3) learning via neuroevolution and policy gradient approaches. We show that this combination is critical to providing tractable learning, especially given the computational cost of simulating swarm missions of this scale and complexity. Successful mission completion outcomes are demonstrated with up to 60 robots. In addition, a close match in the performance statistics in training and testing scenarios shows the potential generalizability of the proposed framework.
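Pareto filtering of points of interest can be illustrated with a minimal sketch: keep only the candidates that no other candidate beats on every objective. The abstract does not say which objectives are used, so the two-score tuples below, the maximize-everything convention, and the function name `pareto_filter` are assumptions for illustration only.

```python
def pareto_filter(points):
    """Return the non-dominated points, assuming every objective is maximized.

    points: list of equal-length tuples of objective scores (hypothetical).
    """
    def dominates(a, b):
        # a dominates b if it is at least as good everywhere and better somewhere.
        at_least_as_good = all(x >= y for x, y in zip(a, b))
        strictly_better = any(x > y for x, y in zip(a, b))
        return at_least_as_good and strictly_better

    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    # Hypothetical (objective_1, objective_2) scores for five map locations.
    candidates = [(1, 5), (2, 4), (3, 3), (2, 2), (1, 1)]
    print(pareto_filter(candidates))
```

Filtering this way shrinks the set of locations a policy has to consider, which is one plausible reason such an encoding helps keep learning tractable.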

Semantic Text-to-Face GAN -ST^2FG

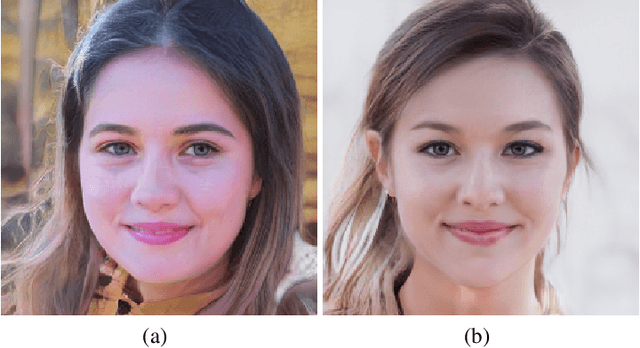

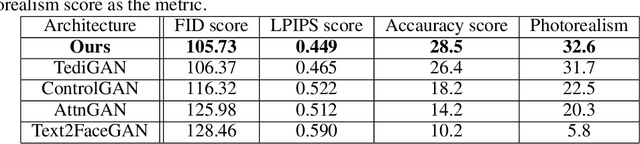

Jul 22, 2021

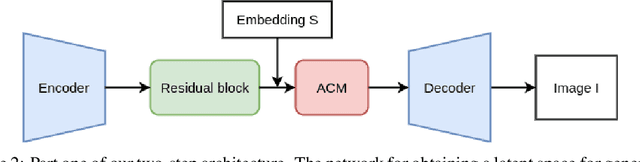

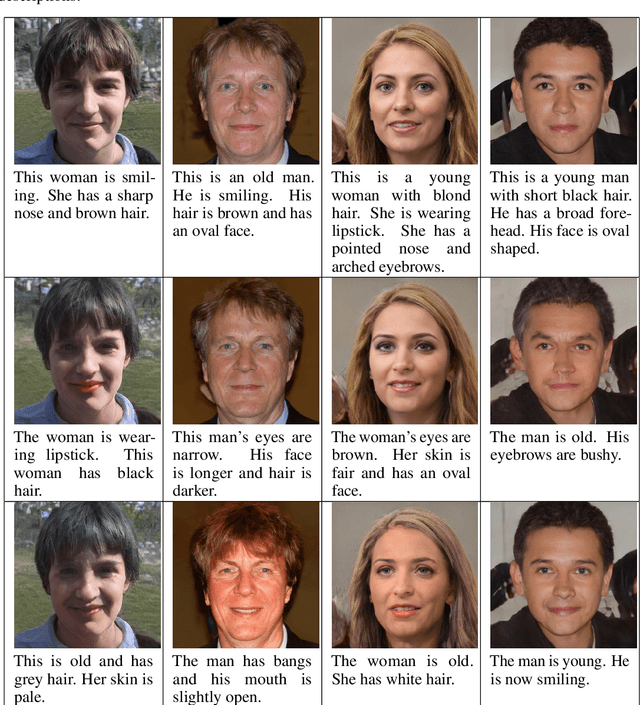

Abstract: Faces generated using generative adversarial networks (GANs) have reached unprecedented realism. These faces, also known as "Deep Fakes", appear as realistic photographs with very little pixel-level distortion. While some work has enabled the training of models that generate specific properties of the subject, generating a facial image based on a natural language description has not been fully explored. For security and criminal identification, a GAN-based system that works like a sketch artist would be incredibly useful. In this paper, we present a novel approach to generating facial images from semantic text descriptions. The learned model is provided with a text description and an outline of the type of face, which the model uses to sketch the features. Our models are trained using an Affine Combination Module (ACM) mechanism to combine the text embedding from BERT and the GAN latent space using a self-attention matrix. This avoids the loss of features due to inadequate "attention", which may happen if the text embedding and latent vector are simply concatenated. Our approach is capable of generating images that are very accurately aligned to exhaustive textual descriptions of faces with many fine-detail features. The proposed method is also capable of making incremental changes to a previously generated image if it is provided with additional textual descriptions or sentences.
