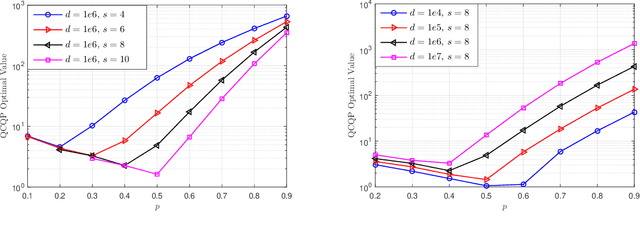

Minimax Optimal Quantile and Semi-Adversarial Regret via Root-Logarithmic Regularizers

Nov 07, 2021 · Jeffrey Negrea, Blair Bilodeau, Nicolò Campolongo, Francesco Orabona, Daniel M. Roy

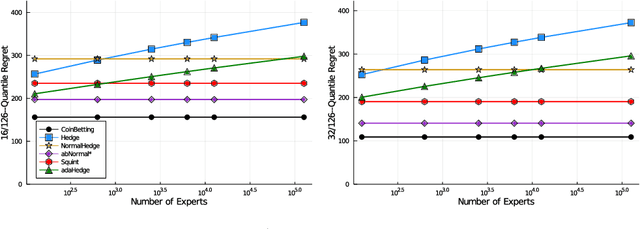

Quantile (and, more generally, KL) regret bounds, such as those achieved by NormalHedge (Chaudhuri, Freund, and Hsu 2009) and its variants, relax the goal of competing against the best individual expert to only competing against a majority of experts on adversarial data. More recently, the semi-adversarial paradigm (Bilodeau, Negrea, and Roy 2020) provides an alternative relaxation of adversarial online learning by considering data that may be neither fully adversarial nor stochastic (i.i.d.). We achieve the minimax optimal regret in both paradigms using FTRL with separate, novel, root-logarithmic regularizers, both of which can be interpreted as yielding variants of NormalHedge. We extend existing KL regret upper bounds, which hold uniformly over target distributions, to possibly uncountable expert classes with arbitrary priors; provide the first full-information lower bounds for quantile regret on finite expert classes (which are tight); and provide an adaptively minimax optimal algorithm for the semi-adversarial paradigm that adapts to the true, unknown constraint faster, leading to uniformly improved regret bounds over existing methods.

* 30 pages, 2 figures. Jeffrey Negrea and Blair Bilodeau are equal-contribution authors. Updated citations

The Future is Log-Gaussian: ResNets and Their Infinite-Depth-and-Width Limit at Initialization

Jun 07, 2021 · Mufan Bill Li, Mihai Nica, Daniel M. Roy

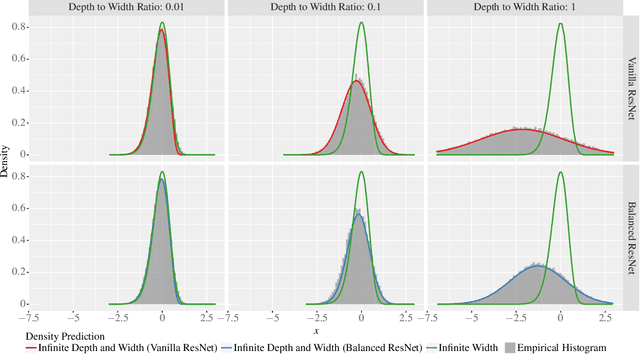

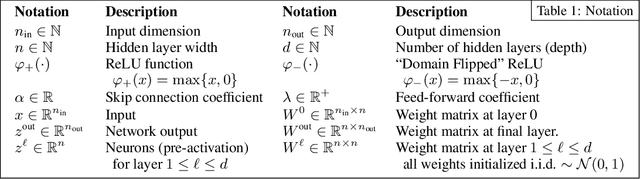

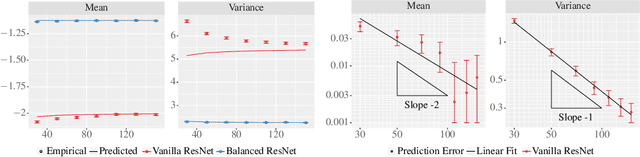

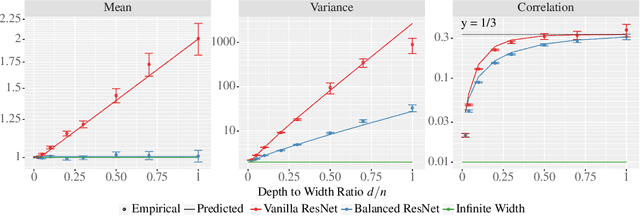

Theoretical results show that neural networks can be approximated by Gaussian processes in the infinite-width limit. However, for fully connected networks, it has been previously shown that for any fixed network width, $n$, the Gaussian approximation gets worse as the network depth, $d$, increases. Given that modern networks are deep, this raises the question of how well modern architectures, like ResNets, are captured by the infinite-width limit. To provide a better approximation, we study ReLU ResNets in the infinite-depth-and-width limit, where both depth and width tend to infinity as their ratio, $d/n$, remains constant. In contrast to the Gaussian infinite-width limit, we show theoretically that the network exhibits log-Gaussian behaviour at initialization in the infinite-depth-and-width limit, with parameters depending on the ratio $d/n$. Using Monte Carlo simulations, we demonstrate that even basic properties of standard ResNet architectures are poorly captured by the Gaussian limit, but remarkably well captured by our log-Gaussian limit. Moreover, our analysis reveals that ReLU ResNets at initialization are hypoactivated: fewer than half of the ReLUs are activated. Additionally, we calculate the interlayer correlations, which have the effect of exponentially increasing the variance of the network output. Based on our analysis, we introduce Balanced ResNets, a simple architecture modification, which eliminates hypoactivation and interlayer correlations and is more amenable to theoretical analysis.

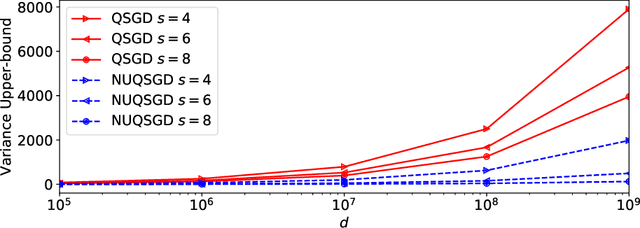

NUQSGD: Provably Communication-efficient Data-parallel SGD via Nonuniform Quantization

May 01, 2021 · Ali Ramezani-Kebrya, Fartash Faghri, Ilya Markov, Vitalii Aksenov, Dan Alistarh, Daniel M. Roy

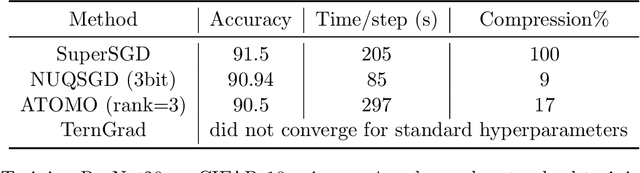

As the size and complexity of models and datasets grow, so does the need for communication-efficient variants of stochastic gradient descent that can be deployed to perform parallel model training. One popular communication-compression method for data-parallel SGD is QSGD (Alistarh et al., 2017), which quantizes and encodes gradients to reduce communication costs. The baseline variant of QSGD provides strong theoretical guarantees; for practical purposes, however, the authors proposed a heuristic variant, which we call QSGDinf, that demonstrated impressive empirical gains for distributed training of large neural networks. In this paper, we build on this work to propose a new gradient quantization scheme, and show that it both has stronger theoretical guarantees than QSGD and matches or exceeds the empirical performance of the QSGDinf heuristic and of other compression methods.
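To make the idea of gradient quantization concrete, here is a minimal sketch of unbiased stochastic quantization of a gradient vector against a fixed set of levels. It is an illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the exponentially spaced level set is assumed here as one possible nonuniform choice, and the encoding step that follows quantization in a real system is omitted.

```python
import bisect
import math
import random


def uniform_levels(s):
    """s + 1 evenly spaced levels in [0, 1] (uniform scheme)."""
    return [j / s for j in range(s + 1)]


def exponential_levels(s):
    """Levels 0, 2^-s, ..., 1/2, 1: denser near zero (assumed nonuniform scheme)."""
    return [0.0] + [2.0 ** (-j) for j in range(s, -1, -1)]


def quantize(v, levels, rng):
    """Return (norm, coords), where each coord is a signed level.

    Each normalized magnitude |v_i| / ||v||_2 is rounded at random to one of
    its two neighbouring levels, with probabilities chosen so that the
    expected value equals the original magnitude (unbiasedness).
    """
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        return 0.0, [0.0] * len(v)
    coords = []
    for x in v:
        r = min(abs(x) / norm, 1.0)
        hi = bisect.bisect_left(levels, r)  # first level >= r
        if levels[hi] == r:
            level = r  # already on a level, no rounding needed
        else:
            lo_val, hi_val = levels[hi - 1], levels[hi]
            p_up = (r - lo_val) / (hi_val - lo_val)
            level = hi_val if rng.random() < p_up else lo_val
        coords.append(math.copysign(level, x))
    return norm, coords


def dequantize(norm, coords):
    """Rescale the signed levels back to gradient units."""
    return [norm * c for c in coords]


rng = random.Random(0)
v = [3.0, -4.0, 0.0]
levels = exponential_levels(3)
norm, coords = quantize(v, levels, rng)

# Average many independent quantizations to check unbiasedness empirically.
n_draws = 5000
mean = [0.0] * len(v)
for _ in range(n_draws):
    q = dequantize(*quantize(v, levels, rng))
    mean = [m + qi / n_draws for m, qi in zip(mean, q)]
```

Only the norm and, per coordinate, a sign and a level index need to be communicated, which is where the savings come from. Passing `uniform_levels(s)` instead of `exponential_levels(s)` gives a uniform scheme for comparison.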

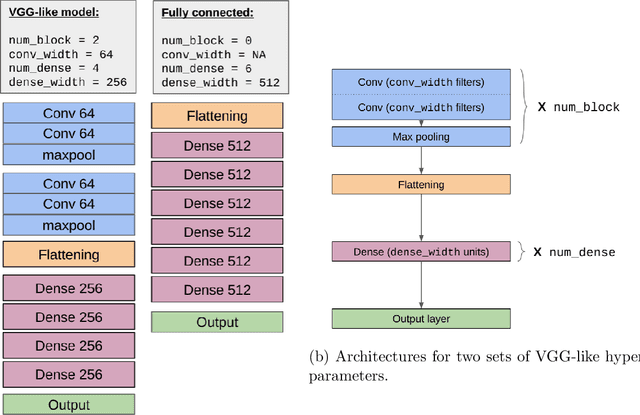

NeurIPS 2020 Competition: Predicting Generalization in Deep Learning

Dec 14, 2020 · Yiding Jiang, Pierre Foret, Scott Yak, Daniel M. Roy, Hossein Mobahi, Gintare Karolina Dziugaite, Samy Bengio, Suriya Gunasekar, Isabelle Guyon, Behnam Neyshabur

Understanding generalization is arguably one of the most important questions in deep learning. Deep learning has been successfully applied to a large number of problems ranging from pattern recognition to complex decision making, but researchers have recently raised many concerns about it, the most important of which is generalization. Despite numerous attempts, conventional statistical learning approaches have not yet been able to provide a satisfactory explanation of why deep learning works. A recent line of work aims to address the problem by trying to predict generalization performance through complexity measures. In this competition, we invite the community to propose complexity measures that can accurately predict the generalization of models. A robust and general complexity measure could lead to a better understanding of deep learning's underlying mechanism and of the behavior of deep models on unseen data, or shed light on better generalization bounds. All these outcomes will be important for making deep learning more robust and reliable.

On the Information Complexity of Proper Learners for VC Classes in the Realizable Case

Nov 05, 2020 · Mahdi Haghifam, Gintare Karolina Dziugaite, Shay Moran, Daniel M. Roy

We provide a negative resolution to a conjecture of Steinke and Zakynthinou (2020a), by showing that their bound on the conditional mutual information (CMI) of proper learners of Vapnik–Chervonenkis (VC) classes cannot be improved from $d \log n + 2$ to $O(d)$, where $n$ is the number of i.i.d. training examples. In fact, we exhibit VC classes for which the CMI of any proper learner cannot be bounded by any real-valued function of the VC dimension only.

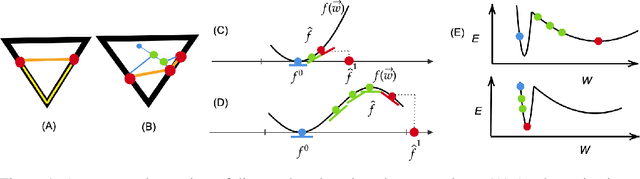

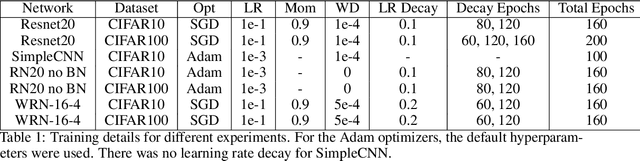

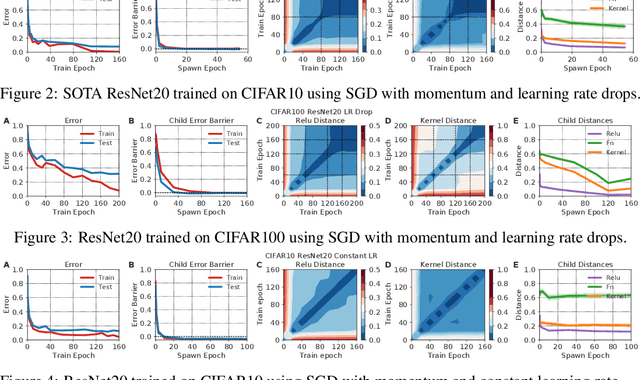

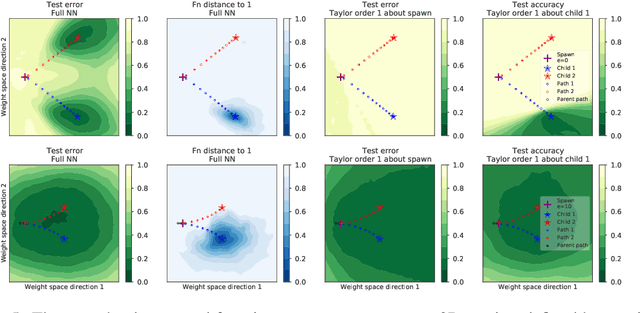

Deep learning versus kernel learning: an empirical study of loss landscape geometry and the time evolution of the Neural Tangent Kernel

Oct 28, 2020 · Stanislav Fort, Gintare Karolina Dziugaite, Mansheej Paul, Sepideh Kharaghani, Daniel M. Roy, Surya Ganguli

In suitably initialized wide networks, small learning rates transform deep neural networks (DNNs) into neural tangent kernel (NTK) machines, whose training dynamics are well approximated by a linear weight expansion of the network at initialization. Standard training, however, diverges from its linearization in ways that are poorly understood. We study the relationship between the training dynamics of nonlinear deep networks, the geometry of the loss landscape, and the time evolution of a data-dependent NTK. We do so through a large-scale phenomenological analysis of training, synthesizing diverse measures characterizing loss landscape geometry and NTK dynamics. In multiple neural architectures and datasets, we find these diverse measures evolve in a highly correlated manner, revealing a universal picture of the deep learning process. In this picture, deep network training exhibits a highly chaotic rapid initial transient that within 2 to 3 epochs determines the final linearly connected basin of low loss containing the end point of training. During this chaotic transient, the NTK changes rapidly, learning useful features from the training data that enable it to outperform the standard initial NTK by a factor of 3 in less than 3 to 4 epochs. After this rapid chaotic transient, the NTK changes at constant velocity, and its performance matches that of full network training in 15% to 45% of training time. Overall, our analysis reveals a striking correlation between a diverse set of metrics over training time, governed by a rapid chaotic-to-stable transition in the first few epochs, that together pose challenges and opportunities for the development of more accurate theories of deep learning.

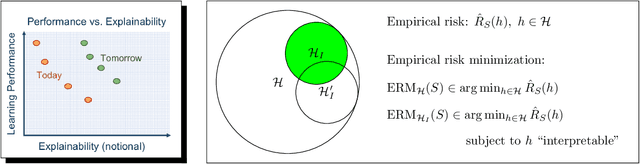

Enforcing Interpretability and its Statistical Impacts: Trade-offs between Accuracy and Interpretability

Oct 28, 2020 · Gintare Karolina Dziugaite, Shai Ben-David, Daniel M. Roy

To date, there has been no formal study of the statistical cost of interpretability in machine learning. As such, the discourse around potential trade-offs is often informal and misconceptions abound. In this work, we aim to initiate a formal study of these trade-offs. A seemingly insurmountable roadblock is the lack of any agreed-upon definition of interpretability. Instead, we propose a shift in perspective. Rather than attempt to define interpretability, we propose to model the *act* of *enforcing* interpretability. As a starting point, we focus on the setting of empirical risk minimization for binary classification, and view interpretability as a constraint placed on learning. That is, we assume we are given a subset of hypotheses that are deemed to be interpretable, possibly depending on the data distribution and other aspects of the context. We then model the act of enforcing interpretability as that of performing empirical risk minimization over the set of interpretable hypotheses. This model allows us to reason about the statistical implications of enforcing interpretability, using known results in statistical learning theory. Focusing on accuracy, we perform a case analysis, explaining why one may or may not observe a trade-off between accuracy and interpretability when the restriction to interpretable classifiers does or does not come at the cost of some excess statistical risk. We close with some worked examples and some open problems, which we hope will spur further theoretical development around the trade-offs involved in interpretability.
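The modelling step described above, enforcing interpretability as empirical risk minimization over a restricted hypothesis set, can be sketched on a toy problem. Everything concrete here is an assumption made for illustration: the four-point dataset, the hypothesis names, and the choice of which hypotheses count as "interpretable" are hypothetical and do not come from the paper.

```python
def empirical_risk(h, data):
    """Fraction of labelled examples (x, y) that hypothesis h misclassifies."""
    return sum(1 for x, y in data if h(x) != y) / len(data)


def erm(hypotheses, data):
    """Return the (name, hypothesis) pair with the smallest empirical risk."""
    return min(hypotheses.items(), key=lambda kv: empirical_risk(kv[1], data))


# Toy binary classification data: the label is the AND of two binary features.
data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

# Hypothetical full hypothesis class.
full_class = {
    "always_0": lambda x: 0,
    "x0": lambda x: x[0],
    "x1": lambda x: x[1],
    "x0_and_x1": lambda x: x[0] & x[1],
}

# Hypothetical "interpretable" subset: rules that look at no more than one feature.
interpretable = {k: full_class[k] for k in ("always_0", "x0", "x1")}

name_full, h_full = erm(full_class, data)
name_interp, h_interp = erm(interpretable, data)
risk_full = empirical_risk(h_full, data)
risk_interp = empirical_risk(h_interp, data)
```

In this toy case the restriction costs accuracy, because the only hypothesis that fits the data lies outside the interpretable subset. If the subset had contained `x0_and_x1`, both risks would coincide, which is the other branch of the case analysis.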

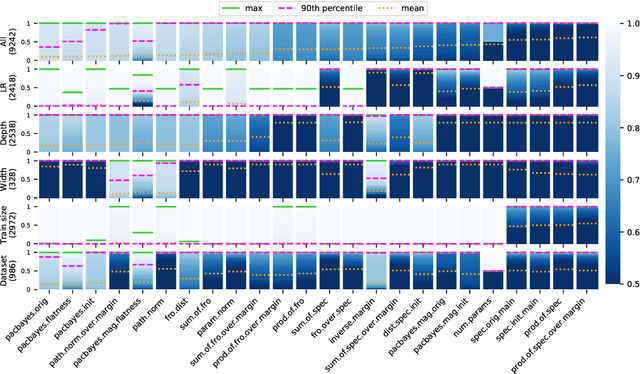

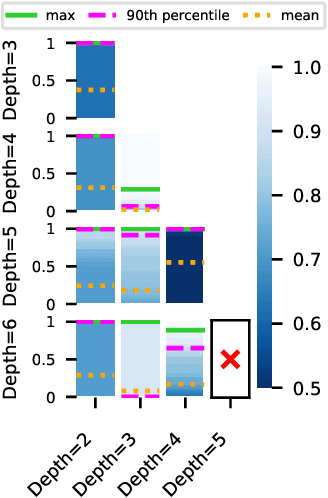

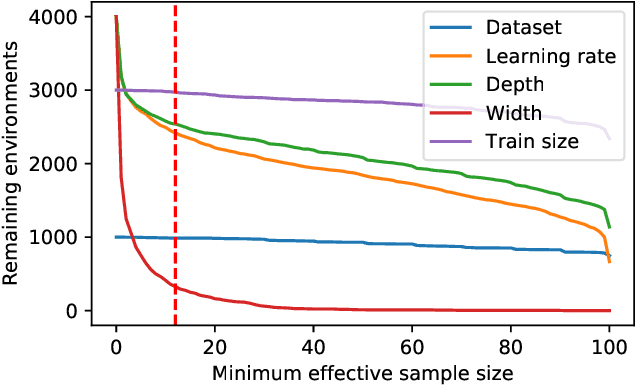

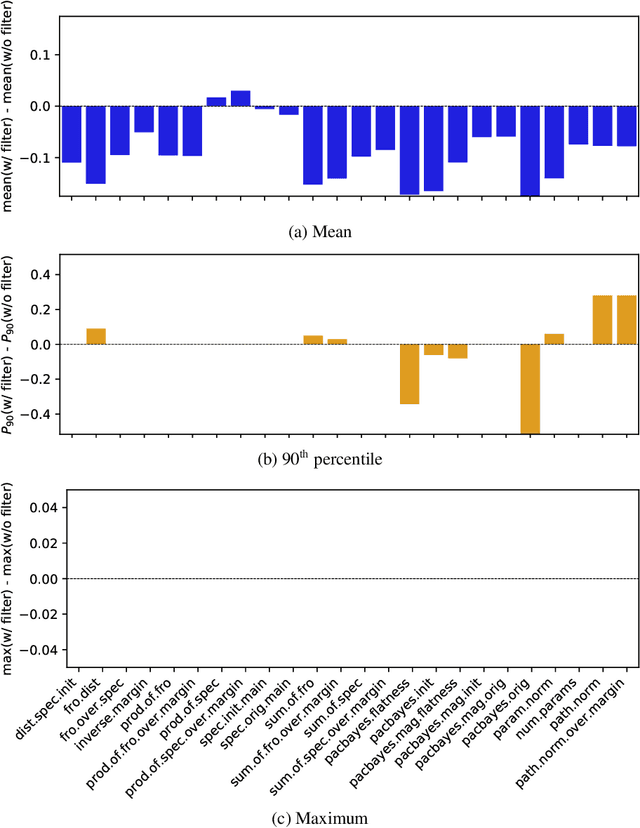

In Search of Robust Measures of Generalization

Oct 22, 2020 · Gintare Karolina Dziugaite, Alexandre Drouin, Brady Neal, Nitarshan Rajkumar, Ethan Caballero, Linbo Wang, Ioannis Mitliagkas, Daniel M. Roy

One of the principal scientific challenges in deep learning is explaining generalization, i.e., why the particular way the community now trains networks to achieve small training error also leads to small error on held-out data from the same population. It is widely appreciated that some worst-case theories, such as those based on the VC dimension of the class of predictors induced by modern neural network architectures, are unable to explain empirical performance. A large volume of work aims to close this gap, primarily by developing bounds on generalization error, optimization error, and excess risk. When evaluated empirically, however, most of these bounds are numerically vacuous. Focusing on generalization bounds, this work addresses the question of how to evaluate such bounds empirically. Jiang et al. (2020) recently described a large-scale empirical study aimed at uncovering potential causal relationships between bounds/measures and generalization. Building on their study, we highlight where their proposed methods can obscure failures and successes of generalization measures in explaining generalization. We argue that generalization measures should instead be evaluated within the framework of distributional robustness.

Pruning Neural Networks at Initialization: Why are We Missing the Mark?

Sep 18, 2020 · Jonathan Frankle, Gintare Karolina Dziugaite, Daniel M. Roy, Michael Carbin

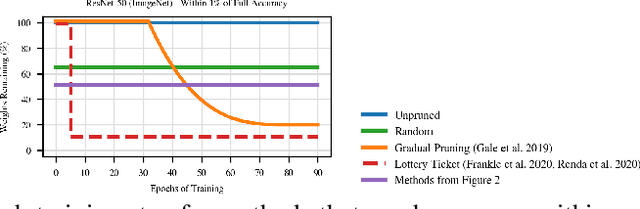

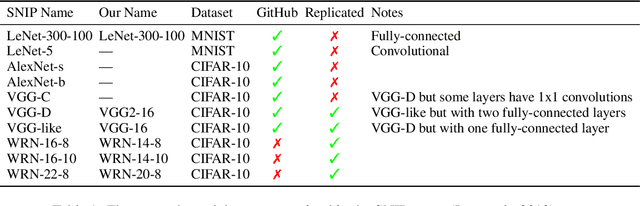

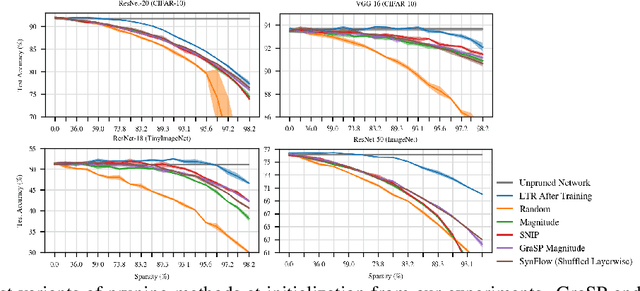

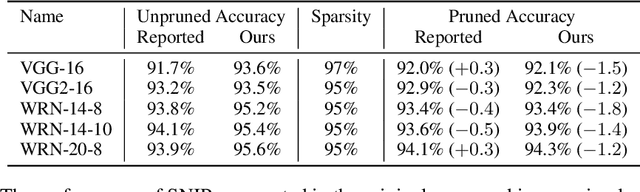

Recent work has explored the possibility of pruning neural networks at initialization. We assess proposals for doing so: SNIP (Lee et al., 2019), GraSP (Wang et al., 2020), SynFlow (Tanaka et al., 2020), and magnitude pruning. Although these methods surpass the trivial baseline of random pruning, they remain below the accuracy of magnitude pruning after training, and we endeavor to understand why. We show that, unlike with pruning after training, accuracy is the same or higher when we randomly shuffle which weights these methods prune within each layer or sample new initial values. As such, the per-weight pruning decisions made by these methods can be replaced by a per-layer choice of the fraction of weights to prune. This property undermines the claimed justifications for these methods and suggests broader challenges with the underlying pruning heuristics, the desire to prune at initialization, or both.
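The within-layer shuffling ablation can be sketched as follows. This is a minimal illustration rather than the paper's experimental code: the helper names and the toy weights are hypothetical, and plain magnitude scoring stands in for whichever pruning method produces the masks.

```python
import random


def magnitude_mask(weights, sparsity):
    """Binary mask for one layer: 0 marks a pruned weight, 1 a kept weight.

    The fraction `sparsity` of weights with the smallest magnitude is pruned.
    """
    n_prune = int(round(sparsity * len(weights)))
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    mask = [1] * len(weights)
    for i in order[:n_prune]:
        mask[i] = 0
    return mask


def shuffle_within_layer(masks, rng):
    """Randomly permute each layer's mask independently.

    The per-layer fraction of pruned weights is preserved, but which
    individual weights are pruned becomes random.
    """
    shuffled = []
    for mask in masks:
        m = list(mask)
        rng.shuffle(m)
        shuffled.append(m)
    return shuffled


# Two toy "layers" of flattened weights (hypothetical values).
layers = [
    [0.5, -0.1, 0.9, 0.05],
    [1.2, -0.3, 0.02, 0.7, -0.8, 0.1],
]
masks = [magnitude_mask(w, sparsity=0.5) for w in layers]
shuffled = shuffle_within_layer(masks, random.Random(0))

kept_before = [sum(m) for m in masks]
kept_after = [sum(m) for m in shuffled]
```

Because `kept_before` and `kept_after` agree layer by layer, any accuracy difference between training with `masks` and with `shuffled` isolates the contribution of the per-weight decisions, as opposed to the per-layer pruning fractions.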

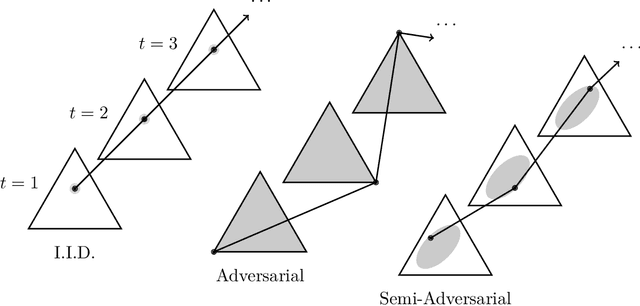

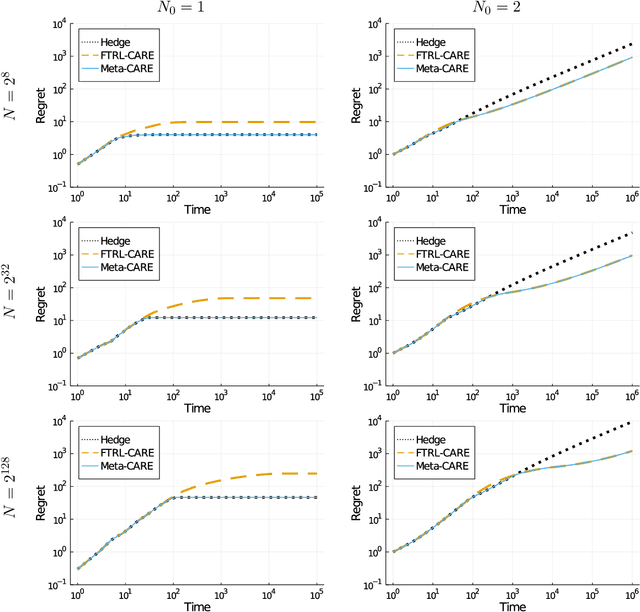

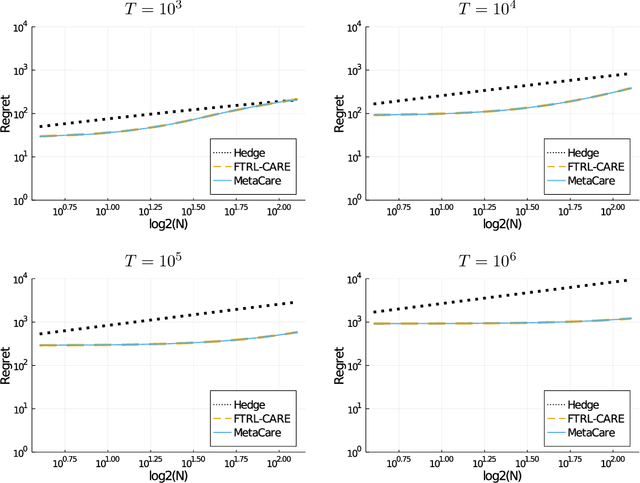

Relaxing the I.I.D. Assumption: Adaptive Minimax Optimal Sequential Prediction with Expert Advice

Jul 13, 2020 · Blair Bilodeau, Jeffrey Negrea, Daniel M. Roy

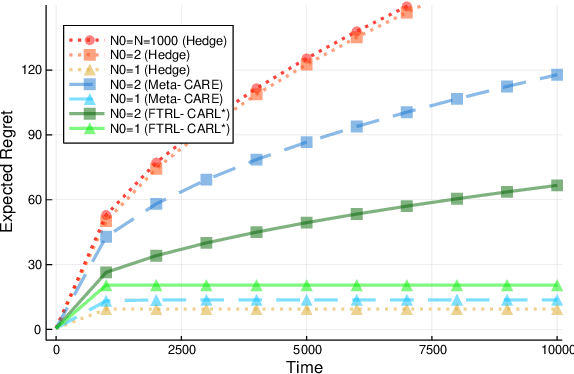

We consider sequential prediction with expert advice when the data are generated stochastically, but the distributions generating the data may vary arbitrarily within some constraint set. We quantify relaxations of the classical I.I.D. assumption in terms of possible constraint sets, with I.I.D. at one extreme, and an adversarial mechanism at the other. The Hedge algorithm, long known to be minimax optimal in the adversarial regime, has recently been shown to also be minimax optimal in the I.I.D. setting. We show that Hedge is suboptimal between these extremes, and present a new algorithm that is adaptively minimax optimal with respect to our relaxations of the I.I.D. assumption, without knowledge of which setting prevails.
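For readers unfamiliar with the baseline, here is a minimal sketch of the standard Hedge (exponential weights) algorithm with a fixed learning rate. It illustrates only the classical baseline, not the new adaptive algorithm from the paper; the loss sequence is an arbitrary deterministic toy, and tuning the learning rate to a known horizon $T$ is an assumption made for simplicity.

```python
import math


def hedge(loss_rounds, eta):
    """Run Hedge on a sequence of per-round expert loss vectors in [0, 1].

    Returns the learner's cumulative expected loss and the cumulative loss
    of each expert.
    """
    n = len(loss_rounds[0])
    cum = [0.0] * n  # cumulative loss of each expert so far
    learner_loss = 0.0
    for losses in loss_rounds:
        # Weights are proportional to exp(-eta * cumulative loss).
        # Subtracting the minimum avoids underflow and does not change them.
        m = min(cum)
        w = [math.exp(-eta * (c - m)) for c in cum]
        z = sum(w)
        w = [x / z for x in w]
        # The learner suffers the weighted average of the experts' losses.
        learner_loss += sum(wi * li for wi, li in zip(w, losses))
        cum = [c + l for c, l in zip(cum, losses)]
    return learner_loss, cum


T, N = 200, 4
# Arbitrary deterministic toy losses taking values in {0, 0.5, 1}.
rounds = [[((t * (i + 1)) % 3) / 2.0 for i in range(N)] for t in range(T)]

eta = math.sqrt(8 * math.log(N) / T)  # standard tuning for a known horizon T
learner_loss, cum = hedge(rounds, eta)
regret = learner_loss - min(cum)
bound = math.sqrt(T * math.log(N) / 2)  # classical worst-case regret bound
```

With this tuning, the regret against the best expert is at most $\sqrt{(T/2)\ln N}$ for any loss sequence in $[0, 1]$, which is the adversarial guarantee the abstract refers to.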
