Chenglong Fu

Design, Modelling, and Control of a Reconfigurable Rotary Series Elastic Actuator with Nonlinear Stiffness for Assistive Robots

May 28, 2022

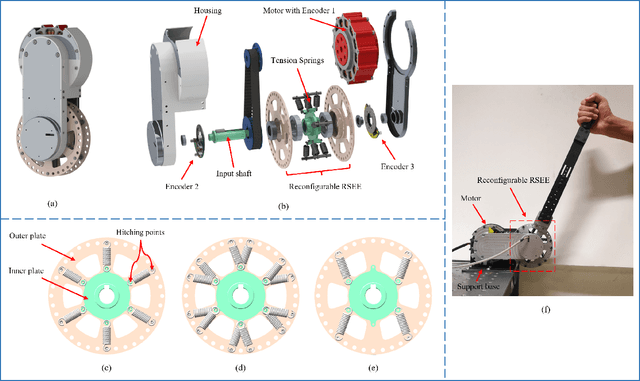

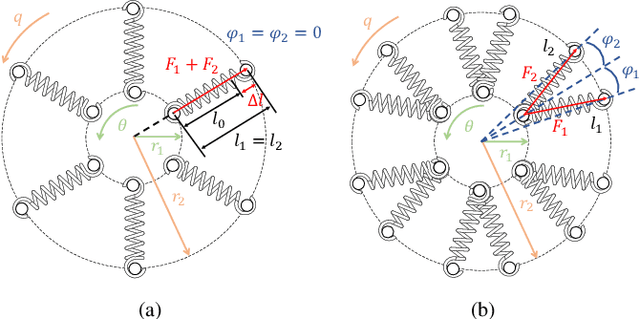

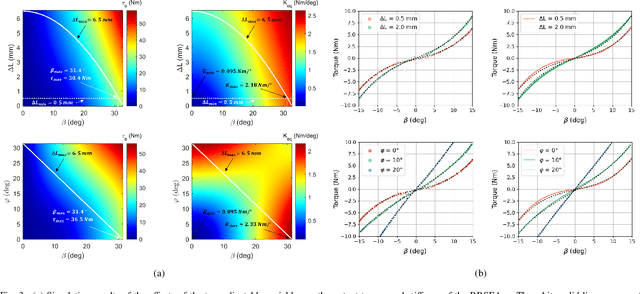

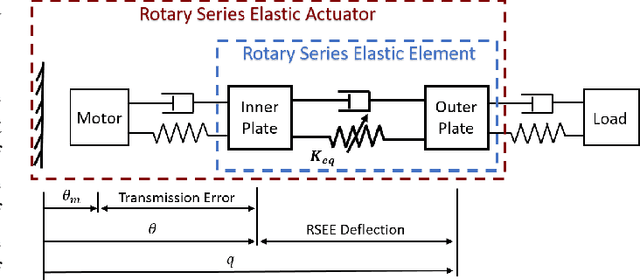

Abstract: In assistive robots, the compliant actuator is a key component in establishing safe and satisfactory physical human-robot interaction (pHRI). The performance of a compliant actuator largely depends on the stiffness of its elastic element. Generally, low stiffness is desirable to achieve low impedance, high-fidelity force control, and safe pHRI, while high stiffness is required to ensure sufficient force bandwidth and output force. These requirements, however, are contradictory and often vary with tasks and conditions. To resolve this conflict in stiffness selection and improve adaptability to different applications, we develop a reconfigurable rotary series elastic actuator with nonlinear stiffness (RRSEAns) for assistive robots. In this paper, an accurate model of the reconfigurable rotary series elastic element (RSEE) is presented and its adjustment principles are investigated, followed by detailed analysis and experimental validation. The RRSEAns provides a wide stiffness range, from 0.095 Nm/deg to 2.33 Nm/deg, and different stiffness profiles can be obtained from different configurations of the reconfigurable RSEE. The overall performance of the RRSEAns is verified by experiments on frequency response, torque control, and pHRI, and is adequate for most applications in assistive robots. Specifically, the root-mean-square (RMS) error of the interaction torque is as low as 0.07 Nm in transparent/human-in-charge mode, demonstrating the advantages of the RRSEAns in pHRI.
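In a series elastic actuator, the output torque is usually inferred from the deflection across the elastic element, and with nonlinear stiffness the local stiffness is the slope of the torque-deflection curve. The sketch below illustrates this under stated assumptions: the cubic profile `tau = K1*d + K3*d**3` is hypothetical, `K1` is simply set to the lower stiffness bound quoted above, and `K3` is an illustrative value rather than an RRSEAns parameter. It also converts the quoted stiffness bounds to Nm/rad and computes an RMS torque error of the kind reported above.

```python
import math
from typing import Sequence

# Hypothetical torque-deflection profile of a nonlinear elastic element:
#   tau(d) = K1 * d + K3 * d**3   (d in degrees, tau in Nm)
# K1 equals the minimum stiffness quoted in the abstract; K3 is an
# illustrative assumption, not a parameter of the RRSEAns.
K1 = 0.095   # Nm/deg
K3 = 0.001   # Nm/deg^3 (assumed)


def deflection_deg(theta_motor_deg: float, theta_out_deg: float) -> float:
    """Deflection across the elastic element (motor side minus output side)."""
    return theta_motor_deg - theta_out_deg


def spring_torque(d_deg: float) -> float:
    """Output torque inferred from the deflection via the assumed profile."""
    return K1 * d_deg + K3 * d_deg ** 3


def local_stiffness(d_deg: float) -> float:
    """Slope d(tau)/d(d) of the assumed profile, in Nm/deg."""
    return K1 + 3.0 * K3 * d_deg ** 2


def nm_per_deg_to_nm_per_rad(k: float) -> float:
    """Convert a rotational stiffness from Nm/deg to Nm/rad."""
    return k * 180.0 / math.pi


def rms(errors: Sequence[float]) -> float:
    """Root-mean-square of a sequence of torque errors (Nm)."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


if __name__ == "__main__":
    d = deflection_deg(12.0, 2.0)  # 10 deg of deflection
    print(f"torque at {d} deg: {spring_torque(d):.3f} Nm")
    print(f"local stiffness: {local_stiffness(d):.3f} Nm/deg")
    for k in (0.095, 2.33):
        print(f"{k} Nm/deg = {nm_per_deg_to_nm_per_rad(k):.2f} Nm/rad")
```

For reference, the quoted range of 0.095 to 2.33 Nm/deg corresponds to roughly 5.44 to 133.5 Nm/rad, a ratio of about 24.5 between the stiffest and most compliant settings.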

Ensemble diverse hypotheses and knowledge distillation for unsupervised cross-subject adaptation

Apr 15, 2022

Abstract: Recognizing human locomotion intent and activities is important for controlling wearable robots during walking in complex environments. However, human-robot interface signals are usually user-dependent, so a classifier trained on source subjects performs poorly on new subjects. To address this issue, this paper designs the ensemble diverse hypotheses and knowledge distillation (EDHKD) method for unsupervised cross-subject adaptation. EDH mitigates the divergence between labeled data from source subjects and unlabeled data from target subjects, so that the locomotion modes of target subjects can be classified accurately without labeling their data. Compared to previous domain adaptation methods based on a single learner, which may learn only a subset of the features in the input signals, EDH learns diverse features by incorporating multiple diverse feature generators; this increases the accuracy and decreases the variance of classifying target data, but at the cost of efficiency. To solve this problem, EDHKD (student) distills the knowledge from EDH (teacher) into a single network that remains both efficient and accurate. The performance of EDHKD is proved theoretically and validated experimentally on a 2D moon dataset and two public human locomotion datasets. Experimental results show that EDHKD outperforms all other compared methods, classifying target data with 96.9%, 94.4%, and 97.4% average accuracy on the three datasets, respectively, with a short computing time (1 ms). Compared to a benchmark (BM) method, EDHKD increases the average accuracy of classifying the locomotion modes of target subjects by 1.3% and 7.1%. EDHKD also stabilizes the learning curves. EDHKD therefore improves the generalization ability and efficiency of human intent prediction and human activity recognition systems, which can improve human-robot interaction.
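The teacher-to-student step can be illustrated with standard temperature-scaled knowledge distillation, where the teacher's soft targets are the averaged probabilities of the ensemble members. This is a minimal sketch of that generic technique, not the exact EDHKD objective, which the abstract does not spell out and which also involves domain-adaptation terms; the temperature value and array shapes are assumptions.

```python
from typing import Sequence

import numpy as np


def softmax(logits: np.ndarray, temperature: float = 1.0) -> np.ndarray:
    """Temperature-scaled softmax over the last axis."""
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def ensemble_teacher_probs(member_logits: Sequence[np.ndarray], temperature: float) -> np.ndarray:
    """Average the softened class probabilities of all teacher members."""
    return np.mean([softmax(logits, temperature) for logits in member_logits], axis=0)


def distillation_loss(student_logits: np.ndarray,
                      member_logits: Sequence[np.ndarray],
                      temperature: float = 2.0) -> float:
    """Mean KL(teacher || student) on softened outputs, scaled by T**2."""
    p_teacher = ensemble_teacher_probs(member_logits, temperature)
    p_student = softmax(student_logits, temperature)
    eps = 1e-12  # guards against log(0)
    kl = np.sum(p_teacher * (np.log(p_teacher + eps) - np.log(p_student + eps)), axis=-1)
    return float(np.mean(kl) * temperature ** 2)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    teachers = [rng.normal(size=(4, 5)) for _ in range(3)]  # 3 members, batch 4, 5 classes
    student = rng.normal(size=(4, 5))
    print(distillation_loss(student, teachers))
```

Only the single student network is evaluated at inference time, which is why distillation can keep most of the ensemble's accuracy while reducing computation.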

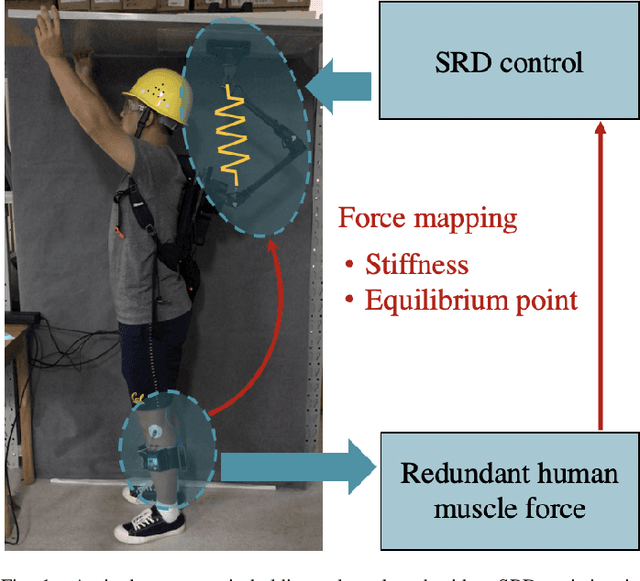

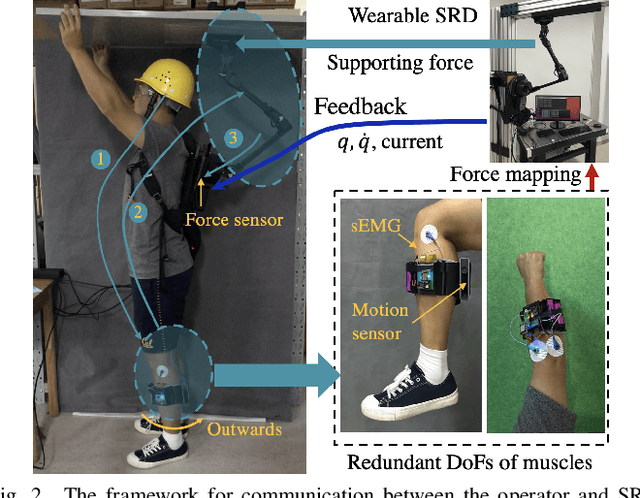

Mapping Human Muscle Force to Supernumerary Robotics Device for Overhead Task Assistance

Jul 29, 2021

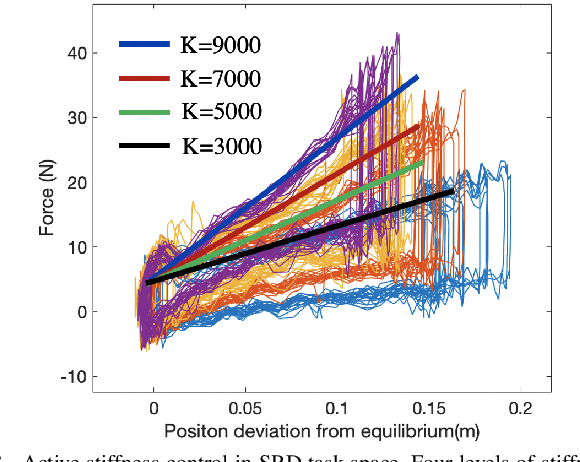

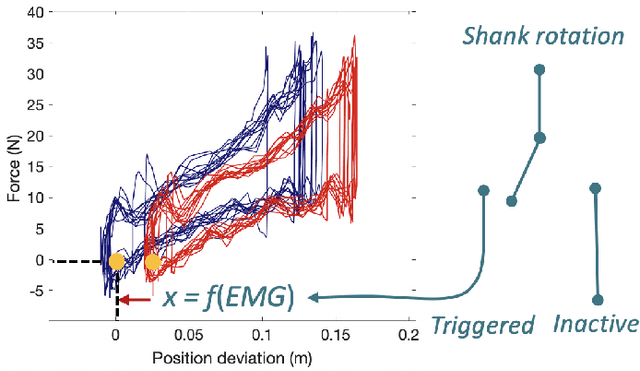

Abstract: A Supernumerary Robotics Device (SRD) is an ideal solution for providing robotic assistance in overhead manual manipulation. Because both arms are occupied by the overhead task, additional arms are desirable to assist with other subtasks, such as supporting the far end of a long plate and pushing it upward to fit it into the ceiling. In this study, we propose a method that maps human muscle force to an SRD for overhead task assistance. Our approach uses redundant degrees of freedom (DoFs), such as idle leg muscles, to control the supporting force of the SRD. An sEMG device is worn on the operator's shank, where muscle signals are measured, parsed, and transmitted to the SRD for control. For control, we adopt stiffness control in the task space based on torque control at the joint level. We are motivated by the fact that humans achieve daily manipulation through the simple, inherent compliance of muscle-driven joints. We estimate the force of specific human muscles and control the SRD to imitate muscle behavior and output supporting forces for subtasks such as overhead supporting. The sEMG signals detected from human muscles are extracted, filtered, rectified, and parsed to estimate muscle force, and this force information is used as the operator's intent for the appropriate overhead supporting force. As a well-known compliance control method, stiffness control is easy to implement with a few straightforward parameters, namely stiffness and equilibrium point. By tuning the stiffness and equilibrium point, the supporting force of the SRD in task space can be controlled easily. The muscle force estimated from sEMG is mapped to the desired force in the task space of the SRD, and the desired force is then converted into a stiffness or an equilibrium point that produces the corresponding supporting force.
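The sEMG-to-force chain and the stiffness law can be sketched in one dimension. This is an illustrative outline under several assumptions, not the authors' implementation: DC removal plus a moving average stands in for the band-pass and low-pass filters usually applied to sEMG, activation is normalized by a hypothetical maximum-voluntary-contraction envelope `mvc_envelope`, the activation-to-force map is a hypothetical linear one with gain `f_max`, and the task-space law is `F = k * (x_eq - x)` mapped to joints by `tau = J^T F`.

```python
import numpy as np


def emg_envelope(raw: np.ndarray, fs: float, window_s: float = 0.1) -> np.ndarray:
    """Crude sEMG envelope: remove DC offset, full-wave rectify, moving-average smooth.

    The moving average stands in for the band-pass/low-pass filters usually used.
    """
    centered = raw - np.mean(raw)
    rectified = np.abs(centered)
    n = max(1, int(round(window_s * fs)))
    return np.convolve(rectified, np.ones(n) / n, mode="same")


def desired_force(envelope: float, mvc_envelope: float, f_max: float) -> float:
    """Hypothetical linear map from normalized activation (0..1) to supporting force (N)."""
    activation = min(max(envelope / mvc_envelope, 0.0), 1.0)
    return f_max * activation


def equilibrium_for_force(f_des: float, x: float, k: float) -> float:
    """Fixed stiffness k: place the equilibrium so that k * (x_eq - x) = f_des."""
    return x + f_des / k


def stiffness_for_force(f_des: float, x: float, x_eq: float) -> float:
    """Fixed equilibrium x_eq: choose k so that k * (x_eq - x) = f_des."""
    dx = x_eq - x
    if dx <= 0.0:
        raise ValueError("x_eq must lie beyond x in the pushing direction")
    return f_des / dx


def joint_torques(jacobian: np.ndarray, task_force: np.ndarray) -> np.ndarray:
    """Map a task-space force to joint torques via tau = J^T F."""
    return jacobian.T @ task_force
```

The two helpers `equilibrium_for_force` and `stiffness_for_force` correspond to the two options described above: hold one of the stiffness parameters fixed and solve the linear spring law for the other.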

How does the structure embedded in learning policy affect learning quadruped locomotion?

Aug 29, 2020

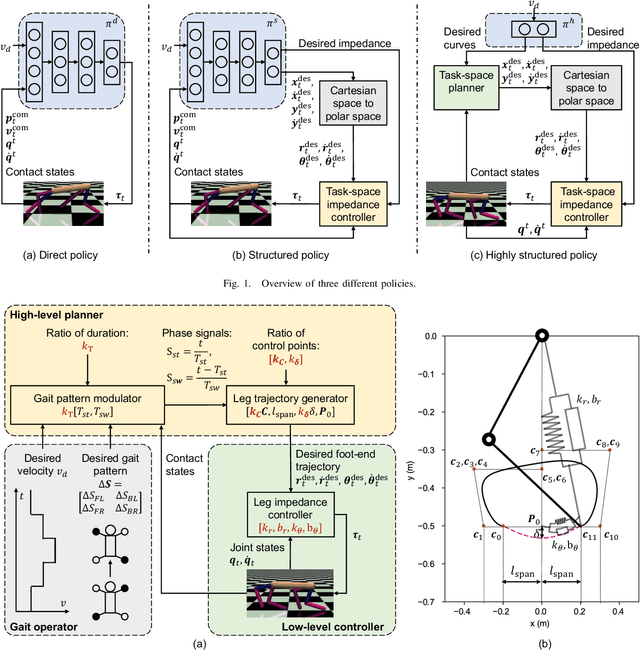

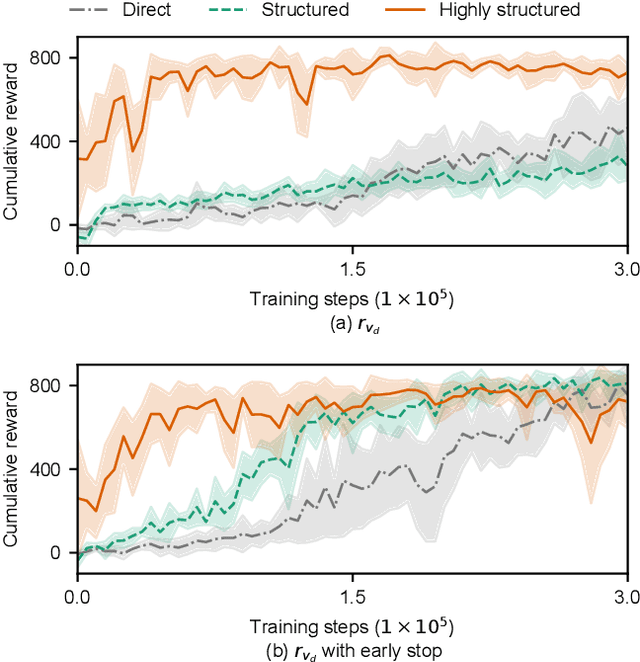

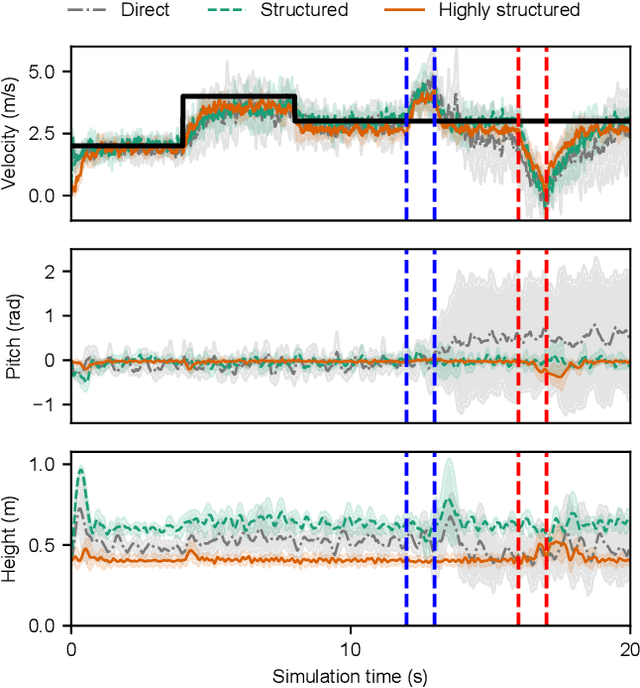

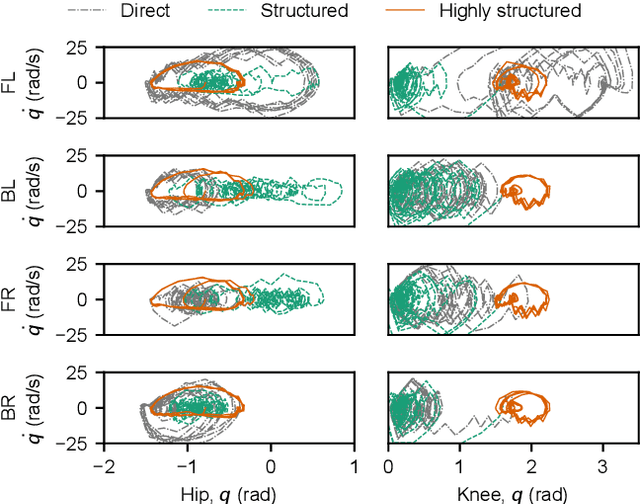

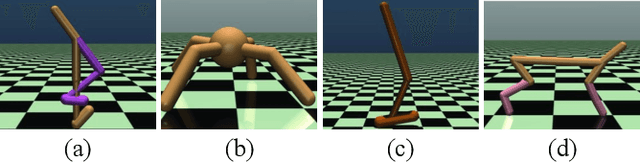

Abstract: Reinforcement learning (RL) is a popular data-driven method that has demonstrated great success in robotics. Previous works usually focus on learning an end-to-end (direct) policy that outputs joint torques directly. While the direct policy seems convenient, its resulting performance may not meet expectations. To improve performance, more sophisticated reward functions or more structured policies can be used. This paper focuses on the latter, because a structured policy is more intuitive and can inherit insights from previous model-based controllers. It is unsurprising that structure, such as a better choice of action space and constraints on the motion trajectory, may benefit the training process and the final performance of the policy at the cost of generality, but its quantitative effect is still unclear. To analyze the effect of structure quantitatively, this paper investigates three policies with different levels of structure in learning quadruped locomotion: a direct policy, a structured policy, and a highly structured policy. The structured policy is trained to learn a task-space impedance controller, and the highly structured policy learns a controller tailored for trot running, adopted from previous work. To evaluate the trained policies, we design a simulation experiment that tracks different desired velocities under force disturbances. Simulation results show that the structured policy and the highly structured policy require 1/3 and 3/4 fewer training steps, respectively, than the direct policy to achieve a similar level of cumulative reward, and appear more robust and efficient than the direct policy. We highlight that the structure embedded in a policy significantly affects the overall performance of learning a complicated task involving complex dynamics, such as legged locomotion.
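A task-space impedance controller turns a desired foot position and velocity into joint torques through `tau = J^T [K (p_des - p) + D (v_des - v)]`. The sketch below shows this for a single planar two-link leg; the link lengths are hypothetical, gravity and leg dynamics are ignored, and which quantities a structured policy would output (for example `p_des` alone, or `p_des` plus the gains) is an assumption rather than the paper's specification.

```python
import numpy as np

L1, L2 = 0.2, 0.2  # hypothetical thigh and shank lengths (m)


def foot_position(q: np.ndarray) -> np.ndarray:
    """Planar two-link forward kinematics: hip -> foot position in the leg frame."""
    q1, q12 = q[0], q[0] + q[1]
    return np.array([L1 * np.cos(q1) + L2 * np.cos(q12),
                     L1 * np.sin(q1) + L2 * np.sin(q12)])


def foot_jacobian(q: np.ndarray) -> np.ndarray:
    """Analytic Jacobian d(foot_position)/dq."""
    q1, q12 = q[0], q[0] + q[1]
    return np.array([[-L1 * np.sin(q1) - L2 * np.sin(q12), -L2 * np.sin(q12)],
                     [L1 * np.cos(q1) + L2 * np.cos(q12), L2 * np.cos(q12)]])


def impedance_torques(q, dq, p_des, v_des, k_diag, d_diag) -> np.ndarray:
    """tau = J^T [K (p_des - p) + D (v_des - v)] with diagonal K and D.

    In a structured policy, the action could supply p_des (and possibly the
    gains); which quantities the policy outputs is an assumption here.
    """
    J = foot_jacobian(q)
    p = foot_position(q)
    v = J @ dq
    force = np.diag(k_diag) @ (p_des - p) + np.diag(d_diag) @ (v_des - v)
    return J.T @ force
```

Compared with a direct policy that outputs torques, a policy acting through this layer only has to choose targets in foot space, which is one way structure can shrink the search problem.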

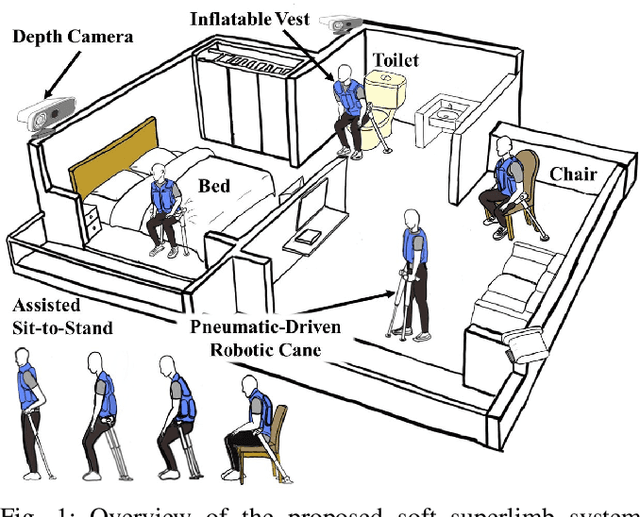

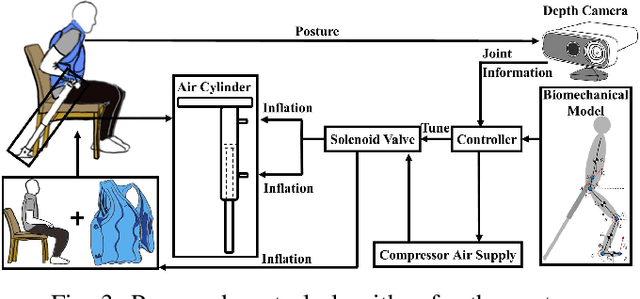

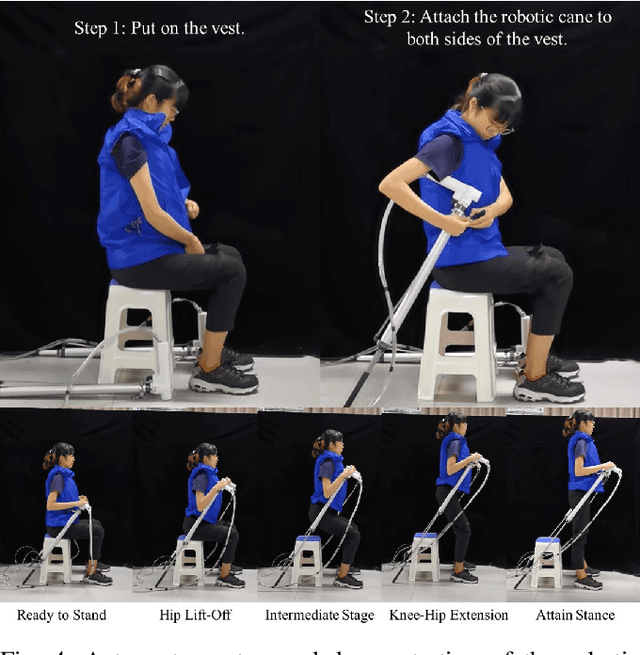

Robotic Cane as a Soft SuperLimb for Elderly Sit-to-Stand Assistance

Feb 29, 2020

Abstract: Many researchers have identified robotics as a potential solution to the population aging faced by many developed and developing countries. If so, how should we address the cognitive acceptance and ambient control of elderly-assistive robots through design? In this paper, we propose an explorative design of an ambient SuperLimb (Supernumerary Robotic Limb) system that comprises a pneumatically driven robotic cane for at-home motion assistance, an inflatable vest for compliant human-robot interaction, and a depth sensor for ambient intention detection. The proposed system aims to provide active assistance during the sit-to-stand transition for elderly people at home: at the bedside, in the chair, and on the toilet. We propose a modified biomechanical model with a linear cane robot for closed-loop control implementation. We validated the design feasibility of the proposed ambient SuperLimb system, including the biomechanical model. Our results showed advantages in reducing lower-limb effort and elderly fall risk, although the detection accuracy of depth sensing and adjustments to the model require further research. Nevertheless, we summarize empirical guidelines to support the ambient design of elderly-assistive SuperLimb systems for lower-limb functional augmentation.

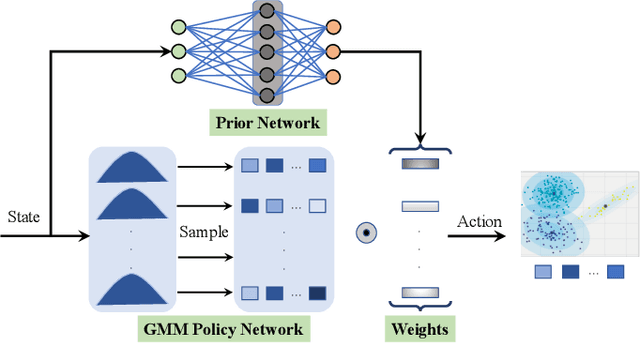

Off-policy Maximum Entropy Reinforcement Learning: Soft Actor-Critic with Advantage Weighted Mixture Policy (SAC-AWMP)

Feb 07, 2020

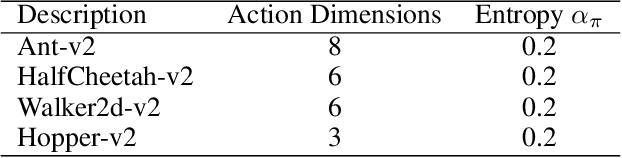

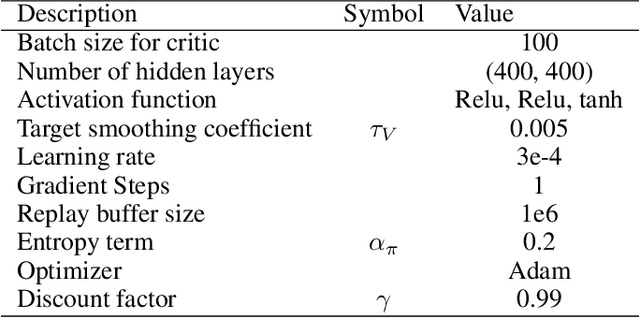

Abstract: The optimal policy of a reinforcement learning problem is often discontinuous and non-smooth; that is, two states with similar representations can have significantly different optimal policies. In this case, representing the entire policy with a function approximator (FA) whose parameters are shared across all states may not be desirable, because the generalization induced by parameter sharing makes discontinuous, non-smooth policies difficult to represent. A common way to address this problem, known as Mixture-of-Experts, is to represent the policy as a weighted sum of multiple components, where different components perform well on different parts of the state space. Following this idea, and inspired by recent work called advantage-weighted information maximization, we propose to learn, for each state, the weights of these components so that they capture information about both the state itself and the action preferred for that state so far. The action preference is characterized by the advantage function. As a result, the weight of each component is large only for certain groups of states whose representations are similar and whose preferred action representations are also similar, so each component is easy to represent. We call a policy parameterized in this way an Advantage Weighted Mixture Policy (AWMP) and apply this idea to improve soft actor-critic (SAC), one of the most competitive continuous control algorithms. Experimental results demonstrate that SAC with AWMP clearly outperforms SAC in four commonly used continuous control tasks and achieves stable performance across different random seeds.
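A mixture policy of this kind has the form `pi(a|s) = sum_k w_k(s) N(a; mu_k(s), diag(sigma_k^2))`, with state-dependent weights from a softmax gate. The minimal sketch below uses random linear maps in place of neural networks, and it feeds the gate a single scalar "advantage feature" as a hypothetical stand-in for how AWMP injects the preferred-action information; the actual AWMP encoding is not described in this abstract. The tanh action squashing used in SAC is also omitted for brevity.

```python
import numpy as np

STATE_DIM, ACTION_DIM, N_COMPONENTS = 4, 2, 3
_rng = np.random.default_rng(0)

# Hypothetical linear parameters standing in for neural networks.
# The gating input is the state plus one scalar "advantage feature" (assumed encoding).
W_GATE = _rng.normal(size=(STATE_DIM + 1, N_COMPONENTS))
W_MU = _rng.normal(size=(N_COMPONENTS, STATE_DIM, ACTION_DIM))
LOG_STD = np.full((N_COMPONENTS, ACTION_DIM), -0.5)


def gating_weights(state: np.ndarray, adv_feature: float) -> np.ndarray:
    """Softmax mixture weights w_k(s) computed from the state and the advantage feature."""
    logits = np.concatenate([state, [adv_feature]]) @ W_GATE
    w = np.exp(logits - logits.max())
    return w / w.sum()


def component_means(state: np.ndarray) -> np.ndarray:
    """Mean action of every component, shape (N_COMPONENTS, ACTION_DIM)."""
    return np.einsum("s,ksa->ka", state, W_MU)


def mixture_log_prob(action: np.ndarray, state: np.ndarray, adv_feature: float) -> float:
    """log sum_k w_k(s) N(action; mu_k(s), diag(std_k^2)), computed via log-sum-exp."""
    log_w = np.log(gating_weights(state, adv_feature))
    z = (action - component_means(state)) / np.exp(LOG_STD)
    log_n = -0.5 * np.sum(z ** 2 + 2.0 * LOG_STD + np.log(2.0 * np.pi), axis=1)
    terms = log_w + log_n
    m = terms.max()
    return float(m + np.log(np.exp(terms - m).sum()))


def sample_action(state: np.ndarray, adv_feature: float, rng: np.random.Generator) -> np.ndarray:
    """Pick a component according to its weight, then sample from that Gaussian."""
    k = rng.choice(N_COMPONENTS, p=gating_weights(state, adv_feature))
    mean = component_means(state)[k]
    return mean + np.exp(LOG_STD[k]) * rng.standard_normal(ACTION_DIM)
```

Because each component only needs to be accurate where its weight is large, each individual Gaussian can stay smooth even when the overall policy changes sharply between neighboring states.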

Teach Biped Robots to Walk via Gait Principles and Reinforcement Learning with Adversarial Critics

Oct 22, 2019

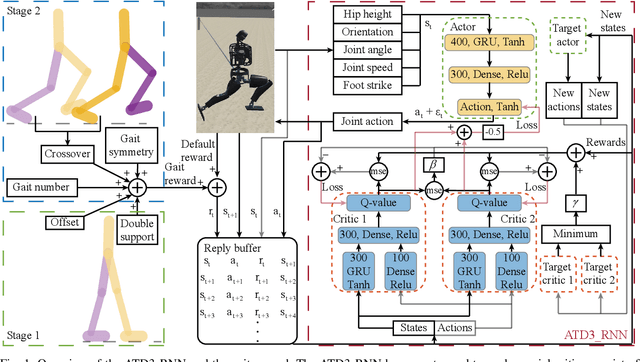

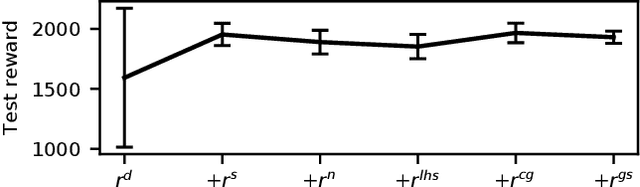

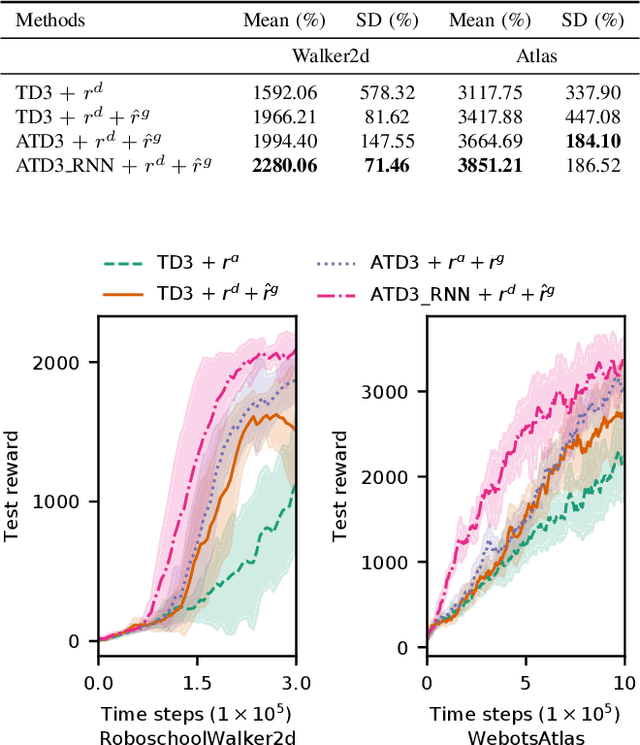

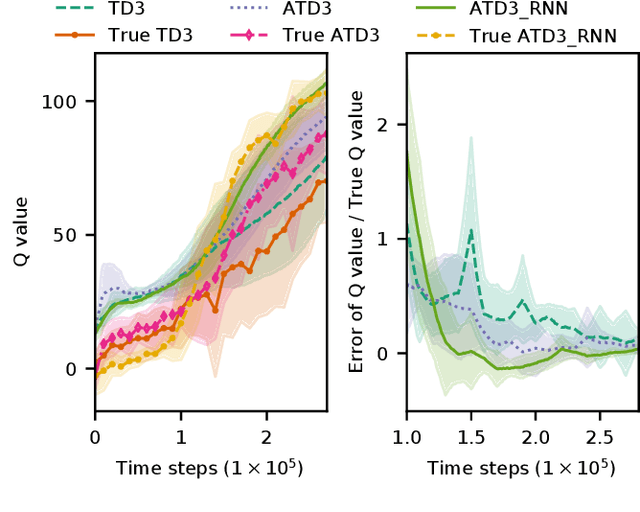

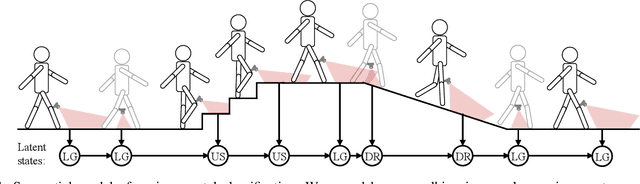

Abstract: Controlling a biped robot to walk stably is challenging because of its nonlinearity and hybrid dynamics. Reinforcement learning can address these issues by directly mapping observed states to optimal actions that maximize the cumulative reward. However, local minima caused by unsuitable rewards and overestimation of the cumulative reward impede its maximization. To increase the cumulative reward, this paper designs a gait reward based on walking principles, which compensates for local minima that produce unnatural motions. In addition, an Adversarial Twin Delayed Deep Deterministic (ATD3) policy gradient algorithm with a recurrent neural network (RNN) is proposed to further boost the cumulative reward by mitigating its overestimation. Experimental results in the Roboschool Walker2d and Webots Atlas simulators indicate that the test rewards increase by 23.50% and 9.63%, respectively, after adding the gait reward. The test rewards further increase by 15.96% and 12.68% after using ATD3_RNN, possibly because ATD3_RNN decreases the error in estimating the cumulative reward from 19.86% to 3.35%. Furthermore, the cosine kinetic similarity between the human and the biped robot trained with the gait reward and ATD3_RNN increases by over 69.23%. Consequently, the designed gait reward and ATD3_RNN boost the cumulative reward and teach biped robots to walk better.
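For background on the overestimation issue, the standard TD3 critic target combats it by taking the minimum of two target critics and smoothing the target action with clipped noise. The sketch below shows only that standard target; the adversarial modification and the RNN in ATD3_RNN are not specified in the abstract and are not represented here. The critics are plain callables, and the hyperparameter defaults are common choices rather than values from the paper.

```python
from typing import Callable, Optional

import numpy as np

Critic = Callable[[np.ndarray, np.ndarray], float]


def td3_target(reward: float, done: float, next_state: np.ndarray,
               next_action: np.ndarray, q1_target: Critic, q2_target: Critic,
               gamma: float = 0.99, noise_std: float = 0.2, noise_clip: float = 0.5,
               max_action: float = 1.0,
               rng: Optional[np.random.Generator] = None) -> float:
    """Clipped double-Q target with target-policy smoothing (standard TD3).

    next_action is the target actor's output for next_state.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Target-policy smoothing: add clipped Gaussian noise to the target action.
    noise = np.clip(rng.normal(0.0, noise_std, size=np.shape(next_action)),
                    -noise_clip, noise_clip)
    smoothed = np.clip(next_action + noise, -max_action, max_action)
    # Taking the smaller of the two critics counteracts overestimation bias.
    q_min = min(q1_target(next_state, smoothed), q2_target(next_state, smoothed))
    return float(reward + gamma * (1.0 - done) * q_min)
```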

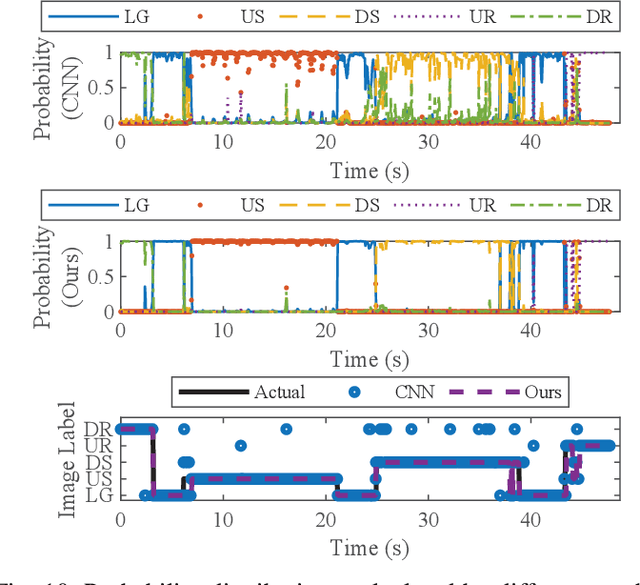

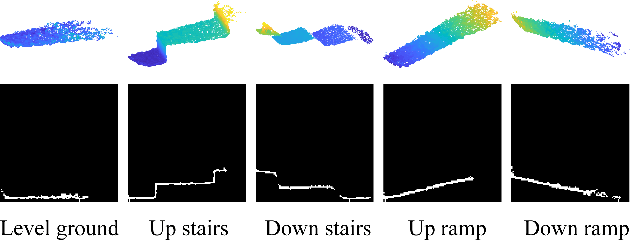

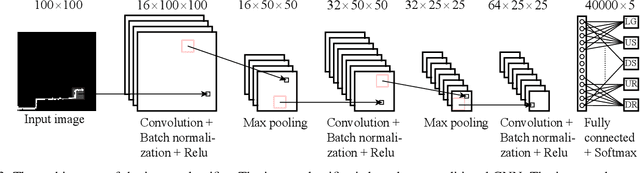

Sequential Decision Fusion for Environmental Classification in Assistive Walking

Apr 25, 2019

Abstract: Powered prostheses are effective in helping amputees walk on level ground, but these devices are inconvenient to use in complex environments. Prostheses need to understand the motion intent of amputees to help them walk in complex environments. Recently, researchers have found that vision sensors can be used to classify environments and predict the motion intent of amputees. Previous methods classify environments accurately in offline analysis but do not address the resulting time delay. To increase the accuracy and decrease the time delay of environmental classification, we propose a new decision fusion method in this paper. We fuse sequential decisions of environmental classification by constructing a hidden Markov model and designing a transition probability matrix. We evaluate our method through indoor and outdoor experiments with able-bodied subjects and amputees. Experimental results indicate that our method classifies environments more accurately and with less time delay than previous methods. Beyond environmental classification, the proposed decision fusion method may also be used to optimize sequential predictions of human motion intent in the future.
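Fusing sequential decisions with a hidden Markov model can be sketched as the forward (filtering) recursion: propagate the previous belief through the transition matrix, then weight it by the current frame's classifier output. In this minimal sketch the per-frame classifier probabilities are used directly as emission likelihoods (a common simplification), and the three environment classes and the "sticky" transition matrix in the usage example are hypothetical, not the matrix designed in the paper.

```python
import numpy as np


def hmm_filter(frame_probs: np.ndarray, transition: np.ndarray, prior: np.ndarray) -> np.ndarray:
    """Forward algorithm: fuse per-frame classifier outputs into posterior beliefs.

    frame_probs: (T, C) classifier probabilities, treated as emission likelihoods (assumption).
    transition:  (C, C) with transition[i, j] = P(env_t = j | env_{t-1} = i).
    prior:       (C,) initial belief over environment classes.
    """
    belief = np.asarray(prior, dtype=float)
    posteriors = []
    for obs in frame_probs:
        predicted = belief @ transition   # propagate the belief through the Markov chain
        belief = predicted * obs          # weight by the current frame's evidence
        belief = belief / belief.sum()    # renormalize to a probability vector
        posteriors.append(belief)
    return np.array(posteriors)


if __name__ == "__main__":
    # Hypothetical classes: 0 = level ground, 1 = stairs up, 2 = stairs down.
    T = np.full((3, 3), 0.05) + np.eye(3) * 0.85   # stay with prob. 0.9
    frames = np.array([[0.8, 0.1, 0.1]] * 5 + [[0.2, 0.7, 0.1]] + [[0.8, 0.1, 0.1]])
    print(hmm_filter(frames, T, np.ones(3) / 3).argmax(axis=1))
```

With a strongly diagonal transition matrix, a single misclassified frame is outvoted by the accumulated belief, while a sustained change in the classifier output still switches the fused decision after a few frames; the diagonal weight trades smoothing against delay.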

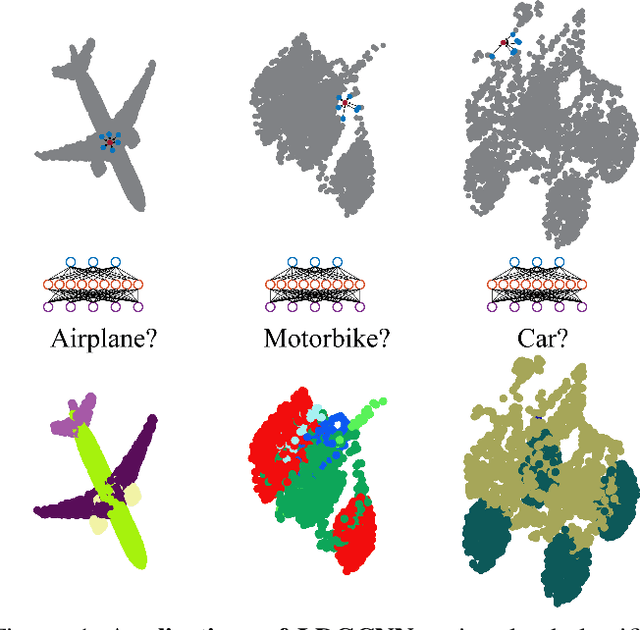

Linked Dynamic Graph CNN: Learning on Point Cloud via Linking Hierarchical Features

Apr 22, 2019

Abstract: Learning on point clouds is in high demand because the point cloud is a common type of geometric data and can help robots understand environments robustly. However, point clouds are sparse, unstructured, and unordered, so they cannot be recognized accurately by a traditional convolutional neural network (CNN) or a recurrent neural network (RNN). Fortunately, a graph convolutional neural network (Graph CNN) can process sparse and unordered data. Hence, in this paper we propose a linked dynamic graph CNN (LDGCNN) to classify and segment point clouds directly. To increase the performance of LDGCNN, we remove the transformation network, link hierarchical features from dynamic graphs, freeze the feature extractor, and retrain the classifier. We explain our network using theoretical analysis and visualization. Through experiments, we show that the proposed LDGCNN achieves state-of-the-art performance on two standard datasets: ModelNet40 and ShapeNet.
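The "dynamic graph" idea can be illustrated with an EdgeConv-style layer: rebuild a k-nearest-neighbour graph in the current feature space, compute edge features from `[x_i, x_j - x_i]`, and max-pool over neighbours so that the result does not depend on point order. In the minimal sketch below, weights are random single linear maps, and "linking hierarchical features" is approximated by concatenating every layer's per-point output; the exact linking pattern and layer sizes of LDGCNN may differ.

```python
import numpy as np


def knn_indices(features: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k nearest neighbours of each point in feature space (self excluded)."""
    sq = np.sum(features ** 2, axis=1)
    dist = sq[:, None] - 2.0 * features @ features.T + sq[None, :]
    np.fill_diagonal(dist, np.inf)
    return np.argsort(dist, axis=1)[:, :k]


def edge_conv(features: np.ndarray, weight: np.ndarray, k: int) -> np.ndarray:
    """EdgeConv-style layer: ReLU(W [x_i, x_j - x_i]), then max over the k neighbours."""
    idx = knn_indices(features, k)                        # graph rebuilt per layer ("dynamic")
    neighbours = features[idx]                            # (N, k, F)
    centre = np.repeat(features[:, None, :], k, axis=1)   # (N, k, F)
    edge_feat = np.concatenate([centre, neighbours - centre], axis=-1)  # (N, k, 2F)
    return np.max(np.maximum(edge_feat @ weight, 0.0), axis=1)          # (N, F_out)


def linked_features(points: np.ndarray, weights, k: int = 8):
    """Stack EdgeConv layers and concatenate all layers' outputs (assumed linking scheme)."""
    outputs, x = [], points
    for w in weights:
        x = edge_conv(x, w, k)
        outputs.append(x)
    per_point = np.concatenate(outputs, axis=1)
    return per_point, per_point.max(axis=0)   # per-point features, global descriptor


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    pts = rng.normal(size=(32, 3))
    ws = [rng.normal(size=(6, 16)), rng.normal(size=(32, 16))]
    per_point, descriptor = linked_features(pts, ws)
    print(per_point.shape, descriptor.shape)
```

The per-point features would feed a segmentation head and the global max-pooled descriptor a classification head; max pooling is what makes the descriptor invariant to the ordering of the input points.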

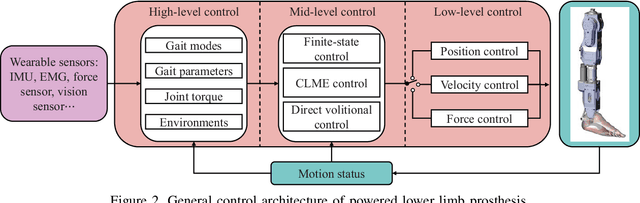

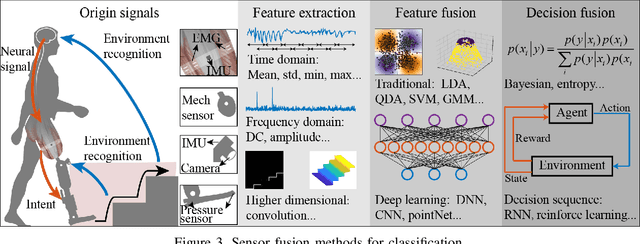

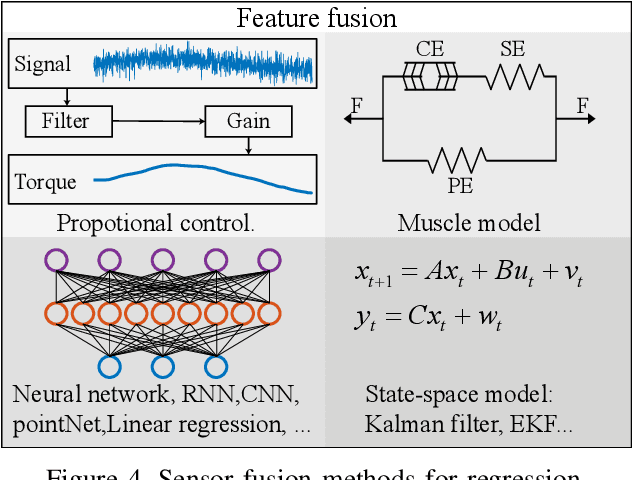

Sensor Fusion for Predictive Control of Human-Prosthesis-Environment Dynamics in Assistive Walking: A Survey

Mar 22, 2019

Abstract: This survey concerns sensor fusion for predictive control of human-prosthesis-environment dynamics in assistive walking. A powered lower-limb prosthesis can imitate human limb motion and help amputees recover walking ability, but walking in complex environments with a powered prosthesis remains a challenge for amputees. Previous research mainly focused on the interaction between the human and the prosthesis without considering environmental information, which can provide context for human-prosthesis interaction. Therefore, this review critically surveys recent sensor fusion methods for the predictive control of human-prosthesis-environment dynamics in assistive walking. Against that backdrop, several pertinent research issues that need further investigation are presented. In particular, general controllers, a comparison of sensors, and complete procedures of sensor fusion methods applicable to assistive walking are introduced. Possible directions for sensor fusion research on human-prosthesis-environment dynamics are also presented.
