Andrew P. King

King's College London

Uncertainty Aware Training to Improve Deep Learning Model Calibration for Classification of Cardiac MR Images

Aug 29, 2023

Abstract:Quantifying uncertainty of predictions has been identified as one way to develop more trustworthy artificial intelligence (AI) models beyond conventional reporting of performance metrics. When considering their role in a clinical decision support setting, AI classification models should ideally avoid confident wrong predictions and maximise the confidence of correct predictions. Models that do this are said to be well-calibrated with regard to confidence. However, relatively little attention has been paid to how to improve calibration when training these models, i.e., to make the training strategy uncertainty-aware. In this work we evaluate three novel uncertainty-aware training strategies, comparing against two state-of-the-art approaches. We analyse performance on two different clinical applications: cardiac resynchronisation therapy (CRT) response prediction and coronary artery disease (CAD) diagnosis from cardiac magnetic resonance (CMR) images. The best-performing model in terms of both classification accuracy and the most common calibration measure, expected calibration error (ECE), was the Confidence Weight method, a novel approach that weights the loss of samples to explicitly penalise confident incorrect predictions. The method reduced the ECE by 17% for CRT response prediction and by 22% for CAD diagnosis when compared to a baseline classifier in which no uncertainty-aware strategy was included. In both applications, as well as reducing the ECE, there was a slight increase in accuracy, from 69% to 70% and 70% to 72% for CRT response prediction and CAD diagnosis respectively. However, our analysis showed a lack of consistency in terms of optimal models when using different calibration measures. This indicates the need for careful consideration of performance metrics when training and selecting models for complex high-risk applications in healthcare.
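For readers unfamiliar with expected calibration error, the following is a minimal sketch of how it is commonly computed: predictions are grouped into confidence bins, and the gap between mean confidence and accuracy in each bin is averaged, weighted by bin size. The function name, the choice of 10 equal-width bins, and the use of top-label confidence are assumptions made for illustration; the abstract does not state which binning scheme the authors used.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE sketch (equal-width bins are an assumption, not from the paper).

    confidences: predicted probability of the chosen class, each in [0, 1].
    correct:     booleans, True where the prediction matched the label.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        raise ValueError("at least one prediction is required")

    # Assign each prediction to a bin [k/n_bins, (k+1)/n_bins);
    # a confidence of exactly 1.0 goes into the last bin.
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        # Weight the confidence/accuracy gap by the fraction of samples in the bin.
        ece += (len(members) / n) * abs(accuracy - avg_conf)
    return ece


if __name__ == "__main__":
    # Two predictions at 0.9 (both right) and two at 0.6 (one right).
    print(expected_calibration_error([0.9, 0.9, 0.6, 0.6], [True, True, True, False]))
```

A lower value means confidence tracks accuracy more closely. Because the result depends on the binning, different calibration measures can rank models differently, which is consistent with the inconsistency the abstract reports.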

Bias in Unsupervised Anomaly Detection in Brain MRI

Aug 26, 2023

Abstract:Unsupervised anomaly detection methods offer a promising and flexible alternative to supervised approaches, holding the potential to revolutionize medical scan analysis and enhance diagnostic performance. In the current landscape, it is commonly assumed that differences between a test case and the training distribution are attributed solely to pathological conditions, implying that any disparity indicates an anomaly. However, the presence of other potential sources of distributional shift, including scanner, age, sex, or race, is frequently overlooked. These shifts can significantly impact the accuracy of the anomaly detection task. Prominent instances of such failures have sparked concerns regarding the bias, credibility, and fairness of anomaly detection. This work presents a novel analysis of biases in unsupervised anomaly detection. By examining potential non-pathological distributional shifts between the training and testing distributions, we shed light on the extent of these biases and their influence on anomaly detection results. Moreover, this study examines the algorithmic limitations that arise due to biases, providing valuable insights into the challenges encountered by anomaly detection algorithms in accurately learning and capturing the entire range of variability present in the normative distribution. Through this analysis, we aim to enhance the understanding of these biases and pave the way for future improvements in the field. Here, we specifically investigate Alzheimer's disease detection from brain MR imaging as a case study, revealing significant biases related to sex, race, and scanner variations that substantially impact the results. These findings align with the broader goal of improving the reliability, fairness, and effectiveness of anomaly detection in medical imaging.

An investigation into the impact of deep learning model choice on sex and race bias in cardiac MR segmentation

Aug 25, 2023

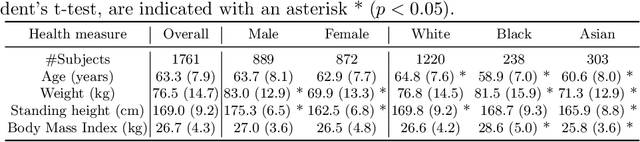

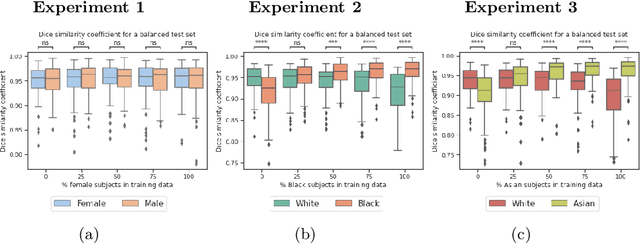

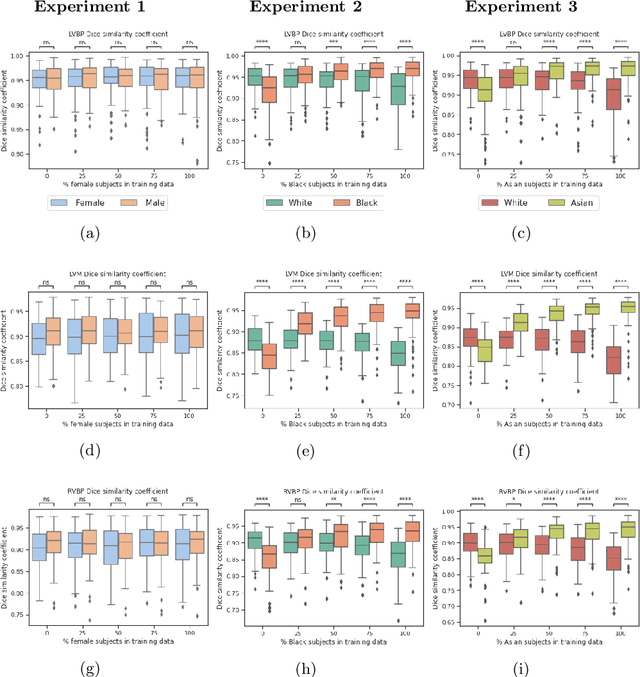

Abstract:In medical imaging, artificial intelligence (AI) is increasingly being used to automate routine tasks. However, these algorithms can exhibit and exacerbate biases which lead to disparate performances between protected groups. We investigate the impact of model choice on how imbalances in subject sex and race in training datasets affect AI-based cine cardiac magnetic resonance image segmentation. We evaluate three convolutional neural network-based models and one vision transformer model. We find significant sex bias in three of the four models and racial bias in all of the models. However, the severity and nature of the bias varies between the models, highlighting the importance of model choice when attempting to train fair AI-based segmentation models for medical imaging tasks.

Deep Learning Framework for Spleen Volume Estimation from 2D Cross-sectional Views

Aug 17, 2023

Abstract:Abnormal spleen enlargement (splenomegaly) is regarded as a clinical indicator for a range of conditions, including liver disease, cancer and blood diseases. While spleen length measured from ultrasound images is a commonly used surrogate for spleen size, spleen volume remains the gold standard metric for assessing splenomegaly and the severity of related clinical conditions. Computed tomography is the main imaging modality for measuring spleen volume, but it is less accessible in areas where there is a high prevalence of splenomegaly (e.g., the Global South). Our objective was to enable automated spleen volume measurement from 2D cross-sectional segmentations, which can be obtained from ultrasound imaging. In this study, we describe a variational autoencoder-based framework to measure spleen volume from single- or dual-view 2D spleen segmentations. We propose and evaluate three volume estimation methods within this framework. We also demonstrate how 95% confidence intervals of volume estimates can be produced to make our method more clinically useful. Our best model achieved mean relative volume accuracies of 86.62% and 92.58% for single- and dual-view segmentations, respectively, surpassing the performance of the clinical standard approach of linear regression using manual measurements and a comparative deep learning-based 2D-3D reconstruction-based approach. The proposed spleen volume estimation framework can be integrated into standard clinical workflows which currently use 2D ultrasound images to measure spleen length. To the best of our knowledge, this is the first work to achieve direct 3D spleen volume estimation from 2D spleen segmentations.

Automatic retrieval of corresponding US views in longitudinal examinations

Jun 07, 2023

Abstract:Skeletal muscle atrophy is a common occurrence in critically ill patients in the intensive care unit (ICU) who spend long periods in bed. Muscle mass must be recovered through physiotherapy before patient discharge, and ultrasound imaging is frequently used to assess the recovery process by measuring the muscle size over time. However, these manual measurements are subject to large variability, particularly since the scans are typically acquired on different days and potentially by different operators. In this paper, we propose a self-supervised contrastive learning approach to automatically retrieve similar ultrasound muscle views at different scan times. Three different models were compared using data from 67 patients acquired in the ICU. Results indicate that our contrastive model outperformed a supervised baseline model in the task of view retrieval, with an AUC of 73.52%, and, when combined with an automatic segmentation model, achieved 5.7% ± 0.24% error in cross-sectional area. Furthermore, a user study survey confirmed the efficacy of our model for muscle view retrieval.

Addressing Deep Learning Model Calibration Using Evidential Neural Networks and Uncertainty-Aware Training

Jan 30, 2023

Abstract:In terms of accuracy, deep learning (DL) models have had considerable success in classification problems for medical imaging applications. However, it is well-known that the outputs of such models, which typically utilise the SoftMax function in the final classification layer, can be over-confident, i.e. they are poorly calibrated. Two competing solutions to this problem have been proposed: uncertainty-aware training and evidential neural networks (ENNs). In this paper, we perform an investigation into the improvements to model calibration that can be achieved by each of these approaches individually, and by their combination. We perform experiments on two classification tasks: a simpler MNIST digit classification task and a more complex and realistic medical imaging artefact detection task using Phase Contrast Cardiac Magnetic Resonance images. The experimental results demonstrate that model calibration can suffer when the task becomes challenging enough to require a higher-capacity model. However, in our complex artefact detection task, we saw an improvement in calibration for both a low- and a higher-capacity model when implementing both the ENN and uncertainty-aware training together, indicating that this approach can offer a promising way to improve calibration in such settings. The findings highlight the potential use of these approaches to improve model calibration in a complex application, which would in turn improve clinician trust in DL models.

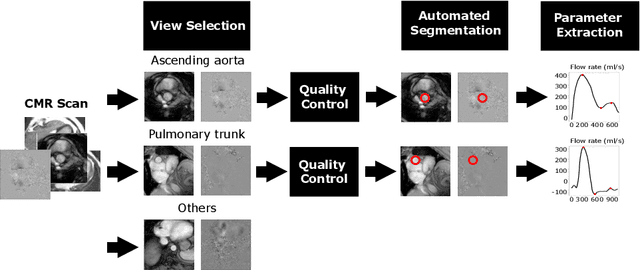

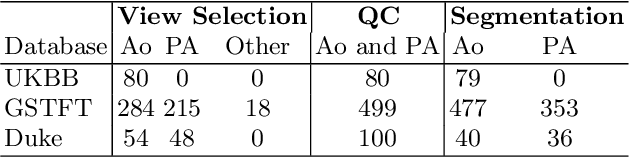

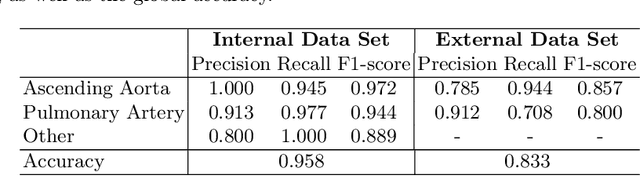

Automated Quality Controlled Analysis of 2D Phase Contrast Cardiovascular Magnetic Resonance Imaging

Sep 28, 2022

Abstract:Flow analysis carried out using phase contrast cardiac magnetic resonance imaging (PC-CMR) enables the quantification of important parameters that are used in the assessment of cardiovascular function. An essential part of this analysis is the identification of the correct CMR views and quality control (QC) to detect artefacts that could affect the flow quantification. We propose a novel deep learning-based framework for the fully-automated analysis of flow from full CMR scans that first carries out these view selection and QC steps using two sequential convolutional neural networks, followed by automatic aorta and pulmonary artery segmentation to enable the quantification of key flow parameters. Accuracy values of 0.958 and 0.914 were obtained for view classification and QC, respectively. For segmentation, Dice scores were >0.969 and the Bland-Altman plots indicated excellent agreement between manual and automatic peak flow values. In addition, we tested our pipeline on an external validation data set, with results indicating good robustness of the pipeline. This work was carried out using multivendor clinical data consisting of 986 cases, indicating the potential for the use of this pipeline in a clinical setting.
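As a rough illustration of the agreement analysis mentioned above, a Bland-Altman comparison summarises paired measurements by the mean difference (bias) and the limits of agreement, conventionally the bias plus or minus 1.96 standard deviations of the differences. The sketch below assumes that convention; the function name and the sample values are hypothetical and are not taken from the paper.

```python
import statistics


def bland_altman(manual, automatic, z=1.96):
    """Return (bias, lower_limit, upper_limit) for paired measurements.

    The 1.96 multiplier and the sample standard deviation are the usual
    convention, assumed here rather than confirmed by the abstract.
    """
    if len(manual) != len(automatic):
        raise ValueError("manual and automatic must have the same length")
    if len(manual) < 2:
        raise ValueError("at least two paired measurements are required")

    # Difference per case: automatic minus manual.
    diffs = [a - m for m, a in zip(manual, automatic)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    return bias, bias - z * sd, bias + z * sd


if __name__ == "__main__":
    # Hypothetical peak flow values, for illustration only.
    manual_values = [10.0, 20.0, 30.0]
    automatic_values = [11.0, 19.0, 32.0]
    print(bland_altman(manual_values, automatic_values))
```

A bias close to zero with narrow limits of agreement is what "excellent agreement" would typically look like in such a plot.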

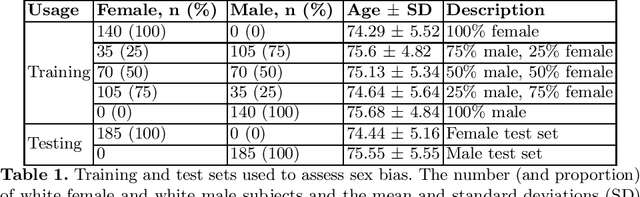

A systematic study of race and sex bias in CNN-based cardiac MR segmentation

Sep 04, 2022

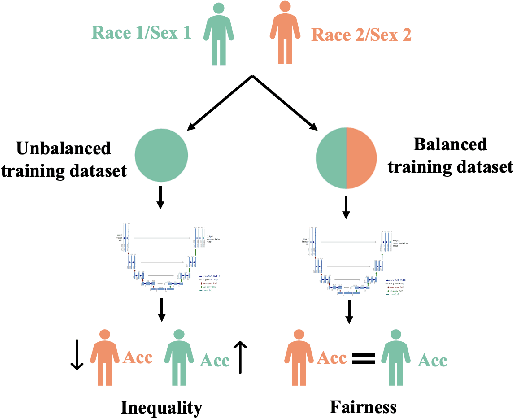

Abstract:In computer vision there has been significant research interest in assessing potential demographic bias in deep learning models. One of the main causes of such bias is imbalance in the training data. In medical imaging, where the potential impact of bias is arguably much greater, there has been less interest. In medical imaging pipelines, segmentation of structures of interest plays an important role in estimating clinical biomarkers that are subsequently used to inform patient management. Convolutional neural networks (CNNs) are starting to be used to automate this process. We present the first systematic study of the impact of training set imbalance on race and sex bias in CNN-based segmentation. We focus on segmentation of the structures of the heart from short axis cine cardiac magnetic resonance images, and train multiple CNN segmentation models with different levels of race/sex imbalance. We find no significant bias in the sex experiment but significant bias in two separate race experiments, highlighting the need to consider adequate representation of different demographic groups in health datasets.

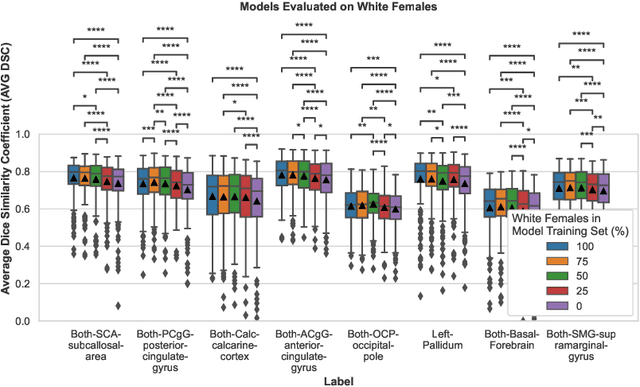

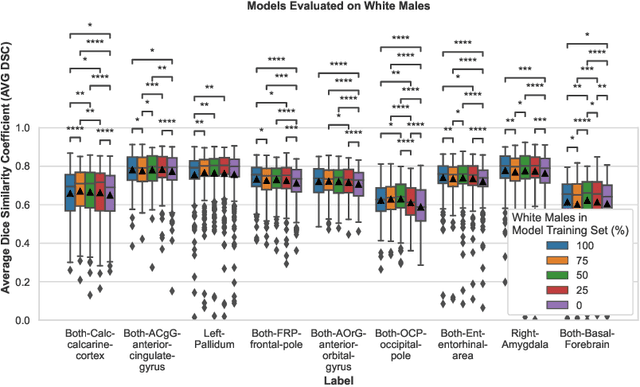

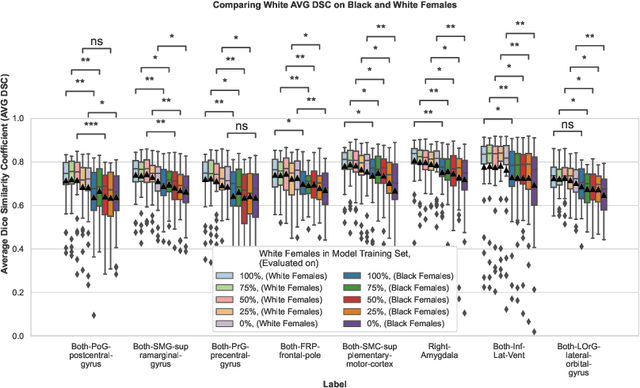

A Study of Demographic Bias in CNN-based Brain MR Segmentation

Aug 13, 2022

Abstract:Convolutional neural networks (CNNs) are increasingly being used to automate the segmentation of brain structures in magnetic resonance (MR) images for research studies. In other applications, CNN models have been shown to exhibit bias against certain demographic groups when they are under-represented in the training sets. In this work, we investigate whether CNN models for brain MR segmentation have the potential to contain sex or race bias when trained with imbalanced training sets. We train multiple instances of the FastSurferCNN model using different levels of sex imbalance in white subjects. We evaluate the performance of these models separately for white male and white female test sets to assess sex bias, and furthermore evaluate them on black male and black female test sets to assess potential racial bias. We find significant sex and race bias effects in segmentation model performance. The biases have a strong spatial component, with some brain regions exhibiting much stronger bias than others. Overall, our results suggest that race bias is more significant than sex bias. Our study demonstrates the importance of considering race and sex balance when forming training sets for CNN-based brain MR segmentation, to avoid maintaining or even exacerbating existing health inequalities through biased research study findings.

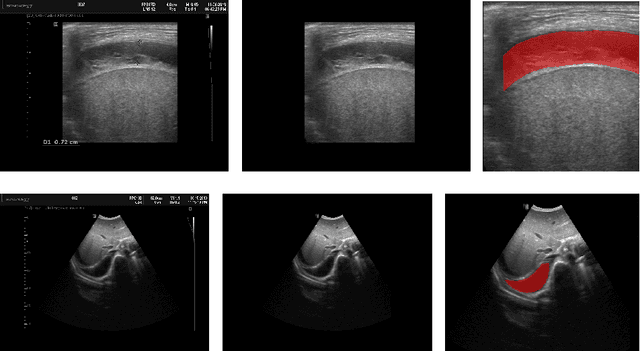

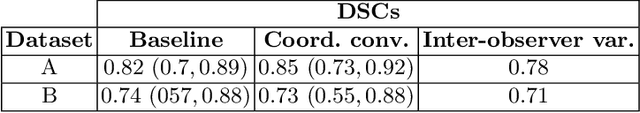

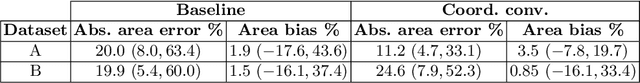

Deep Learning-based Segmentation of Pleural Effusion From Ultrasound Using Coordinate Convolutions

Aug 05, 2022

Abstract:In many low- and middle-income countries (LMICs), ultrasound is used for assessment of pleural effusion. Typically, the extent of the effusion is manually measured by a sonographer, leading to significant intra-/inter-observer variability. In this work, we investigate the use of deep learning (DL) to automate the process of pleural effusion segmentation from ultrasound images. On two datasets acquired in an LMIC setting, we achieve median Dice Similarity Coefficients (DSCs) of 0.82 and 0.74 respectively using the nnU-net DL model. We also investigate the use of coordinate convolutions in the DL model and find that this results in a statistically significant improvement in the median DSC on the first dataset to 0.85, with no significant change on the second dataset. This work showcases, for the first time, the potential of DL in automating the process of effusion assessment from ultrasound in LMIC settings where there is often a lack of experienced radiologists to perform such tasks.
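The Dice Similarity Coefficient reported above measures the overlap between a predicted and a reference segmentation as twice the intersection divided by the sum of the two mask sizes. The following is a minimal sketch for binary 2D masks stored as nested lists; the function name and the convention of returning 1.0 when both masks are empty are assumptions for illustration, not details from the paper.

```python
def dice_coefficient(pred, target):
    """Dice overlap between two binary masks given as lists of rows."""
    flat_pred = [bool(v) for row in pred for v in row]
    flat_target = [bool(v) for row in target for v in row]
    if len(flat_pred) != len(flat_target):
        raise ValueError("masks must contain the same number of pixels")

    intersection = sum(1 for p, t in zip(flat_pred, flat_target) if p and t)
    total = sum(flat_pred) + sum(flat_target)
    if total == 0:
        # Both masks empty: treated as perfect agreement (an assumed convention).
        return 1.0
    return 2.0 * intersection / total


if __name__ == "__main__":
    predicted = [[1, 1], [0, 0]]
    reference = [[1, 0], [0, 0]]
    print(dice_coefficient(predicted, reference))
```

A value of 1.0 indicates identical masks and 0.0 indicates no overlap, so the reported medians of 0.82 to 0.85 correspond to substantial but imperfect overlap.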
