Tareen Dawood

Improving Deep Learning Model Calibration for Cardiac Applications using Deterministic Uncertainty Networks and Uncertainty-aware Training

May 10, 2024

Abstract: Improving calibration performance in deep learning (DL) classification models is important when planning the use of DL in a decision-support setting. In such a scenario, a confident wrong prediction could lead to a lack of trust and/or harm in a high-risk application. We evaluate the impact on accuracy and calibration of two types of approach that aim to improve DL classification model calibration: deterministic uncertainty methods (DUM) and uncertainty-aware training. Specifically, we test the performance of three DUMs and two uncertainty-aware training approaches as well as their combinations. To evaluate their utility, we use two realistic clinical applications from the field of cardiac imaging: artefact detection from phase contrast cardiac magnetic resonance (CMR) and disease diagnosis from the public ACDC CMR dataset. Our results indicate that both DUMs and uncertainty-aware training can improve both accuracy and calibration in both of our applications, with DUMs generally offering the best improvements. We also investigate the combination of the two approaches, resulting in a novel deterministic uncertainty-aware training approach. This provides further improvements for some combinations of DUMs and uncertainty-aware training approaches.

Uncertainty Aware Training to Improve Deep Learning Model Calibration for Classification of Cardiac MR Images

Aug 29, 2023

Abstract: Quantifying uncertainty of predictions has been identified as one way to develop more trustworthy artificial intelligence (AI) models beyond conventional reporting of performance metrics. When considering their role in a clinical decision support setting, AI classification models should ideally avoid confident wrong predictions and maximise the confidence of correct predictions. Models that do this are said to be well-calibrated with regard to confidence. However, relatively little attention has been paid to how to improve calibration when training these models, i.e., to make the training strategy uncertainty-aware. In this work we evaluate three novel uncertainty-aware training strategies and compare them against two state-of-the-art approaches. We analyse performance on two different clinical applications: cardiac resynchronisation therapy (CRT) response prediction and coronary artery disease (CAD) diagnosis from cardiac magnetic resonance (CMR) images. The best-performing model in terms of both classification accuracy and the most common calibration measure, expected calibration error (ECE), was the Confidence Weight method, a novel approach that weights the loss of samples to explicitly penalise confident incorrect predictions. The method reduced the ECE by 17% for CRT response prediction and by 22% for CAD diagnosis when compared to a baseline classifier in which no uncertainty-aware strategy was included. In both applications, as well as reducing the ECE, there was a slight increase in accuracy, from 69% to 70% for CRT response prediction and from 70% to 72% for CAD diagnosis. However, our analysis showed a lack of consistency in terms of optimal models when using different calibration measures. This indicates the need for careful consideration of performance metrics when training and selecting models for complex high-risk applications in healthcare.
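For readers unfamiliar with the expected calibration error (ECE) named in this abstract, the following is a minimal sketch of the commonly used binned definition: predictions are grouped by confidence, and the gap between accuracy and mean confidence in each bin is averaged, weighted by bin size. The function name, the default of 10 equal-width bins, and the bin-edge handling are assumptions made for illustration; the abstract does not state which binning the authors used.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE: sum over bins of (bin size / N) * |accuracy - mean confidence|.

    confidences: predicted-class probabilities in [0, 1]
    correct:     booleans, True where the prediction was right
    n_bins:      number of equal-width bins (assumed; not taken from the paper)
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        raise ValueError("at least one prediction is required")

    # Assign each prediction to a bin; a confidence of exactly 1.0 goes in the last bin.
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue  # empty bins contribute nothing
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / n) * abs(accuracy - mean_conf)
    return ece


# Four predictions at 0.9 confidence, three of them correct:
# accuracy 0.75 against confidence 0.9 gives a gap of about 0.15.
example = expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, True, True, False])
```

A lower value means the reported confidences track observed accuracy more closely. As the abstract notes, different calibration measures can rank models differently, so a single ECE figure should be read alongside other metrics.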

Addressing Deep Learning Model Calibration Using Evidential Neural Networks and Uncertainty-Aware Training

Jan 30, 2023

Abstract: In terms of accuracy, deep learning (DL) models have had considerable success in classification problems for medical imaging applications. However, it is well-known that the outputs of such models, which typically utilise the SoftMax function in the final classification layer, can be over-confident, i.e. they are poorly calibrated. Two competing solutions to this problem have been proposed: uncertainty-aware training and evidential neural networks (ENNs). In this paper, we perform an investigation into the improvements to model calibration that can be achieved by each of these approaches individually, and their combination. We perform experiments on two classification tasks: a simpler MNIST digit classification task and a more complex and realistic medical imaging artefact detection task using Phase Contrast Cardiac Magnetic Resonance images. The experimental results demonstrate that model calibration can suffer when the task becomes challenging enough to require a higher-capacity model. However, in our complex artefact detection task, we saw an improvement in calibration for both a low and higher-capacity model when implementing both the ENN and uncertainty-aware training together, indicating that this approach can offer a promising way to improve calibration in such settings. The findings highlight the potential use of these approaches to improve model calibration in a complex application, which would in turn improve clinician trust in DL models.

Uncertainty-Aware Training for Cardiac Resynchronisation Therapy Response Prediction

Sep 22, 2021

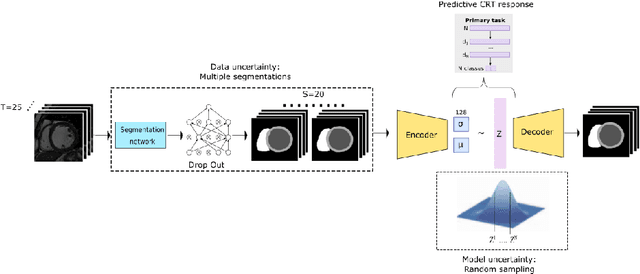

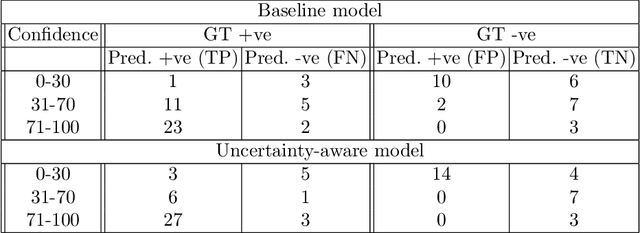

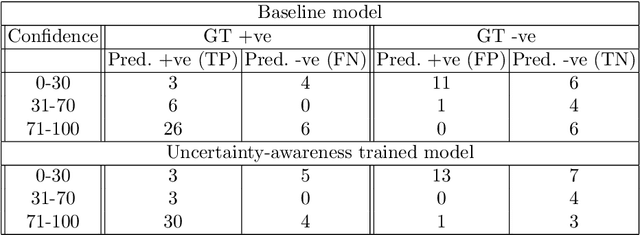

Abstract: Evaluation of predictive deep learning (DL) models beyond conventional performance metrics has become increasingly important for applications in sensitive environments like healthcare. Such models might have the capability to encode and analyse large sets of data but they often lack comprehensive interpretability methods, preventing clinical trust in predictive outcomes. Quantifying uncertainty of a prediction is one way to provide such interpretability and promote trust. However, relatively little attention has been paid to how to include such requirements into the training of the model. In this paper we: (i) quantify the data (aleatoric) and model (epistemic) uncertainty of a DL model for Cardiac Resynchronisation Therapy response prediction from cardiac magnetic resonance images, and (ii) propose and perform a preliminary investigation of an uncertainty-aware loss function that can be used to retrain an existing DL image-based classification model to encourage confidence in correct predictions and reduce confidence in incorrect predictions. Our initial results are promising, showing a significant increase in the (epistemic) confidence of true positive predictions, with some evidence of a reduction in false negative confidence.
