Amanpreet Singh

Physics Informed Convex Artificial Neural Networks (PICANNs) for Optimal Transport based Density Estimation

Apr 02, 2021

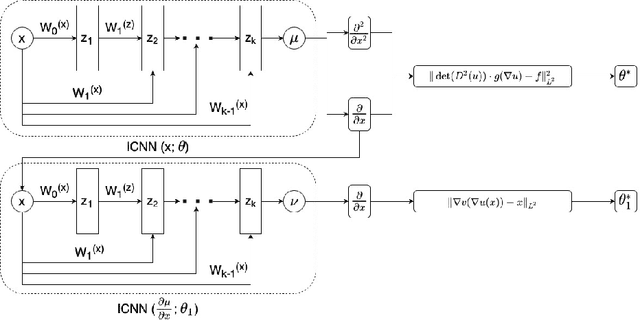

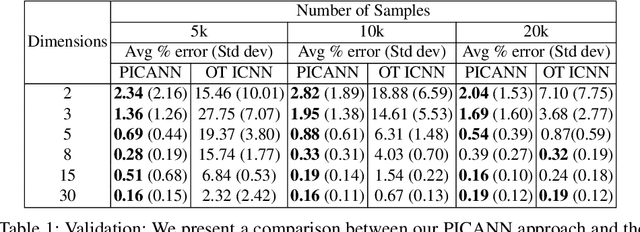

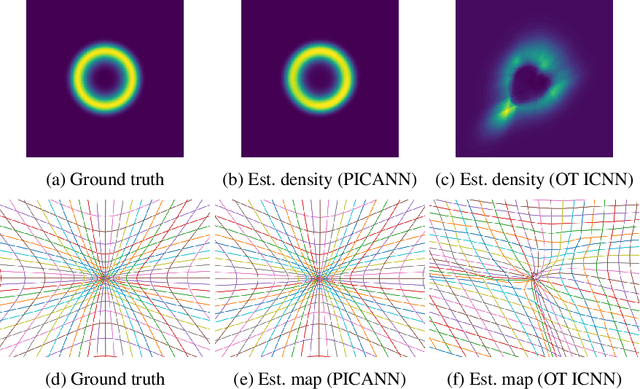

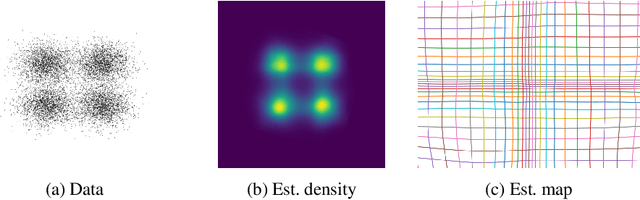

Abstract: Optimal Mass Transport (OMT) is a well-studied problem with a variety of applications in a diverse set of fields ranging from Physics to Computer Vision and in particular Statistics and Data Science. Since the original formulation of Monge in 1781, significant theoretical progress has been made on the existence, uniqueness and properties of the optimal transport maps. The actual numerical computation of the transport maps, particularly in high dimensions, remains a challenging problem. By Brenier's theorem, the continuous OMT problem can be reduced to that of solving a non-linear PDE of Monge-Ampere type whose solution is a convex function. In this paper, building on recent developments of input convex neural networks and physics informed neural networks for solving PDEs, we propose a Deep Learning approach to solve the continuous OMT problem. To demonstrate the versatility of our framework, we focus on the ubiquitous density estimation and generative modeling tasks in statistics and machine learning. Finally, as an example, we show how our framework can be incorporated with an autoencoder to estimate an effective probabilistic generative model.
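
For readers unfamiliar with the reduction the abstract mentions, the following is a sketch in standard notation, not necessarily the paper's own. The symbols are assumptions introduced here: $f$ is the source density, $g$ the target density, $\varphi$ the convex potential, and the cost is taken to be quadratic. The optimal map is the gradient of the potential, and mass conservation gives the Monge-Ampere type equation:

```latex
T = \nabla \varphi, \qquad
\det\!\big(D^2 \varphi(x)\big) = \frac{f(x)}{g\big(\nabla \varphi(x)\big)}, \qquad
\varphi \ \text{convex}.
```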

Transformer is All You Need: Multimodal Multitask Learning with a Unified Transformer

Feb 22, 2021

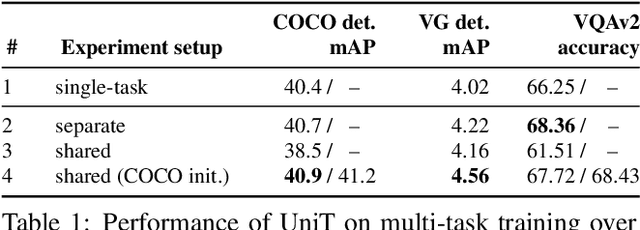

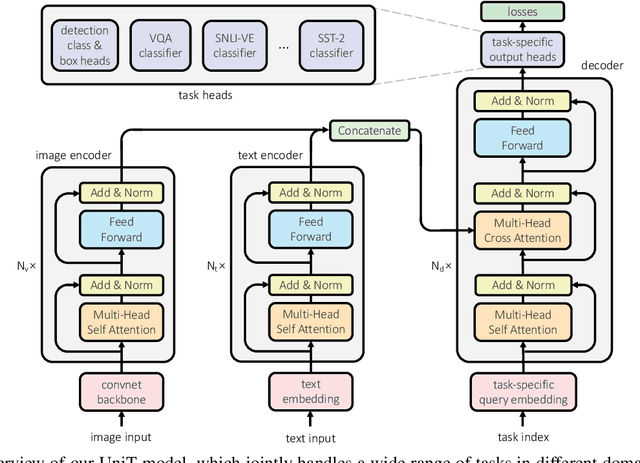

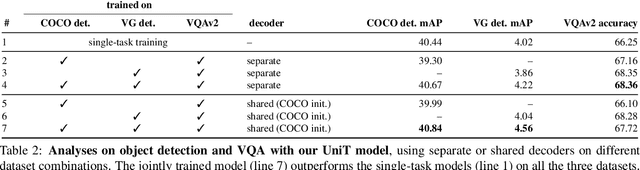

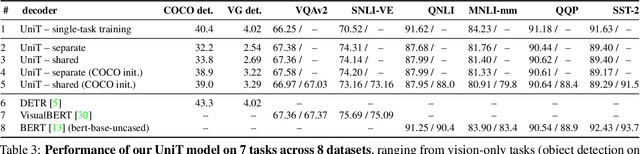

Abstract: We propose UniT, a Unified Transformer model to simultaneously learn the most prominent tasks across different domains, ranging from object detection to language understanding and multimodal reasoning. Based on the transformer encoder-decoder architecture, our UniT model encodes each input modality with an encoder and makes predictions on each task with a shared decoder over the encoded input representations, followed by task-specific output heads. The entire model is jointly trained end-to-end with losses from each task. Compared to previous efforts on multi-task learning with transformers, we share the same model parameters across all tasks instead of separately fine-tuning task-specific models, and we handle a much higher variety of tasks across different domains. In our experiments, we learn 7 tasks jointly over 8 datasets, achieving comparable performance to well-established prior work on each domain under the same supervision with a compact set of model parameters. Code will be released in MMF at https://mmf.sh.

Open4Business(O4B): An Open Access Dataset for Summarizing Business Documents

Nov 29, 2020

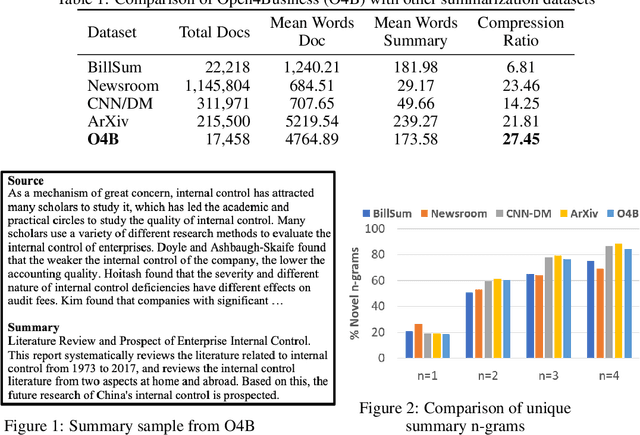

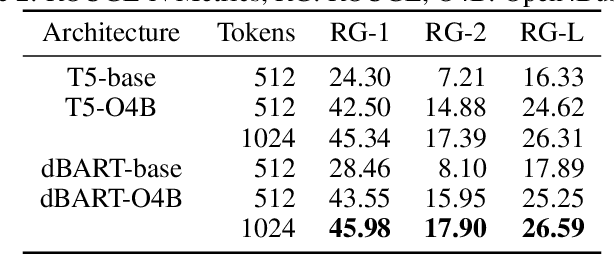

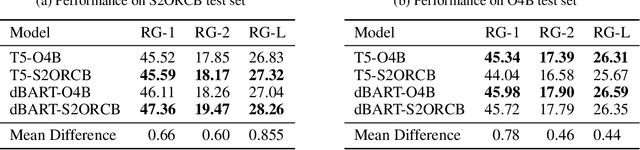

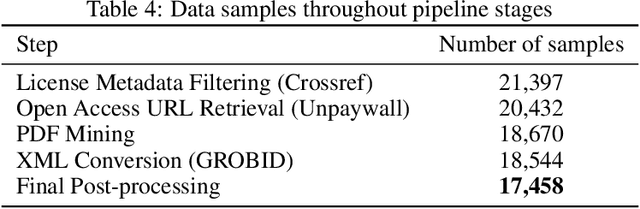

Abstract: A major challenge in fine-tuning deep learning models for automatic summarization is the need for large domain-specific datasets. One of the barriers to curating such data from resources like online publications is navigating the license regulations applicable to their re-use, especially for commercial purposes. As a result, despite the availability of several business journals, there are no large-scale datasets for summarizing business documents. In this work, we introduce Open4Business (O4B), a dataset of 17,458 open access business articles and their reference summaries. The dataset introduces a new challenge for summarization in the business domain, requiring highly abstractive and more concise summaries as compared to other existing datasets. Additionally, we evaluate existing models on it and consequently show that models trained on O4B and a 7x larger non-open access dataset achieve comparable performance on summarization. We release the dataset, along with the code which can be leveraged to similarly gather data for multiple domains.

Seeing the Un-Scene: Learning Amodal Semantic Maps for Room Navigation

Jul 20, 2020

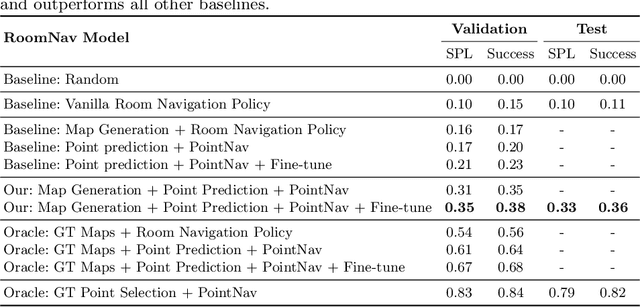

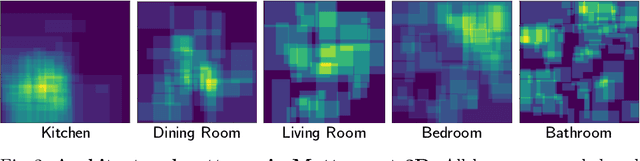

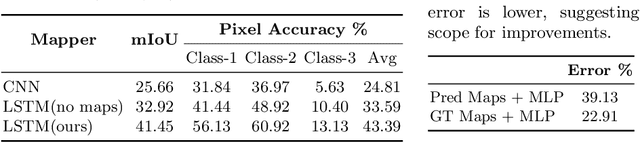

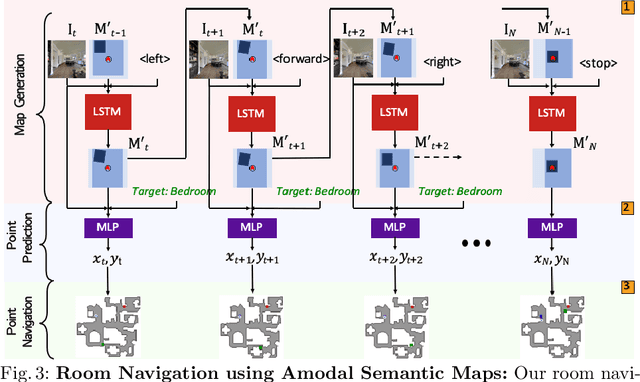

Abstract: We introduce a learning-based approach for room navigation using semantic maps. Our proposed architecture learns to predict top-down belief maps of regions that lie beyond the agent's field of view while modeling architectural and stylistic regularities in houses. First, we train a model to generate amodal semantic top-down maps indicating beliefs of location, size, and shape of rooms by learning the underlying architectural patterns in houses. Next, we use these maps to predict a point that lies in the target room and train a policy to navigate to the point. We empirically demonstrate that by predicting semantic maps, the model learns common correlations found in houses and generalizes to novel environments. We also demonstrate that reducing the task of room navigation to point navigation improves the performance further.

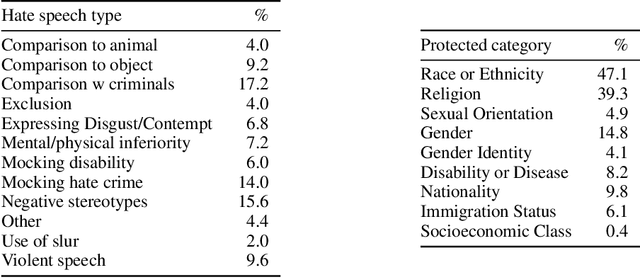

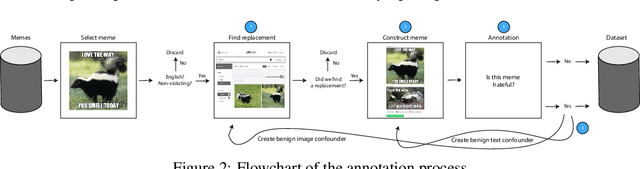

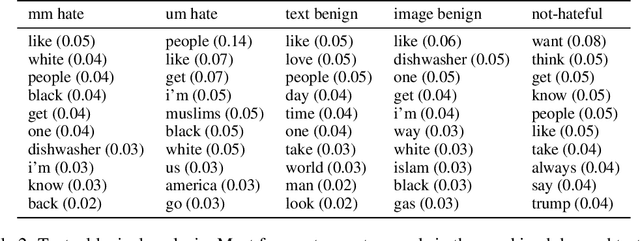

The Hateful Memes Challenge: Detecting Hate Speech in Multimodal Memes

Jun 08, 2020

Abstract: This work proposes a new challenge set for multimodal classification, focusing on detecting hate speech in multimodal memes. It is constructed such that unimodal models struggle and only multimodal models can succeed: difficult examples ("benign confounders") are added to the dataset to make it hard to rely on unimodal signals. The task requires subtle reasoning, yet is straightforward to evaluate as a binary classification problem. We provide baseline performance numbers for unimodal models, as well as for multimodal models with various degrees of sophistication. We find that state-of-the-art methods perform poorly compared to humans (64.73% vs. 84.7% accuracy), illustrating the difficulty of the task and highlighting the challenge that this important problem poses to the community.

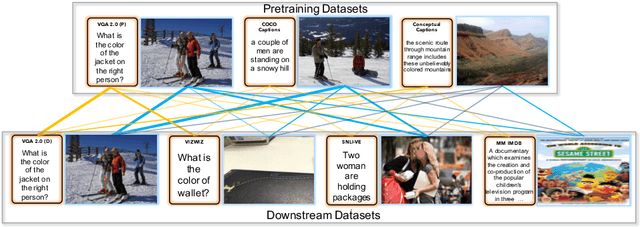

Are we pretraining it right? Digging deeper into visio-linguistic pretraining

Apr 19, 2020

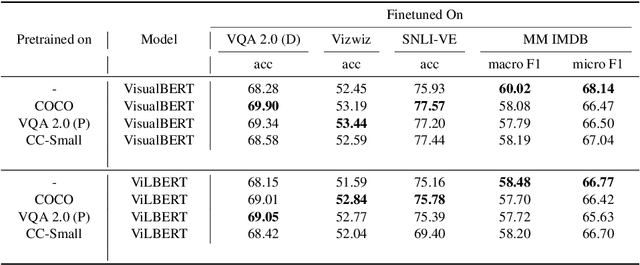

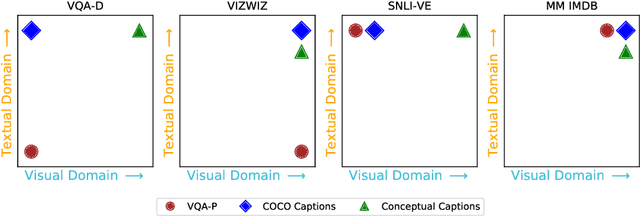

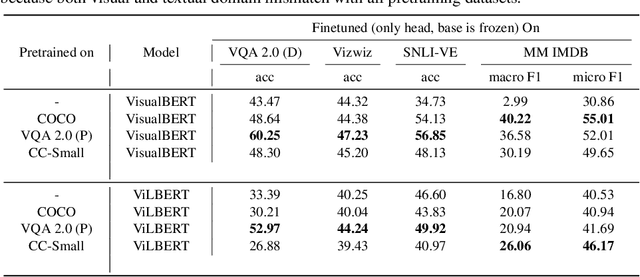

Abstract: Numerous recent works have proposed pretraining generic visio-linguistic representations and then finetuning them for downstream vision and language tasks. While architecture and objective function design choices have received attention, the choice of pretraining datasets has received little attention. In this work, we question some of the default choices made in the literature. For instance, we systematically study how varying similarity between the pretraining dataset domain (textual and visual) and the downstream domain affects performance. Surprisingly, we show that automatically generated data in a domain closer to the downstream task (e.g., VQA v2) is a better choice for pretraining than "natural" data from a slightly different domain (e.g., Conceptual Captions). On the other hand, some seemingly reasonable choices of pretraining datasets were found to be entirely ineffective for some downstream tasks. This suggests that despite the numerous recent efforts, vision & language pretraining does not quite work "out of the box" yet. Overall, as a by-product of our study, we find that simple design choices in pretraining can help us achieve close to state-of-the-art results on downstream tasks without any architectural changes.

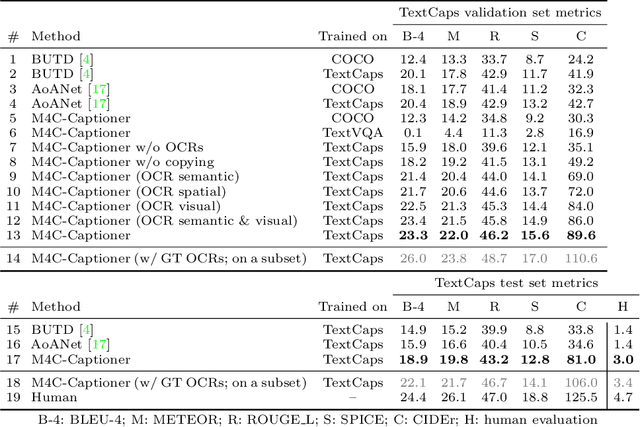

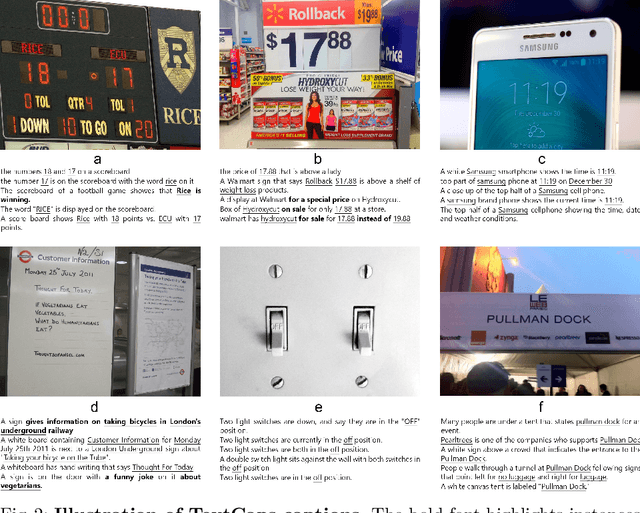

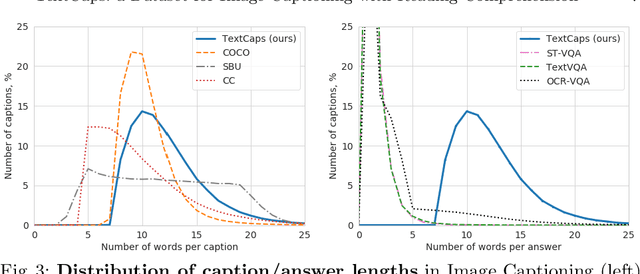

TextCaps: a Dataset for Image Captioning with Reading Comprehension

Mar 24, 2020

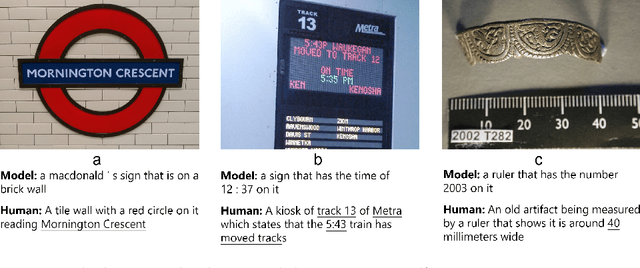

Abstract: Image descriptions can help visually impaired people to quickly understand the image content. While we have made significant progress in automatically describing images and in optical character recognition, current approaches are unable to include written text in their descriptions, although text is omnipresent in human environments and frequently critical to understanding our surroundings. To study how to comprehend text in the context of an image, we collect a novel dataset, TextCaps, with 145k captions for 28k images. Our dataset challenges a model to recognize text, relate it to its visual context, and decide what part of the text to copy or paraphrase, requiring spatial, semantic, and visual reasoning between multiple text tokens and visual entities, such as objects. We study baselines and adapt existing approaches to this new task, which we refer to as image captioning with reading comprehension. Our analysis with automatic and human studies shows that our new TextCaps dataset provides many new technical challenges over previous datasets.

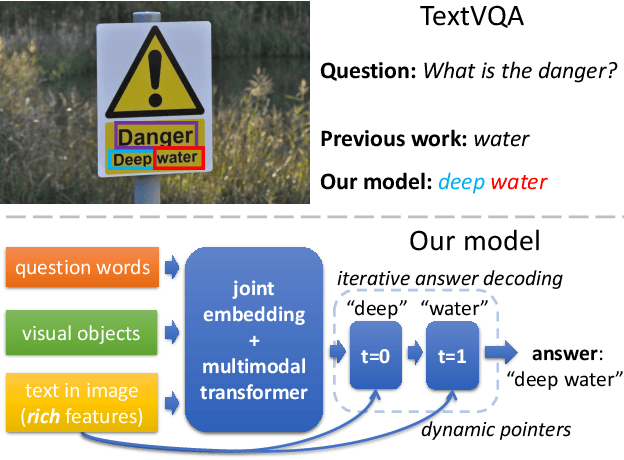

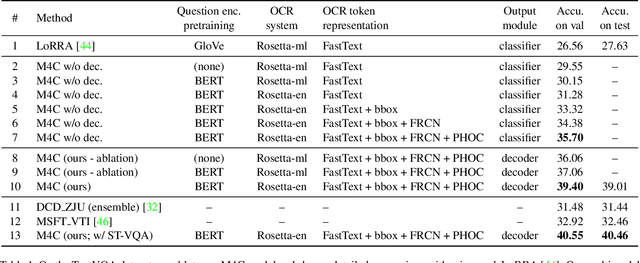

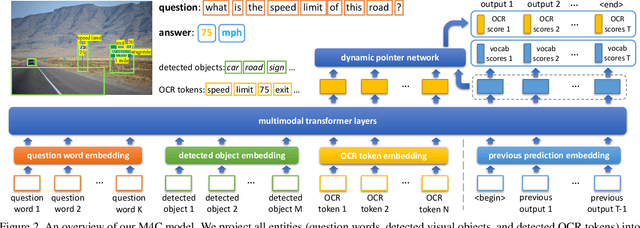

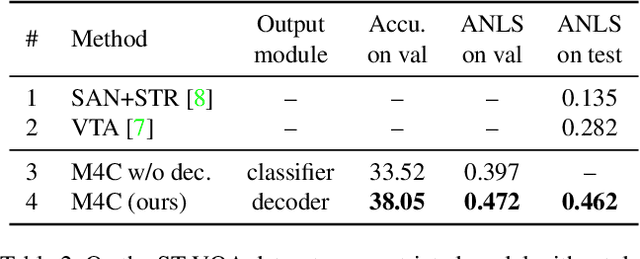

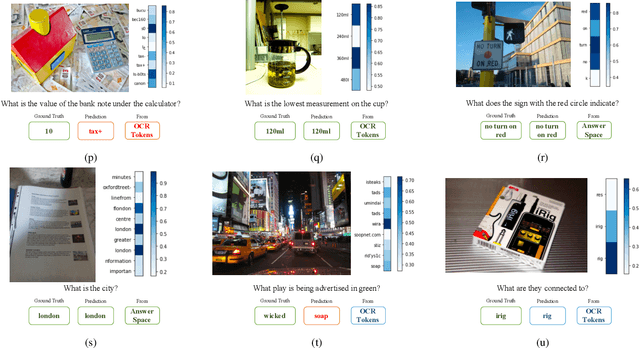

Iterative Answer Prediction with Pointer-Augmented Multimodal Transformers for TextVQA

Dec 05, 2019

Abstract: Many visual scenes contain text that carries crucial information, and it is thus essential to understand text in images for downstream reasoning tasks. For example, a deep water label on a warning sign warns people about the danger in the scene. Recent work has explored the TextVQA task that requires reading and understanding text in images to answer a question. However, existing approaches for TextVQA are mostly based on custom pairwise fusion mechanisms between a pair of modalities and are restricted to a single prediction step by casting TextVQA as a classification task. In this work, we propose a novel model for the TextVQA task based on a multimodal transformer architecture accompanied by a rich representation for text in images. Our model naturally fuses different modalities homogeneously by embedding them into a common semantic space where self-attention is applied to model inter- and intra-modality context. Furthermore, it enables iterative answer decoding with a dynamic pointer network, allowing the model to form an answer through multi-step prediction instead of one-step classification. Our model outperforms existing approaches on three benchmark datasets for the TextVQA task by a large margin.
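
The multi-step decoding idea can be illustrated with a toy sketch. This is not the paper's model: `decode_answer`, `toy_score_fn`, the `<end>` marker, and the example tokens are all hypothetical names introduced here, and a hand-written scoring function stands in for the transformer and pointer network. At each step the decoder picks the best-scoring candidate from a fixed vocabulary plus the OCR tokens found in the image, and stops when it selects the end marker.

```python
from typing import Callable, List, Sequence

END = "<end>"  # hypothetical end-of-answer marker


def decode_answer(
    score_fn: Callable[[List[str], List[str]], Sequence[float]],
    vocab: Sequence[str],
    ocr_tokens: Sequence[str],
    max_steps: int = 12,
) -> List[str]:
    """Greedy multi-step decoding over fixed vocabulary plus OCR tokens."""
    # Candidates are the fixed vocabulary followed by the image's OCR tokens,
    # so an answer word can be either generated or copied from the image.
    candidates = list(vocab) + list(ocr_tokens)
    answer: List[str] = []
    for _ in range(max_steps):
        scores = score_fn(answer, candidates)
        best = max(range(len(candidates)), key=lambda i: scores[i])
        token = candidates[best]
        if token == END:
            break
        answer.append(token)
    return answer


def toy_score_fn(prefix: List[str], candidates: List[str]) -> List[float]:
    """Stand-in scorer (an assumption): always prefers a scripted answer."""
    target = ["deep", "water", END]
    want = target[min(len(prefix), len(target) - 1)]
    return [1.0 if c == want else 0.0 for c in candidates]


vocab = ["yes", "no", END]
ocr_tokens = ["deep", "water"]
result = decode_answer(toy_score_fn, vocab, ocr_tokens)
```

With the scripted scorer, `result` is built one token per step from the OCR tokens. A one-step classifier over `vocab` alone could not produce it, because neither word is in the fixed vocabulary.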

Towards VQA Models That Can Read

May 13, 2019

Abstract: Studies have shown that a dominant class of questions asked by visually impaired users on images of their surroundings involves reading text in the image. But today's VQA models cannot read! Our paper takes a first step towards addressing this problem. First, we introduce a new "TextVQA" dataset to facilitate progress on this important problem. Existing datasets either have a small proportion of questions about text (e.g., the VQA dataset) or are too small (e.g., the VizWiz dataset). TextVQA contains 45,336 questions on 28,408 images that require reasoning about text to answer. Second, we introduce a novel model architecture that reads text in the image, reasons about it in the context of the image and the question, and predicts an answer which might be a deduction based on the text and the image or composed of the strings found in the image. Consequently, we call our approach Look, Read, Reason & Answer (LoRRA). We show that LoRRA outperforms existing state-of-the-art VQA models on our TextVQA dataset. We find that the gap between human performance and machine performance is significantly larger on TextVQA than on VQA 2.0, suggesting that TextVQA is well-suited to benchmark progress along directions complementary to VQA 2.0.

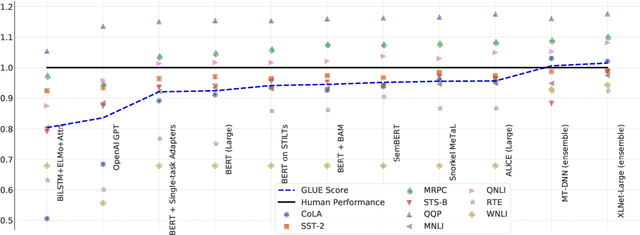

SuperGLUE: A Stickier Benchmark for General-Purpose Language Understanding Systems

May 02, 2019

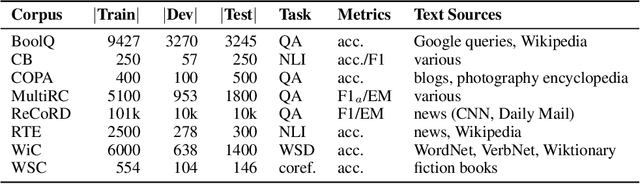

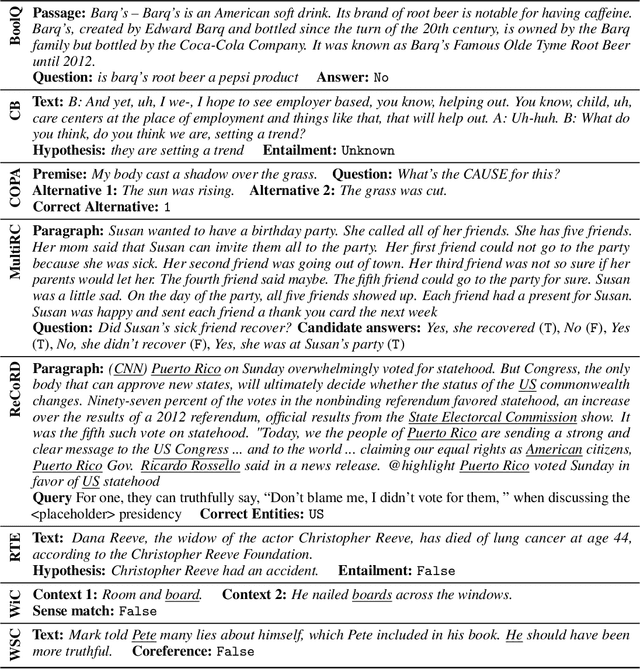

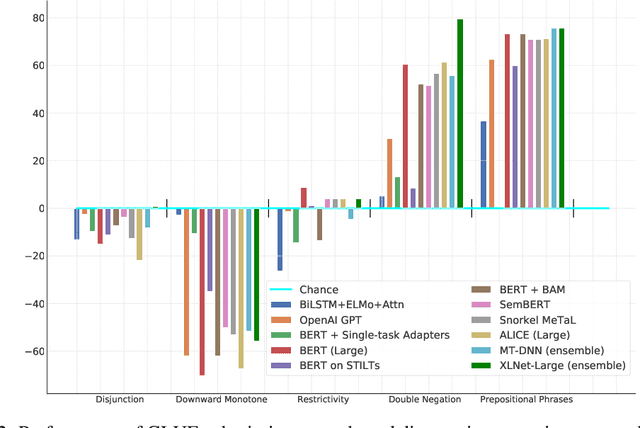

Abstract: In the last year, new models and methods for pretraining and transfer learning have driven striking performance improvements across a range of language understanding tasks. The GLUE benchmark, introduced one year ago, offers a single-number metric that summarizes progress on a diverse set of such tasks, but performance on the benchmark has recently come close to the level of non-expert humans, suggesting limited headroom for further research. This paper recaps lessons learned from the GLUE benchmark and presents SuperGLUE, a new benchmark styled after GLUE with a new set of more difficult language understanding tasks, improved resources, and a new public leaderboard. SuperGLUE will be available soon at super.gluebenchmark.com.
