speech recognition

Speech recognition is the task of identifying words spoken aloud, analyzing the voice and language, and accurately transcribing the words.

Papers and Code

Enhancing Fully Formatted End-to-End Speech Recognition with Knowledge Distillation via Multi-Codebook Vector Quantization

Dec 22, 2025

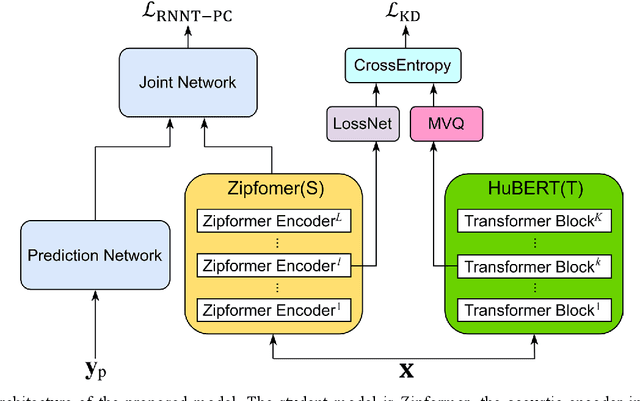

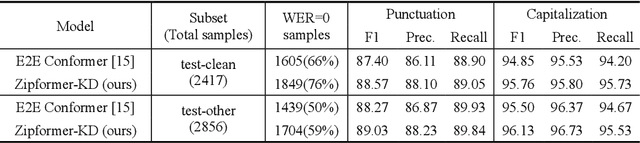

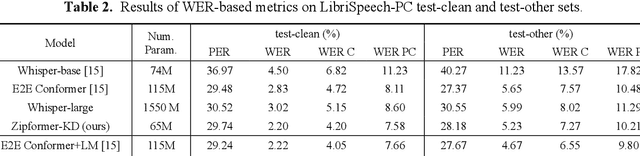

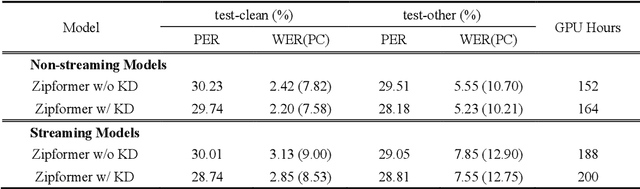

Conventional automatic speech recognition (ASR) models typically produce outputs as normalized texts lacking punctuation and capitalization, necessitating post-processing models to enhance readability. This approach, however, introduces additional complexity and latency due to the cascaded system design. In response to this challenge, there is a growing trend to develop end-to-end (E2E) ASR models capable of directly predicting punctuation and capitalization, though this area remains underexplored. In this paper, we propose an enhanced fully formatted E2E ASR model that leverages knowledge distillation (KD) through multi-codebook vector quantization (MVQ). Experimental results demonstrate that our model significantly outperforms previous works in word error rate (WER) both with and without punctuation and capitalization, and in punctuation error rate (PER). Evaluations on the LibriSpeech-PC test-clean and test-other subsets show that our model achieves state-of-the-art results.
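
The abstract reports WER both with and without punctuation and capitalization. The sketch below illustrates how those two scores can differ on the same hypothesis. The `normalize` helper and the example sentences are assumptions made for illustration; they are not the paper's evaluation script.

```python
import string


def edit_distance(ref, hyp):
    """Word-level Levenshtein distance between two token lists."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate: edit distance divided by reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref, hyp) / len(ref)


def normalize(text):
    """Lowercase and strip ASCII punctuation (a simplifying assumption)."""
    return text.lower().translate(str.maketrans("", "", string.punctuation))


# Hypothetical reference/hypothesis pair.
reference = "Hello, world. How are you?"
hypothesis = "hello world. How are you"

formatted_wer = wer(reference, hypothesis)
normalized_wer = wer(normalize(reference), normalize(hypothesis))
print(formatted_wer, normalized_wer)
```

On formatted text, a missing comma or a lowercase first letter counts as a word substitution, so the formatted WER is never lower than the normalized WER for the same pair.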

VALLR-Pin: Dual-Decoding Visual Speech Recognition for Mandarin with Pinyin-Guided LLM Refinement

Dec 23, 2025

Visual Speech Recognition aims to transcribe spoken words from silent lip-motion videos. This task is particularly challenging for Mandarin, as visemes are highly ambiguous and homophones are prevalent. We propose VALLR-Pin, a novel two-stage framework that extends the recent VALLR architecture from English to Mandarin. First, a shared video encoder feeds into dual decoders, which jointly predict both Chinese character sequences and their standard Pinyin romanization. The multi-task learning of character and phonetic outputs fosters robust visual-semantic representations. During inference, the text decoder generates multiple candidate transcripts. We construct a prompt by concatenating the Pinyin output with these candidate Chinese sequences and feed it to a large language model to resolve ambiguities and refine the transcription. This provides the LLM with explicit phonetic context to correct homophone-induced errors. Finally, we fine-tune the LLM on synthetic noisy examples: we generate imperfect Pinyin-text pairs from intermediate VALLR-Pin checkpoints using the training data, creating instruction-response pairs for error correction. This endows the LLM with awareness of our model's specific error patterns. In summary, VALLR-Pin synergizes visual features with phonetic and linguistic context to improve Mandarin lip-reading performance.
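
The prompt-construction step described above (Pinyin output concatenated with candidate transcripts) might look roughly like the following. The function name, the prompt wording, and the example strings are all hypothetical; the abstract does not give the actual template.

```python
def build_refinement_prompt(pinyin, candidates):
    """Join the predicted Pinyin with N candidate transcripts into one prompt.

    The layout and instruction text are illustrative assumptions only.
    """
    lines = ["Pinyin: " + pinyin, "Candidate transcripts:"]
    lines += [f"{i}. {c}" for i, c in enumerate(candidates, start=1)]
    lines.append("Output the corrected Chinese transcript.")
    return "\n".join(lines)


# Hypothetical decoder outputs: two homophone-confusable candidates.
prompt = build_refinement_prompt("ta zai kan shu", ["他在看书", "她在看树"])
print(prompt)
```

The resulting string would then be sent to whichever LLM is used for refinement; that call is omitted here because the abstract does not name a specific model or API.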

PROFASR-BENCH: A Benchmark for Context-Conditioned ASR in High-Stakes Professional Speech

Dec 29, 2025

Automatic Speech Recognition (ASR) in professional settings faces challenges that existing benchmarks underplay: dense domain terminology, formal register variation, and near-zero tolerance for critical entity errors. We present ProfASR-Bench, a professional-talk evaluation suite for high-stakes applications across finance, medicine, legal, and technology. Each example pairs a natural-language prompt (domain cue and/or speaker profile) with an entity-rich target utterance, enabling controlled measurement of context-conditioned recognition. The corpus supports conventional ASR metrics alongside entity-aware scores and slice-wise reporting by accent and gender. Using representative families Whisper (encoder-decoder ASR) and Qwen-Omni (audio language models) under matched no-context, profile, domain+profile, oracle, and adversarial conditions, we find a consistent pattern: lightweight textual context produces little to no change in average word error rate (WER), even with oracle prompts, and adversarial prompts do not reliably degrade performance. We term this the context-utilization gap (CUG): current systems are nominally promptable yet underuse readily available side information. ProfASR-Bench provides a standardized context ladder, entity- and slice-aware reporting with confidence intervals, and a reproducible testbed for comparing fusion strategies across model families. Dataset: https://huggingface.co/datasets/prdeepakbabu/ProfASR-Bench Code: https://github.com/prdeepakbabu/ProfASR-Bench

TICL+: A Case Study On Speech In-Context Learning for Children's Speech Recognition

Dec 20, 2025

Children's speech recognition remains challenging due to substantial acoustic and linguistic variability, limited labeled data, and significant differences from adult speech. Speech foundation models can address these challenges through Speech In-Context Learning (SICL), allowing adaptation to new domains without fine-tuning. However, the effectiveness of SICL depends on how in-context examples are selected. We extend an existing retrieval-based method, Text-Embedding KNN for SICL (TICL), introducing an acoustic reranking step to create TICL+. This extension prioritizes examples that are both semantically and acoustically aligned with the test input. Experiments on four children's speech corpora show that TICL+ achieves up to a 53.3% relative word error rate reduction over zero-shot performance and 37.6% over baseline TICL, highlighting the value of combining semantic and acoustic information for robust, scalable ASR in children's speech.
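
The two-stage selection described above (text-embedding KNN followed by acoustic reranking) can be sketched as below. This is a toy illustration under stated assumptions: the embeddings are made-up 2-D vectors, cosine similarity is assumed for both stages, and the source of the query's text embedding (for example a first-pass hypothesis) is not specified by the abstract.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def select_examples(query, pool, k_text=3, k_final=2):
    """Shortlist by text similarity, then rerank the shortlist acoustically."""
    # Stage 1: KNN over text embeddings keeps semantically close examples.
    shortlist = sorted(
        pool,
        key=lambda ex: cosine(query["text_emb"], ex["text_emb"]),
        reverse=True,
    )[:k_text]
    # Stage 2: rerank only the shortlist by acoustic similarity.
    reranked = sorted(
        shortlist,
        key=lambda ex: cosine(query["audio_emb"], ex["audio_emb"]),
        reverse=True,
    )
    return [ex["id"] for ex in reranked[:k_final]]


# Hypothetical candidate pool and query.
pool = [
    {"id": "a", "text_emb": [1.0, 0.0], "audio_emb": [0.0, 1.0]},
    {"id": "b", "text_emb": [0.9, 0.1], "audio_emb": [1.0, 0.0]},
    {"id": "c", "text_emb": [0.8, 0.2], "audio_emb": [0.7, 0.7]},
    {"id": "d", "text_emb": [0.0, 1.0], "audio_emb": [1.0, 0.0]},
]
query = {"text_emb": [1.0, 0.0], "audio_emb": [1.0, 0.0]}

selected = select_examples(query, pool)
print(selected)
```

In this toy pool, example `d` matches the query acoustically but is dropped in stage 1 because it is not semantically close, while `a` survives stage 1 but is ranked last in stage 2.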

Phoneme-based speech recognition driven by large language models and sampling marginalization

Dec 20, 2025

Recently, the Large Language Model-based Phoneme-to-Grapheme (LLM-P2G) method has shown excellent performance in speech recognition tasks and has become a feasible direction to replace the traditional WFST decoding method. This framework takes into account both recognition accuracy and system scalability through two-stage modeling of phoneme prediction and text generation. However, the existing LLM-P2G adopts the Top-K Marginalized (TKM) training strategy, and its candidate phoneme sequences rely on beam search generation, which has problems such as insufficient path diversity, low training efficiency, and high resource overhead. To this end, this paper proposes a sampling marginalized training strategy (Sampling-K Marginalized, SKM), which replaces beam search with random sampling to generate candidate paths, improving marginalized modeling and training efficiency. Experiments were conducted on Polish and German datasets, and the results showed that SKM further improved the model learning convergence speed and recognition performance while maintaining the complexity of the model. Comparative experiments with a speech recognition method that uses a projector combined with a large language model (SpeechLLM) also show that the SKM-driven LLM-P2G has more advantages in recognition accuracy and structural simplicity. The study verified the practical value and application potential of this method in cross-language speech recognition systems.

Contextual Biasing for LLM-Based ASR with Hotword Retrieval and Reinforcement Learning

Dec 26, 2025

Large language model (LLM)-based automatic speech recognition (ASR) has recently achieved strong performance across diverse tasks, yet contextual biasing for named entities and hotwords under large vocabularies remains challenging. In this work, we propose a scalable two-stage framework that integrates hotword retrieval with LLM-ASR adaptation. First, we extend the Global-Local Contrastive Language-Audio pre-trained model (GLCLAP) to retrieve a compact top-k set of hotword candidates from a large vocabulary via robustness-aware data augmentation and fuzzy matching. Second, we inject the retrieved candidates as textual prompts into an LLM-ASR model and fine-tune it with Generative Rejection-Based Policy Optimization (GRPO), using a task-driven reward that jointly optimizes hotword recognition and overall transcription accuracy. Experiments on hotword-focused test sets show substantial keyword error rate (KER) reductions while maintaining sentence accuracy on general ASR benchmarks, demonstrating the effectiveness of the proposed framework for large-vocabulary contextual biasing.

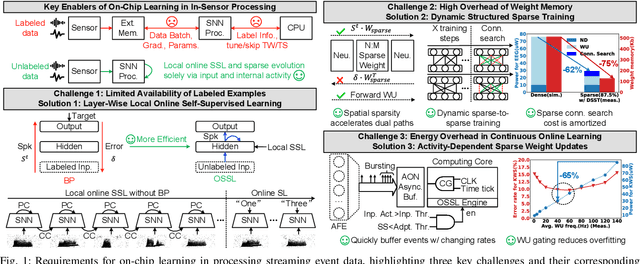

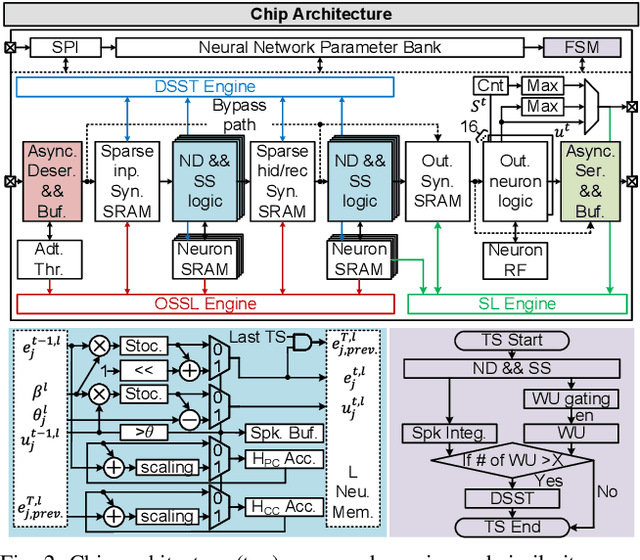

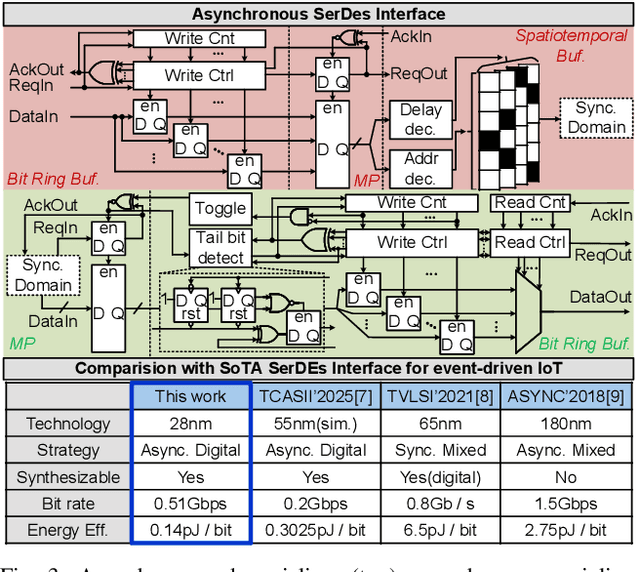

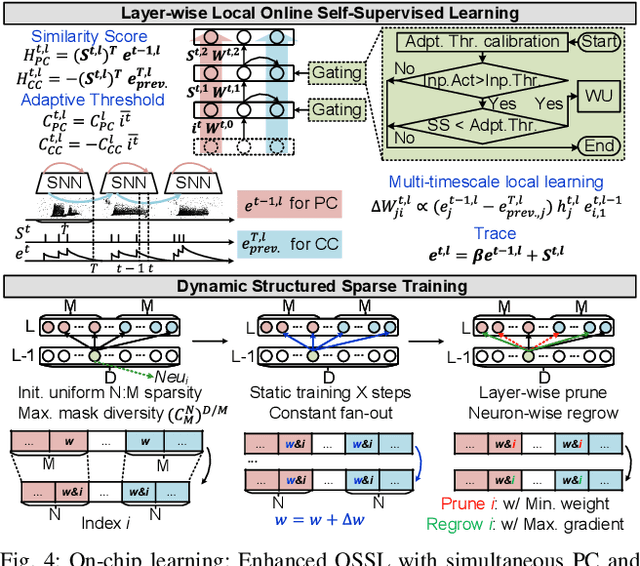

ElfCore: A 28nm Neural Processor Enabling Dynamic Structured Sparse Training and Online Self-Supervised Learning with Activity-Dependent Weight Update

Dec 24, 2025

In this paper, we present ElfCore, a 28nm digital spiking neural network processor tailored for event-driven sensory signal processing. ElfCore is the first to efficiently integrate: (1) a local online self-supervised learning engine that enables multi-layer temporal learning without labeled inputs; (2) a dynamic structured sparse training engine that supports high-accuracy sparse-to-sparse learning; and (3) an activity-dependent sparse weight update mechanism that selectively updates weights based solely on input activity and network dynamics. Demonstrated on tasks including gesture recognition, speech, and biomedical signal processing, ElfCore outperforms state-of-the-art solutions with up to 16X lower power consumption, 3.8X reduced on-chip memory requirements, and 5.9X greater network capacity efficiency.

* This paper has been published in the proceedings of the 2025 IEEE European Solid-State Electronics Research Conference (ESSERC)

Explainable Transformer-CNN Fusion for Noise-Robust Speech Emotion Recognition

Dec 20, 2025

Speech Emotion Recognition (SER) systems often degrade in performance when exposed to the unpredictable acoustic interference found in real-world environments. Additionally, the opacity of deep learning models hinders their adoption in trust-sensitive applications. To bridge this gap, we propose a Hybrid Transformer-CNN framework that unifies the contextual modeling of Wav2Vec 2.0 with the spectral stability of 1D-Convolutional Neural Networks. Our dual-stream architecture processes raw waveforms to capture long-range temporal dependencies while simultaneously extracting noise-resistant spectral features (MFCC, ZCR, RMSE) via a custom Attentive Temporal Pooling mechanism. We conducted extensive validation across four diverse benchmark datasets: RAVDESS, TESS, SAVEE, and CREMA-D. To rigorously test robustness, we subjected the model to non-stationary acoustic interference using real-world noise profiles from the SAS-KIIT dataset. The proposed framework demonstrates superior generalization and state-of-the-art accuracy across all datasets, significantly outperforming single-branch baselines under realistic environmental interference. Furthermore, we address the "black-box" problem by integrating SHAP and Score-CAM into the evaluation pipeline. These tools provide granular visual explanations, revealing how the model strategically shifts attention between temporal and spectral cues to maintain reliability in the presence of complex environmental noise.

Zero-Shot Recognition of Dysarthric Speech Using Commercial Automatic Speech Recognition and Multimodal Large Language Models

Dec 19, 2025

Voice-based human-machine interaction is a primary modality for accessing intelligent systems, yet individuals with dysarthria face systematic exclusion due to recognition performance gaps. Whilst automatic speech recognition (ASR) achieves word error rates (WER) below 5% on typical speech, performance degrades dramatically for dysarthric speakers. Multimodal large language models (MLLMs) offer potential for leveraging contextual reasoning to compensate for acoustic degradation, yet their zero-shot capabilities remain uncharacterised. This study evaluates eight commercial speech-to-text services on the TORGO dysarthric speech corpus: four conventional ASR systems (AssemblyAI, Whisper large-v3, Deepgram Nova-3, Nova-3 Medical) and four MLLM-based systems (GPT-4o, GPT-4o Mini, Gemini 2.5 Pro, Gemini 2.5 Flash). Evaluation encompasses lexical accuracy, semantic preservation, and cost-latency trade-offs. Results demonstrate severity-dependent degradation: mild dysarthria achieves 3-5% WER approaching typical-speech benchmarks, whilst severe dysarthria exceeds 49% WER across all systems. A verbatim-transcription prompt yields architecture-specific effects: GPT-4o achieves 7.36 percentage point WER reduction with consistent improvement across all tested speakers, whilst Gemini variants exhibit degradation. Semantic metrics indicate that communicative intent remains partially recoverable despite elevated lexical error rates. These findings establish empirical baselines enabling evidence-based technology selection for assistive voice interface deployment.
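
The abstract states the GPT-4o improvement in percentage points, which is an absolute difference and not a relative one. The short sketch below contrasts the two; the baseline and prompted WER values are hypothetical and chosen only so that the absolute gap equals 7.36 points.

```python
def absolute_reduction_pp(baseline_wer, new_wer):
    """Absolute WER reduction in percentage points (inputs in percent)."""
    return baseline_wer - new_wer


def relative_reduction_pct(baseline_wer, new_wer):
    """Relative WER reduction as a percentage of the baseline WER."""
    return 100.0 * (baseline_wer - new_wer) / baseline_wer


# Hypothetical WERs in percent; not taken from the paper.
baseline, prompted = 30.0, 22.64

abs_pp = absolute_reduction_pp(baseline, prompted)
rel_pct = relative_reduction_pct(baseline, prompted)
print(f"{abs_pp:.2f} points absolute, {rel_pct:.1f}% relative")
```

The same absolute gap corresponds to a larger relative reduction when the baseline WER is lower, which is why the two figures should not be compared directly across papers.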

Quantifying Quanvolutional Neural Networks Robustness for Speech in Healthcare Applications

Jan 05, 2026

Speech-based machine learning systems are sensitive to noise, complicating reliable deployment in emotion recognition and voice pathology detection. We evaluate the robustness of a hybrid quantum machine learning model, quanvolutional neural networks (QNNs), against classical convolutional neural networks (CNNs) under four acoustic corruptions (Gaussian noise, pitch shift, temporal shift, and speed variation) in a clean-train/corrupted-test regime. Using AVFAD (voice pathology) and TESS (speech emotion), we compare three QNN models (Random, Basic, Strongly) to a simple CNN baseline (CNN-Base), ResNet-18 and VGG-16 using accuracy and corruption metrics (CE, mCE, RCE, RmCE), and analyze architectural factors (circuit complexity or depth, convergence) alongside per-emotion robustness. QNNs generally outperform the CNN-Base under pitch shift, temporal shift, and speed variation (up to 22% lower CE/RCE at severe temporal shift), while the CNN-Base remains more resilient to Gaussian noise. Among quantum circuits, QNN-Basic achieves the best overall robustness on AVFAD, and QNN-Random performs strongest on TESS. Emotion-wise, fear is most robust (80-90% accuracy under severe corruptions), neutral can collapse under strong Gaussian noise (5.5% accuracy), and happy is most vulnerable to pitch, temporal, and speed distortions. QNNs also converge up to six times faster than the CNN-Base. To our knowledge, this is a systematic study of QNN robustness for speech under common non-adversarial acoustic corruptions, indicating that shallow entangling quantum front-ends can improve noise resilience while sensitivity to additive noise remains a challenge.
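
The abstract names the corruption metrics CE, mCE, RCE, and RmCE without defining them. The sketch below assumes the definitions commonly used in corruption-robustness benchmarks, where a model's error is summed over severity levels and normalized by a reference model's error (taken here to be CNN-Base). All error rates are hypothetical, and the paper may define the metrics differently.

```python
def corruption_error(model_err, baseline_err):
    """CE for one corruption: model error summed over severities,
    divided by the baseline's summed error."""
    return sum(model_err) / sum(baseline_err)


def relative_corruption_error(model_err, model_clean, baseline_err, baseline_clean):
    """RCE: like CE, but measured as degradation from each model's clean error."""
    num = sum(e - model_clean for e in model_err)
    den = sum(e - baseline_clean for e in baseline_err)
    return num / den


def mean_over_corruptions(values):
    """mCE or RmCE: average of per-corruption scores."""
    values = list(values)
    return sum(values) / len(values)


# Hypothetical error rates at two severity levels per corruption.
model = {"gaussian_noise": [0.30, 0.50], "pitch_shift": [0.10, 0.20]}
baseline = {"gaussian_noise": [0.20, 0.40], "pitch_shift": [0.20, 0.40]}
model_clean, baseline_clean = 0.05, 0.10

ce = {c: corruption_error(model[c], baseline[c]) for c in model}
rce = {
    c: relative_corruption_error(model[c], model_clean, baseline[c], baseline_clean)
    for c in model
}
mce = mean_over_corruptions(ce.values())
rmce = mean_over_corruptions(rce.values())
print(ce, mce, rce, rmce)
```

Under these assumed definitions, a value below 1 means the model is more robust than the baseline on that corruption, and a value above 1 means it is less robust.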
