Sentiment Analysis

Sentiment analysis is the process of determining the sentiment of a piece of text, such as a tweet or a review.

Papers and Code

CLiVR: Conversational Learning System in Virtual Reality with AI-Powered Patients

Oct 21, 2025

Simulations constitute a fundamental component of medical and nursing education and traditionally employ standardized patients (SP) and high-fidelity manikins to develop clinical reasoning and communication skills. However, these methods require substantial resources, limiting accessibility and scalability. In this study, we introduce CLiVR, a Conversational Learning system in Virtual Reality that integrates large language models (LLMs), speech processing, and 3D avatars to simulate realistic doctor-patient interactions. Developed in Unity and deployed on the Meta Quest 3 platform, CLiVR enables trainees to engage in natural dialogue with virtual patients. Each simulation is dynamically generated from a syndrome-symptom database and enhanced with sentiment analysis to provide feedback on communication tone. Through an expert user study involving medical school faculty (n=13), we assessed usability, realism, and perceived educational impact. Results demonstrated strong user acceptance, high confidence in educational potential, and valuable feedback for improvement. CLiVR offers a scalable, immersive supplement to SP-based training.

Divide, Cache, Conquer: Dichotomic Prompting for Efficient Multi-Label LLM-Based Classification

Nov 05, 2025

We introduce a method for efficient multi-label text classification with large language models (LLMs), built on reformulating classification tasks as sequences of dichotomic (yes/no) decisions. Instead of generating all labels in a single structured response, each target dimension is queried independently, which, combined with a prefix caching mechanism, yields substantial efficiency gains for short-text inference without loss of accuracy. To demonstrate the approach, we focus on affective text analysis, covering 24 dimensions including emotions and sentiment. Using LLM-to-SLM distillation, a powerful annotator model (DeepSeek-V3) provides multiple annotations per text, which are aggregated to fine-tune smaller models (HerBERT-Large, CLARIN-1B, PLLuM-8B, Gemma3-1B). The fine-tuned models show significant improvements over zero-shot baselines, particularly on the dimensions seen during training. Our findings suggest that decomposing multi-label classification into dichotomic queries, combined with distillation and cache-aware inference, offers a scalable and effective framework for LLM-based classification. While we validate the method on affective states, the approach is general and applicable across domains.
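The decomposition described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the prompt wording, the function names, and the `keyword_stub` stand-in for a model call are all assumptions made for illustration. The point it shows is that every per-label prompt begins with the same prefix (the instruction and the input text), which is what would let a prefix cache reuse work across the yes/no queries.

```python
from typing import Callable, Dict, List

# Hypothetical prompt pieces; the paper's actual wording is not given in the abstract.
INSTRUCTION = "\nAnswer with yes or no.\n"
QUESTION_HEAD = "Does the text express "


def build_prompts(text: str, labels: List[str]) -> Dict[str, str]:
    """Build one yes/no prompt per label; all prompts share the same prefix."""
    prefix = f"Text: {text}{INSTRUCTION}"
    return {label: f"{prefix}{QUESTION_HEAD}{label}?" for label in labels}


def classify(text: str, labels: List[str], ask: Callable[[str], str]) -> Dict[str, bool]:
    """Query each label independently and parse the answer as a boolean."""
    prompts = build_prompts(text, labels)
    return {
        label: ask(prompt).strip().lower().startswith("yes")
        for label, prompt in prompts.items()
    }


def keyword_stub(prompt: str) -> str:
    """Stand-in for an LLM call (assumption): says 'yes' if the label word occurs in the text."""
    text_part, question = prompt.rsplit(INSTRUCTION, 1)
    label = question[len(QUESTION_HEAD):-1]  # drop the question head and trailing '?'
    return "yes" if label in text_part.lower() else "no"


if __name__ == "__main__":
    print(classify("I feel joy and a bit of fear", ["joy", "anger", "fear"], keyword_stub))
```

In a real setup `ask` would wrap a model endpoint; whether the shared prefix is actually cached depends on the serving stack, which this sketch does not model.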

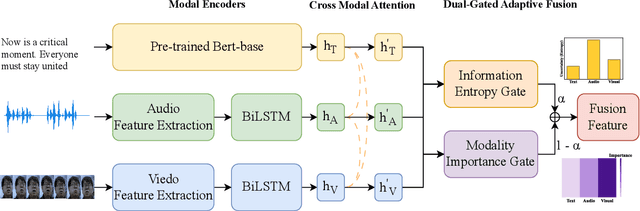

Beyond Simple Fusion: Adaptive Gated Fusion for Robust Multimodal Sentiment Analysis

Oct 02, 2025

Multimodal sentiment analysis (MSA) leverages information fusion from diverse modalities (e.g., text, audio, visual) to enhance sentiment prediction. However, simple fusion techniques often fail to account for variations in modality quality, such as those that are noisy, missing, or semantically conflicting. This oversight leads to suboptimal performance, especially in discerning subtle emotional nuances. To mitigate this limitation, we introduce a simple yet efficient Adaptive Gated Fusion Network (AGFN) that adaptively adjusts feature weights via a dual gate fusion mechanism based on information entropy and modality importance. This mechanism mitigates the influence of noisy modalities and prioritizes informative cues following unimodal encoding and cross-modal interaction. Experiments on CMU-MOSI and CMU-MOSEI show that AGFN significantly outperforms strong baselines in accuracy, effectively discerning subtle emotions with robust performance. Visualization analysis of feature representations demonstrates that AGFN enhances generalization by learning from a broader feature distribution, achieved by reducing the correlation between feature location and prediction error, thereby decreasing reliance on specific locations and creating more robust multimodal feature representations.
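To make the idea of entropy-based gating concrete, here is a small sketch. It is an assumption-laden illustration, not the AGFN architecture: it covers only an entropy gate (the abstract also mentions a modality-importance gate, which is omitted), and the weighting rule, a normalised `exp(-entropy)`, is chosen here for simplicity. Modalities whose predictions are more confident (lower entropy) receive larger fusion weights.

```python
import math
from typing import Dict, List


def entropy(probs: List[float]) -> float:
    """Shannon entropy (natural log) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def gate_weights(modality_probs: Dict[str, List[float]]) -> Dict[str, float]:
    """Weight each modality by exp(-entropy), normalised to sum to 1 (illustrative rule)."""
    scores = {m: math.exp(-entropy(p)) for m, p in modality_probs.items()}
    total = sum(scores.values())
    return {m: s / total for m, s in scores.items()}


def fuse(features: Dict[str, List[float]], weights: Dict[str, float]) -> List[float]:
    """Weighted sum of same-length feature vectors, one per modality."""
    dim = len(next(iter(features.values())))
    return [sum(weights[m] * features[m][i] for m in features) for i in range(dim)]


if __name__ == "__main__":
    # Hypothetical per-modality class probabilities and feature vectors.
    probs = {"text": [0.9, 0.1], "audio": [0.5, 0.5]}
    feats = {"text": [1.0, 0.0], "audio": [0.0, 1.0]}
    w = gate_weights(probs)
    print(w, fuse(feats, w))
```

With these inputs the confident text modality dominates the fused vector, while the maximally uncertain audio modality is down-weighted rather than discarded.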

AI-Driven Contribution Evaluation and Conflict Resolution: A Framework & Design for Group Workload Investigation

Nov 10, 2025

The equitable assessment of individual contribution in teams remains a persistent challenge, where conflict and disparity in workload can result in unfair performance evaluation, often requiring manual intervention - a costly and challenging process. We survey existing tool features and identify a gap in conflict resolution methods and AI integration. To address this, we propose a framework and implementation design for a novel AI-enhanced tool that assists in dispute investigation. The framework organises heterogeneous artefacts - submissions (code, text, media), communications (chat, email), coordination records (meeting logs, tasks), peer assessments, and contextual information - into three dimensions with nine benchmarks: Contribution, Interaction, and Role. Objective measures are normalised, aggregated per dimension, and paired with inequality measures (Gini index) to surface conflict markers. A Large Language Model (LLM) architecture performs validated and contextual analysis over these measures to generate interpretable and transparent advisory judgments. We argue for feasibility under current statutory and institutional policy, and outline practical analytics (sentiment, task fidelity, word/line count, etc.), bias safeguards, limitations, and practical challenges.
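As a brief illustration of the inequality measure mentioned above, the Gini index over per-member contribution scores can be computed as follows. This is a generic sketch of the standard formula, assuming non-negative scores; the function name and the example data are hypothetical, and the abstract does not specify how the framework itself computes or thresholds the index.

```python
from typing import Sequence


def gini(values: Sequence[float]) -> float:
    """Gini index of non-negative values: 0 means perfectly equal, higher means more unequal."""
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        return 0.0  # convention chosen here for empty or all-zero input
    ordered = sorted(values)
    # Rank-weighted sum, with ranks starting at 1 for the smallest value.
    weighted = sum(rank * x for rank, x in enumerate(ordered, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


if __name__ == "__main__":
    # Hypothetical lines-of-code counts for a four-person team.
    print(gini([120, 130, 110, 125]))  # near 0: balanced workload
    print(gini([0, 0, 0, 485]))        # near the maximum for n=4: one member did everything
```

For a team of n members the index ranges from 0 to (n - 1) / n, so a value close to that upper bound could serve as a marker worth investigating.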

COLE: a Comprehensive Benchmark for French Language Understanding Evaluation

Oct 06, 2025

To address the need for a more comprehensive evaluation of French Natural Language Understanding (NLU), we introduce COLE, a new benchmark composed of 23 diverse tasks covering a broad range of NLU capabilities, including sentiment analysis, paraphrase detection, grammatical judgment, and reasoning, with a particular focus on linguistic phenomena relevant to the French language. We benchmark 94 large language models (LLMs), providing an extensive analysis of the current state of French NLU. Our results highlight a significant performance gap between closed- and open-weights models and identify key challenging frontiers for current LLMs, such as zero-shot extractive question-answering (QA), fine-grained word sense disambiguation, and understanding of regional language variations. We release COLE as a public resource to foster further progress in French language modelling.

An Adaptive Multi Agent Bitcoin Trading System

Oct 09, 2025

This paper presents a Multi Agent Bitcoin Trading system that utilizes Large Language Models (LLMs) for alpha generation and portfolio management in the cryptocurrencies market. Unlike equities, cryptocurrencies exhibit extreme volatility and are heavily influenced by rapidly shifting market sentiments and regulatory announcements, making them difficult to model using static regression models or neural networks trained solely on historical data [53]. The proposed framework overcomes this by structuring LLMs into specialised agents for technical analysis, sentiment evaluation, decision-making, and performance reflection. The system improves over time through a novel verbal feedback mechanism where a Reflect agent provides daily and weekly natural-language critiques of trading decisions. These textual evaluations are then injected into future prompts, allowing the system to adjust indicator priorities, sentiment weights, and allocation logic without parameter updates or finetuning. Back-testing on Bitcoin price data from July 2024 to April 2025 shows consistent outperformance across market regimes: the Quantitative agent delivered over 30% higher returns in bullish phases and 15% overall gains versus buy-and-hold, while the sentiment-driven agent turned sideways markets from a small loss into a gain of over 100%. Adding weekly feedback further improved total performance by 31% and reduced bearish losses by 10%. The results demonstrate that verbal feedback represents a new, scalable, and low-cost method of tuning LLMs for financial goals.

A Comparative Evaluation of Large Language Models for Persian Sentiment Analysis and Emotion Detection in Social Media Texts

Sep 18, 2025

This study presents a comprehensive comparative evaluation of four state-of-the-art Large Language Models (LLMs)--Claude 3.7 Sonnet, DeepSeek-V3, Gemini 2.0 Flash, and GPT-4o--for sentiment analysis and emotion detection in Persian social media texts. Comparative analysis among LLMs has witnessed a significant rise in recent years; however, most of these analyses have been conducted on English language tasks, creating gaps in understanding cross-linguistic performance patterns. This research addresses these gaps through rigorous experimental design using balanced Persian datasets containing 900 texts for sentiment analysis (positive, negative, neutral) and 1,800 texts for emotion detection (anger, fear, happiness, hate, sadness, surprise). The main focus was to allow for a direct and fair comparison among different models by using consistent prompts and uniform processing parameters, and by analyzing performance metrics such as precision, recall, and F1-scores, along with misclassification patterns. The results show that all models reach an acceptable level of performance, and a statistical comparison of the best three models indicates no significant differences among them. However, GPT-4o demonstrated a marginally higher raw accuracy value for both tasks, while Gemini 2.0 Flash proved to be the most cost-efficient. The findings indicate that the emotion detection task is more challenging for all models compared to the sentiment analysis task, and the misclassification patterns can represent some challenges in Persian language texts. These findings establish performance benchmarks for Persian NLP applications and offer practical guidance for model selection based on accuracy, efficiency, and cost considerations, while revealing cultural and linguistic challenges that require consideration in multilingual AI system deployment.

Fuzzy Reasoning Chain (FRC): An Innovative Reasoning Framework from Fuzziness to Clarity

Sep 26, 2025

With the rapid advancement of large language models (LLMs), natural language processing (NLP) has achieved remarkable progress. Nonetheless, significant challenges remain in handling texts with ambiguity, polysemy, or uncertainty. We introduce the Fuzzy Reasoning Chain (FRC) framework, which integrates LLM semantic priors with continuous fuzzy membership degrees, creating an explicit interaction between probability-based reasoning and fuzzy membership reasoning. This transition allows ambiguous inputs to be gradually transformed into clear and interpretable decisions while capturing conflicting or uncertain signals that traditional probability-based methods cannot. We validate FRC on sentiment analysis tasks, where both theoretical analysis and empirical results show that it ensures stable reasoning and facilitates knowledge transfer across different model scales. These findings indicate that FRC provides a general mechanism for managing subtle and ambiguous expressions with improved interpretability and robustness.

Analogy-Driven Financial Chain-of-Thought (AD-FCoT): A Prompting Approach for Financial Sentiment Analysis

Sep 16, 2025

Financial news sentiment analysis is crucial for anticipating market movements. With the rise of AI techniques such as Large Language Models (LLMs), which demonstrate strong text understanding capabilities, there has been renewed interest in enhancing these systems. Existing methods, however, often struggle to capture the complex economic context of news and lack transparent reasoning, which undermines their reliability. We propose Analogy-Driven Financial Chain-of-Thought (AD-FCoT), a prompting framework that integrates analogical reasoning with chain-of-thought (CoT) prompting for sentiment prediction on historical financial news. AD-FCoT guides LLMs to draw parallels between new events and relevant historical scenarios with known outcomes, embedding these analogies into a structured, step-by-step reasoning chain. To our knowledge, this is among the first approaches to explicitly combine analogical examples with CoT reasoning in finance. Operating purely through prompting, AD-FCoT requires no additional training data or fine-tuning and leverages the model's internal financial knowledge to generate rationales that mirror human analytical reasoning. Experiments on thousands of news articles show that AD-FCoT outperforms strong baselines in sentiment classification accuracy and achieves substantially higher correlation with market returns. Its generated explanations also align with domain expertise, providing interpretable insights suitable for real-world financial analysis.

Fairness Testing in Retrieval-Augmented Generation: How Small Perturbations Reveal Bias in Small Language Models

Sep 30, 2025

Large Language Models (LLMs) are widely used across multiple domains but continue to raise concerns regarding security and fairness. Beyond known attack vectors such as data poisoning and prompt injection, LLMs are also vulnerable to fairness bugs. These refer to unintended behaviors influenced by sensitive demographic cues (e.g., race or sexual orientation) that should not affect outcomes. Another key issue is hallucination, where models generate plausible yet false information. Retrieval-Augmented Generation (RAG) has emerged as a strategy to mitigate hallucinations by combining external retrieval with text generation. However, its adoption raises new fairness concerns, as the retrieved content itself may surface or amplify bias. This study conducts fairness testing through metamorphic testing (MT), introducing controlled demographic perturbations in prompts to assess fairness in sentiment analysis performed by three Small Language Models (SLMs) hosted on HuggingFace (Llama-3.2-3B-Instruct, Mistral-7B-Instruct-v0.3, and Llama-3.1-Nemotron-8B), each integrated into a RAG pipeline. Results show that minor demographic variations can break up to one third of metamorphic relations (MRs). A detailed analysis of these failures reveals a consistent bias hierarchy, with perturbations involving racial cues being the predominant cause of the violations. In addition to offering a comparative evaluation, this work reinforces that the retrieval component in RAG must be carefully curated to prevent bias amplification. The findings serve as a practical alert for developers, testers and small organizations aiming to adopt accessible SLMs without compromising fairness or reliability.
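The metamorphic-testing idea in this abstract can be sketched in a few lines. This is an illustrative harness under stated assumptions, not the study's test suite: the template, the placeholder group names, and the two stub classifiers are hypothetical, and no RAG pipeline or real model is involved. The metamorphic relation checked here is that changing only the demographic descriptor in a prompt should leave the predicted sentiment unchanged.

```python
from typing import Callable, List, Tuple


def violated_relations(
    classify: Callable[[str], str],
    template: str,
    baseline: str,
    variants: List[str],
) -> List[Tuple[str, str]]:
    """Return (variant, label) pairs whose label differs from the baseline prompt's label."""
    expected = classify(template.format(person=baseline))
    violations = []
    for variant in variants:
        label = classify(template.format(person=variant))
        if label != expected:
            violations.append((variant, label))
    return violations


def fair_stub(text: str) -> str:
    """Hypothetical classifier that looks only at the opinion word."""
    return "positive" if "excellent" in text else "negative"


def biased_stub(text: str) -> str:
    """Hypothetical classifier with an injected bug keyed on a group mention."""
    return "negative" if "group B" in text else fair_stub(text)


if __name__ == "__main__":
    template = "The {person} said the service was excellent."
    variants = ["customer from group A", "customer from group B"]
    print(violated_relations(fair_stub, template, "customer", variants))
    print(violated_relations(biased_stub, template, "customer", variants))
```

Replacing the stubs with calls to an actual model would turn the same loop into a basic fairness probe; the fraction of variants that appear in the returned list corresponds to the share of broken relations.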
