League Of Legends

Papers and Code

PandaSkill -- Player Performance and Skill Rating in Esports: Application to League of Legends

Jan 20, 2025

To take the esports scene to the next level, we introduce PandaSkill, a framework for assessing player performance and skill rating. Traditional rating systems like Elo and TrueSkill often overlook individual contributions and face challenges in professional esports due to limited game data and fragmented competitive scenes. PandaSkill leverages machine learning to estimate in-game player performance from individual player statistics. Each in-game role is modeled independently, ensuring a fair comparison between them. Then, using these performance scores, PandaSkill updates the player skill ratings using the Bayesian framework OpenSkill in a free-for-all setting. In this setting, skill ratings are updated solely based on performance scores rather than game outcomes, highlighting individual contributions. To address the challenge of isolated rating pools that hinder cross-regional comparisons, PandaSkill introduces a dual-rating system that combines players' regional ratings with a meta-rating representing each region's overall skill level. Applying PandaSkill to five years of professional League of Legends matches worldwide, we show that our method produces skill ratings that better predict game outcomes and align more closely with expert opinions compared to existing methods.
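As a rough illustration of the two ideas in this abstract, the sketch below turns one game's performance scores into free-for-all placements (the kind of input a rating update would consume) and combines a regional rating with a region meta-rating. The additive combination, the function names, and all numbers are assumptions made for illustration; the abstract does not specify them, and the OpenSkill update itself is not reproduced here.

```python
from typing import Dict


def placements_from_scores(scores: Dict[str, float]) -> Dict[str, int]:
    """Rank players by performance score (1 = best). Tied scores share the best rank."""
    ordered = sorted(scores.values(), reverse=True)
    return {player: ordered.index(score) + 1 for player, score in scores.items()}


def combined_rating(regional: float, region_meta: float) -> float:
    """Hypothetical dual rating: a regional rating shifted by the region's meta-rating."""
    return regional + region_meta


# Made-up performance scores for five players in one game.
scores = {"top": 0.62, "jungle": 0.48, "mid": 0.71, "bot": 0.48, "support": 0.55}
placements = placements_from_scores(scores)
print(placements)
print(combined_rating(25.0, 3.5))
```

Because placements depend only on the performance scores, a player on the losing team can still place first, which is the property the abstract emphasizes.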

Voice Communication Analysis in Esports

Nov 21, 2024

In most team-based esports, voice communication plays a prominent role in team efficiency and synergy. It has been observed that not only a team's skill but also the effectiveness of its voice communication comes into play when trying to perform well in official matches. With the recent emergence of LLM (Large Language Model) tools for NLP (Natural Language Processing) (Vaswani et al.), we decided to apply them to better understand how to improve the effectiveness of voice communication. In this paper, the study is conducted through the prism of League of Legends esports. However, the main concepts and ideas can easily be applied to other team-based esports.

A Framework for Predicting the Impact of Game Balance Changes through Meta Discovery

Sep 11, 2024

A metagame is a collection of knowledge that goes beyond the rules of a game. In competitive, team-based games like Pokémon or League of Legends, it refers to the set of current dominant characters and/or strategies within the player base. Developer changes to the balance of the game can have drastic and unforeseen consequences on these sets of meta characters. A framework for predicting the impact of balance changes could aid developers in making more informed balance decisions. In this paper we present such a Meta Discovery framework, leveraging Reinforcement Learning for automated testing of balance changes. Our results demonstrate the ability to predict the outcome of balance changes in Pokémon Showdown, a collection of competitive Pokémon tiers, with high accuracy.

Identifying and Clustering Counter Relationships of Team Compositions in PvP Games for Efficient Balance Analysis

Aug 30, 2024

How can balance be quantified in game settings? This question is crucial for game designers, especially in player-versus-player (PvP) games, where analyzing the strength relations among predefined team compositions, such as hero combinations in multiplayer online battle arena (MOBA) games or decks in card games, is essential for enhancing gameplay and achieving balance. We have developed two advanced measures that extend beyond the simplistic win rate to quantify balance in zero-sum competitive scenarios. These measures are derived from win value estimations, which employ strength rating approximations via the Bradley-Terry model and counter relationship approximations via vector quantization, significantly reducing the computational complexity associated with traditional win value estimations. Throughout the learning process of these models, we identify useful categories of compositions and pinpoint their counter relationships, aligning with the experiences of human players without requiring specific game knowledge. Our methodology hinges on a simple technique to enhance codebook utilization in discrete representation with a deterministic vector quantization process for an extremely small state space. Our framework has been validated in popular online games, including Age of Empires II, Hearthstone, Brawl Stars, and League of Legends. The accuracy of the observed strength relations in these games is comparable to traditional pairwise win value predictions, while also offering a more manageable complexity for analysis. Ultimately, our findings contribute to a deeper understanding of PvP game dynamics and present a methodology that significantly improves game balance evaluation and design.
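For readers unfamiliar with the Bradley-Terry model mentioned above, here is a minimal sketch of the classic version, fitted with the standard minorization-maximization update on a table of pairwise win counts. It is not the paper's learned approximation; the composition names and win counts are invented for illustration.

```python
from typing import Dict, Tuple


def fit_bradley_terry(wins: Dict[Tuple[str, str], int], iters: int = 200) -> Dict[str, float]:
    """wins[(a, b)] is how often a beat b. Returns strengths normalized to sum to 1."""
    items = sorted({name for pair in wins for name in pair})
    strength = {i: 1.0 / len(items) for i in items}
    for _ in range(iters):
        updated = {}
        for i in items:
            total_wins = sum(w for (winner, _), w in wins.items() if winner == i)
            denom = 0.0
            for j in items:
                if j == i:
                    continue
                games = wins.get((i, j), 0) + wins.get((j, i), 0)
                denom += games / (strength[i] + strength[j])
            # Keep the old value for an item that has played no games.
            updated[i] = total_wins / denom if denom > 0 else strength[i]
        norm = sum(updated.values())
        strength = {i: s / norm for i, s in updated.items()}
    return strength


def win_probability(strength: Dict[str, float], a: str, b: str) -> float:
    """Bradley-Terry probability that a beats b."""
    return strength[a] / (strength[a] + strength[b])


# Invented win counts between three team compositions (10 games per pair).
wins = {("A", "B"): 7, ("B", "A"): 3, ("B", "C"): 6,
        ("C", "B"): 4, ("A", "C"): 8, ("C", "A"): 2}
strength = fit_bradley_terry(wins)
print(strength)
print(win_probability(strength, "A", "B"))
```

A single strength per composition cannot express cyclic "counter" relationships (A beats B, B beats C, C beats A), which is why the abstract pairs the strength rating with a separate counter-relationship component.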

League of Legends: Real-Time Result Prediction

Sep 02, 2023

This paper presents a study on the prediction of outcomes in matches of the electronic game League of Legends (LoL) using machine learning techniques. With the aim of exploring the ability to predict real-time results, considering different variables and stages of the match, we highlight the use of unpublished data as a fundamental part of this process. With the increasing popularity of LoL and the emergence of tournaments, betting related to the game has also emerged, making the investigation in this area even more relevant. A variety of models were evaluated and the results were encouraging. A model based on LightGBM showed the best performance, achieving an average accuracy of 81.62% in intermediate stages of the match when the percentage of elapsed time was between 60% and 80%. On the other hand, the Logistic Regression and Gradient Boosting models proved to be more effective in early stages of the game, with promising results. This study contributes to the field of machine learning applied to electronic games, providing valuable insights into real-time prediction in League of Legends. The results obtained may be relevant for both players seeking to improve their strategies and the betting industry related to the game.
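The stage-dependent accuracy reported above amounts to scoring predictions only within a window of elapsed match time. A minimal sketch of that evaluation follows; the snapshot layout, the function name, and the sample data are assumptions for illustration and are not taken from the paper.

```python
from typing import Iterable, Optional, Tuple

# (elapsed_pct, predicted_winner, actual_winner); winners encoded as 0 or 1.
Snapshot = Tuple[float, int, int]


def accuracy_in_window(snapshots: Iterable[Snapshot], lo: float, hi: float) -> Optional[float]:
    """Fraction of correct predictions among snapshots with lo <= elapsed_pct <= hi."""
    hits = [pred == actual for pct, pred, actual in snapshots if lo <= pct <= hi]
    if not hits:
        return None  # no snapshots fall inside the window
    return sum(hits) / len(hits)


# Made-up snapshots from several matches.
snapshots = [(10, 0, 1), (30, 1, 1), (65, 1, 1), (70, 0, 1),
             (75, 1, 1), (78, 0, 0), (90, 1, 1)]
print(accuracy_in_window(snapshots, 60, 80))
print(accuracy_in_window(snapshots, 0, 40))
```

Using the percentage of elapsed time rather than absolute minutes makes matches of different lengths comparable.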

Esports Data-to-commentary Generation on Large-scale Data-to-text Dataset

Dec 21, 2022

Esports, a sports competition using video games, has become one of the most important sporting events in recent years. Although the amount of esports data is growing faster than ever, only a small fraction of that data is accompanied by text commentaries that help the audience retrieve and understand the plays. Therefore, in this study, we introduce a task of generating game commentaries from structured data records to address the problem. We first build a large-scale esports data-to-text dataset using structured data and commentaries from a popular esports game, League of Legends. On this dataset, we devise several data preprocessing methods including linearization and data splitting to augment its quality. We then introduce several baseline encoder-decoder models and propose a hierarchical model to generate game commentaries. Considering the characteristics of esports commentaries, we design evaluation metrics including three aspects of the output: correctness, fluency, and strategic depth. Experimental results on our large-scale esports dataset confirmed the advantage of the hierarchical model, and the results revealed several challenges of this novel task.
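Linearization, one of the preprocessing steps named above, generally means flattening a structured record into a token sequence that an encoder-decoder model can read. The sketch below shows one common way to do this; the field names and the `<field> value` format are hypothetical and not taken from the paper's dataset.

```python
from typing import Dict


def linearize(record: Dict[str, object]) -> str:
    """Flatten a structured record into '<field> value' pairs, in insertion order."""
    return " ".join(f"<{key}> {value}" for key, value in record.items())


# A hypothetical game event record.
event = {"time": "12:34", "team": "blue", "player": "mid",
         "action": "kill", "target": "dragon"}
print(linearize(event))
```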

Action2Score: An Embedding Approach To Score Player Action

Jul 21, 2022

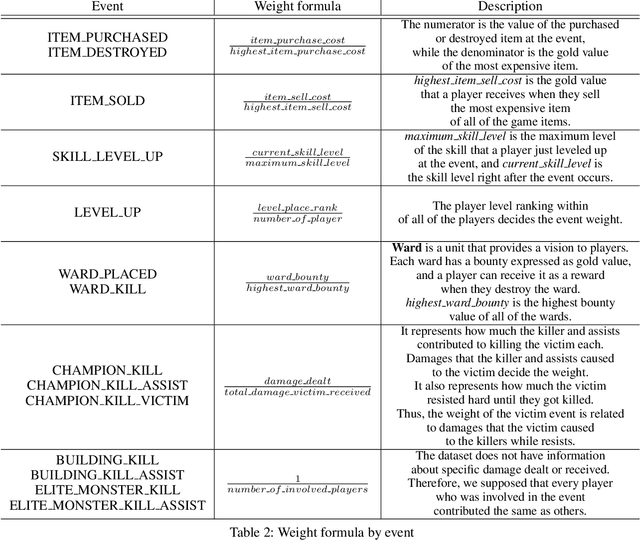

Multiplayer Online Battle Arena (MOBA) is one of the most successful game genres. MOBA games such as League of Legends have competitive environments where players race for their rank. In most MOBA games, a player's rank is determined by the match result (win or lose). This seems natural given the nature of team play, but in some sense it is unfair: players who put in a lot of effort lose rank whenever their team loses, while some players get a free ride on their teammates' efforts when the team wins. To reduce the side effects of the team-based ranking system and evaluate a player's performance impartially, we propose a novel embedding model that converts a player's actions into quantitative scores based on the actions' respective contribution to the team's victory. Our model is built using a sequence-based deep learning model with a novel loss function working on the team match. The sequence-based deep learning model processes a player's action sequence from the start to the end of a team game using a GRU unit that selectively takes a hidden state from the previous step and the current input. The loss function is designed to help the action score reflect the final score and the success of the team. We showed that our model can evaluate a player's individual performance fairly and analyze the contributions of the player's respective actions.
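To make the GRU description concrete, here is a minimal scalar sketch of a standard GRU step, in which an update gate and a reset gate decide how much of the previous hidden state and the current input to keep. Real models use vectors and learned weight matrices; the scalar weights, the one-number encoding of actions, and all values below are assumptions for illustration, and the paper's loss function is not reproduced.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class GRUParams:
    # Scalar weights for the update gate (z), reset gate (r), and candidate state (h).
    wz: float = 0.0
    uz: float = 0.0
    bz: float = 0.0
    wr: float = 0.0
    ur: float = 0.0
    br: float = 0.0
    wh: float = 0.0
    uh: float = 0.0
    bh: float = 0.0


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gru_step(x: float, h_prev: float, p: GRUParams) -> float:
    """One GRU step: blend the previous state with a candidate state."""
    z = sigmoid(p.wz * x + p.uz * h_prev + p.bz)  # update gate
    r = sigmoid(p.wr * x + p.ur * h_prev + p.br)  # reset gate
    h_cand = math.tanh(p.wh * x + p.uh * (r * h_prev) + p.bh)
    return (1.0 - z) * h_prev + z * h_cand


def run_sequence(actions: List[float], p: GRUParams, h0: float = 0.0) -> List[float]:
    """Feed an action sequence through the GRU, returning the state after each action."""
    states = []
    h = h0
    for x in actions:
        h = gru_step(x, h, p)
        states.append(h)
    return states


# Hypothetical numeric encodings of three consecutive actions.
params = GRUParams(wz=0.5, uz=0.1, wr=0.3, ur=0.2, wh=1.0, uh=0.8)
states = run_sequence([0.2, -0.1, 0.7], params)
print(states)
```

A per-action score could then be read from each intermediate state, which is what lets a sequence model attribute credit to individual actions rather than only to the final result.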

Mastering Strategy Card Game (Hearthstone) with Improved Techniques

Mar 09, 2023

The strategy card game is a well-known genre that demands intelligent gameplay and can be an ideal test bench for AI. Previous work combines an end-to-end policy function and an optimistic smooth fictitious play, which shows promising performance on the strategy card game Legend of Code and Magic. In this work, we apply such algorithms to Hearthstone, a famous commercial game that is more complicated in game rules and mechanisms. We further propose several improved techniques and consequently achieve significant progress. For a machine-vs-human test, we invite a Hearthstone streamer whose best rank was top 10 in the official league of the China region, which is estimated to have millions of players. Our models defeat the human player in all Best-of-5 tournaments of full games (including both deck building and battle), showing a strong capability of decision making.

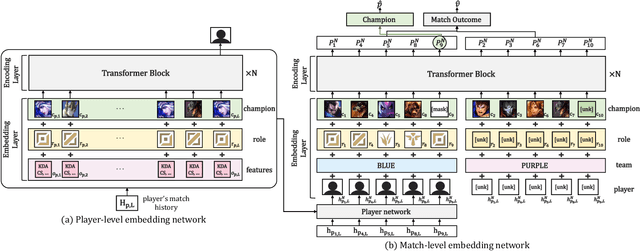

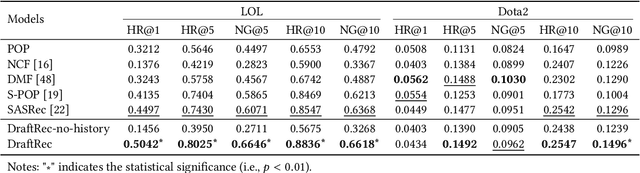

DraftRec: Personalized Draft Recommendation for Winning in Multi-Player Online Battle Arena Games

Apr 27, 2022

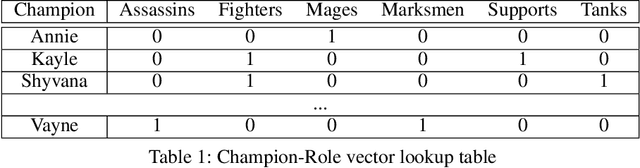

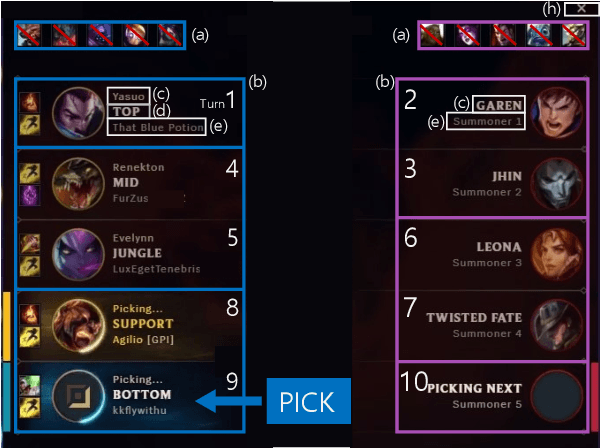

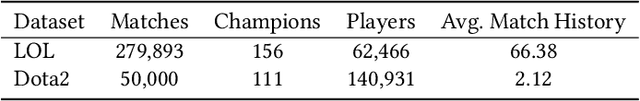

This paper presents a personalized character recommendation system for Multiplayer Online Battle Arena (MOBA) games, which are considered one of the most popular online video game genres around the world. When playing MOBA games, players go through a draft stage, where they alternately select a virtual character to play. When drafting, players select characters by considering not only their character preferences, but also the synergy and competence of their team's character combination. However, the complexity of drafting makes it difficult for beginners to choose appropriate characters based on the characters of their team while considering their own champion preferences. To alleviate this problem, we propose DraftRec, a novel hierarchical model which recommends characters by considering each player's champion preferences and the interaction between the players. DraftRec consists of two networks: the player network and the match network. The player network captures the individual player's champion preference, and the match network integrates the complex relationship between the players and their respective champions. We train and evaluate our model on a manually collected set of 280,000 League of Legends matches and a publicly available set of 50,000 Dota2 matches. Empirically, our method achieved state-of-the-art performance in the character recommendation and match outcome prediction tasks. Furthermore, a comprehensive user survey confirms that DraftRec provides convincing and satisfying recommendations. Our code and dataset are available at https://github.com/dojeon-ai/DraftRec.

Using Machine Learning to Predict Game Outcomes Based on Player-Champion Experience in League of Legends

Aug 05, 2021

League of Legends (LoL) is the most widely played multiplayer online battle arena (MOBA) game in the world. An important aspect of LoL is competitive ranked play, which utilizes a skill-based matchmaking system to form fair teams. However, players' skill levels vary widely depending on which champion, or hero, that they choose to play as. In this paper, we propose a method for predicting game outcomes in ranked LoL games based on players' experience with their selected champion. Using a deep neural network, we found that game outcomes can be predicted with 75.1% accuracy after all players have selected champions, which occurs before gameplay begins. Our results have important implications for playing LoL and matchmaking. Firstly, individual champion skill plays a significant role in the outcome of a match, regardless of team composition. Secondly, even after the skill-based matchmaking, there is still a wide variance in team skill before gameplay begins. Finally, players should only play champions that they have mastered, if they want to win games.
