Cpp

Papers and Code

Phase-Only Positioning in Distributed MIMO Under Phase Impairments: AP Selection Using Deep Learning

Feb 04, 2026

Carrier phase positioning (CPP) can enable cm-level accuracy in next-generation wireless systems, while recent literature shows that accuracy remains high using phase-only measurements in distributed MIMO (D-MIMO). However, the impact of phase synchronization errors on such systems remains insufficiently explored. To address this gap, we first show that the proposed hyperbola intersection method achieves highly accurate positioning even in the presence of phase synchronization errors, when trained on appropriate data reflecting such impairments. We then introduce a deep learning (DL)-based D-MIMO antenna point (AP) selection framework that ensures high-precision localization under phase synchronization errors. Simulation results show that the proposed framework improves positioning accuracy compared to prior-art methods, while reducing inference complexity by approximately 19.7%.

Reinforcement Learning-Based Energy-Aware Coverage Path Planning for Precision Agriculture

Jan 23, 2026

Coverage Path Planning (CPP) is a fundamental capability for agricultural robots; however, existing solutions often overlook energy constraints, resulting in incomplete operations in large-scale or resource-limited environments. This paper proposes an energy-aware CPP framework grounded in Soft Actor-Critic (SAC) reinforcement learning, designed for grid-based environments with obstacles and charging stations. To enable robust and adaptive decision-making under energy limitations, the framework integrates Convolutional Neural Networks (CNNs) for spatial feature extraction and Long Short-Term Memory (LSTM) networks for temporal dynamics. A dedicated reward function is designed to jointly optimize coverage efficiency, energy consumption, and return-to-base constraints. Experimental results demonstrate that the proposed approach consistently achieves over 90% coverage while ensuring energy safety, outperforming traditional heuristic algorithms such as Rapidly-exploring Random Tree (RRT), Particle Swarm Optimization (PSO), and Ant Colony Optimization (ACO) baselines by 13.4-19.5% in coverage and reducing constraint violations by 59.9-88.3%. These findings validate the proposed SAC-based framework as an effective and scalable solution for energy-constrained CPP in agricultural robotics.

* Accepted by RACS '25: International Conference on Research in Adaptive and Convergent Systems, November 16-19, 2025, Ho Chi Minh, Vietnam. 10 pages, 5 figures
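To make the idea of a reward that jointly weighs coverage, energy use, and return-to-base safety more concrete, here is a minimal sketch. It is not the paper's reward function: the `StepOutcome` fields, the weights, and the penalty value are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class StepOutcome:
    """Hypothetical summary of one robot move on the grid."""
    newly_covered: int        # cells covered for the first time in this step
    energy_used: float        # energy spent by the move
    battery_left: float       # battery remaining after the move
    energy_to_station: float  # estimated energy needed to reach a charging station


def step_reward(o: StepOutcome,
                w_cover: float = 1.0,
                w_energy: float = 0.5,
                unsafe_penalty: float = 10.0) -> float:
    """Reward new coverage, charge for energy, and penalize unsafe battery states."""
    reward = w_cover * o.newly_covered - w_energy * o.energy_used
    # Return-to-base constraint (assumed form): the robot must always keep
    # enough battery to reach a charging station.
    if o.battery_left < o.energy_to_station:
        reward -= unsafe_penalty
    return reward


safe = StepOutcome(newly_covered=2, energy_used=1.0,
                   battery_left=5.0, energy_to_station=2.0)
unsafe = StepOutcome(newly_covered=2, energy_used=1.0,
                     battery_left=1.0, energy_to_station=2.0)
safe_reward = step_reward(safe)
unsafe_reward = step_reward(unsafe)
```

With these assumed weights, the same move scores lower when it leaves the robot unable to reach a charger, which is the kind of trade-off a learned policy would be pushed to respect.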

Evolving Without Ending: Unifying Multimodal Incremental Learning for Continual Panoptic Perception

Jan 22, 2026

Continual learning (CL) is a great endeavour in developing intelligent perception AI systems. However, pioneering research has predominantly focused on single-task CL, which restricts the potential in multi-task and multimodal scenarios. Beyond the well-known issue of catastrophic forgetting, multi-task CL also brings semantic obfuscation across multimodal alignment, leading to severe model degradation during incremental training steps. In this paper, we extend CL to continual panoptic perception (CPP), integrating multimodal and multi-task CL to enhance comprehensive image perception through pixel-level, instance-level, and image-level joint interpretation. We formalize the CL task in multimodal scenarios and propose an end-to-end continual panoptic perception model. Concretely, the CPP model features a collaborative cross-modal encoder (CCE) for multimodal embedding. We also propose a malleable knowledge inheritance module via contrastive feature distillation and instance distillation, addressing catastrophic forgetting in a task-interactive boosting manner. Furthermore, we propose a cross-modal consistency constraint and develop CPP+, ensuring multimodal semantic alignment for model updating under multi-task incremental scenarios. Additionally, our proposed model incorporates an asymmetric pseudo-labeling scheme, enabling the model to evolve without exemplar replay. Extensive experiments on multimodal datasets and diverse CL tasks demonstrate the superiority of the proposed model, particularly in fine-grained CL tasks.

Multi-band Carrier Phase Positioning toward 6G: Performance Bounds and Efficient Estimators

Jan 08, 2026

In addition to satellite systems, carrier phase positioning (CPP) is also gaining traction in terrestrial mobile networks, particularly in the 5G New Radio evolution toward 6G. One key challenge is to resolve the integer ambiguity problem, as the carrier phase provides only relative position information. This work introduces and studies a multi-band CPP scenario with intra- and inter-band carrier aggregation (CA) opportunities across FR1, mmWave-FR2, and emerging 6G FR3 bands. Specifically, we derive multi-band CPP performance bounds, showcasing the superiority of multi-band CPP for high-precision localization in current and future mobile networks, while also noting practical imperfections such as clock offsets between the user equipment (UE) and the network as well as mutual clock imperfections between the network nodes. A wide collection of numerical results is provided, covering the impacts of the available carrier bandwidth, number of aggregated carriers, transmit power, and the number of network nodes or base stations. The offered results highlight that only two carriers suffice to substantially facilitate resolving the integer ambiguity problem while also largely enhancing the robustness of positioning against imperfections imposed by the network-side clocks and multi-path propagation. In addition, we also propose a two-stage practical estimator that achieves the derived bounds under all realistic bandwidth and transmit power conditions. Furthermore, we show that with an additional search-based refinement step, the proposed estimator becomes particularly suitable for narrowband Internet of Things applications operating efficiently even under narrow carrier bandwidths. Finally, both the derived bounds and the proposed estimators are extended to scenarios where the bands assigned to each base station are nonuniform or fully disjoint, enhancing the practical deployment flexibility.
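One intuition for why a second carrier helps with the integer ambiguity is the textbook "beat wavelength" argument, sketched below. This is not the paper's estimator. It assumes a single noise-free link with no clock offsets, and the carrier frequencies and function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def wrapped_phase(distance_m: float, freq_hz: float) -> float:
    """Noise-free carrier phase in [0, 2*pi) after travelling distance_m."""
    return (2.0 * math.pi * distance_m * freq_hz / C) % (2.0 * math.pi)


def range_from_two_carriers(phi1: float, phi2: float,
                            f1_hz: float, f2_hz: float) -> float:
    """Range that is unambiguous up to C / (f2_hz - f1_hz); assumes f2_hz > f1_hz."""
    beat_wavelength = C / (f2_hz - f1_hz)
    # The phase difference behaves like a phase measured at the beat wavelength.
    dphi = (phi2 - phi1) % (2.0 * math.pi)
    return dphi / (2.0 * math.pi) * beat_wavelength


f1, f2 = 3.5e9, 3.6e9  # hypothetical carrier frequencies in Hz
true_range = 1.234     # metres, shorter than the roughly 3 m beat wavelength
estimate = range_from_two_carriers(
    wrapped_phase(true_range, f1), wrapped_phase(true_range, f2), f1, f2
)
print(f"estimated range: {estimate:.6f} m")
```

Each carrier alone repeats every wavelength (under 9 cm at these frequencies), while the phase difference repeats only every `C / (f2 - f1)`, about 3 m here. With noise and clock errors the picture is considerably harder, which is what the paper's bounds and estimators address.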

Beyond Coverage Path Planning: Can UAV Swarms Perfect Scattered Regions Inspections?

Dec 29, 2025

Unmanned Aerial Vehicles (UAVs) have revolutionized inspection tasks by offering a safer, more efficient, and flexible alternative to traditional methods. However, battery limitations often constrain their effectiveness, necessitating the development of optimized flight paths and data collection techniques. While existing approaches like coverage path planning (CPP) ensure comprehensive data collection, they can be inefficient, especially when inspecting multiple non-connected Regions of Interest (ROIs). This paper introduces the Fast Inspection of Scattered Regions (FISR) problem and proposes a novel solution, the multi-UAV Disjoint Areas Inspection (mUDAI) method. The introduced approach implements a two-fold optimization procedure for calculating the best image-capturing positions and the most efficient UAV trajectories, balancing data resolution and operational time while minimizing redundant data collection and resource consumption. The mUDAI method is designed to enable rapid, efficient inspections of scattered ROIs, making it ideal for applications such as security infrastructure assessments, agricultural inspections, and emergency site evaluations. A combination of simulated evaluations and real-world deployments is used to validate and quantify the method's ability to improve operational efficiency while preserving high-quality data capture, demonstrating its effectiveness in real-world operations. An open-source Python implementation of the mUDAI method can be found on GitHub (https://github.com/soc12/mUDAI) and the collected and processed data from the real-world experiments are all hosted on Zenodo (https://zenodo.org/records/13866483). Finally, this online platform (https://sites.google.com/view/mudai-platform/) allows interested readers to interact with the mUDAI method and generate their own multi-UAV FISR missions.

AKG kernel Agent: A Multi-Agent Framework for Cross-Platform Kernel Synthesis

Dec 29, 2025

Modern AI models demand high-performance computation kernels. The growing complexity of LLMs, multimodal architectures, and recommendation systems, combined with techniques like sparsity and quantization, creates significant computational challenges. Moreover, frequent hardware updates and diverse chip architectures further complicate this landscape, requiring tailored kernel implementations for each platform. However, manual optimization cannot keep pace with these demands, creating a critical bottleneck in AI system development. Recent advances in LLM code generation capabilities have opened new possibilities for automating kernel development. In this work, we propose AKG kernel agent (AI-driven Kernel Generator), a multi-agent system that automates kernel generation, migration, and performance tuning. AKG kernel agent is designed to support multiple domain-specific languages (DSLs), including Triton, TileLang, CPP, and CUDA-C, enabling it to target different hardware backends while maintaining correctness and portability. The system's modular design allows rapid integration of new DSLs and hardware targets. When evaluated on KernelBench using Triton DSL across GPU and NPU backends, AKG kernel agent achieves an average speedup of 1.46$\times$ over PyTorch Eager baseline implementations, demonstrating its effectiveness in accelerating kernel development for modern AI workloads.

Failure Tolerant Phase-Only Indoor Positioning via Deep Learning

Aug 20, 2025

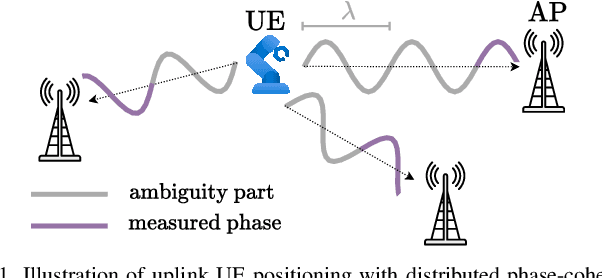

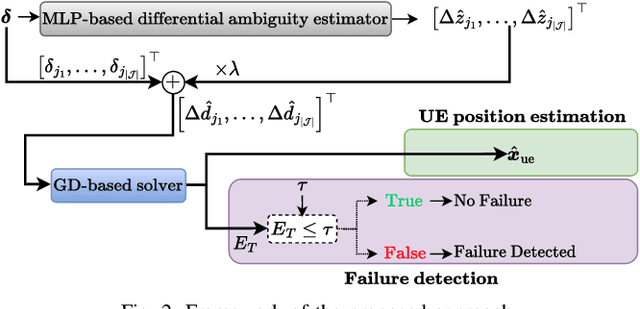

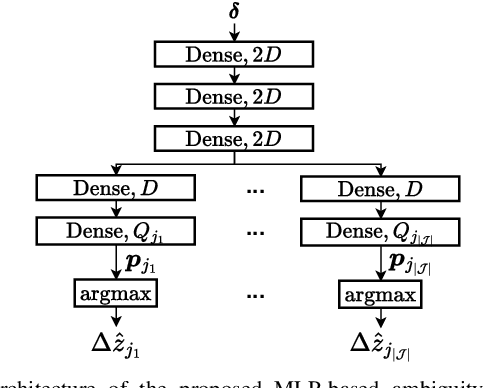

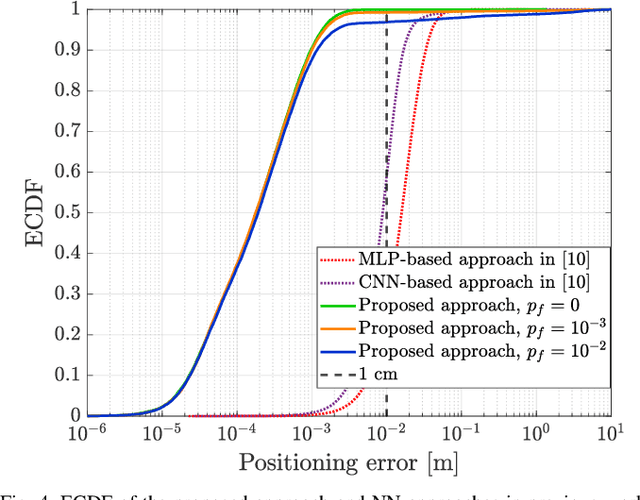

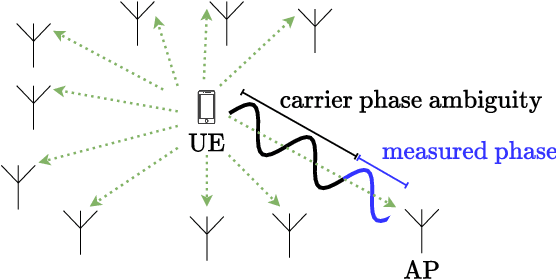

High-precision localization is becoming a crucial added value and asset for next-generation wireless systems. Carrier phase positioning (CPP) enables sub-meter to centimeter-level accuracy and is gaining interest in 5G-Advanced standardization. While CPP typically complements time-of-arrival (ToA) measurements, recent literature has introduced a phase-only positioning approach in a distributed antenna/MIMO system context with minimal bandwidth requirements, using deep learning (DL) when operating under ideal hardware assumptions. In more practical scenarios, however, antenna failures can largely degrade the performance. In this paper, we address the challenging phase-only positioning task, and propose a new DL-based localization approach harnessing the so-called hyperbola intersection principle, clearly outperforming the previous methods. Additionally, we consider and propose a processing and learning mechanism that is robust to antenna element failures. Our results show that the proposed DL model achieves robust and accurate positioning despite antenna impairments, demonstrating the viability of data-driven, impairment-tolerant phase-only positioning mechanisms. A comprehensive set of numerical results demonstrates large improvements in localization accuracy against the prior-art methods.

Steering Conceptual Bias via Transformer Latent-Subspace Activation

Jun 23, 2025

This work examines whether activating latent subspaces in large language models (LLMs) can steer scientific code generation toward a specific programming language. Five causal LLMs were first evaluated on scientific coding prompts to quantify their baseline bias among four programming languages. A static neuron-attribution method, perturbing the highest activated MLP weight for a C++ or CPP token, proved brittle and exhibited limited generalization across prompt styles and model scales. To address these limitations, a gradient-refined adaptive activation steering framework (G-ACT) was developed: per-prompt activation differences are clustered into a small set of steering directions, and lightweight per-layer probes are trained and refined online to select the appropriate steering vector. In LLaMA-3.2 3B, this approach reliably biases generation towards the CPP language, increasing the average probe classification accuracy by 15%, with the early layers (0-6) improving by 61.5%, compared to the standard ACT framework. For LLaMA-3.3 70B, where attention-head signals become more diffuse, targeted injections at key layers still improve language selection. Although per-layer probing introduces a modest inference overhead, it remains practical by steering only a subset of layers and enables reproducible model behavior. These results demonstrate a scalable, interpretable and efficient mechanism for concept-level control for practical agentic systems.
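The basic operation behind activation steering can be sketched in a few lines. The sketch below uses a plain difference-of-means direction rather than G-ACT's clustering and online-refined probes. The toy activation vectors, function names, and the `alpha` scale are hypothetical.

```python
def mean_vector(rows):
    """Element-wise mean of a list of equal-length activation vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def steering_direction(target_acts, other_acts):
    """Difference of mean activations: target-language prompts minus the rest."""
    mean_target = mean_vector(target_acts)
    mean_other = mean_vector(other_acts)
    return [t - o for t, o in zip(mean_target, mean_other)]


def steer(hidden, direction, alpha=1.0):
    """Shift a hidden state along the steering direction by a factor alpha."""
    return [h + alpha * d for h, d in zip(hidden, direction)]


# Toy 2-dimensional "activations" at one layer (made-up numbers).
cpp_prompt_acts = [[1.0, 2.0], [3.0, 4.0]]
other_prompt_acts = [[0.0, 0.0], [2.0, 2.0]]

direction = steering_direction(cpp_prompt_acts, other_prompt_acts)
steered = steer([0.0, 0.0], direction, alpha=2.0)
```

In a real model the vectors would be residual-stream or MLP activations captured at a chosen layer, and the shifted hidden state would be written back during the forward pass. Which layers to steer and how strongly are the choices the abstract's per-layer probes are meant to automate.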

Phase-Only Positioning: Overcoming Integer Ambiguity Challenge through Deep Learning

Jun 09, 2025

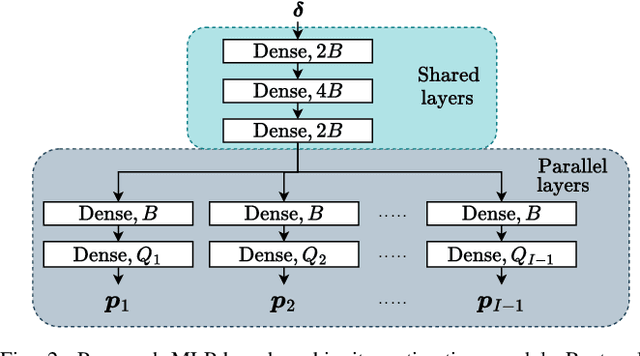

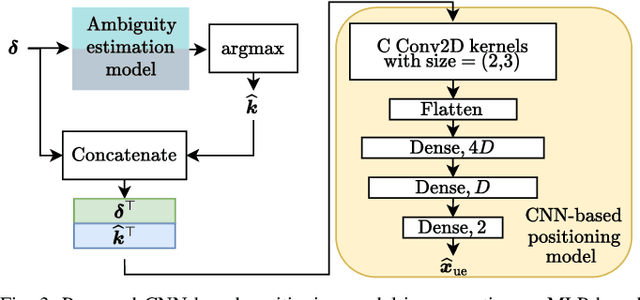

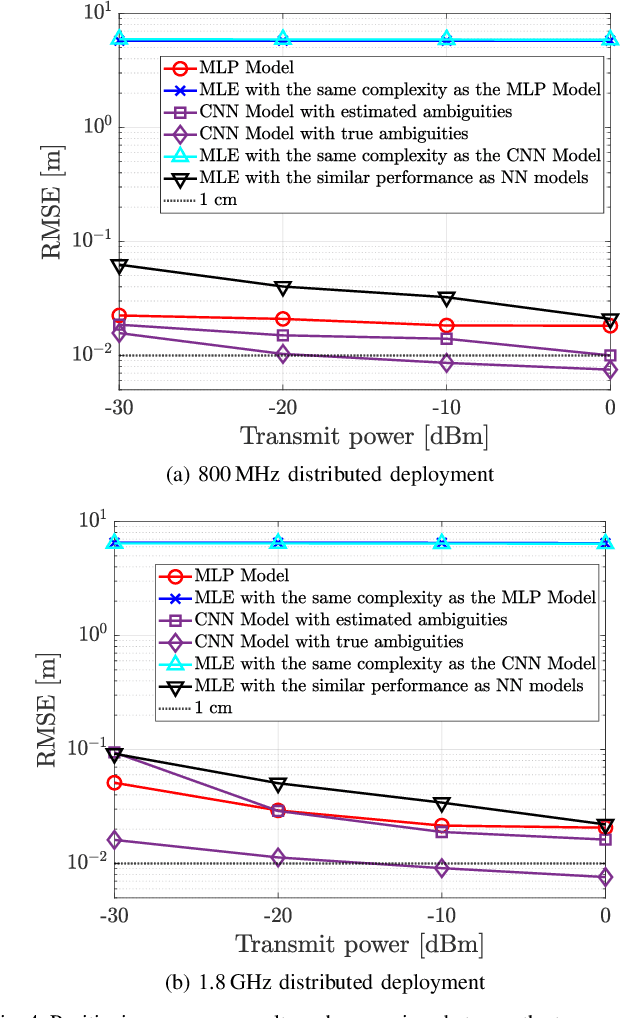

This paper investigates uplink carrier phase positioning (CPP) in a cell-free (CF) or distributed antenna system context, assuming a challenging case where only phase measurements are utilized as observations. In general, CPP can achieve sub-meter to centimeter-level accuracy but is challenged by the integer ambiguity problem. In this work, we propose two deep learning approaches for phase-only positioning, overcoming the integer ambiguity challenge. The first one directly uses phase measurements, while the second one first estimates integer ambiguities and then integrates them with phase measurements for improved accuracy. Our numerical results demonstrate that an inference complexity reduction of two to three orders of magnitude is achieved, compared to a maximum likelihood baseline solution, depending on the approach and parameter configuration. This emphasizes the potential of the developed deep learning solutions for efficient and precise positioning in future CF 6G systems.
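As a brief illustration of the integer ambiguity mentioned above, a noise-free, single-link phase model can be written as follows. The symbols $d$ (true range), $\lambda$ (carrier wavelength), $\phi$ (measured wrapped phase), and $N$ (unknown number of full cycles) are notation assumed here for illustration and are not taken from the paper.

$$
d = \lambda\left(N + \frac{\phi}{2\pi}\right), \qquad N \in \mathbb{Z}_{\ge 0}, \quad \phi \in [0, 2\pi).
$$

The measured phase fixes the range only up to integer multiples of $\lambda$, so every value of $N$ yields a candidate range consistent with the same $\phi$. Resolving $N$, explicitly or implicitly, is what turns a phase measurement into a position.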

Zero-Shot Detection of LLM-Generated Code via Approximated Task Conditioning

Jun 06, 2025

Detecting Large Language Model (LLM)-generated code is a growing challenge with implications for security, intellectual property, and academic integrity. We investigate the role of conditional probability distributions in improving zero-shot LLM-generated code detection, when considering both the code and the corresponding task prompt that generated it. Our key insight is that when evaluating the probability distribution of code tokens using an LLM, there is little difference between LLM-generated and human-written code. However, conditioning on the task reveals notable differences. This contrasts with natural language text, where differences exist even in the unconditional distributions. Leveraging this, we propose a novel zero-shot detection approach that approximates the original task used to generate a given code snippet and then evaluates token-level entropy under the approximated task conditioning (ATC). We further provide a mathematical intuition, contextualizing our method relative to previous approaches. ATC requires neither access to the generator LLM nor the original task prompts, making it practical for real-world applications. To the best of our knowledge, it achieves state-of-the-art results across benchmarks and generalizes across programming languages, including Python, CPP, and Java. Our findings highlight the importance of task-level conditioning for LLM-generated code detection. The supplementary materials and code are available at https://github.com/maorash/ATC, including the dataset gathering implementation, to foster further research in this area.
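The token-level entropy that the abstract refers to is a standard quantity, and a minimal sketch of computing it is given below. This is not the ATC implementation. The next-token distributions here are made-up inputs, whereas in practice they would come from an LLM conditioned on the approximated task and the preceding code tokens.

```python
import math


def token_entropy(probs):
    """Shannon entropy (in nats) of one next-token probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def mean_token_entropy(distributions):
    """Average entropy over the per-token distributions of a code snippet."""
    return sum(token_entropy(d) for d in distributions) / len(distributions)


# Hypothetical next-token distributions for a two-token snippet.
confident = [1.0, 0.0, 0.0, 0.0]       # the model is certain of the next token
uncertain = [0.25, 0.25, 0.25, 0.25]   # the model is maximally unsure
score = mean_token_entropy([confident, uncertain])
```

A detector along these lines would compare such a score against a threshold. The threshold and the direction of the comparison are left out here because they depend on the benchmark and the scoring model.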
