Zhongle Xie

CtrlCoT: Dual-Granularity Chain-of-Thought Compression for Controllable Reasoning

Jan 28, 2026

Abstract:Chain-of-thought (CoT) prompting improves LLM reasoning but incurs high latency and memory cost due to verbose traces, motivating CoT compression that preserves correctness. Existing methods either shorten CoTs at the semantic level, which is often conservative, or prune tokens aggressively, which can miss task-critical cues and degrade accuracy. Moreover, combining the two is non-trivial due to sequential dependency, task-agnostic pruning, and distribution mismatch. We propose CtrlCoT, a dual-granularity CoT compression framework that harmonizes semantic abstraction and token-level pruning through three components: Hierarchical Reasoning Abstraction produces CoTs at multiple semantic granularities; Logic-Preserving Distillation trains a logic-aware pruner to retain indispensable reasoning cues (e.g., numbers and operators) across pruning ratios; and Distribution-Alignment Generation aligns compressed traces with fluent inference-time reasoning styles to avoid fragmentation. On MATH-500 with Qwen2.5-7B-Instruct, CtrlCoT uses 30.7% fewer tokens while scoring 7.6 percentage points higher than the strongest baseline, demonstrating more efficient and reliable reasoning. Our code will be publicly available at https://github.com/fanzhenxuan/Ctrl-CoT.

SafeLoad: Efficient Admission Control Framework for Identifying Memory-Overloading Queries in Cloud Data Warehouses

Jan 05, 2026

Abstract:Memory overload is a common form of resource exhaustion in cloud data warehouses. When database queries fail due to memory overload, the failure not only wastes critical resources such as CPU time but also disrupts the execution of core business processes, as memory-overloading (MO) queries are typically part of complex workflows. If such queries are identified in advance and scheduled to memory-rich serverless clusters, resource wastage and query execution failure can be prevented. Therefore, cloud data warehouses need an admission control framework with high prediction precision, interpretability, efficiency, and adaptability to effectively identify MO queries. However, existing admission control frameworks primarily focus on scenarios like SLA satisfaction and resource isolation, with limited precision in identifying MO queries. Moreover, there is a lack of publicly available MO-labeled datasets with workloads for training and benchmarking. To tackle these challenges, we propose SafeLoad, the first query admission control framework specifically designed to identify MO queries. Alongside it, we release SafeBench, an open-source, industrial-scale benchmark for this task, which includes 150 million real queries. SafeLoad first filters out memory-safe queries using an interpretable discriminative rule. It then applies a hybrid architecture that integrates both a global model and cluster-level models, supplemented by a misprediction correction module to identify MO queries. Additionally, a self-tuning quota management mechanism dynamically adjusts prediction quotas per cluster to improve precision. Experimental results show that SafeLoad achieves state-of-the-art prediction performance with low online and offline time overhead. Specifically, SafeLoad improves precision by up to 66% over the best baseline and reduces wasted CPU time by up to 8.09x compared to scenarios without SafeLoad.

HAKES: Scalable Vector Database for Embedding Search Service

May 18, 2025

Abstract:Modern deep learning models capture the semantics of complex data by transforming them into high-dimensional embedding vectors. Emerging applications, such as retrieval-augmented generation, use approximate nearest neighbor (ANN) search in the embedding vector space to find similar data. Existing vector databases provide indexes for efficient ANN searches, with graph-based indexes being the most popular due to their low latency and high recall in real-world high-dimensional datasets. However, these indexes are costly to build, suffer from significant contention under concurrent read-write workloads, and scale poorly to multiple servers. Our goal is to build a vector database that achieves high throughput and high recall under concurrent read-write workloads. To this end, we first propose an ANN index with an explicit two-stage design combining a fast filter stage with highly compressed vectors and a refine stage to ensure recall, and we devise a novel lightweight machine learning technique to fine-tune the index parameters. We introduce an early termination check to dynamically adapt the search process for each query. Next, we add support for writes while maintaining search performance by decoupling the management of the learned parameters. Finally, we design HAKES, a distributed vector database that serves the new index in a disaggregated architecture. We evaluate our index and system against 12 state-of-the-art indexes and three distributed vector databases, using high-dimensional embedding datasets generated by deep learning models. The experimental results show that our index outperforms index baselines in the high recall region and under concurrent read-write workloads. Furthermore, HAKES is scalable and achieves up to 16x higher throughput than the baselines. The HAKES project is open-sourced at https://www.comp.nus.edu.sg/~dbsystem/hakes/.
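The two-stage filter-and-refine idea in the abstract above can be illustrated with a minimal sketch. This is not the HAKES index: the "compression" here simply keeps the first few dimensions of each vector as a crude stand-in for the learned, highly compressed representation, and the names `compress`, `two_stage_search`, `keep_dims`, and `n_candidates` are hypothetical. It only shows the control flow of a cheap filter over compressed vectors followed by an exact re-ranking of the surviving candidates.

```python
import heapq
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def compress(vec, keep_dims):
    """Assumed stand-in for compression: keep only the first keep_dims dimensions."""
    return vec[:keep_dims]


def two_stage_search(query, vectors, compressed, keep_dims, n_candidates, k):
    """Return indices of the k nearest vectors using filter-then-refine."""
    # Filter stage: rank all vectors by distance in the compressed space.
    q_small = compress(query, keep_dims)
    candidates = heapq.nsmallest(
        n_candidates,
        range(len(vectors)),
        key=lambda i: sq_dist(q_small, compressed[i]),
    )
    # Refine stage: re-rank only the candidates with full-precision vectors.
    return heapq.nsmallest(
        k, candidates, key=lambda i: sq_dist(query, vectors[i])
    )


# Toy data: 200 random 16-dimensional vectors.
random.seed(0)
vectors = [[random.gauss(0, 1) for _ in range(16)] for _ in range(200)]
compressed = [compress(v, 4) for v in vectors]

# Query with a stored vector, so its own index should come back first.
top = two_stage_search(
    vectors[7], vectors, compressed, keep_dims=4, n_candidates=20, k=3
)
print(top)
```

Raising `n_candidates` trades filter-stage speed for recall; when it equals the dataset size, the result matches exact brute-force search.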

FloE: On-the-Fly MoE Inference on Memory-constrained GPU

May 12, 2025

Abstract:With the widespread adoption of Mixture-of-Experts (MoE) models, there is a growing demand for efficient inference on memory-constrained devices. While offloading expert parameters to CPU memory and loading activated experts on demand has emerged as a potential solution, the large size of activated experts overburdens the limited PCIe bandwidth, hindering the effectiveness in latency-sensitive scenarios. To mitigate this, we propose FloE, an on-the-fly MoE inference system on memory-constrained GPUs. FloE is built on the insight that there exists substantial untapped redundancy within sparsely activated experts. It employs various compression techniques on the expert's internal parameter matrices to reduce the data movement load, combined with low-cost sparse prediction, achieving perceptible inference acceleration in wall-clock time on resource-constrained devices. Empirically, FloE achieves a 9.3x compression of parameters per expert in Mixtral-8x7B; enables deployment on a GPU with only 11GB VRAM, reducing the memory footprint by up to 8.5x; and delivers a 48.7x inference speedup compared to DeepSpeed-MII on a single GeForce RTX 3090, all with only a 4.4% to 7.6% average performance degradation.

FloE: On-the-Fly MoE Inference

May 09, 2025

Abstract:With the widespread adoption of Mixture-of-Experts (MoE) models, there is a growing demand for efficient inference on memory-constrained devices. While offloading expert parameters to CPU memory and loading activated experts on demand has emerged as a potential solution, the large size of activated experts overburdens the limited PCIe bandwidth, hindering the effectiveness in latency-sensitive scenarios. To mitigate this, we propose FloE, an on-the-fly MoE inference system on memory-constrained GPUs. FloE is built on the insight that there exists substantial untapped redundancy within sparsely activated experts. It employs various compression techniques on the expert's internal parameter matrices to reduce the data movement load, combined with low-cost sparse prediction, achieving perceptible inference acceleration in wall-clock time on resource-constrained devices. Empirically, FloE achieves a 9.3x compression of parameters per expert in Mixtral-8x7B; enables deployment on a GPU with only 11GB VRAM, reducing the memory footprint by up to 8.5x; and delivers a 48.7x inference speedup compared to DeepSpeed-MII on a single GeForce RTX 3090.

CHASe: Client Heterogeneity-Aware Data Selection for Effective Federated Active Learning

Apr 24, 2025

Abstract:Active learning (AL) reduces human annotation costs for machine learning systems by strategically selecting the most informative unlabeled data for annotation, but performing it individually may still be insufficient due to restricted data diversity and annotation budget. Federated Active Learning (FAL) addresses this by facilitating collaborative data selection and model training, while preserving the confidentiality of raw data samples. Yet, existing FAL methods fail to account for the heterogeneity of data distribution across clients and the associated fluctuations in global and local model parameters, adversely affecting model accuracy. To overcome these challenges, we propose CHASe (Client Heterogeneity-Aware Data Selection), specifically designed for FAL. CHASe focuses on identifying those unlabeled samples with high epistemic variations (EVs), which notably oscillate around the decision boundaries during training. To achieve both effectiveness and efficiency, CHASe encompasses techniques for 1) tracking EVs by analyzing inference inconsistencies across training epochs, 2) calibrating decision boundaries of inaccurate models with a new alignment loss, and 3) enhancing data selection efficiency via a data freeze and awaken mechanism with subset sampling. Experiments show that CHASe surpasses various established baselines in terms of effectiveness and efficiency, validated across diverse datasets, model complexities, and heterogeneous federation settings.

A Comprehensive Study of Shapley Value in Data Analytics

Dec 03, 2024

Abstract:In recent years, Shapley value (SV), a solution concept from cooperative game theory, has found numerous applications in data analytics (DA). This paper provides the first comprehensive study of SV used throughout the DA workflow, which involves three main steps: data fabric, data exploration, and result reporting. We summarize the existing versatile forms of SV used in these steps under a unified definition and clarify the essential functionalities that SV can provide for data scientists. We categorize the existing work in this field based on the technical challenges it tackles, which include computation efficiency, approximation error, privacy preservation, and appropriate interpretations. We discuss these challenges and analyze the corresponding solutions. We also implement SVBench, the first open-sourced benchmark for developing SV applications, and conduct experiments on six DA tasks to validate our analysis and discussions. Based on the qualitative and quantitative results, we identify the limitations of current efforts for applying SV to DA and highlight the directions of future research and engineering.
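For readers unfamiliar with the Shapley value mentioned above, the following is a minimal sketch of the standard exact definition from cooperative game theory: each player's value is its marginal contribution averaged over all coalitions, weighted by coalition size. It is unrelated to SVBench's interface; the function name `shapley_values` and the toy utility are assumptions made for illustration. The enumeration is exponential in the number of players, which reflects the computation-efficiency challenge the abstract names.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, utility):
    """Exact Shapley values by enumerating every coalition of the other players."""
    n = len(players)
    values = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size: |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of p when joining coalition s.
                total += weight * (utility(s | {p}) - utility(s))
        values[p] = total
    return values


def toy_utility(coalition):
    """Hypothetical utility: additive scores plus a bonus when a and b cooperate."""
    base = {"a": 1.0, "b": 2.0, "c": 3.0}
    score = sum(base[x] for x in coalition)
    if "a" in coalition and "b" in coalition:
        score += 1.0
    return score


phi = shapley_values(["a", "b", "c"], toy_utility)
print(phi)
```

In this toy game the cooperation bonus is split evenly between `a` and `b`, and the values sum to the utility of the full coalition (the efficiency property).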

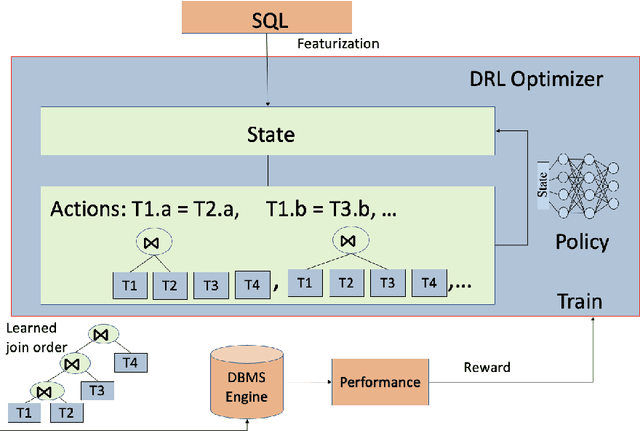

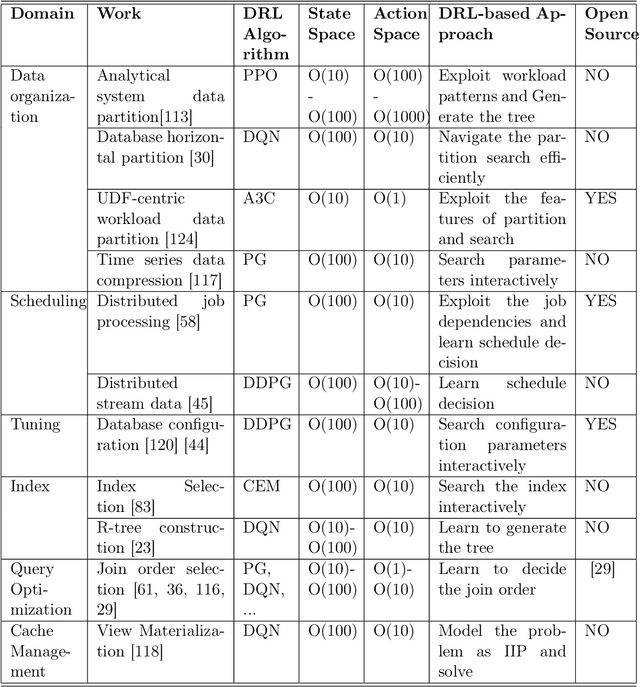

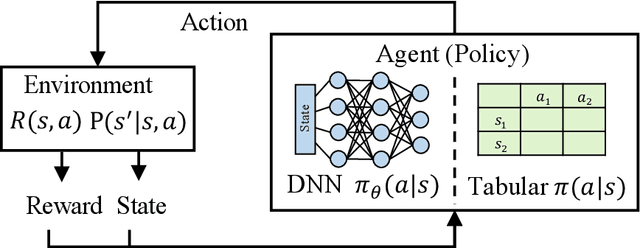

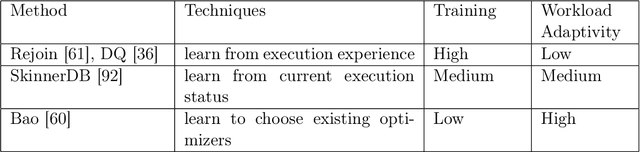

A Survey on Deep Reinforcement Learning for Data Processing and Analytics

Aug 11, 2021

Abstract:Data processing and analytics are fundamental and pervasive. Algorithms play a vital role in data processing and analytics, where many algorithm designs have incorporated heuristics and general rules from human knowledge and experience to improve their effectiveness. Recently, reinforcement learning, deep reinforcement learning (DRL) in particular, has been increasingly explored and exploited in many areas because it can learn better strategies in the complicated environments it interacts with than statically designed algorithms. Motivated by this trend, we provide a comprehensive review of recent works focusing on utilizing deep reinforcement learning to improve data processing and analytics. First, we present an introduction to key concepts, theories, and methods in deep reinforcement learning. Next, we discuss deep reinforcement learning deployment on database systems, facilitating data processing and analytics in various aspects, including data organization, scheduling, tuning, and indexing. Then, we survey the application of deep reinforcement learning in data processing and analytics, ranging from data preparation and natural language interfaces to healthcare, fintech, and other domains. Finally, we discuss important open challenges and future research directions of using deep reinforcement learning in data processing and analytics.