Zhitian Zhang

TabReason: A Reinforcement Learning-Enhanced Reasoning LLM for Explainable Tabular Data Prediction

May 29, 2025

Abstract:Predictive modeling on tabular data is the cornerstone of many real-world applications. Although gradient boosting machines and some recent deep models achieve strong performance on tabular data, they often lack interpretability. On the other hand, large language models (LLMs) have demonstrated powerful capabilities to generate human-like reasoning and explanations, but still underperform on tabular data prediction. In this paper, we propose a new approach that leverages reasoning-based LLMs, trained using reinforcement learning, to perform more accurate and explainable predictions on tabular data. Our method introduces custom reward functions that guide the model not only toward high prediction accuracy but also toward human-understandable reasons for its predictions. Experimental results show that our model achieves promising performance on financial benchmark datasets, outperforming most existing LLMs.

Test-Time Learning for Large Language Models

May 27, 2025

Abstract:While Large Language Models (LLMs) have exhibited remarkable emergent capabilities through extensive pre-training, they still face critical limitations in generalizing to specialized domains and handling diverse linguistic variations, known as distribution shifts. In this paper, we propose a Test-Time Learning (TTL) paradigm for LLMs, namely TLM, which dynamically adapts LLMs to target domains using only unlabeled test data during testing. Specifically, we first provide empirical evidence and theoretical insights to reveal that more accurate predictions from LLMs can be achieved by minimizing the input perplexity of the unlabeled test data. Based on this insight, we formulate the Test-Time Learning process of LLMs as input perplexity minimization, enabling self-supervised enhancement of LLM performance. Furthermore, we observe that high-perplexity samples tend to be more informative for model optimization. Accordingly, we introduce a Sample Efficient Learning Strategy that actively selects and emphasizes these high-perplexity samples for test-time updates. Lastly, to mitigate catastrophic forgetting and ensure adaptation stability, we adopt Low-Rank Adaptation (LoRA) instead of full-parameter optimization, which allows lightweight model updates while preserving more original knowledge from the model. We introduce the AdaptEval benchmark for TTL and demonstrate through experiments that TLM improves performance by at least 20% compared to original LLMs on domain knowledge adaptation.
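The two quantities this abstract relies on, input perplexity and the selection of high-perplexity samples, can be illustrated with a minimal sketch. It uses the standard definition of perplexity as the exponential of the negative mean token log-probability. The function names, the toy log-probabilities, and the fixed `threshold` are assumptions made for illustration; the abstract does not specify how TLM scores or weights samples.

```python
import math


def perplexity(token_logprobs):
    """Perplexity = exp(-mean log-probability), using natural-log token scores."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def select_high_perplexity(samples, threshold):
    """Keep samples whose input perplexity exceeds `threshold`.

    `threshold` is a hypothetical cut-off chosen for this sketch.
    Returns (perplexity, name) pairs sorted from highest to lowest perplexity.
    """
    scored = [(perplexity(logprobs), name) for name, logprobs in samples.items()]
    kept = [(ppl, name) for ppl, name in scored if ppl > threshold]
    return sorted(kept, reverse=True)


# Toy per-token log-probabilities standing in for a model's scores on test inputs.
samples = {
    "in_domain": [math.log(0.5)] * 4,  # model is fairly confident
    "shifted": [math.log(0.1)] * 4,    # model is much less confident
}
selected = select_high_perplexity(samples, threshold=5.0)
```

In an actual test-time update, the selected samples would drive a gradient step that lowers their input perplexity, with the update restricted to LoRA parameters as the abstract describes; that training loop is omitted here.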

Predicting Long-Term Human Behaviors in Discrete Representations via Physics-Guided Diffusion

May 29, 2024

Abstract:Long-term human trajectory prediction is a challenging yet critical task in robotics and autonomous systems. Prior work that studied how to predict accurate short-term human trajectories with only unimodal features often failed in long-term prediction. Reinforcement learning provides a good solution for learning human long-term behaviors but can suffer from challenges in data efficiency and optimization. In this work, we propose a long-term human trajectory forecasting framework that leverages a guided diffusion model to generate diverse long-term human behaviors in a high-level latent action space, obtained via a hierarchical action quantization scheme using a VQ-VAE to discretize continuous trajectories and the available context. The latent actions are predicted by our guided diffusion model, which uses physics-inspired guidance at test time to constrain generated multimodal action distributions. Specifically, we use reachability analysis during the reverse denoising process to guide the diffusion steps toward physically feasible latent actions. We evaluate our framework on two publicly available human trajectory forecasting datasets: SFU-Store-Nav and JRDB, and extensive experimental results show that our framework achieves superior performance in long-term human trajectory forecasting.

Good Things Come in Trees: Emotion and Context Aware Behaviour Trees for Ethical Robotic Decision-Making

May 10, 2024

Abstract:Emotions guide our decision-making process and yet have been little explored in practical ethical decision-making scenarios. In this challenge, we explore emotions and how they can influence ethical decision making in a home robot context: which fetch requests should a robot execute, and why or why not? We discuss, in particular, two aspects of emotion: (1) somatic markers: objects to be retrieved are tagged as negative (dangerous, e.g. knives, or mind-altering, e.g. medicine with overdose potential), providing a quick heuristic for where to focus attention to avoid the classic Frame Problem of artificial intelligence; and (2) emotion inference: users' valence and arousal levels are taken into account in defining how and when a robot should respond to a human's requests, e.g. to carefully consider giving dangerous items to users experiencing intense emotions. Our emotion-based approach builds a foundation for the primary consideration of Safety, and is complemented by policies that support overriding based on Context (e.g. age of user, allergies) and Privacy (e.g. administrator settings). Transparency is another key aspect of our solution. Our solution is defined using behaviour trees, towards an implementable design that can provide reasoning information in real time.
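As a rough illustration of how somatic markers, emotion inference, and a context policy might combine, the sketch below uses a plain priority-ordered function rather than a full behaviour-tree implementation. Every name in it (`Request`, `NEGATIVE_MARKERS`, `AROUSAL_LIMIT`, the action labels) is hypothetical and not taken from the paper. Each decision returns a reason string, reflecting the transparency goal.

```python
from dataclasses import dataclass


@dataclass
class Request:
    item: str
    arousal: float               # assumed scale: 0.0 (calm) to 1.0 (intense)
    user_is_child: bool = False  # example of a Context attribute


# Somatic markers: items tagged as negative (hypothetical tag set).
NEGATIVE_MARKERS = {"knife", "medicine"}
# Assumed arousal level above which the robot treats emotion as intense.
AROUSAL_LIMIT = 0.7


def decide(req):
    """Return (action, reason), checking conditions in priority order."""
    if req.item not in NEGATIVE_MARKERS:
        return ("fetch", "item carries no negative marker")
    if req.user_is_child:
        return ("refuse", "context policy: negatively tagged item requested by a child")
    if req.arousal > AROUSAL_LIMIT:
        return ("confirm_first", "negatively tagged item and user shows intense emotion")
    return ("fetch", "negatively tagged item, but user appears calm")


action, reason = decide(Request(item="knife", arousal=0.9))
```

The early return for untagged items mirrors the heuristic role of somatic markers: the more detailed emotion and context checks only run for items flagged as negative.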

SFU-Store-Nav: A Multimodal Dataset for Indoor Human Navigation

Oct 28, 2020

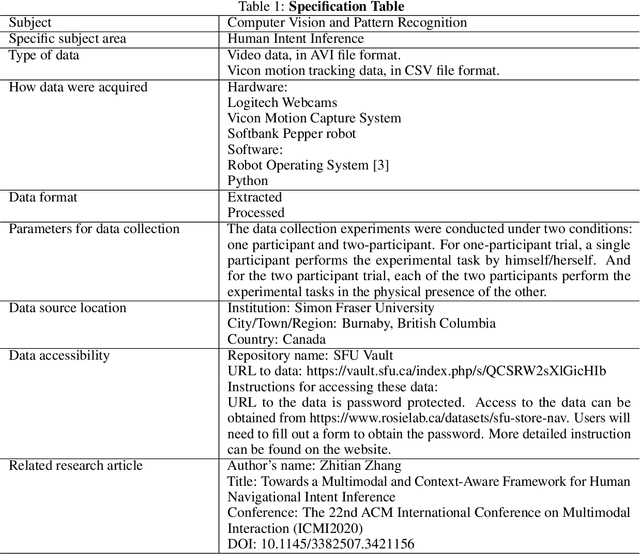

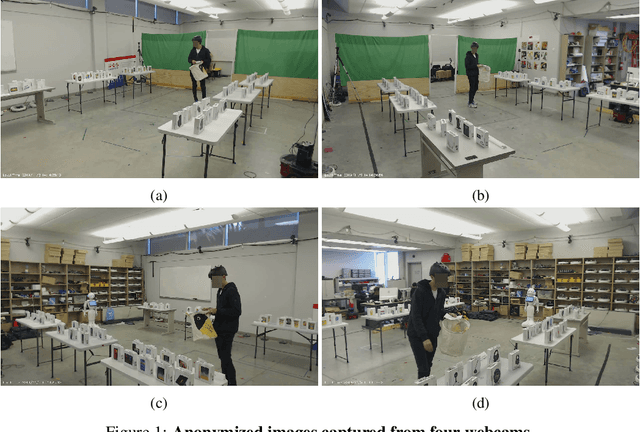

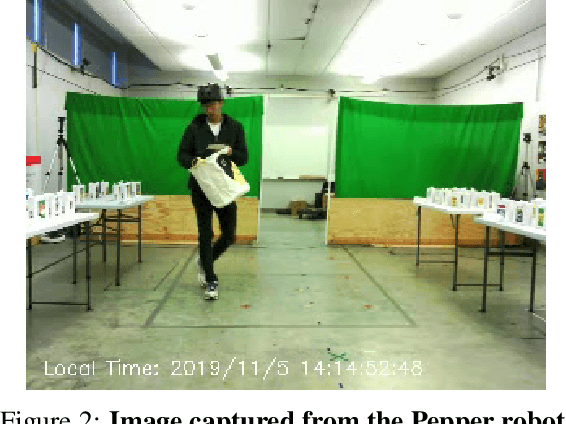

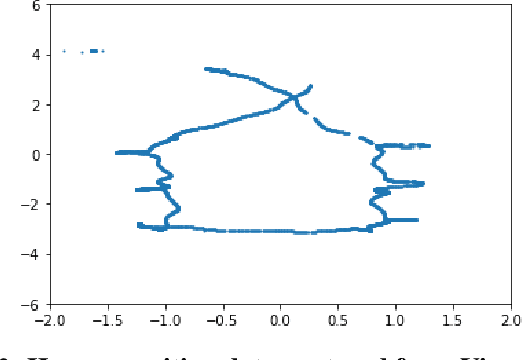

Abstract:This article describes a dataset collected in a set of experiments involving human participants and a robot. The experiments were conducted in the computing science robotics lab at Simon Fraser University, Burnaby, BC, Canada, with the aim of gathering data containing common gestures, movements, and other behaviours that may indicate humans' navigational intent relevant for autonomous robot navigation. The experiment simulates a shopping scenario in which human participants come in to pick up items from their shopping lists and interact with a Pepper robot that is programmed to help them. We collected visual data and motion capture data from 108 human participants. The visual data contains live recordings of the experiments, and the motion capture data contains the position and orientation of the human participants in world coordinates. This dataset could be valuable for researchers in the robotics, machine learning, and computer vision communities.
