Zaifan Jiang

DivNet: Diversity-Aware Self-Correcting Sequential Recommendation Networks

Nov 01, 2024

Abstract: As the last stage of a typical recommendation system, collective recommendation aims to give the final touches to the recommended items and their layout so as to optimize overall objectives such as diversity and whole-page relevance. In practice, however, the interaction dynamics among the recommended items, their visual appearances and metadata such as specifications are often too complex to be captured by experts' heuristics or simple models. To address this issue, we propose diversity-aware self-correcting sequential recommendation networks (DivNet) that estimate utility by capturing the complex interactions among sequential items while simultaneously diversifying recommendations. Experiments in both offline and online settings demonstrate that DivNet achieves better results than baselines with or without collective recommendation.

Preference as Reward, Maximum Preference Optimization with Importance Sampling

Jan 08, 2024

Abstract: Preference learning is a key technology for aligning language models with human values. Reinforcement Learning from Human Feedback (RLHF) is a model-based algorithm for preference learning: it first fits a reward model to preference scores and then optimizes the generating policy with the on-policy PPO algorithm to maximize the reward. The RLHF pipeline is complex, time-consuming and unstable. Direct Preference Optimization (DPO) uses an off-policy algorithm to optimize the generating policy directly and eliminates the need for a reward model, which makes it data-efficient and stable. DPO uses the Bradley-Terry model and a log-loss, which leads to over-fitting to the preference data at the expense of ignoring the KL-regularization term when preferences are deterministic. IPO uses a root-finding MSE loss to solve this problem. In this paper, we show that although IPO fixes the problem when preferences are deterministic, both DPO and IPO fail on the KL-regularization term because the support of the preference distribution is not equal to that of the reference distribution. We then design a simple and intuitive off-policy preference optimization algorithm from an importance sampling view, which we call Maximum Preference Optimization (MPO), and add off-policy KL-regularization terms that make KL-regularization truly effective. The objective of MPO resembles RLHF's objective and, like IPO, MPO is off-policy, so MPO attains the best of both worlds. To simplify the learning process and reduce memory usage, MPO eliminates the need for both a reward model and a reference policy.
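The contrast between the DPO log-loss and the IPO squared loss can be illustrated with a minimal Python sketch. It uses the standard published per-pair forms of the two losses, written in terms of the log-ratio margin h between the preferred and rejected responses. The function names and the default values of `beta` and `tau` are illustrative assumptions, and the MPO objective itself is not reproduced because the abstract does not state it.

```python
import math


def log_ratio_margin(logp_w, logp_l, ref_logp_w, ref_logp_l):
    """h = log(pi(y_w)/pi_ref(y_w)) - log(pi(y_l)/pi_ref(y_l)).

    Inputs are sequence log-probabilities of the preferred (w) and
    rejected (l) responses under the policy and the reference model.
    """
    return (logp_w - ref_logp_w) - (logp_l - ref_logp_l)


def dpo_loss(h, beta=0.1):
    """DPO per-pair loss: -log(sigmoid(beta * h)).

    Computed as a numerically stable softplus of -beta * h.
    beta=0.1 is an illustrative default, not a value from the source.
    """
    x = -beta * h
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def ipo_loss(h, tau=0.1):
    """IPO per-pair loss: (h - 1/(2*tau))**2.

    The squared loss has a finite target margin 1/(2*tau).
    tau=0.1 is an illustrative default, not a value from the source.
    """
    return (h - 1.0 / (2.0 * tau)) ** 2


if __name__ == "__main__":
    for h in (0.0, 5.0, 10.0, 100.0):
        print(h, dpo_loss(h), ipo_loss(h))
```

In this sketch the DPO loss keeps decreasing as the margin grows, so nothing stops the margin from drifting arbitrarily far from the reference policy, whereas the IPO loss is minimized at the finite margin 1/(2*tau) and penalizes overshooting it.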

Tile Networks: Learning Optimal Geometric Layout for Whole-page Recommendation

Mar 03, 2023

Abstract: Finding optimal configurations in a geometric space is a key challenge in many technological disciplines. Current approaches rely heavily on human domain expertise and are difficult to scale. In this paper we show that it is possible to solve configuration optimization problems for whole-page recommendation using reinforcement learning. The proposed Tile Networks is a neural architecture that optimizes 2D geometric configurations by arranging items in proper positions. Empirical results on a real dataset demonstrate its superior performance compared to traditional learning-to-rank approaches and recent deep models.

Model-based Constrained MDP for Budget Allocation in Sequential Incentive Marketing

Mar 02, 2023

Abstract: Sequential incentive marketing is an important approach for online businesses to acquire customers, increase loyalty and boost sales. How to effectively allocate the incentives so as to maximize the return (e.g., business objectives) under the budget constraint, however, is less studied in the literature. This problem is technically challenging because 1) the allocation strategy has to be learned using historically logged data, which is counterfactual in nature, and 2) both the optimality and feasibility (i.e., that cost cannot exceed budget) need to be assessed before the strategy is deployed to online systems. In this paper, we formulate the problem as a constrained Markov decision process (CMDP). To solve the CMDP problem with logged counterfactual data, we propose an efficient learning algorithm which combines bisection search and model-based planning. First, the CMDP is converted into its dual using Lagrangian relaxation, which is proved to be monotonic with respect to the dual variable. Furthermore, we show that the dual problem can be solved by policy learning, with the optimal dual variable being found efficiently via bisection search (i.e., by taking advantage of the monotonicity). Lastly, we show that model-based planning can be used to effectively accelerate the joint optimization process without retraining the policy for every dual variable. Empirical results on synthetic and real marketing datasets confirm the effectiveness of our methods.
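The role of bisection search over the dual variable can be illustrated with a toy Python sketch. It is not the paper's algorithm: policy learning and model-based planning are replaced by a brute-force choice among a handful of hypothetical policies, each summarized by an assumed `(reward, cost)` pair. The sketch relies on the fact that the cost of the Lagrangian-optimal policy does not increase as the multiplier `lam` grows, and assumes the upper bound `lam_hi` is large enough to yield a feasible policy.

```python
def best_response(policies, lam):
    """Return the (reward, cost) pair maximizing reward - lam * cost.

    Ties are broken in favor of the lower-cost policy.
    """
    return max(policies, key=lambda p: (p[0] - lam * p[1], -p[1]))


def bisect_dual(policies, budget, lam_hi=100.0, tol=1e-6):
    """Find (approximately) the smallest lam whose best response fits the budget.

    policies: list of hypothetical (reward, cost) summaries.
    lam_hi:   assumed upper bound at which the best response is feasible.
    """
    lam_lo = 0.0
    unconstrained = best_response(policies, lam_lo)
    if unconstrained[1] <= budget:
        # The budget constraint is inactive, so no penalty is needed.
        return lam_lo, unconstrained
    if best_response(policies, lam_hi)[1] > budget:
        raise ValueError("lam_hi is too small to reach a feasible policy")
    # Invariant: lam_lo is infeasible, lam_hi is feasible.
    while lam_hi - lam_lo > tol:
        mid = 0.5 * (lam_lo + lam_hi)
        if best_response(policies, mid)[1] <= budget:
            lam_hi = mid
        else:
            lam_lo = mid
    return lam_hi, best_response(policies, lam_hi)


if __name__ == "__main__":
    toy_policies = [(10.0, 8.0), (7.0, 4.0), (3.0, 1.0), (0.0, 0.0)]
    lam, policy = bisect_dual(toy_policies, budget=5.0)
    print(lam, policy)
```

Each bisection step halves the interval of candidate multipliers, so the number of best-response computations grows only logarithmically with the required precision.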

Multi-Scale User Behavior Network for Entire Space Multi-Task Learning

Aug 16, 2022

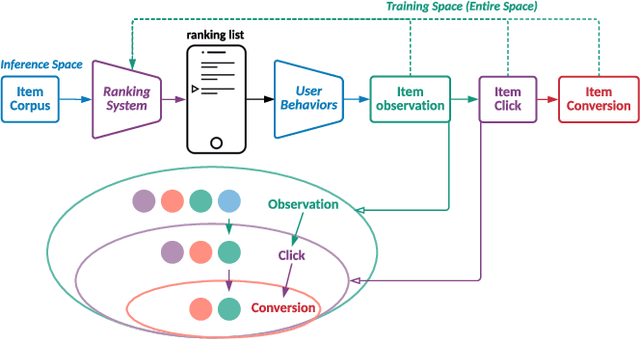

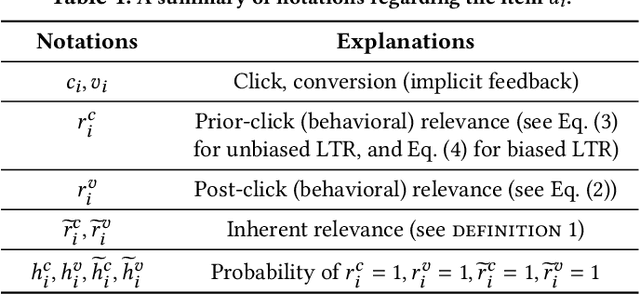

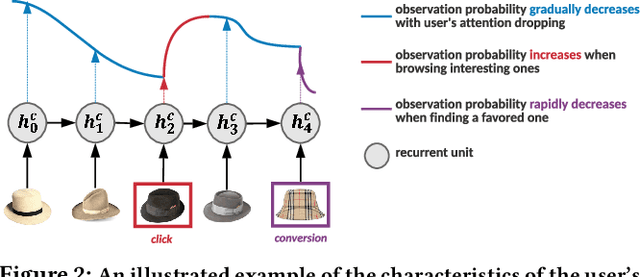

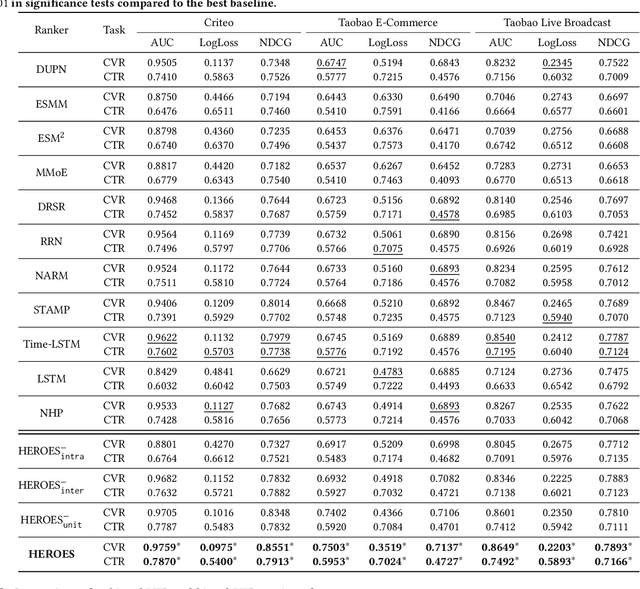

Abstract: Modelling the user's multiple behaviors is an essential part of modern e-commerce, and its most widely adopted application is the joint optimization of click-through rate (CTR) and conversion rate (CVR) predictions. Most existing methods overlook the effect of two key characteristics of the user's behaviors on each item list: (i) contextual dependence means that the user's behaviors on any item are not determined purely by the item itself but are also influenced by the user's previous behaviors (e.g., clicks, purchases) on other items in the same sequence; (ii) multiple time scales means that users are likely to click frequently but purchase periodically. To this end, we develop a new multi-scale user behavior network named Hierarchical rEcurrent Ranking On the Entire Space (HEROES), which incorporates the contextual information to estimate the user's multiple behaviors in a multi-scale fashion. Concretely, we introduce a hierarchical framework, where the lower layer models the user's engagement behaviors while the upper layer estimates the user's satisfaction behaviors. The proposed architecture can automatically learn a suitable time scale for each layer to capture the user's dynamic behavioral patterns. Besides the architecture, we also introduce the Hawkes process to form a novel recurrent unit which can not only encode the items' features in the context but also formulate the excitation or discouragement from the user's previous behaviors. We further show that HEROES can be extended to build unbiased ranking systems by combining it with survival analysis techniques. Extensive experiments on three large-scale industrial datasets demonstrate the superiority of our model over state-of-the-art methods.
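As background for the Hawkes process mentioned above, the following minimal Python sketch evaluates the intensity of a classical univariate Hawkes process with an exponential kernel. It is not the recurrent unit used in HEROES, which the abstract does not specify. The parameter names and default values (`mu`, `alpha`, `beta`) are illustrative assumptions, and only the excitation case (positive `alpha`) is shown.

```python
import math


def hawkes_intensity(t, event_times, mu=0.1, alpha=0.5, beta=1.0):
    """Intensity lambda(t) = mu + sum over past events of alpha * exp(-beta * (t - s)).

    mu:    baseline event rate (assumed value)
    alpha: jump in intensity caused by each past event (assumed value)
    beta:  exponential decay rate of that influence (assumed value)
    Only events strictly before time t contribute.
    """
    past = (s for s in event_times if s < t)
    return mu + sum(alpha * math.exp(-beta * (t - s)) for s in past)


if __name__ == "__main__":
    clicks = [0.5, 0.9]  # hypothetical timestamps of earlier user actions
    for t in (1.0, 2.0, 5.0):
        print(t, hawkes_intensity(t, clicks))
```

Each past event raises the intensity, and the effect fades with elapsed time, which is the self-exciting behavior that makes the process a natural fit for modelling how earlier clicks influence later ones.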

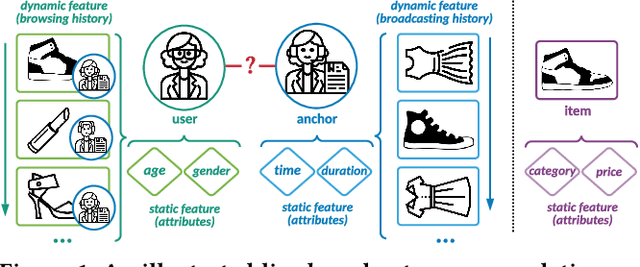

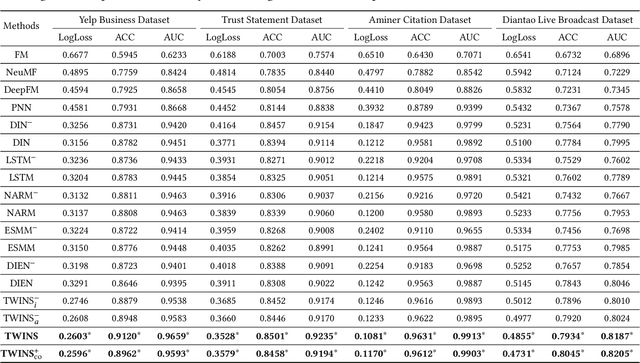

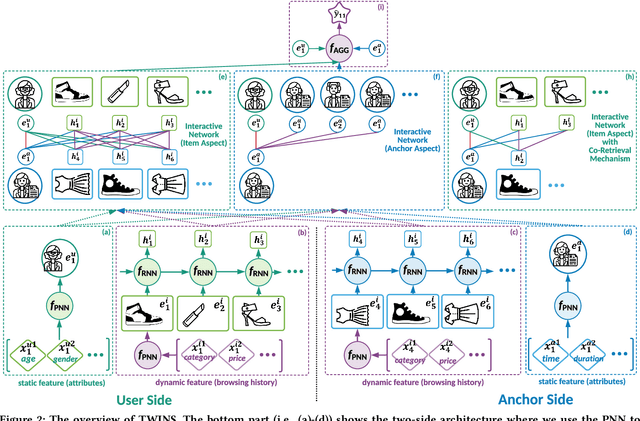

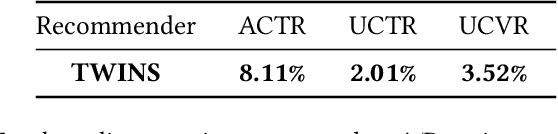

Who to Watch Next: Two-side Interactive Networks for Live Broadcast Recommendation

Feb 09, 2022

Abstract: With the prevalence of live broadcast business nowadays, a new type of recommendation service, called live broadcast recommendation, is widely used in many mobile e-commerce apps. Unlike classical item recommendation, live broadcast recommendation automatically recommends anchors to users instead of items, considering the interactions among triple-objects (i.e., users, anchors, items) rather than binary interactions between users and items. Existing methods based on binary objects, ranging from early matrix factorization to recently emerged deep learning, obtain objects' embeddings by mapping from pre-existing features. Directly applying these techniques would lead to limited performance, as they fail to encode collaborative signals among triple-objects. In this paper, we propose novel TWo-side Interactive NetworkS (TWINS) for live broadcast recommendation. In order to fully use both static and dynamic information on the user and anchor sides, we combine a product-based neural network with a recurrent neural network to learn the embedding of each object. In addition, instead of directly measuring the similarity, TWINS effectively injects the collaborative effects into the embedding process in an explicit manner by modeling interactive patterns between the user's browsing history and the anchor's broadcast history in both item and anchor aspects. Furthermore, we design a novel co-retrieval technique to select key items among massive historic records efficiently. Offline experiments on real large-scale data show the superior performance of the proposed TWINS compared to representative methods, and further results of online experiments on the Diantao App show that TWINS gains an average performance improvement of around 8% on the ACTR metric, 3% on the UCTR metric, and 3.5% on the UCVR metric.
