Yun-Fu Liu

ReasonPlan: Unified Scene Prediction and Decision Reasoning for Closed-loop Autonomous Driving

May 26, 2025

Abstract: Owing to their powerful vision-language reasoning and generalization abilities, multimodal large language models (MLLMs) have garnered significant attention in the field of end-to-end (E2E) autonomous driving. However, their application to closed-loop systems remains underexplored, and current MLLM-based methods have not shown clear superiority to mainstream E2E imitation learning approaches. In this work, we propose ReasonPlan, a novel MLLM fine-tuning framework designed for closed-loop driving through holistic reasoning with a self-supervised Next Scene Prediction task and a supervised Decision Chain-of-Thought process. This dual mechanism encourages the model to align visual representations with actionable driving context, while promoting interpretable and causally grounded decision making. We curate a planning-oriented decision reasoning dataset, namely PDR, comprising 210k diverse and high-quality samples. Our method outperforms the mainstream E2E imitation learning method by a large margin of 19% L2 and 16.1 driving score on the Bench2Drive benchmark. Furthermore, ReasonPlan demonstrates strong zero-shot generalization on the unseen DOS benchmark, highlighting its adaptability in handling zero-shot corner cases. Code and dataset will be available at https://github.com/Liuxueyi/ReasonPlan.

UncAD: Towards Safe End-to-end Autonomous Driving via Online Map Uncertainty

Apr 17, 2025

Abstract: End-to-end autonomous driving aims to produce planning trajectories directly from raw sensor data. Currently, most approaches integrate perception, prediction, and planning modules into a fully differentiable network, promising great scalability. However, these methods typically rely on deterministic modeling of online maps in the perception module for guiding or constraining vehicle planning, which may incorporate erroneous perception information and further compromise planning safety. To address this issue, we delve into the importance of online map uncertainty for enhancing autonomous driving safety and propose a novel paradigm named UncAD. Specifically, UncAD first estimates the uncertainty of the online map in the perception module. It then leverages the uncertainty to guide motion prediction and planning modules to produce multi-modal trajectories. Finally, to achieve safer autonomous driving, UncAD proposes an uncertainty-collision-aware planning selection strategy that uses the online map uncertainty to evaluate and select the best trajectory. In this study, we incorporate UncAD into various state-of-the-art (SOTA) end-to-end methods. Experiments on the nuScenes dataset show that integrating UncAD, with only a 1.9% increase in parameters, can reduce collision rates by up to 26% and the drivable area conflict rate by up to 42%. Code, pre-trained models, and demo videos can be accessed at https://github.com/pengxuanyang/UncAD.

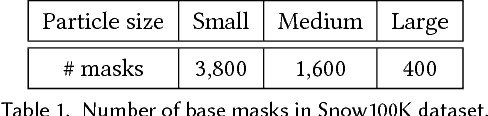

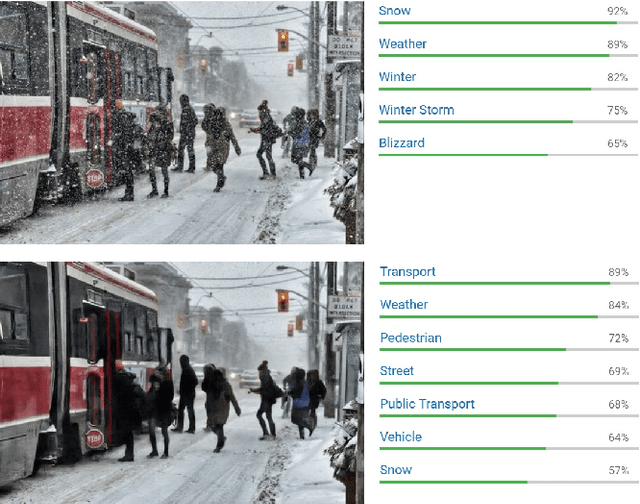

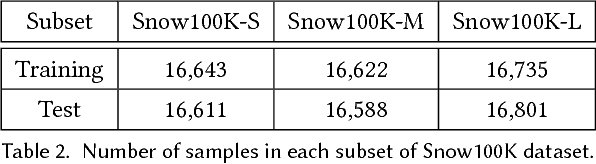

DesnowNet: Context-Aware Deep Network for Snow Removal

Aug 15, 2017

Abstract: Existing learning-based atmospheric particle-removal approaches such as those used for rainy and hazy images are designed with strong assumptions regarding spatial frequency, trajectory, and translucency. However, the removal of snow particles is more complicated because snow possesses the additional attributes of particle size and shape, and these attributes may vary within a single image. Currently, hand-crafted features are still the mainstream for snow removal, making significant generalization difficult to achieve. In response, we have designed a multistage network codenamed DesnowNet to deal in turn with the removal of translucent and opaque snow particles. We also differentiate snow into attributes of translucency and chromatic aberration for accurate estimation. Moreover, our approach individually estimates residual complements of the snow-free images to recover details obscured by opaque snow. Additionally, a multi-scale design is utilized throughout the entire network to model the diversity of snow. As demonstrated in experimental results, our approach outperforms state-of-the-art learning-based atmospheric phenomena removal methods and one semantic segmentation baseline on the proposed Snow100K dataset in both qualitative and quantitative comparisons. The results indicate our network would benefit applications involving computer vision and graphics.
