Yongpeng Jiang

Analyzing Key Objectives in Human-to-Robot Retargeting for Dexterous Manipulation

Jun 11, 2025

Abstract: Kinematic retargeting from human hands to robot hands is essential for transferring dexterity from humans to robots in manipulation teleoperation and imitation learning. However, due to mechanical differences between human and robot hands, completely reproducing human motions on robot hands is impossible. Existing works on retargeting incorporate various optimization objectives, focusing on different aspects of hand configuration. However, the lack of experimental comparative studies leaves the significance and effectiveness of these objectives unclear. This work aims to analyze these retargeting objectives for dexterous manipulation through extensive real-world comparative experiments. Specifically, we propose a comprehensive retargeting objective formulation that integrates intuitively crucial factors appearing in recent approaches. The significance of each factor is evaluated through experimental ablation studies on the full objective in kinematic posture retargeting and real-world teleoperated manipulation tasks. Experimental results and conclusions provide valuable insights for designing more accurate and effective retargeting algorithms for real-world dexterous manipulation.

Robust Model-Based In-Hand Manipulation with Integrated Real-Time Motion-Contact Planning and Tracking

May 08, 2025

Abstract: Robotic dexterous in-hand manipulation, where multiple fingers dynamically make and break contact, represents a step toward human-like dexterity in real-world robotic applications. Unlike learning-based approaches that rely on large-scale training or extensive data collection for each specific task, model-based methods offer an efficient alternative. Their online computing nature allows for ready application to new tasks without extensive retraining. However, due to the complexity of physical contacts, existing model-based methods encounter challenges in efficient online planning and handling modeling errors, which limit their practical applications. To advance the effectiveness and robustness of model-based contact-rich in-hand manipulation, this paper proposes a novel integrated framework that mitigates these limitations. The integration involves two key aspects: 1) integrated real-time planning and tracking achieved by a hierarchical structure; and 2) joint optimization of motions and contacts achieved by integrated motion-contact modeling. Specifically, at the high level, finger motion and contact force references are jointly generated using contact-implicit model predictive control. The high-level module facilitates real-time planning and disturbance recovery. At the low level, these integrated references are concurrently tracked using a hand force-motion model and actual tactile feedback. The low-level module compensates for modeling errors and enhances the robustness of manipulation. Extensive experiments demonstrate that our approach outperforms existing model-based methods in terms of accuracy, robustness, and real-time performance. Our method successfully completes five challenging tasks in real-world environments, even under appreciable external disturbances.

Robotic In-Hand Manipulation for Large-Range Precise Object Movement: The RGMC Champion Solution

Feb 11, 2025

Abstract: In-hand manipulation using multiple dexterous fingers is a critical robotic skill that can reduce the reliance on large arm motions, thereby saving space and energy. This letter focuses on in-grasp object movement, which refers to manipulating an object to a desired pose through only finger motions within a stable grasp. The key challenge lies in simultaneously achieving high precision and large-range movements while maintaining a constant stable grasp. To address this problem, we propose a simple and practical approach based on kinematic trajectory optimization with no need for pretraining or object geometries, which can be easily applied to novel objects in real-world scenarios. Adopting this approach, we won the championship for the in-hand manipulation track at the 9th Robotic Grasping and Manipulation Competition (RGMC) held at ICRA 2024. Implementation details, discussion, and further quantitative experimental results are presented in this letter, which aims to comprehensively evaluate our approach and share our key takeaways from the competition. Supplementary materials including video and code are available at https://rgmc-xl-team.github.io/ingrasp_manipulation .

A Unified Interaction Control Framework for Safe Robotic Ultrasound Scanning with Human-Intention-Aware Compliance

Nov 29, 2024

Abstract: The ultrasound scanning robot operates in environments where frequent human-robot interactions occur. Most existing control methods for ultrasound scanning address only one specific interaction situation or implement hard switches between controllers for different situations, which compromises both safety and efficiency. In this paper, we propose a unified interaction control framework for ultrasound scanning robots capable of handling all common interactions, distinguishing both human-intended and unintended types, and adapting with appropriate compliance. Specifically, the robot suspends or modulates its ongoing main task if the interaction is intended, e.g., when the doctor grasps the robot to lead the end effector actively. Furthermore, it can identify unintended interactions and avoid potential collision in the null space beforehand. Even if that collision has happened, it can become compliant with the collision in the null space and try to reduce its impact on the main task (where the scan is ongoing) kinematically and dynamically. The multiple situations are integrated into a unified controller with a smooth transition to deal with the interactions by exhibiting human-intention-aware compliance. Experimental results validate the framework's ability to cope with all common interactions including intended intervention and unintended collision in a collaborative carotid artery ultrasound scanning task.

Contact-Implicit Model Predictive Control for Dexterous In-hand Manipulation: A Long-Horizon and Robust Approach

Mar 11, 2024

Abstract: Dexterous in-hand manipulation is an essential skill in production and everyday life. Nevertheless, the highly stiff and mutable features of contacts limit real-time contact discovery and inference, which degrades the performance of model-based methods. Inspired by recent advancements in contact-rich locomotion and manipulation, this paper proposes a novel model-based approach to control dexterous in-hand manipulation and overcome the current limitations. An attractive feature of the proposed approach is that it allows the robot to robustly execute long-horizon in-hand manipulation without pre-defined contact sequences or separated planning procedures. Specifically, we design a contact-implicit model predictive controller at the high level to generate real-time contact plans, which are executed by the low-level tracking controller. Compared with other model-based methods, such a long-horizon feature enables replanning and robust execution of contact-rich motions to achieve large-displacement in-hand tasks more efficiently. Compared with existing learning-based methods, the proposed approach achieves dexterity and also generalizes to different objects without any pre-training. Detailed simulations and ablation studies demonstrate the efficiency and effectiveness of our method. It runs at 20 Hz on the 23-degree-of-freedom long-horizon in-hand object rotation task.

Generalizable whole-body global manipulation of deformable linear objects by dual-arm robot in 3-D constrained environments

Oct 15, 2023

Abstract: Constrained environments are common in practical applications of manipulating deformable linear objects (DLOs), where movements of both DLOs and robots should be constrained. This task is high-dimensional and highly constrained owing to the highly deformable DLOs, dual-arm robots with high degrees of freedom, and 3-D complex environments, which render global planning challenging. Furthermore, accurate DLO models needed by planning are often unavailable owing to their strong nonlinearity and diversity, resulting in unreliable planned paths. This article focuses on the global moving and shaping of DLOs in constrained environments by dual-arm robots. The main objectives are 1) to efficiently and accurately accomplish this task, and 2) to achieve generalizable and robust manipulation of various DLOs. To this end, we propose a complementary framework with whole-body planning and control using appropriate DLO model representations. First, a global planner is proposed to efficiently find feasible solutions based on a simplified DLO energy model, which considers the full system states and all constraints to plan more reliable paths. Then, a closed-loop manipulation scheme is proposed to compensate for the modeling errors and enhance the robustness and accuracy, which incorporates a model predictive controller that adjusts the robot motion in real time based on an adaptive DLO motion model. The key novelty is that our framework can efficiently solve the high-dimensional problem subject to multiple constraints and generalize to various DLOs without elaborate model identifications. Experiments demonstrate that our framework can accomplish considerably more complicated tasks than existing works, with significantly higher efficiency, generalizability, and reliability.

Contact-Aware Non-prehensile Robotic Manipulation for Object Retrieval in Cluttered Environments

Mar 07, 2023

Abstract: Non-prehensile manipulation methods usually use a simple end effector, e.g., a single rod, to manipulate the object. Compared to the grasping method, such an end effector is compact and flexible, and hence it can perform tasks in a constrained workspace. As a trade-off, it has relatively few degrees of freedom (DoFs), resulting in an under-actuation problem with complex constraints for planning and control. This paper proposes a new non-prehensile manipulation method for the task of object retrieval in cluttered environments, using a rod-like pusher. Specifically, a candidate trajectory in a cluttered environment is first generated with an improved Rapidly-Exploring Random Tree (RRT) planner. Then, a Model Predictive Control (MPC) scheme is applied to stabilize the slider's poses through necessary contact with obstacles. Different from existing methods, the proposed approach is contact-aware, which enables the combined effect of active removal of obstacles, avoidance behavior, and switching of the contact face for improved dexterity. Hence both the feasibility and efficiency of the task are greatly improved. The performance of the proposed method is validated in a planar object retrieval task, where the target object, surrounded by many fixed or movable obstacles, is manipulated and isolated. Both simulation and experimental results are presented.

Multi-Modal Interaction Control of Ultrasound Scanning Robots with Safe Human Guidance and Contact Recovery

Feb 11, 2023

Abstract: Ultrasound scanning robots enable the automatic imaging of a patient's internal organs by maintaining close contact between the ultrasound probe and the patient's body during a scanning procedure. Comprehensive, high-quality ultrasound scans are essential for providing the patient with an accurate diagnosis and effective treatment plan. An ultrasound scanning robot usually works in a doctor-robot co-existing environment, hence both efficiency and safety during the collaboration should be considered. In this paper, we propose a novel multi-modal control scheme for ultrasound scanning robots, in which three interaction modes are integrated into a single control input. Specifically, the scanning mode drives the robot to track a time-varying trajectory on the patient's body under the desired impedance model; the recovery mode allows the robot to actively recontact the body whenever physical contact between the ultrasound probe and the patient's body is lost; the human-guided mode renders the robot passive such that the doctor can safely intervene to manually reposition the probe. The integration of multiple modes allows the doctor to intervene safely at any time during the task and also maximizes the robot's autonomous scanning ability. The performance of the robot is validated on a collaborative scanning task of a carotid artery examination.

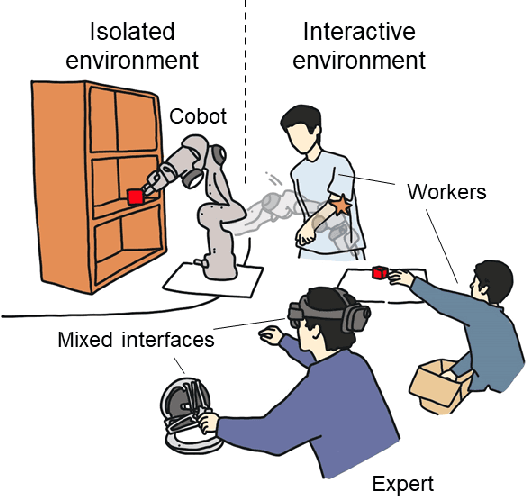

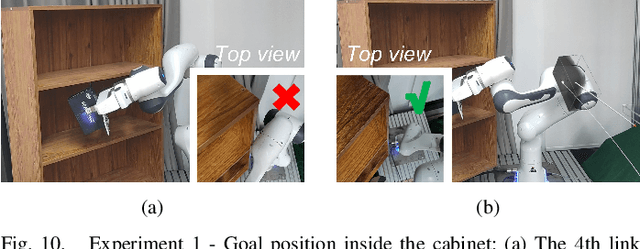

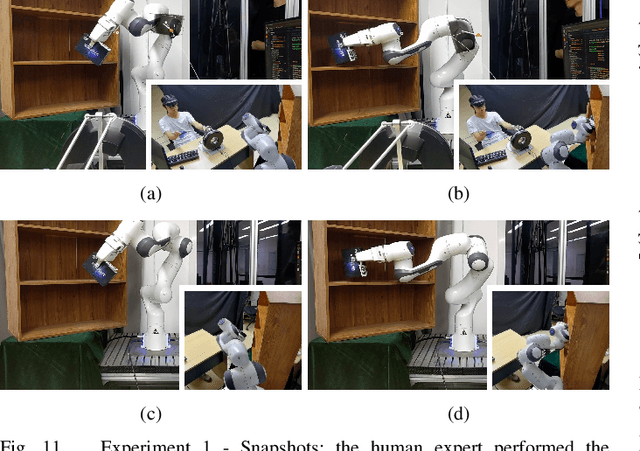

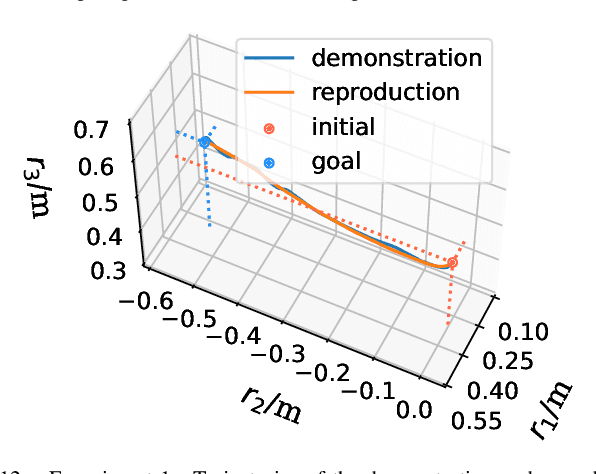

A Complementary Framework for Human-Robot Collaboration with a Mixed AR-Haptic Interface

Oct 12, 2022

Abstract: There is invariably a trade-off between safety and efficiency for collaborative robots (cobots) in human-robot collaborations. Robots that interact minimally with humans can work with high speed and accuracy but cannot adapt to new tasks or respond to unforeseen changes, whereas robots that work closely with humans can, but only by becoming passive to humans, meaning that their main tasks are suspended and their efficiency is compromised. Accordingly, this paper proposes a new complementary framework for human-robot collaboration that balances the safety of humans and the efficiency of robots. In this framework, the robot carries out given tasks using a vision-based adaptive controller, and the human expert collaborates with the robot in the null space. Such a decoupling drives the robot to deal with existing issues in task space (e.g., uncalibrated camera, limited field of view) and in null space (e.g., joint limits) by itself while allowing the expert to adjust the configuration of the robot body to respond to unforeseen changes (e.g., sudden invasion, change of environment) without affecting the robot's main task. Additionally, the robot can simultaneously learn the expert's demonstration in task space and null space beforehand with dynamic movement primitives (DMP). Therefore, an expert's knowledge and a robot's capability are both explored and complementary. Human demonstration and involvement are enabled via a mixed interaction interface, i.e., augmented reality (AR) and haptic devices. The stability of the closed-loop system is rigorously proved with Lyapunov methods. Experimental results in various scenarios are presented to illustrate the performance of the proposed method.