Yanhua Long

Bridging the gap: A comparative exploration of Speech-LLM and end-to-end architecture for multilingual conversational ASR

Jan 04, 2026

Abstract:The INTERSPEECH 2025 Challenge on Multilingual Conversational Speech Language Models (MLC-SLM) promotes multilingual conversational ASR with large language models (LLMs). Our previous SHNU-mASR system adopted a competitive parallel-speech-encoder architecture that integrated Whisper and mHuBERT with an LLM. However, it faced two challenges: simple feature concatenation may not fully exploit complementary information, and the performance gap between LLM-based ASR and end-to-end (E2E) encoder-decoder ASR remained unexplored. In this work, we present an enhanced LLM-based ASR framework that combines fine-tuned Whisper and mHuBERT encoders with an LLM to enrich speech representations. We first evaluate E2E Whisper models with LoRA and full fine-tuning on the MLC-SLM ASR task, and then propose cross-attention-based fusion mechanisms for the parallel-speech-encoder architecture. On the official evaluation set of the MLC-SLM Challenge, our system achieves a CER/WER of 10.69%, ranking on par with the top-ranked Track 1 systems, even though it uses only 1,500 hours of baseline training data compared with their large-scale training sets. Nonetheless, we find that our final LLM-based ASR still does not match the performance of a fine-tuned E2E Whisper model, providing valuable empirical guidance for future Speech-LLM design. Our code is publicly available at https://github.com/1535176727/MLC-SLM.
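
To make the contrast with simple feature concatenation concrete, the following is a minimal sketch of cross-attention between two encoder streams. It is not the system's implementation: the function name `cross_attention_fuse`, the single attention head, the absence of learned projections, and the residual add are all simplifying assumptions made for illustration, and both streams are assumed to share the same feature dimension.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention_fuse(queries, keys_values):
    """Single-head scaled dot-product cross-attention with a residual add.

    queries:     frames from one encoder, shape (T_q, D)
    keys_values: frames from the other encoder, shape (T_k, D),
                 used as both keys and values (no learned projections;
                 this is an assumption of the sketch)
    """
    dim = len(queries[0])
    scale = 1.0 / math.sqrt(dim)
    fused = []
    for q in queries:
        # Similarity of this query frame to every frame of the other stream.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys_values]
        weights = softmax(scores)
        # Attention-weighted summary of the other stream.
        context = [
            sum(w * v[d] for w, v in zip(weights, keys_values)) for d in range(dim)
        ]
        # Residual connection keeps the original query features.
        fused.append([qi + ci for qi, ci in zip(q, context)])
    return fused
```

Unlike concatenation, the two streams here need not have the same number of frames: each query frame pulls in a weighted summary of the other stream.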

A Language-Agnostic Hierarchical LoRA-MoE Architecture for CTC-based Multilingual ASR

Jan 02, 2026

Abstract:Large-scale multilingual ASR (mASR) models such as Whisper achieve strong performance but incur high computational and latency costs, limiting their deployment on resource-constrained edge devices. In this study, we propose a lightweight and language-agnostic multilingual ASR system based on a CTC architecture with domain adaptation. Specifically, we introduce a Language-agnostic Hierarchical LoRA-MoE (HLoRA) framework integrated into an mHuBERT-CTC model, enabling end-to-end decoding via LID-posterior-driven LoRA routing. The hierarchical design consists of a multilingual shared LoRA for learning language-invariant acoustic representations and language-specific LoRA experts for modeling language-dependent characteristics. The proposed routing mechanism removes the need for prior language identity information or explicit language labels during inference, achieving true language-agnostic decoding. Experiments on MSR-86K and the MLC-SLM 2025 Challenge datasets demonstrate that HLoRA achieves competitive performance with state-of-the-art two-stage inference methods using only single-pass decoding, significantly improving decoding efficiency for low-resource mASR applications.
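
One way to picture LID-posterior-driven routing is as a soft mixture: a shared LoRA update is always applied, and each language-specific LoRA update is scaled by the posterior probability of its language. The sketch below illustrates that idea for a single linear layer. The soft weighting, the function names, and the layer placement are assumptions for illustration; the abstract does not specify these details.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def lora_delta(a, b, x):
    """Low-rank update B(Ax); a has shape (r, d_in), b has shape (d_out, r)."""
    return matvec(b, matvec(a, x))


def hlora_forward(x, base_w, shared, experts, lid_posterior):
    """Frozen base layer + shared LoRA + posterior-weighted expert LoRAs.

    shared:        (A, B) pair for the multilingual shared LoRA
    experts:       list of (A, B) pairs, one per language
    lid_posterior: language-ID probabilities, one per expert
                   (hypothetical input; assumed to come from an LID head)
    """
    y = matvec(base_w, x)
    # The shared LoRA is applied regardless of language.
    shared_update = lora_delta(shared[0], shared[1], x)
    y = [yi + di for yi, di in zip(y, shared_update)]
    # Each expert contributes in proportion to its language posterior,
    # so no explicit language label is needed at inference time.
    for (a, b), p in zip(experts, lid_posterior):
        update = lora_delta(a, b, x)
        y = [yi + p * di for yi, di in zip(y, update)]
    return y
```

With a one-hot posterior this reduces to picking a single language expert; with a flat posterior it averages the experts.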

Lightweight speech enhancement guided target speech extraction in noisy multi-speaker scenarios

Aug 27, 2025

Abstract:Target speech extraction (TSE) has achieved strong performance in relatively simple conditions such as one-speaker-plus-noise and two-speaker mixtures, but its performance remains unsatisfactory in noisy multi-speaker scenarios. To address this issue, we introduce a lightweight speech enhancement model, GTCRN, to better guide TSE in noisy environments. Building on our previous competitive speaker embedding/encoder-free framework, SEF-PNet, we propose two extensions: LGTSE and D-LGTSE. LGTSE incorporates noise-agnostic enrollment guidance by denoising the input noisy speech before context interaction with enrollment speech, thereby reducing noise interference. D-LGTSE further improves system robustness against speech distortion by leveraging denoised speech as an additional noisy input during training, expanding the dynamic range of noisy conditions and enabling the model to directly learn from distorted signals. Furthermore, we propose a two-stage training strategy, first with GTCRN enhancement-guided pre-training and then joint fine-tuning, to fully exploit model potential. Experiments on the Libri2Mix dataset demonstrate significant improvements of 0.89 dB in SI-SDR, 0.16 in PESQ, and 1.97% in STOI, validating the effectiveness of our approach. Our code is publicly available at https://github.com/isHuangZiling/D-LGTSE.
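
For readers unfamiliar with the first reported metric, scale-invariant signal-to-distortion ratio (SI-SDR) projects the estimate onto the reference and compares the energy of that projection with the energy of the residual. The sketch below follows the standard definition; it assumes equal-length, zero-mean signals and does not guard against a perfect estimate (zero residual energy). It is not taken from the paper's evaluation code.

```python
import math


def si_sdr(estimate, reference):
    """Scale-invariant SDR in dB for two equal-length signals."""
    dot = sum(e * r for e, r in zip(estimate, reference))
    ref_energy = sum(r * r for r in reference)
    # Optimal scaling of the reference that best explains the estimate.
    alpha = dot / ref_energy
    target = [alpha * r for r in reference]
    # Whatever the scaled reference cannot explain counts as distortion.
    residual = [e - t for e, t in zip(estimate, target)]
    target_energy = sum(t * t for t in target)
    residual_energy = sum(n * n for n in residual)
    return 10.0 * math.log10(target_energy / residual_energy)
```

Because of the projection step, rescaling the estimate by any nonzero constant leaves the score unchanged, which is why an improvement is reported in dB rather than as an amplitude error.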

Unified Architecture and Unsupervised Speech Disentanglement for Speaker Embedding-Free Enrollment in Personalized Speech Enhancement

May 18, 2025

Abstract:Conventional speech enhancement (SE) aims to improve speech perception and intelligibility by suppressing noise without requiring enrollment speech as reference, whereas personalized SE (PSE) addresses the cocktail party problem by extracting a target speaker's speech using enrollment speech. While these two tasks tackle different yet complementary challenges in speech signal processing, they often share similar model architectures, with PSE incorporating an additional branch to process enrollment speech. This suggests developing a unified model capable of efficiently handling both SE and PSE tasks, thereby simplifying deployment while maintaining high performance. However, PSE performance is sensitive to variations in enrollment speech, such as emotional tone, which limits robustness in real-world applications. To address these challenges, we propose two novel models, USEF-PNet and DSEF-PNet, both extending our previous SEF-PNet framework. USEF-PNet introduces a unified architecture for processing enrollment speech, integrating SE and PSE into a single framework to enhance performance and streamline deployment. Meanwhile, DSEF-PNet incorporates an unsupervised speech disentanglement approach by pairing a mixture speech with two different enrollment utterances and enforcing consistency in the extracted target speech. This strategy effectively isolates high-quality speaker identity information from enrollment speech, reducing interference from factors such as emotion and content, thereby improving PSE robustness. Additionally, we explore a long-short enrollment pairing (LSEP) strategy to examine the impact of enrollment speech duration during both training and evaluation. Extensive experiments on the Libri2Mix and VoiceBank DEMAND datasets demonstrate that the proposed USEF-PNet and DSEF-PNet both achieve substantial performance improvements, with random enrollment duration performing slightly better.
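
The consistency idea in DSEF-PNet can be illustrated with a simple penalty: the same mixture is processed twice, each time with a different enrollment utterance from the same speaker, and the two extracted signals are pushed to agree. The sketch below uses mean squared error as that penalty. The choice of MSE and the function name are assumptions for illustration; the abstract does not state which consistency loss is used.

```python
def consistency_loss(extracted_a, extracted_b):
    """Mean squared difference between two extractions of the same mixture.

    extracted_a, extracted_b: target-speech estimates obtained with two
    different enrollment utterances (hypothetical model outputs).
    """
    if len(extracted_a) != len(extracted_b):
        raise ValueError("both extractions must have the same length")
    squared = [(a - b) ** 2 for a, b in zip(extracted_a, extracted_b)]
    return sum(squared) / len(squared)
```

A loss of zero means the extraction did not depend on which enrollment utterance was used, which is the behaviour the disentanglement objective aims for.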

Exploring the Potential of SSL Models for Sound Event Detection

May 17, 2025

Abstract:Self-supervised learning (SSL) models offer powerful representations for sound event detection (SED), yet their synergistic potential remains underexplored. This study systematically evaluates state-of-the-art SSL models to guide optimal model selection and integration for SED. We propose a framework that combines heterogeneous SSL representations (e.g., BEATs, HuBERT, WavLM) through three fusion strategies: individual SSL embedding integration, dual-modal fusion, and full aggregation. Experiments on the DCASE 2023 Task 4 Challenge reveal that dual-modal fusion (e.g., CRNN+BEATs+WavLM) achieves complementary performance gains, while CRNN+BEATs alone delivers the best results among individual SSL models. We further introduce normalized sound event bounding boxes (nSEBBs), an adaptive post-processing method that dynamically adjusts event boundary predictions, improving PSDS1 by up to 4% for standalone SSL models. These findings highlight the compatibility and complementarity of SSL architectures, providing guidance for task-specific fusion and robust SED system design.

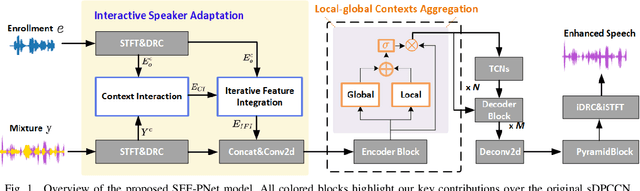

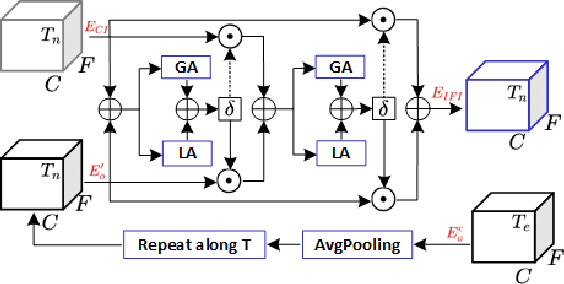

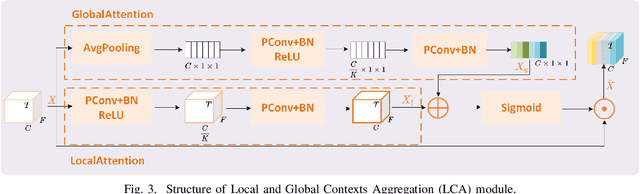

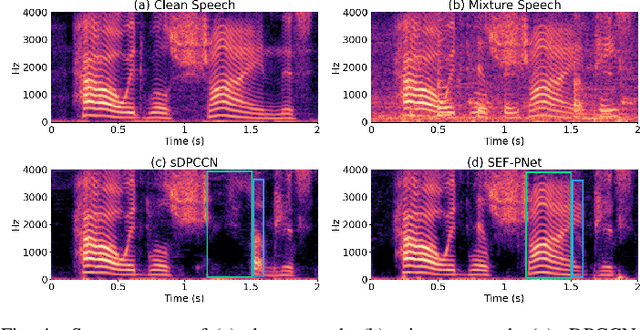

SEF-PNet: Speaker Encoder-Free Personalized Speech Enhancement with Local and Global Contexts Aggregation

Jan 20, 2025

Abstract:Personalized speech enhancement (PSE) methods typically rely on pre-trained speaker verification models or self-designed speaker encoders to extract target speaker clues, guiding the PSE model in isolating the desired speech. However, these approaches suffer from significant model complexity and often underutilize enrollment speaker information, limiting the potential performance of the PSE model. To address these limitations, we propose a novel Speaker Encoder-Free PSE network, termed SEF-PNet, which fully exploits the information present in both the enrollment speech and noisy mixtures. SEF-PNet incorporates two key innovations: Interactive Speaker Adaptation (ISA) and Local-Global Context Aggregation (LCA). ISA dynamically modulates the interactions between enrollment and noisy signals to enhance the speaker adaptation, while LCA employs advanced channel attention within the PSE encoder to effectively integrate local and global contextual information, thus improving feature learning. Experiments on the Libri2Mix dataset demonstrate that SEF-PNet significantly outperforms baseline models, achieving state-of-the-art PSE performance.

ICSD: An Open-source Dataset for Infant Cry and Snoring Detection

Aug 20, 2024

Abstract:The detection and analysis of infant cry and snoring events are crucial tasks within the field of audio signal processing. While existing datasets for general sound event detection are plentiful, they often fall short in providing sufficient, strongly labeled data specific to infant cries and snoring. To provide a benchmark dataset and thus foster the research of infant cry and snoring detection, this paper introduces the Infant Cry and Snoring Detection (ICSD) dataset, a novel, publicly available dataset specially designed for ICSD tasks. The ICSD comprises three types of subsets: a real strongly labeled subset with event-based labels annotated manually, a weakly labeled subset with only clip-level event annotations, and a synthetic subset generated and labeled with strong annotations. This paper provides a detailed description of the ICSD creation process, including the challenges encountered and the solutions adopted. We offer a comprehensive characterization of the dataset, discussing its limitations and key factors for ICSD usage. Additionally, we conduct extensive experiments on the ICSD dataset to establish baseline systems and offer insights into the main factors when using this dataset for ICSD research. Our goal is to develop a dataset that will be widely adopted by the community as a new open benchmark for future ICSD research.

Autoencoder with Group-based Decoder and Multi-task Optimization for Anomalous Sound Detection

Nov 15, 2023

Abstract:In industry, machine anomalous sound detection (ASD) is in great demand. However, collecting enough abnormal samples is difficult due to the high cost, which has driven the rapid development of unsupervised ASD algorithms. Autoencoder (AE) based methods have been widely used for unsupervised ASD, but suffer from problems including 'shortcut', poor anti-noise ability and sub-optimal quality of features. To address these challenges, we propose a new AE-based framework termed AEGM. Specifically, we first insert an auxiliary classifier into AE to enhance ASD in a multi-task learning manner. Then, we design a group-based decoder structure, accompanied by an adaptive loss function, to endow the model with domain-specific knowledge. Results on the DCASE 2021 Task 2 development set show that our methods achieve relative improvements of 13.11% and 15.20% in average AUC over the official AE and MobileNetV2 baselines, respectively, across test sets of seven machines.

UNISOUND System for VoxCeleb Speaker Recognition Challenge 2023

Aug 24, 2023

Abstract:This report describes the UNISOUND submission for Track 1 and Track 2 of VoxCeleb Speaker Recognition Challenge 2023 (VoxSRC 2023). We submit the same system on Track 1 and Track 2, which is trained with only VoxCeleb2-dev. Large-scale ResNet and RepVGG architectures are developed for the challenge. We propose a consistency-aware score calibration method, which leverages the stability of audio voiceprints in similarity score by a Consistency Measure Factor (CMF). CMF brings a huge performance boost in this challenge. Our final system is a fusion of six models and achieves first place in Track 1 and second place in Track 2 of VoxSRC 2023. The minDCF of our submission is 0.0855 and the EER is 1.5880%.

Multi-pass Training and Cross-information Fusion for Low-resource End-to-end Accented Speech Recognition

Jun 20, 2023

Abstract:Low-resource accented speech recognition is one of the important challenges faced by current ASR technology in practical applications. In this study, we propose a Conformer-based architecture, called Aformer, to leverage the acoustic information from both large non-accented and limited accented training data. Specifically, a general encoder and an accent encoder are designed in the Aformer to extract complementary acoustic information. Moreover, we propose to train the Aformer in a multi-pass manner, and investigate three cross-information fusion methods to effectively combine the information from both general and accent encoders. All experiments are conducted on both the accented English and Mandarin ASR tasks. Results show that our proposed methods outperform the strong Conformer baseline by a relative word/character error rate reduction of 10.2% to 24.5% on six in-domain and out-of-domain accented test sets.
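
A relative error rate reduction expresses the gain as a fraction of the baseline error rather than as an absolute difference. The sketch below shows the arithmetic; the error rates in the usage note are hypothetical values chosen only to illustrate the formula and are not results from the paper.

```python
def relative_error_reduction(baseline_error, system_error):
    """Relative reduction in error rate, in percent of the baseline error."""
    if baseline_error <= 0:
        raise ValueError("baseline error rate must be positive")
    return (baseline_error - system_error) / baseline_error * 100.0
```

For instance, a hypothetical drop from a 10.0% baseline error rate to 8.98% is an absolute reduction of 1.02 points but a relative reduction of about 10.2%.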
