Yachong Guo

Transformer representation learning is necessary for dynamic multi-modal physiological data on small-cohort patients

Apr 05, 2025

Abstract: Postoperative delirium (POD), a severe neuropsychiatric complication affecting nearly 50% of high-risk surgical patients, is defined as an acute disorder of attention and cognition. It remains significantly underdiagnosed in intensive care units (ICUs) due to subjective monitoring methods. Early and accurate diagnosis of POD is critical and achievable. Here, we propose a POD prediction framework comprising a Transformer representation model followed by traditional machine learning algorithms. Our approach utilizes multi-modal physiological data, including amplitude-integrated electroencephalography (aEEG), vital signs, electrocardiographic monitor data, and hemodynamic parameters. We curated the first multi-modal POD dataset encompassing two patient types and evaluated various Transformer architectures for representation learning. Empirical results indicate consistent improvements in sensitivity and Youden index in patient TYPE I using Transformer representations, particularly our fusion adaptation of Pathformer. By enabling effective delirium diagnosis from postoperative day 1 to 3, our extensive experimental findings emphasize the potential of multi-modal physiological data and highlight the necessity of representation learning via multi-modal Transformer architectures in clinical diagnosis.

Automated Radiological Report Generation For Chest X-Rays With Weakly-Supervised End-to-End Deep Learning

Jun 18, 2020

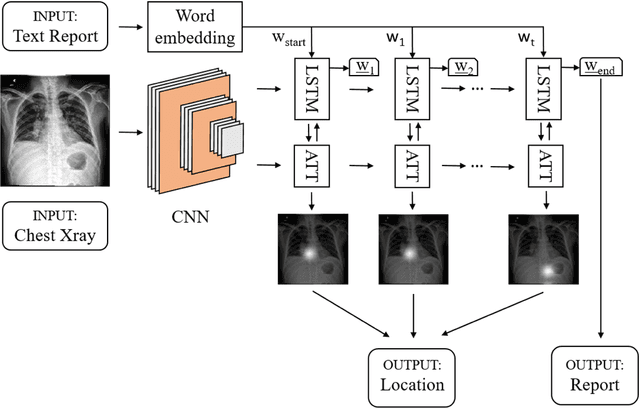

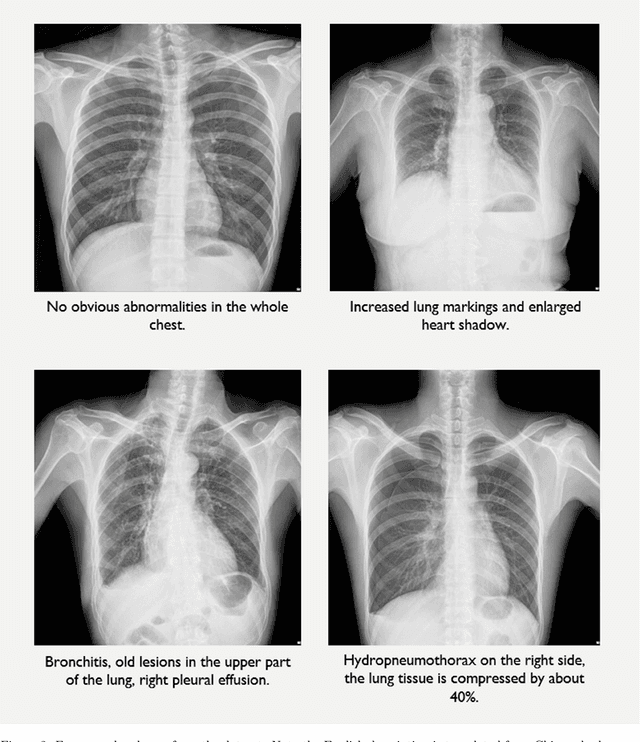

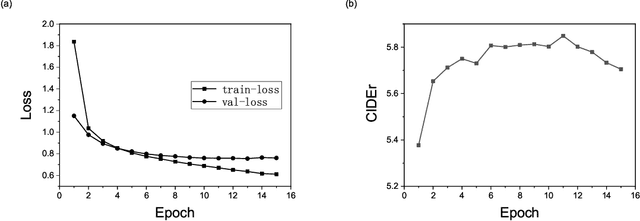

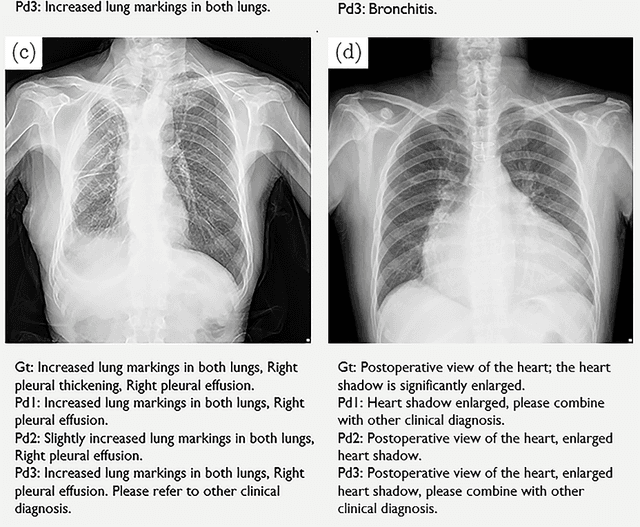

Abstract: The chest X-ray (CXR) is one of the most common clinical exams used to diagnose thoracic diseases and abnormalities. The volume of CXR scans generated daily in hospitals is huge. Therefore, an automated diagnosis system able to save the effort of doctors is of great value. At present, applications of artificial intelligence in CXR diagnosis usually use pattern recognition to classify the scans. However, such methods rely on labeled databases, which are costly and usually have large error rates. In this work, we built a database containing more than 12,000 CXR scans and radiological reports, and developed a model based on a deep convolutional neural network and a recurrent network with an attention mechanism. The model learns features directly from the CXR scans and the associated raw radiological reports; no additional labeling of the scans is needed. The model provides automated recognition of given scans and generation of reports. The quality of the generated reports was evaluated both with CIDEr scores and by radiologists. The CIDEr scores are found to be around 5.8 on average for the testing dataset. Further blind evaluation suggested performance comparable to that of human radiologists.
