Xiaoman Wang

Vision-DeepResearch Benchmark: Rethinking Visual and Textual Search for Multimodal Large Language Models

Feb 02, 2026

Abstract:Multimodal Large Language Models (MLLMs) have advanced VQA and now support Vision-DeepResearch systems that use search engines for complex visual-textual fact-finding. However, evaluating these visual and textual search abilities is still difficult, and existing benchmarks have two major limitations. First, existing benchmarks are not visual search-centric: answers that should require visual search are often leaked through cross-textual cues in the text questions or can be inferred from the prior world knowledge in current MLLMs. Second, the evaluation scenarios are overly idealized: on the image-search side, the required information can often be obtained via near-exact matching against the full image, while the text-search side is overly direct and insufficiently challenging. To address these issues, we construct the Vision-DeepResearch benchmark (VDR-Bench) comprising 2,000 VQA instances. All questions are created via a careful, multi-stage curation pipeline and rigorous expert review, designed to assess the behavior of Vision-DeepResearch systems under realistic conditions. Moreover, to address the insufficient visual retrieval capabilities of current MLLMs, we propose a simple multi-round cropped-search workflow. This strategy is shown to effectively improve model performance in realistic visual retrieval scenarios. Overall, our results provide practical guidance for the design of future multimodal deep-research systems. The code will be released at https://github.com/Osilly/Vision-DeepResearch.

DriveVLA-W0: World Models Amplify Data Scaling Law in Autonomous Driving

Oct 14, 2025

Abstract:Scaling Vision-Language-Action (VLA) models on large-scale data offers a promising path to achieving a more generalized driving intelligence. However, VLA models are limited by a "supervision deficit": the vast model capacity is supervised by sparse, low-dimensional actions, leaving much of their representational power underutilized. To remedy this, we propose DriveVLA-W0, a training paradigm that employs world modeling to predict future images. This task generates a dense, self-supervised signal that compels the model to learn the underlying dynamics of the driving environment. We showcase the paradigm's versatility by instantiating it for two dominant VLA archetypes: an autoregressive world model for VLAs that use discrete visual tokens, and a diffusion world model for those operating on continuous visual features. Building on the rich representations learned from world modeling, we introduce a lightweight action expert to address the inference latency for real-time deployment. Extensive experiments on the NAVSIM v1/v2 benchmark and a 680x larger in-house dataset demonstrate that DriveVLA-W0 significantly outperforms BEV and VLA baselines. Crucially, it amplifies the data scaling law, showing that performance gains accelerate as the training dataset size increases.

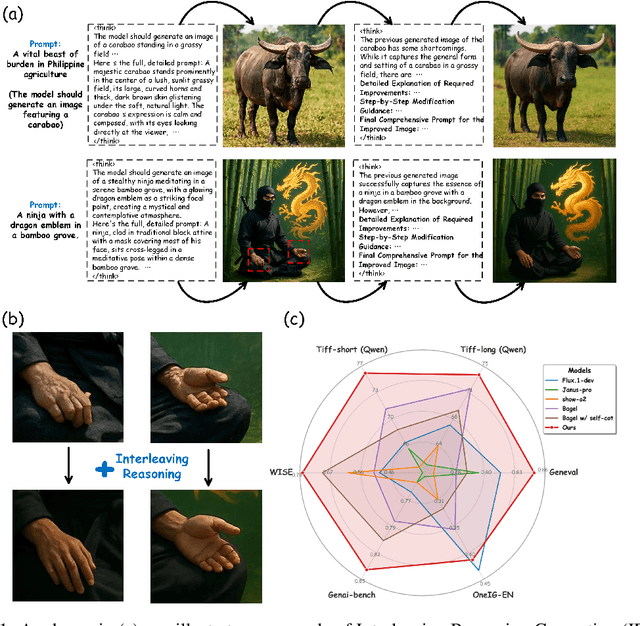

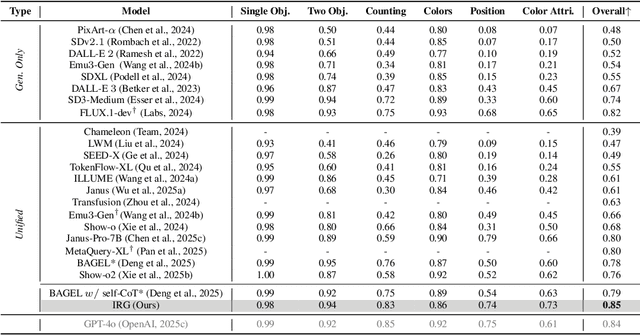

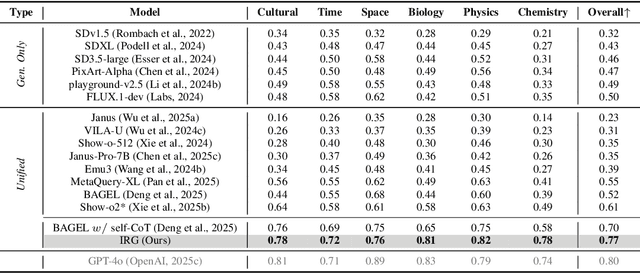

Interleaving Reasoning for Better Text-to-Image Generation

Sep 09, 2025

Abstract:Unified multimodal understanding and generation models have recently achieved significant improvements in image generation capability, yet a large gap remains in instruction following and detail preservation compared to systems that tightly couple comprehension with generation, such as GPT-4o. Motivated by recent advances in interleaving reasoning, we explore whether such reasoning can further improve Text-to-Image (T2I) generation. We introduce Interleaving Reasoning Generation (IRG), a framework that alternates between text-based thinking and image synthesis: the model first produces text-based thinking to guide an initial image, then reflects on the result to refine fine-grained details, visual quality, and aesthetics while preserving semantics. To train IRG effectively, we propose Interleaving Reasoning Generation Learning (IRGL), which targets two sub-goals: (1) strengthening the initial think-and-generate stage to establish core content and base quality, and (2) enabling high-quality textual reflection and faithful implementation of those refinements in a subsequent image. We curate IRGL-300K, a dataset organized into six decomposed learning modes that jointly cover learning text-based thinking and full thinking-image trajectories. Starting from a unified foundation model that natively emits interleaved text-image outputs, our two-stage training first builds robust thinking and reflection, then efficiently tunes the IRG pipeline on the full thinking-image trajectory data. Extensive experiments show SoTA performance, yielding absolute gains of 5-10 points on GenEval, WISE, TIIF, GenAI-Bench, and OneIG-EN, alongside substantial improvements in visual quality and fine-grained fidelity. The code, model weights and datasets will be released at: https://github.com/Osilly/Interleaving-Reasoning-Generation

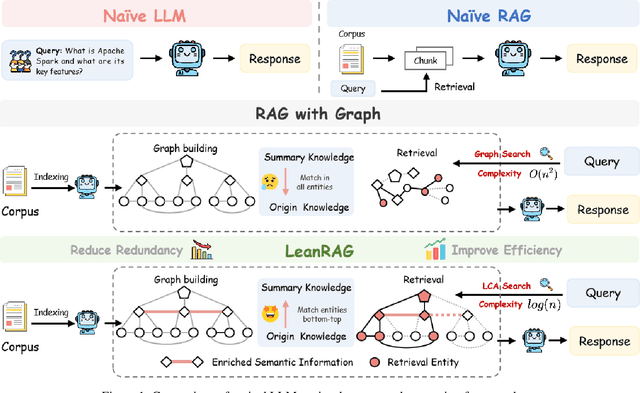

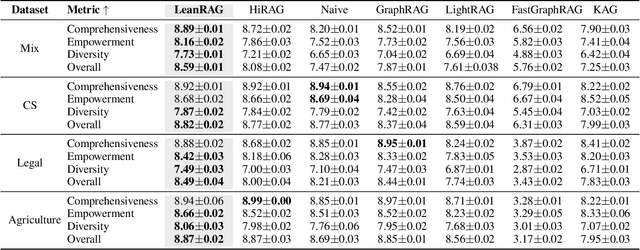

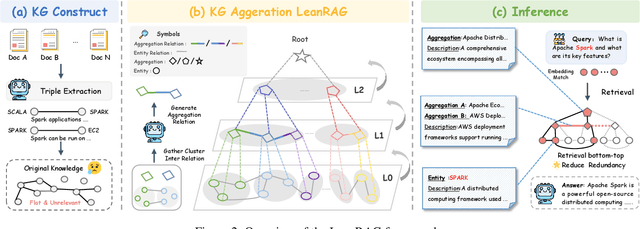

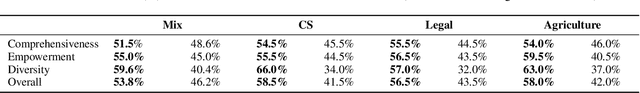

LeanRAG: Knowledge-Graph-Based Generation with Semantic Aggregation and Hierarchical Retrieval

Aug 14, 2025

Abstract:Retrieval-Augmented Generation (RAG) plays a crucial role in grounding Large Language Models by leveraging external knowledge, but its effectiveness is often compromised by the retrieval of contextually flawed or incomplete information. To address this, knowledge graph-based RAG methods have evolved towards hierarchical structures, organizing knowledge into multi-level summaries. However, these approaches still suffer from two critical, unaddressed challenges: high-level conceptual summaries exist as disconnected "semantic islands", lacking the explicit relations needed for cross-community reasoning; and the retrieval process itself remains structurally unaware, often degenerating into an inefficient flat search that fails to exploit the graph's rich topology. To overcome these limitations, we introduce LeanRAG, a framework that features a deeply collaborative design combining knowledge aggregation and retrieval strategies. LeanRAG first employs a novel semantic aggregation algorithm that forms entity clusters and constructs new explicit relations among aggregation-level summaries, creating a fully navigable semantic network. Then, a bottom-up, structure-guided retrieval strategy anchors queries to the most relevant fine-grained entities and systematically traverses the graph's semantic pathways to gather concise yet contextually comprehensive evidence sets. LeanRAG mitigates the substantial overhead associated with path retrieval on graphs and minimizes redundant information retrieval. Extensive experiments on four challenging QA benchmarks from different domains demonstrate that LeanRAG significantly outperforms existing methods in response quality while reducing retrieval redundancy by 46%. Code is available at: https://github.com/RaZzzyz/LeanRAG

Exploring the Correlation between Human and Machine Evaluation of Simultaneous Speech Translation

Jun 14, 2024

Abstract:Assessing the performance of interpreting services is a complex task, given the nuanced nature of spoken language translation, the strategies that interpreters apply, and the diverse expectations of users. The complexity of this task becomes even more pronounced when automated evaluation methods are applied. This is particularly true because interpreted texts exhibit less linearity between the source and target languages due to the strategies employed by the interpreter. This study aims to assess the reliability of automatic metrics in evaluating simultaneous interpretations by analyzing their correlation with human evaluations. We focus on a particular feature of interpretation quality, namely translation accuracy or faithfulness. As a benchmark, we use human assessments performed by language experts and evaluate how well sentence embeddings and Large Language Models correlate with them. We quantify semantic similarity between the source and translated texts without relying on a reference translation. The results suggest that GPT models, particularly GPT-3.5 with direct prompting, demonstrate the strongest correlation with human judgment in terms of semantic similarity between source and target texts, even when evaluating short textual segments. Additionally, the study reveals that the size of the context window has a notable impact on this correlation.
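The reference-free comparison described in this abstract can be illustrated with a minimal sketch: score each source/target pair by the cosine similarity of its sentence embeddings, then correlate those scores with human ratings. The abstract does not state which correlation coefficient or embedding model the study uses, so the Pearson coefficient and the function names below are assumptions chosen for illustration only.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def pearson(xs, ys):
    """Pearson correlation between two equal-length score lists.

    Assumption: Pearson is used here only as an example; the study
    may rely on a different coefficient.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (std_x * std_y)


def metric_human_correlation(source_embs, target_embs, human_scores):
    """Correlate per-segment embedding similarity with human ratings."""
    machine_scores = [
        cosine_similarity(s, t) for s, t in zip(source_embs, target_embs)
    ]
    return pearson(machine_scores, human_scores)
```

In practice the embeddings would come from a multilingual sentence encoder and the human scores from expert annotation; both are inputs to this sketch rather than something it computes.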

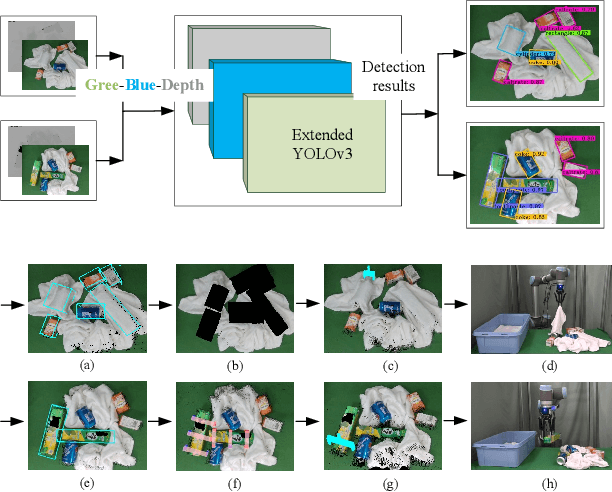

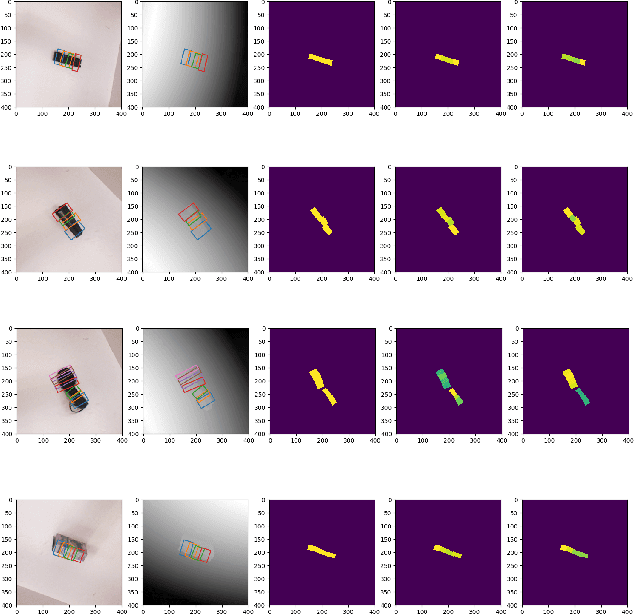

Object grasping planning for the situation when soft and rigid objects are mixed together

Sep 20, 2019

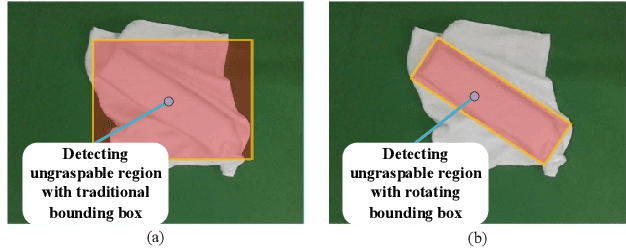

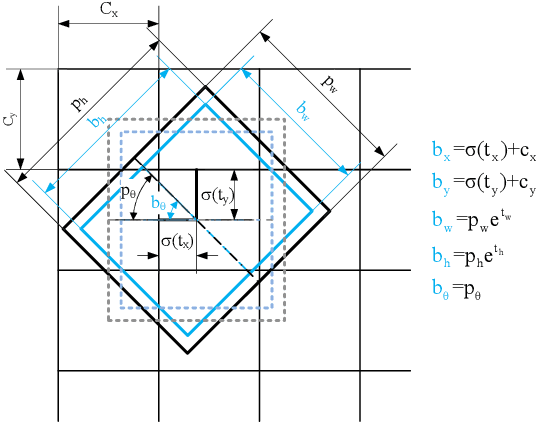

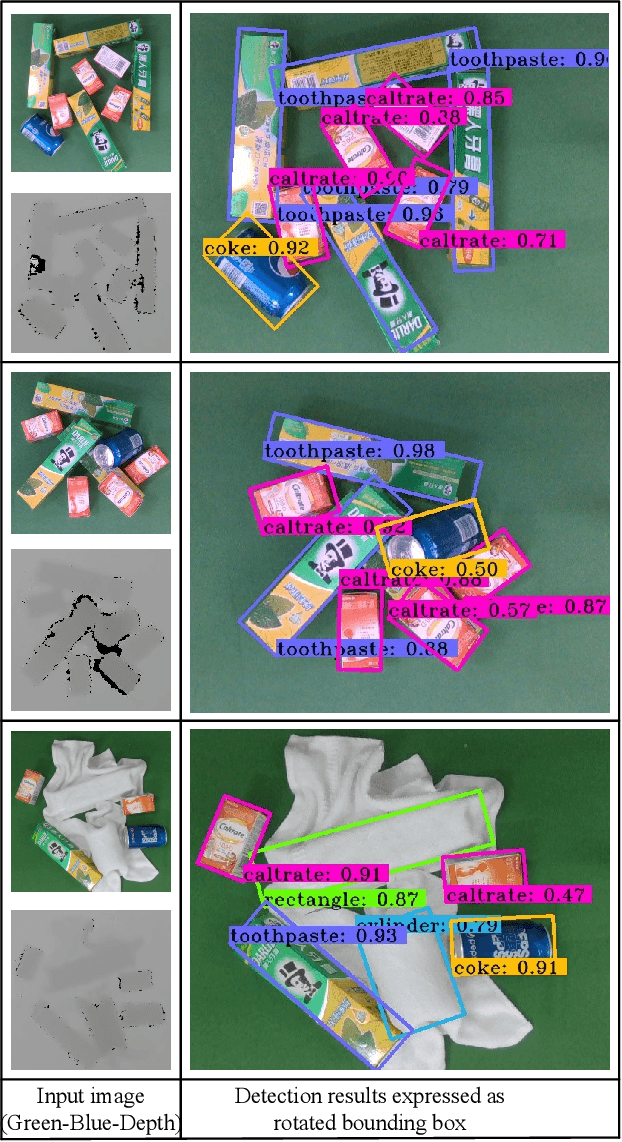

Abstract:In this paper, we propose an object detection method that outputs rotated bounding boxes to solve the grasping challenge in scenes where rigid objects and soft objects are mixed together. Compared with traditional detection methods, this method can output the angle information of rotated objects and thus can guarantee that each rotated bounding box contains a single instance. This technology is especially useful for piles of objects with different orientations. In our method, when uncategorized objects with specific geometric shapes (rectangle or cylinder) are detected, the program concludes that some rigid objects are covered by towels. If no covered objects are detected, grasp planning is based on the 3D point cloud obtained from the mapping between the 2D object detection result and its corresponding 3D point cloud. Based on the information provided by the 3D bounding box covering the object, a grasping strategy for multiple cluttered rigid objects and a collision avoidance strategy are proposed. The proposed method is verified by an experiment in which rigid objects and towels are mixed together.
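To make the rotated-bounding-box representation concrete, here is a minimal sketch that converts a box given as center, size, and angle into its four corner points. The `(cx, cy, w, h, angle)` parameterization and the function name are assumptions for illustration; the abstract does not specify how the paper encodes its boxes.

```python
import math


def rotated_box_corners(cx, cy, w, h, angle_deg):
    """Return the four corners of a rotated box as (x, y) tuples.

    Assumed convention: the box is centered at (cx, cy), has width w
    and height h before rotation, and is rotated counter-clockwise by
    angle_deg in a standard x-right, y-up frame.
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Corner offsets of the axis-aligned box, relative to its center.
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Rotate each offset, then translate by the center.
    return [
        (cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t)
        for dx, dy in offsets
    ]
```

With an angle of zero this reduces to an ordinary axis-aligned box, which is why a rotated box can fit a tilted object more tightly than an axis-aligned one.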

Assembly of randomly placed parts realized by using only one robot arm with a general parallel-jaw gripper

Sep 19, 2019

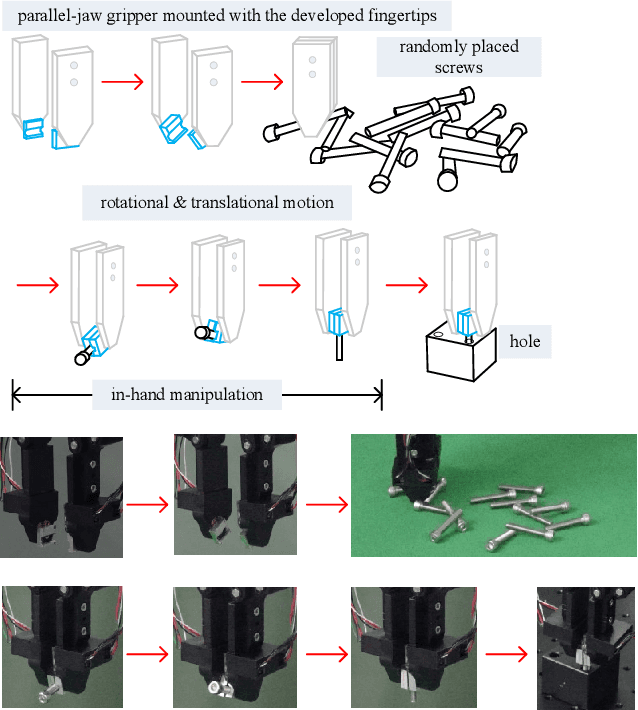

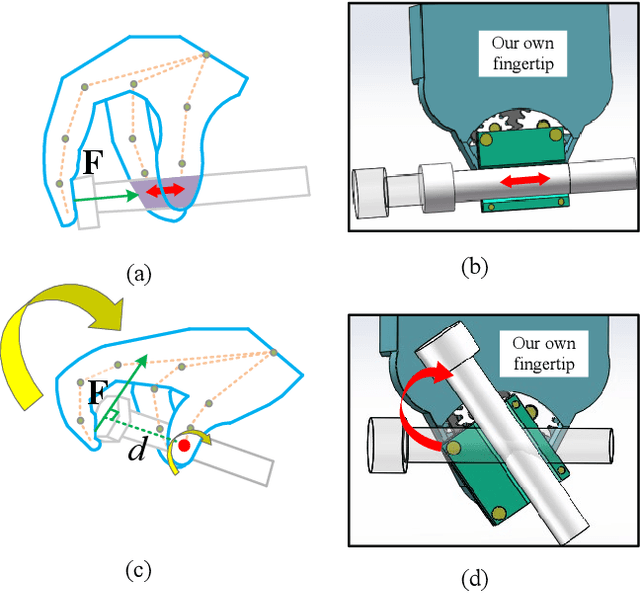

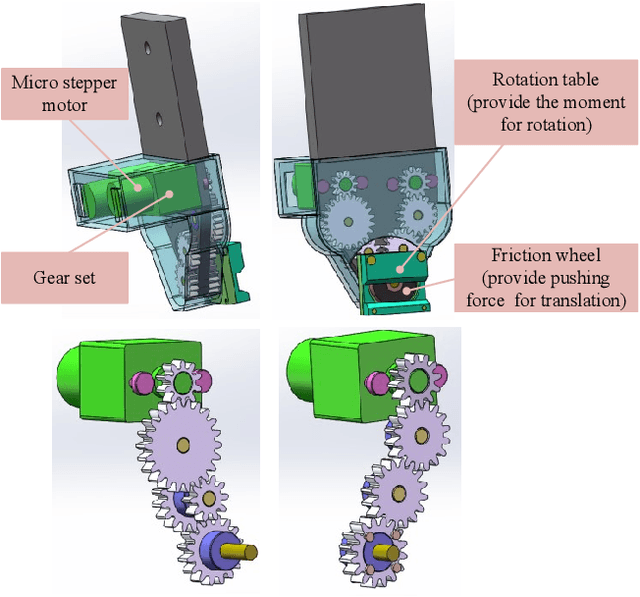

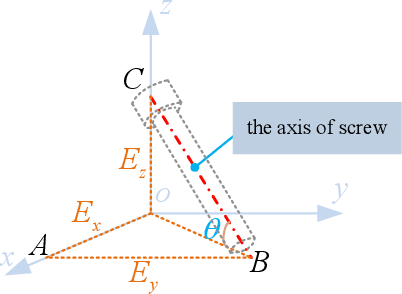

Abstract:In industrial assembly lines, parts feeding machines are widely employed as the prologue of the whole procedure. They sort parts randomly placed in bins into a specified pose. With the help of parts feeding machines, the subsequent assembly processes performed by a robot arm can always start from the same condition. It is therefore desirable to integrate the functions of the parts feeding machine and robotic assembly within one robot arm. This scheme can provide great flexibility and can also help reduce cost. The difficulty of this scheme lies in the fact that, in the part feeding phase, the pose of the part after grasping may not be suitable for the subsequent assembly. Sometimes a stable grasp cannot even be guaranteed. In this paper, we propose a method to integrate parts feeding and assembly within one robot arm. This proposal utilizes a specially designed gripper tip mounted on the jaws of a two-fingered gripper. With the modified gripper, in-hand manipulation of the grasped object is realized, which ensures control of the orientation and offset position of the grasped object. The proposal is verified by a simulated assembly in which a robot arm completes an assembly process that includes picking parts from a bin and a subsequent peg-in-hole assembly.

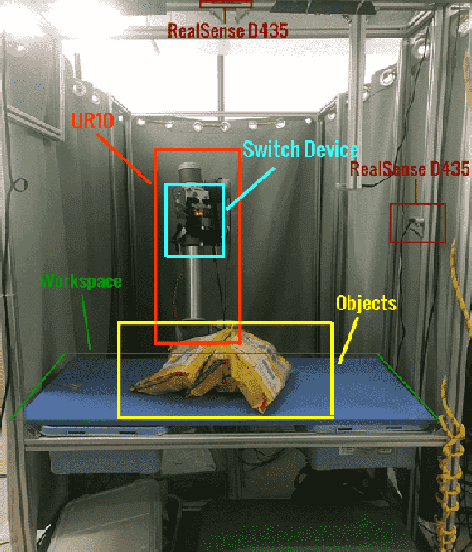

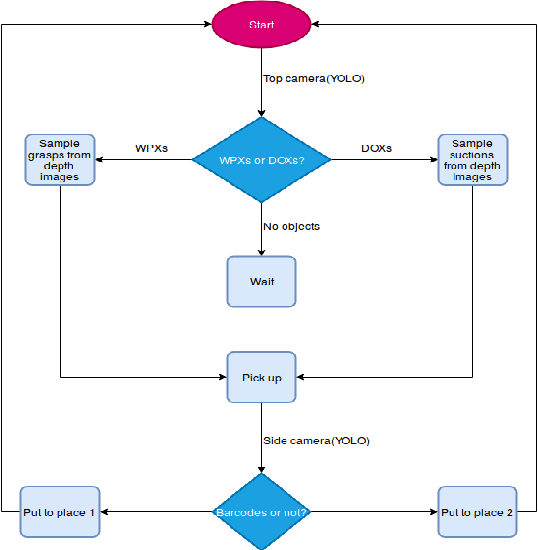

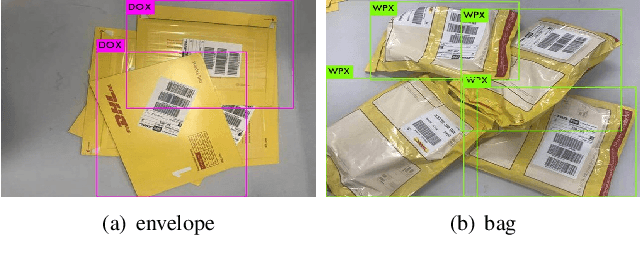

Vision Based Picking System for Automatic Express Package Dispatching

Apr 09, 2019

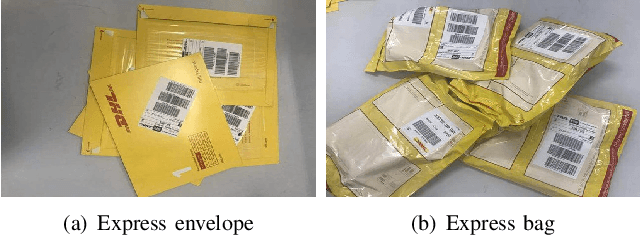

Abstract:This paper presents a vision-based robotic system to handle the picking problem involved in automatic express package dispatching. By utilizing two RealSense RGB-D cameras and one UR10 industrial robot, the package dispatching task, which is usually done by humans, can be completed automatically. In order to determine grasp points for overlapped deformable objects, we improved the sampling algorithm proposed by the group in Berkeley to directly generate grasp candidates from depth images. For package recognition, the deep network framework YOLO is integrated. We also designed a multi-modal robot hand composed of a two-fingered gripper and a vacuum suction cup to deal with different kinds of packages. All the technologies have been integrated in a work cell that simulates the practical conditions of an express package dispatching scenario. The proposed system is verified by experiments conducted on two typical express items.

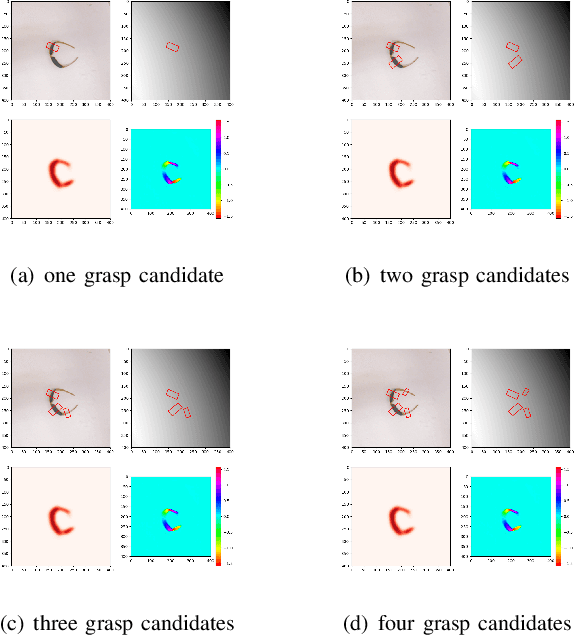

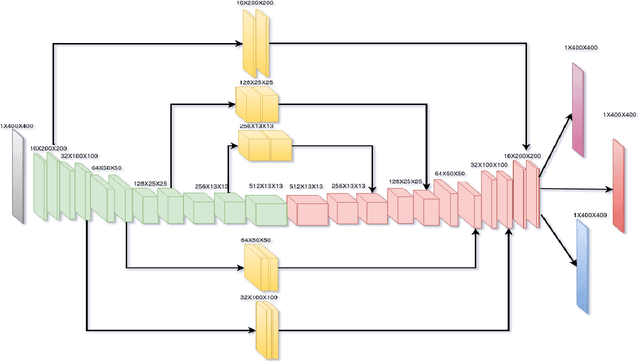

Efficient Fully Convolution Neural Network for Generating Pixel Wise Robotic Grasps With High Resolution Images

Feb 24, 2019

Abstract:This paper presents an efficient neural network model to generate robotic grasps from high-resolution images. The proposed model uses a fully convolutional neural network to generate robotic grasps for each pixel using 400 × 400 high-resolution RGB-D images. It first down-samples the images to extract features, then up-samples those features to the original size of the input and combines local and global features from different feature maps. Compared to other regression or classification methods for detecting robotic grasps, our method is closer to segmentation methods, which solve the problem in a pixel-wise manner. We use the Cornell Grasp Dataset to train and evaluate the model and achieve high accuracy, about 94.42% image-wise and 91.02% object-wise, with a fast prediction time of about 8 ms. We also demonstrate that, without training on a multiple-object dataset, our model can directly output robotic grasp candidates for different objects because of the pixel-wise implementation.
