Wojciech Samek

X-SYS: A Reference Architecture for Interactive Explanation Systems

Feb 13, 2026

Abstract: The explainable AI (XAI) research community has proposed numerous technical methods, yet deploying explainability as systems remains challenging: interactive explanation systems require both suitable algorithms and system capabilities that maintain explanation usability across repeated queries, evolving models and data, and governance constraints. We argue that operationalizing XAI requires treating explainability as an information systems problem where user interaction demands induce specific system requirements. We introduce X-SYS, a reference architecture for interactive explanation systems that guides (X)AI researchers, developers and practitioners in connecting interactive explanation user interfaces (XUI) with system capabilities. X-SYS organizes around four quality attributes named STAR (scalability, traceability, responsiveness, and adaptability), and specifies a five-component decomposition (XUI Services, Explanation Services, Model Services, Data Services, Orchestration and Governance). It maps interaction patterns to system capabilities to decouple user interface evolution from backend computation. We implement X-SYS through SemanticLens, a system for semantic search and activation steering in vision-language models. SemanticLens demonstrates how contract-based service boundaries enable independent evolution, offline/online separation ensures responsiveness, and persistent state management supports traceability. Together, this work provides a reusable blueprint and concrete instantiation for interactive explanation systems supporting end-to-end design under operational constraints.

Towards Visually Explaining Statistical Tests with Applications in Biomedical Imaging

Jan 20, 2026

Abstract: Deep neural two-sample tests have recently shown strong power for detecting distributional differences between groups, yet their black-box nature limits interpretability and practical adoption in biomedical analysis. Moreover, most existing post-hoc explainability methods rely on class labels, making them unsuitable for label-free statistical testing settings. We propose an explainable deep statistical testing framework that augments deep two-sample tests with sample-level and feature-level explanations, revealing which individual samples and which input features drive statistically significant group differences. Our method highlights which image regions and which individual samples contribute most to the detected group difference, providing spatial and instance-wise insight into the test's decision. Applied to biomedical imaging data, the proposed framework identifies influential samples and highlights anatomically meaningful regions associated with disease-related variation. This work bridges statistical inference and explainable AI, enabling interpretable, label-free population analysis in medical imaging.

Multimodal Deep Learning for Prediction of Progression-Free Survival in Patients with Neuroendocrine Tumors Undergoing 177Lu-based Peptide Receptor Radionuclide Therapy

Nov 07, 2025

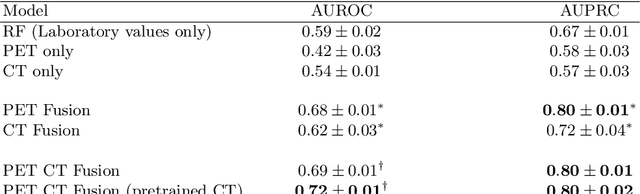

Abstract: Peptide receptor radionuclide therapy (PRRT) is an established treatment for metastatic neuroendocrine tumors (NETs), yet long-term disease control occurs only in a subset of patients. Predicting progression-free survival (PFS) could support individualized treatment planning. This study evaluates laboratory, imaging, and multimodal deep learning models for PFS prediction in PRRT-treated patients. In this retrospective, single-center study, 116 patients with metastatic NETs undergoing 177Lu-DOTATOC were included. Clinical characteristics, laboratory values, and pretherapeutic somatostatin receptor positron emission tomography/computed tomography (SR-PET/CT) scans were collected. Seven models were trained to classify low- vs. high-PFS groups, including unimodal (laboratory, SR-PET, or CT) and multimodal fusion approaches. Explainability was evaluated by feature importance analysis and gradient maps. Forty-two patients (36%) had short PFS (< 1 year), and 74 patients had long PFS (> 1 year). Groups were similar in most characteristics, except for higher baseline chromogranin A (p = 0.003), elevated gamma-GT (p = 0.002), and fewer PRRT cycles (p < 0.001) in short-PFS patients. The Random Forest model trained only on laboratory biomarkers reached an AUROC of 0.59 ± 0.02. Unimodal three-dimensional convolutional neural networks using SR-PET or CT performed worse (AUROC 0.42 ± 0.03 and 0.54 ± 0.01, respectively). A multimodal fusion model combining laboratory values, SR-PET, and CT, augmented with a pretrained CT branch, achieved the best results (AUROC 0.72 ± 0.01, AUPRC 0.80 ± 0.01). Multimodal deep learning combining SR-PET, CT, and laboratory biomarkers outperformed unimodal approaches for PFS prediction after PRRT. Upon external validation, such models may support risk-adapted follow-up strategies.

Atlas-Alignment: Making Interpretability Transferable Across Language Models

Oct 31, 2025

Abstract: Interpretability is crucial for building safe, reliable, and controllable language models, yet existing interpretability pipelines remain costly and difficult to scale. Interpreting a new model typically requires costly training of model-specific sparse autoencoders, manual or semi-automated labeling of SAE components, and their subsequent validation. We introduce Atlas-Alignment, a framework for transferring interpretability across language models by aligning unknown latent spaces to a Concept Atlas - a labeled, human-interpretable latent space - using only shared inputs and lightweight representational alignment techniques. Once aligned, this enables two key capabilities in previously opaque models: (1) semantic feature search and retrieval, and (2) steering generation along human-interpretable atlas concepts. Through quantitative and qualitative evaluations, we show that simple representational alignment methods enable robust semantic retrieval and steerable generation without the need for labeled concept data. Atlas-Alignment thus amortizes the cost of explainable AI and mechanistic interpretability: by investing in one high-quality Concept Atlas, we can make many new models transparent and controllable at minimal marginal cost.

LieSolver: A PDE-constrained solver for IBVPs using Lie symmetries

Oct 29, 2025

Abstract: We introduce a method for efficiently solving initial-boundary value problems (IBVPs) that uses Lie symmetries to enforce the associated partial differential equation (PDE) exactly by construction. By leveraging symmetry transformations, the model inherently incorporates the physical laws and learns solutions from initial and boundary data. As a result, the loss directly measures the model's accuracy, leading to improved convergence. Moreover, for well-posed IBVPs, our method enables rigorous error estimation. The approach yields compact models, facilitating an efficient optimization. We implement LieSolver and demonstrate its application to linear homogeneous PDEs with a range of initial conditions, showing that it is faster and more accurate than physics-informed neural networks (PINNs). Overall, our method improves both computational efficiency and the reliability of predictions for PDE-constrained problems.

Model Science: getting serious about verification, explanation and control of AI systems

Aug 27, 2025

Abstract: The growing adoption of foundation models calls for a paradigm shift from Data Science to Model Science. Unlike data-centric approaches, Model Science places the trained model at the core of analysis, aiming to interact with, verify, explain, and control its behavior across diverse operational contexts. This paper introduces a conceptual framework for a new discipline called Model Science, along with the proposal for its four key pillars: Verification, which requires strict, context-aware evaluation protocols; Explanation, which is understood as various approaches to explore internal model operations; Control, which integrates alignment techniques to steer model behavior; and Interface, which develops interactive and visual explanation tools to improve human calibration and decision-making. The proposed framework aims to guide the development of credible, safe, and human-aligned AI systems.

* 8 pages

Attribution-guided Pruning for Compression, Circuit Discovery, and Targeted Correction in LLMs

Jun 16, 2025

Abstract: Large Language Models (LLMs) are central to many contemporary AI applications, yet their extensive parameter counts pose significant challenges for deployment in memory- and compute-constrained environments. Recent works in eXplainable AI (XAI), particularly on attribution methods, suggest that interpretability can also enable model compression by identifying and removing components irrelevant to inference. In this paper, we leverage Layer-wise Relevance Propagation (LRP) to perform attribution-guided pruning of LLMs. While LRP has shown promise in structured pruning for vision models, we extend it to unstructured pruning in LLMs and demonstrate that it can substantially reduce model size with minimal performance loss. Our method is especially effective in extracting task-relevant subgraphs -- so-called "circuits" -- which can represent core functions (e.g., indirect object identification). Building on this, we introduce a technique for model correction, by selectively removing circuits responsible for spurious behaviors (e.g., toxic outputs). All in all, we gather these techniques as a uniform holistic framework and showcase its effectiveness and limitations through extensive experiments for compression, circuit discovery and model correction on Llama and OPT models, highlighting its potential for improving both model efficiency and safety. Our code is publicly available at https://github.com/erfanhatefi/SparC3.
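The idea of attribution-guided unstructured pruning can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `prune_by_relevance`, the flat weight list, and the use of absolute relevance as the ranking criterion are assumptions made for illustration, and the relevance scores are taken as given rather than computed with LRP.

```python
def prune_by_relevance(weights, relevance, sparsity):
    """Zero the `sparsity` fraction of weights with the lowest |relevance|.

    Hypothetical helper: `relevance` is assumed to hold one precomputed
    attribution score per weight (e.g. from an attribution method such as LRP).
    """
    if len(weights) != len(relevance):
        raise ValueError("weights and relevance must have the same length")
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must lie in [0, 1]")

    n_prune = int(len(weights) * sparsity)
    # Rank weight indices from least to most relevant.
    order = sorted(range(len(weights)), key=lambda i: abs(relevance[i]))
    pruned = set(order[:n_prune])
    # Unstructured pruning: individual weights are zeroed, not whole units.
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


weights = [0.5, -1.2, 0.3, 2.0]
relevance = [0.9, 0.01, 0.4, 0.05]
print(prune_by_relevance(weights, relevance, sparsity=0.5))
```

Note that the large weight `2.0` is removed because its relevance is low, which is the difference from magnitude-based pruning.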

Deep Learning-based Multi Project InP Wafer Simulation for Unsupervised Surface Defect Detection

Jun 12, 2025

Abstract: Quality management in semiconductor manufacturing often relies on template matching with known golden standards. For Indium-Phosphide (InP) multi-project wafer manufacturing, low production scale and high design variability lead to such golden standards being typically unavailable. Defect detection, in turn, is manual and labor-intensive. This work addresses this challenge by proposing a methodology to generate a synthetic golden standard using Deep Neural Networks, trained to simulate photo-realistic InP wafer images from CAD data. We evaluate various training objectives and assess the quality of the simulated images on both synthetic data and InP wafer photographs. Our deep-learning-based method outperforms a baseline decision-tree-based approach, enabling the use of a 'simulated golden die' from CAD plans in any user-defined region of a wafer for more efficient defect detection. We apply our method to a template matching procedure, to demonstrate its practical utility in surface defect detection.

Relevance-driven Input Dropout: an Explanation-guided Regularization Technique

May 27, 2025

Abstract: Overfitting is a well-known issue extending even to state-of-the-art (SOTA) Machine Learning (ML) models, resulting in reduced generalization, and a significant train-test performance gap. Mitigation measures include a combination of dropout, data augmentation, weight decay, and other regularization techniques. Among the various data augmentation strategies, occlusion is a prominent technique that typically focuses on randomly masking regions of the input during training. Most of the existing literature emphasizes randomness in selecting and modifying the input features instead of regions that strongly influence model decisions. We propose Relevance-driven Input Dropout (RelDrop), a novel data augmentation method which selectively occludes the most relevant regions of the input, nudging the model to use other important features in the prediction process, thus improving model generalization through informed regularization. We further conduct qualitative and quantitative analyses to study how Relevance-driven Input Dropout (RelDrop) affects model decision-making. Through a series of experiments on benchmark datasets, we demonstrate that our approach improves robustness towards occlusion, results in models utilizing more features within the region of interest, and boosts inference time generalization performance. Our code is available at https://github.com/Shreyas-Gururaj/LRP_Relevance_Dropout.
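The contrast with random occlusion can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the RelDrop implementation: the function name `relevance_dropout`, the flat feature list, the fixed count `k`, and the `fill` value are hypothetical, and the relevance scores are assumed to be precomputed by some attribution method.

```python
def relevance_dropout(x, relevance, k, fill=0.0):
    """Occlude the k input features with the highest relevance.

    Hypothetical helper: random occlusion would pick the k indices at
    random, whereas here they are chosen by attribution score.
    """
    if len(x) != len(relevance):
        raise ValueError("x and relevance must have the same length")
    if not 0 <= k <= len(x):
        raise ValueError("k must lie in [0, len(x)]")

    # Indices of the k most relevant features.
    top = sorted(range(len(x)), key=lambda i: relevance[i], reverse=True)[:k]
    masked = set(top)
    return [fill if i in masked else v for i, v in enumerate(x)]


x = [1.0, 2.0, 3.0, 4.0]
relevance = [0.1, 0.7, 0.2, 0.9]
print(relevance_dropout(x, relevance, k=2))
```

Applied during training, such a mask removes the evidence the model currently leans on most, so the remaining features have to carry the prediction.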

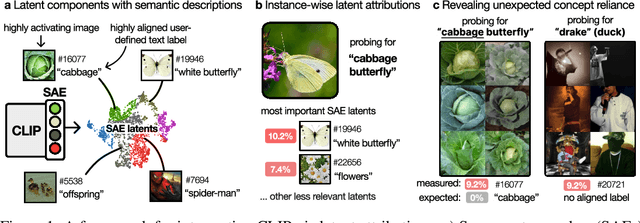

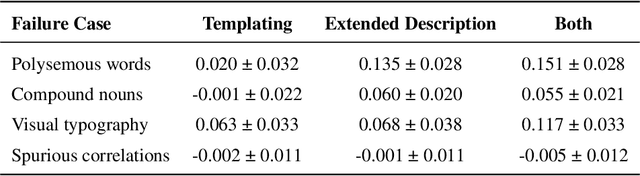

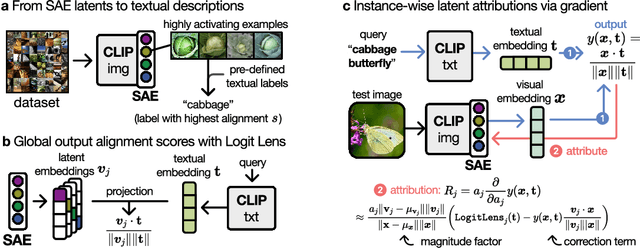

From What to How: Attributing CLIP's Latent Components Reveals Unexpected Semantic Reliance

May 26, 2025

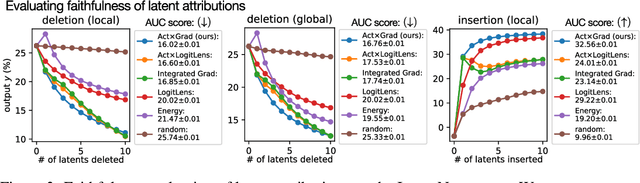

Abstract: Transformer-based CLIP models are widely used for text-image probing and feature extraction, making it relevant to understand the internal mechanisms behind their predictions. While recent works show that Sparse Autoencoders (SAEs) yield interpretable latent components, they focus on what these encode and miss how they drive predictions. We introduce a scalable framework that reveals what latent components activate for, how they align with expected semantics, and how important they are to predictions. To achieve this, we adapt attribution patching for instance-wise component attributions in CLIP and highlight key faithfulness limitations of the widely used Logit Lens technique. By combining attributions with semantic alignment scores, we can automatically uncover reliance on components that encode semantically unexpected or spurious concepts. Applied across multiple CLIP variants, our method uncovers hundreds of surprising components linked to polysemous words, compound nouns, visual typography and dataset artifacts. While text embeddings remain prone to semantic ambiguity, they are more robust to spurious correlations compared to linear classifiers trained on image embeddings. A case study on skin lesion detection highlights how such classifiers can amplify hidden shortcuts, underscoring the need for holistic, mechanistic interpretability. We provide code at https://github.com/maxdreyer/attributing-clip.