Simon Baur

Multimodal Deep Learning for Prediction of Progression-Free Survival in Patients with Neuroendocrine Tumors Undergoing 177Lu-based Peptide Receptor Radionuclide Therapy

Nov 07, 2025

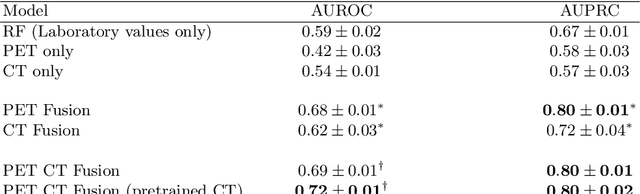

Abstract: Peptide receptor radionuclide therapy (PRRT) is an established treatment for metastatic neuroendocrine tumors (NETs), yet long-term disease control occurs only in a subset of patients. Predicting progression-free survival (PFS) could support individualized treatment planning. This study evaluates laboratory, imaging, and multimodal deep learning models for PFS prediction in PRRT-treated patients. This retrospective, single-center study included 116 patients with metastatic NETs undergoing 177Lu-DOTATOC therapy. Clinical characteristics, laboratory values, and pretherapeutic somatostatin receptor positron emission tomography/computed tomography (SR-PET/CT) scans were collected. Seven models were trained to classify low- vs. high-PFS groups, including unimodal (laboratory, SR-PET, or CT) and multimodal fusion approaches. Explainability was evaluated by feature importance analysis and gradient maps. Forty-two patients (36%) had short PFS (< 1 year) and 74 patients had long PFS (> 1 year). Groups were similar in most characteristics, except for higher baseline chromogranin A (p = 0.003), elevated gamma-GT (p = 0.002), and fewer PRRT cycles (p < 0.001) in short-PFS patients. The Random Forest model trained only on laboratory biomarkers reached an AUROC of 0.59 ± 0.02. Unimodal three-dimensional convolutional neural networks using SR-PET or CT performed worse (AUROC 0.42 ± 0.03 and 0.54 ± 0.01, respectively). A multimodal fusion model combining laboratory values, SR-PET, and CT, augmented with a pretrained CT branch, achieved the best results (AUROC 0.72 ± 0.01, AUPRC 0.80 ± 0.01). Multimodal deep learning combining SR-PET, CT, and laboratory biomarkers outperformed unimodal approaches for PFS prediction after PRRT. Upon external validation, such models may support risk-adapted follow-up strategies.
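As a reading aid for the AUROC figures quoted in this abstract, the sketch below computes AUROC as the fraction of (positive, negative) pairs in which the positive case receives the higher score, with ties counted as one half. The labels and scores are invented for illustration, and treating long PFS as the positive class is an assumption; neither is taken from the study.

```python
def auroc(labels, scores):
    """Probability that a random positive is scored above a random negative.

    Ties between a positive and a negative score count as 0.5.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical data: 1 = long PFS (assumed positive class), 0 = short PFS.
labels = [1, 1, 1, 0, 0]
scores = [0.9, 0.6, 0.4, 0.5, 0.2]
value = auroc(labels, scores)  # 5 of 6 pairs are ranked correctly
```

Under this definition, a value of 0.5 corresponds to chance-level ranking, and a value below 0.5 means the model tends to rank the two groups in the wrong order.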

Synthetic Generation of Dermatoscopic Images with GAN and Closed-Form Factorization

Oct 07, 2024

Abstract: In dermatological diagnosis, the analysis of dermatoscopic and microscopic skin lesion images is pivotal for the accurate and early detection of various medical conditions, yet the cost of creating diverse, high-quality annotated datasets has hampered the accuracy and generalizability of machine learning models. We propose an unsupervised augmentation solution that applies Generative Adversarial Network (GAN) based models, and associated techniques operating on their latent space, to generate controlled, semi-automatically discovered semantic variations in dermatoscopic images. We created synthetic images that incorporate these semantic variations and augmented the training data with them. With this approach, we increased the performance of machine learning models and set a new benchmark among non-ensemble models for skin lesion classification on the HAM10000 dataset. We also used the observed analytics and generated models for detailed studies on model explainability, affirming the effectiveness of our solution.
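The abstract does not spell out the factorization step. One common reading of "closed-form factorization" for GAN latent spaces is to take the unit directions n that maximize ||A n||², where A is the weight matrix of the first layer applied to the latent code; these are the top eigenvectors of AᵀA. The sketch below illustrates that idea with NumPy under this assumption. The matrix `A`, its shape, and the step size 2.0 are stand-ins, not values from the paper.

```python
import numpy as np


def closed_form_directions(weight, k):
    """Return the k unit directions n that maximize ||weight @ n||^2.

    weight: array of shape (out_features, latent_dim).
    Returns (directions, strengths), where directions has shape
    (k, latent_dim) and strengths are the matching eigenvalues of
    weight.T @ weight, sorted from largest to smallest.
    """
    gram = weight.T @ weight
    eigvals, eigvecs = np.linalg.eigh(gram)  # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:k]    # indices of the k largest
    return eigvecs[:, order].T, eigvals[order]


# Stand-in weights (hypothetical): a random matrix instead of a trained generator layer.
rng = np.random.default_rng(0)
A = rng.standard_normal((64, 16))
directions, strengths = closed_form_directions(A, k=3)

# Moving a latent code along a discovered direction yields an edited code
# that a generator could decode into a semantic variation of the image.
z = rng.standard_normal(16)
z_edited = z + 2.0 * directions[0]
```

No labels are needed to find these directions, which is consistent with the unsupervised framing in the abstract; deciding which directions correspond to useful semantic variations would still require inspection.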
