Wenjie Xuan

Rethink Sparse Signals for Pose-guided Text-to-image Generation

Jun 26, 2025

Abstract: Recent works have favored dense signals (e.g., depth, DensePose) as an alternative to sparse signals (e.g., OpenPose) to provide detailed spatial guidance for pose-guided text-to-image generation. However, dense representations raise new challenges, including editing difficulties and potential inconsistencies with textual prompts. This fact motivates us to revisit sparse signals for pose guidance, owing to their simplicity and shape-agnostic nature, which remains underexplored. This paper proposes a novel Spatial-Pose ControlNet (SP-Ctrl), equipping sparse signals with robust controllability for pose-guided image generation. Specifically, we extend OpenPose to a learnable spatial representation, making keypoint embeddings discriminative and expressive. Additionally, we introduce keypoint concept learning, which encourages keypoint tokens to attend to the spatial positions of each keypoint, thus improving pose alignment. Experiments on animal- and human-centric image generation tasks demonstrate that our method outperforms recent spatially controllable T2I generation approaches under sparse-pose guidance and even matches the performance of dense signal-based methods. Moreover, SP-Ctrl shows promising capabilities in diverse and cross-species generation through sparse signals. Code will be available at https://github.com/DREAMXFAR/SP-Ctrl.

Detect Changes like Humans: Incorporating Semantic Priors for Improved Change Detection

Dec 22, 2024

Abstract: When given two similar images, humans identify their differences by comparing the appearance (e.g., color, texture) with the help of semantics (e.g., objects, relations). However, mainstream change detection models adopt a supervised training paradigm, where the annotated binary change map is the main constraint. Thus, these methods primarily emphasize the difference-aware features between bi-temporal images and neglect the semantic understanding of the changed landscapes, which undermines the accuracy in the presence of noise and illumination variations. To this end, this paper explores incorporating semantic priors to improve the ability to detect changes. Firstly, we propose a Semantic-Aware Change Detection network, namely SA-CDNet, which transfers the common knowledge of the visual foundation models (i.e., FastSAM) to change detection. Inspired by the human visual paradigm, a novel dual-stream feature decoder is derived to distinguish changes by combining semantic-aware features and difference-aware features. Secondly, we design a single-temporal semantic pre-training strategy to enhance the semantic understanding of landscapes, which brings further increments. Specifically, we construct pseudo-change detection data from public single-temporal remote sensing segmentation datasets for large-scale pre-training, where an extra branch is also introduced for the proxy semantic segmentation task. Experimental results on five challenging benchmarks demonstrate the superiority of our method over the existing state-of-the-art methods. The code is available at https://github.com/thislzm/SA-CD.

RFL-CDNet: Towards Accurate Change Detection via Richer Feature Learning

Apr 27, 2024

Abstract: Change detection is a crucial but extremely challenging task in remote sensing image analysis, and much progress has been made with the rapid development of deep learning. However, most existing deep learning-based change detection methods mainly focus on intricate feature extraction and multi-scale feature fusion, while ignoring the insufficient utilization of features in the intermediate stages, thus resulting in sub-optimal results. To this end, we propose a novel framework, named RFL-CDNet, that utilizes richer feature learning to boost change detection performance. Specifically, we first introduce deep multiple supervision to enhance intermediate representations, thus unleashing the potential of the backbone feature extractor at each stage. Furthermore, we design the Coarse-To-Fine Guiding (C2FG) module and the Learnable Fusion (LF) module to further improve feature learning and obtain more discriminative feature representations. The C2FG module aims to seamlessly integrate the side prediction from the previous coarse scale into the current fine-scale prediction in a coarse-to-fine manner, while the LF module assumes that the contribution of each stage and each spatial location is independent, thus designing a learnable module to fuse multiple predictions. Experiments on several benchmark datasets show that our proposed RFL-CDNet achieves state-of-the-art performance on the WHU cultivated land dataset and the CDD dataset, and the second-best performance on the WHU building dataset. The source code and models are publicly available at https://github.com/Hhaizee/RFL-CDNet.

When ControlNet Meets Inexplicit Masks: A Case Study of ControlNet on its Contour-following Ability

Mar 01, 2024

Abstract: ControlNet excels at creating content that closely matches precise contours in user-provided masks. However, when these masks contain noise, as frequently occurs with non-expert users, the output includes unwanted artifacts. This paper first highlights the crucial role of controlling the impact of these inexplicit masks with diverse deterioration levels through in-depth analysis. Subsequently, to enhance controllability with inexplicit masks, an advanced Shape-aware ControlNet consisting of a deterioration estimator and a shape-prior modulation block is devised. The deterioration estimator assesses the deterioration factor of the provided masks. This factor is then utilized in the modulation block to adaptively modulate the model's contour-following ability, which helps it dismiss the noisy parts of the inexplicit masks. Extensive experiments prove its effectiveness in encouraging ControlNet to interpret inaccurate spatial conditions robustly rather than blindly following the given contours. We showcase application scenarios like modifying shape priors and composable shape-controllable generation. Code will be available soon.

PNT-Edge: Towards Robust Edge Detection with Noisy Labels by Learning Pixel-level Noise Transitions

Jul 26, 2023

Abstract: Relying on large-scale training data with pixel-level labels, previous edge detection methods have achieved high performance. However, it is hard to manually label edges accurately, especially for large datasets, and thus the datasets inevitably contain noisy labels. This label-noise issue has been studied extensively for classification, but remains under-explored for edge detection. To address the label-noise issue for edge detection, this paper proposes to learn Pixel-level Noise Transitions to model the label-corruption process. To achieve this, we develop a novel Pixel-wise Shift Learning (PSL) module to estimate the transition from clean to noisy labels as a displacement field. Exploiting the estimated noise transitions, our model, named PNT-Edge, is able to fit the prediction to clean labels. In addition, a local edge density regularization term is devised to exploit local structure information for better transition learning. This term encourages learning large shifts for the edges with complex local structures. Experiments on SBD and Cityscapes demonstrate the effectiveness of our method in relieving the impact of label noise. Code will be available on GitHub.

An End-to-end Supervised Domain Adaptation Framework for Cross-Domain Change Detection

Apr 01, 2022

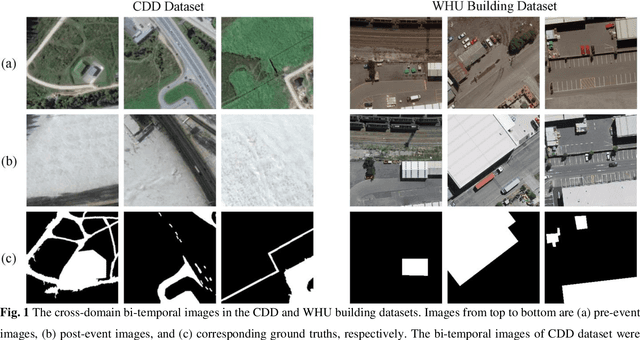

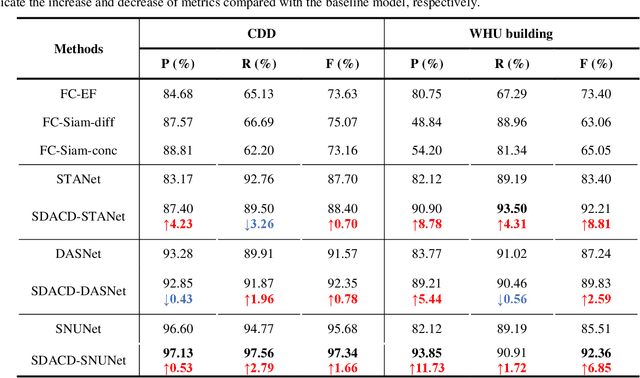

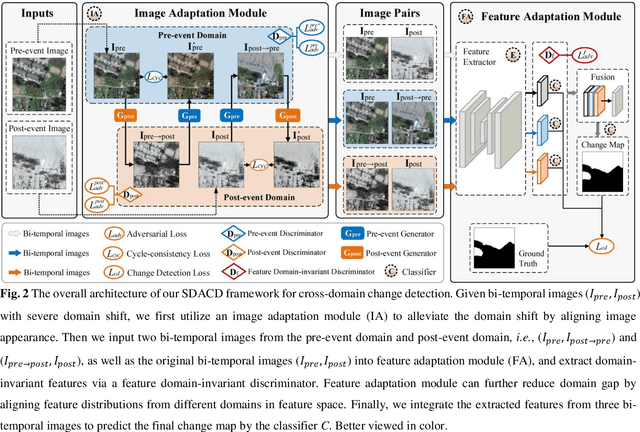

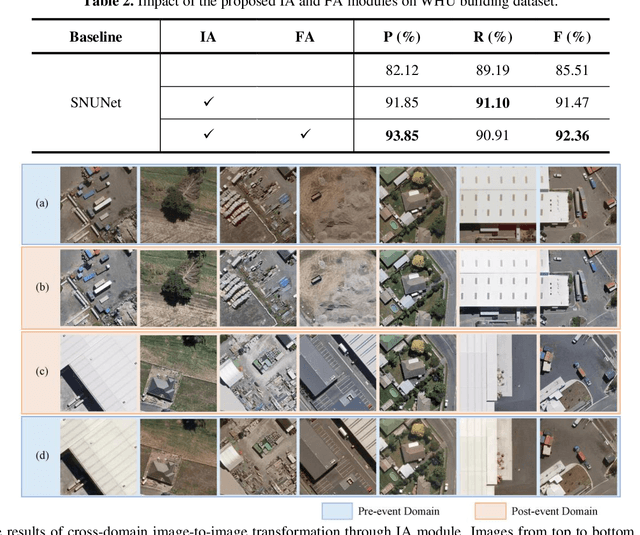

Abstract: Existing deep learning-based change detection methods try to elaborately design complicated neural networks with powerful feature representations, but ignore the universal domain shift induced by time-varying land cover changes, including luminance fluctuations and season changes between pre-event and post-event images, thereby producing sub-optimal results. In this paper, we propose an end-to-end Supervised Domain Adaptation framework for cross-domain Change Detection, namely SDACD, to effectively alleviate the domain shift between bi-temporal images for better change predictions. Specifically, our SDACD presents collaborative adaptations from both image and feature perspectives with supervised learning. Image adaptation exploits generative adversarial learning with cycle-consistency constraints to perform cross-domain style transformation, effectively narrowing the domain gap in a two-side generation fashion. For feature adaptation, we extract domain-invariant features to align different feature distributions in the feature space, which could further reduce the domain gap of cross-domain images. To further improve the performance, we combine three types of bi-temporal images for the final change prediction, including the initial input bi-temporal images and two generated bi-temporal images from the pre-event and post-event domains. Extensive experiments and analyses on two benchmarks demonstrate the effectiveness and universality of our proposed framework. Notably, our framework pushes several representative baseline models up to new state-of-the-art records, achieving 97.34% and 92.36% on the CDD and WHU building datasets, respectively. The source code and models are publicly available at https://github.com/Perfect-You/SDACD.
