Wei Zhuo

Fully Self-Supervised Learning for Semantic Segmentation

Feb 24, 2022

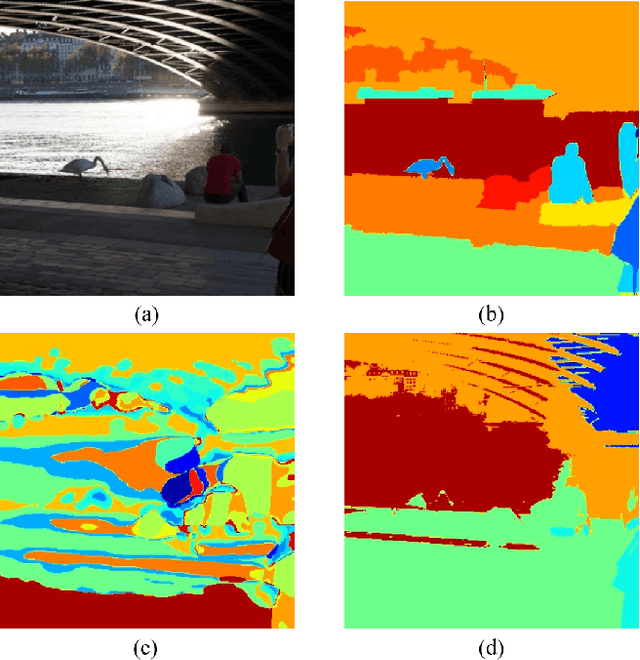

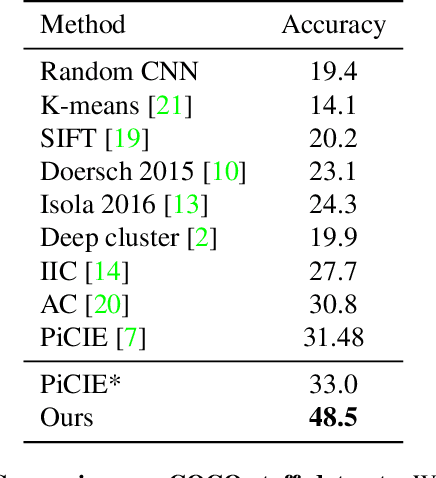

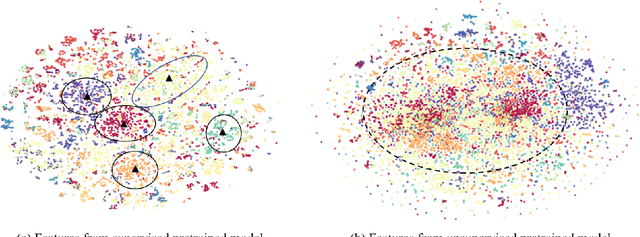

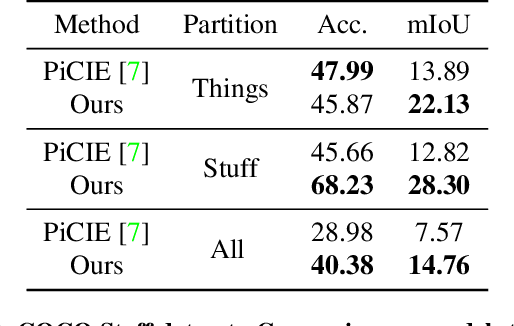

Abstract: In this work, we present a fully self-supervised framework for semantic segmentation (FS^4). A fully bootstrapped strategy for semantic segmentation, which saves the effort of extensive annotation, is crucial for building customized end-to-end models for open-world domains. Such a capability is badly needed in realistic scenarios. Although recent self-supervised semantic segmentation methods have made great progress, they depend heavily on fully supervised pretrained models, which makes a fully self-supervised pipeline impossible. To solve this problem, we propose a bootstrapped training scheme for semantic segmentation that fully leverages global semantic knowledge for self-supervision through our proposed PGG strategy and CAE module. In particular, we perform pixel clustering and assignment for segmentation supervision. To prevent the clustering from degenerating into a mess, we propose 1) a pyramid-global-guided (PGG) training strategy that supervises learning with pyramid image/patch-level pseudo labels, which are generated by grouping the unsupervised features. The stable global and pyramid semantic pseudo labels prevent the segmentation from learning too many cluttered regions or degrading to a single background region; and 2) a context-aware embedding (CAE) module that generates a global feature embedding in view of neighbors that are close in both space and appearance, in a non-trivial way. We evaluate our method on the large-scale COCO-Stuff dataset and achieve a 7.19 mIoU improvement on both things and stuff objects.
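The "pixel clustering and assignment" step mentioned in the abstract can be pictured with a generic k-means-style loop. The sketch below is only an illustration under stated assumptions: it uses plain Euclidean nearest-centroid assignment on toy 2-D features, and the function names `assign_pseudo_labels` and `update_centroids` are hypothetical. It is not the PGG strategy or the CAE module.

```python
import math

def assign_pseudo_labels(pixel_features, centroids):
    """Give each pixel feature the index of its nearest centroid (its pseudo label)."""
    labels = []
    for feat in pixel_features:
        dists = [math.dist(feat, c) for c in centroids]
        labels.append(dists.index(min(dists)))
    return labels

def update_centroids(pixel_features, labels, centroids):
    """Recompute each centroid as the mean of the features assigned to it."""
    dim = len(centroids[0])
    updated = []
    for k, old in enumerate(centroids):
        members = [f for f, lab in zip(pixel_features, labels) if lab == k]
        if not members:
            updated.append(list(old))  # an empty cluster keeps its old centroid
            continue
        updated.append([sum(m[d] for m in members) / len(members) for d in range(dim)])
    return updated

# Toy data (assumed): four pixel embeddings forming two obvious groups.
features = [[0.0, 0.1], [0.2, 0.0], [5.0, 5.1], [4.9, 5.0]]
centroids = [[0.0, 0.0], [5.0, 5.0]]
labels = assign_pseudo_labels(features, centroids)
centroids = update_centroids(features, labels, centroids)
print(labels)
```

In a real pipeline the features would come from the segmentation network, and the assignments would serve as pixel-level training targets.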

Graph Neural Networks with Feature and Structure Aware Random Walk

Nov 19, 2021

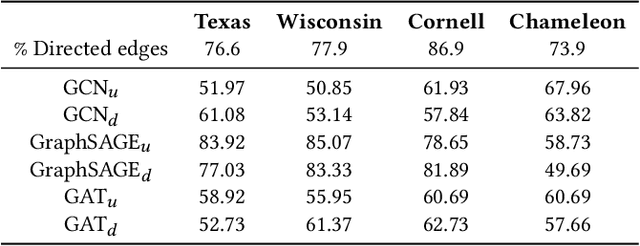

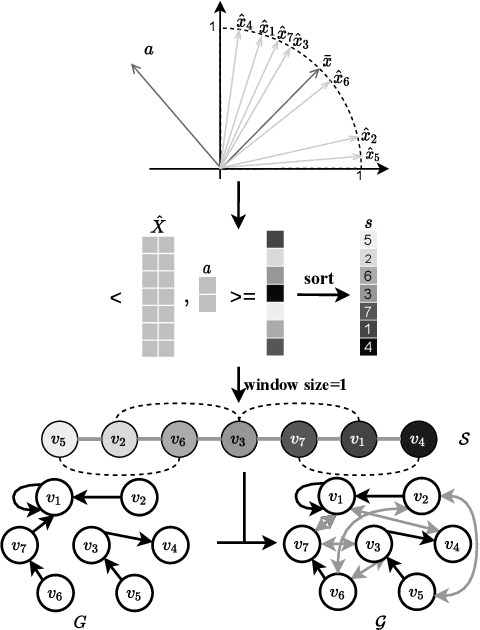

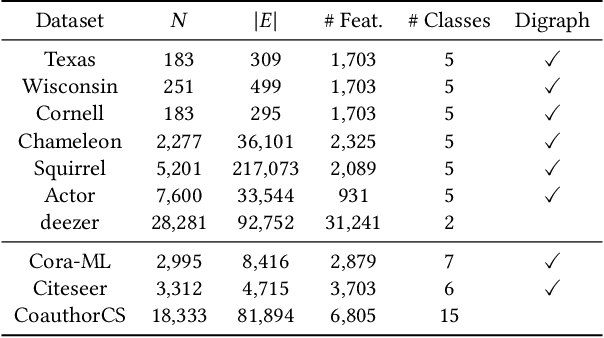

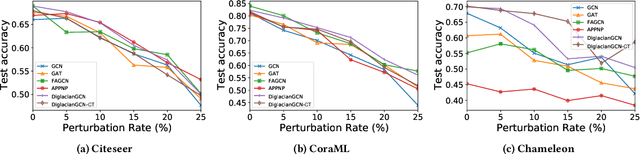

Abstract: Graph Neural Networks (GNNs) have received increasing attention for representation learning in various machine learning tasks. However, most existing GNNs that apply neighborhood aggregation perform poorly on graphs with heterophily, where adjacent nodes belong to different classes. In this paper, we show that in typical heterophilous graphs, the edges may be directed, and whether to treat the edges as is or simply make them undirected greatly affects the performance of GNN models. Furthermore, due to the limitation of heterophily, it is highly beneficial for nodes to aggregate messages from similar nodes beyond the local neighborhood. These observations motivate us to develop a model that adaptively learns the directionality of the graph and exploits the underlying long-distance correlations between nodes. We first generalize the graph Laplacian to digraphs based on the proposed Feature-Aware PageRank algorithm, which simultaneously considers the graph directionality and long-distance feature similarity between nodes. The digraph Laplacian then defines a graph propagation matrix that leads to a model called DiglacianGCN. Based on this, we further leverage the node proximity measured by commute times between nodes in order to preserve the nodes' long-distance correlation at the topology level. Extensive experiments on ten datasets with different levels of homophily demonstrate the effectiveness of our method over existing solutions in the task of node classification.
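To see why edge direction matters for a random-walk-based construction, the sketch below runs standard PageRank by power iteration on a small graph, once with directed edges and once with every edge made bidirectional. This is ordinary PageRank with uniform teleportation, used here as an assumption-laden stand-in; it is not the Feature-Aware PageRank proposed in the paper, and the toy graph is invented for illustration.

```python
def pagerank(adj, damping=0.85, iters=100):
    """Power-iteration PageRank. adj[u] lists the nodes that u points to."""
    n = len(adj)
    rank = [1.0 / n] * n
    for _ in range(iters):
        new = [(1.0 - damping) / n] * n  # uniform teleportation mass
        for u, outs in enumerate(adj):
            if outs:
                share = damping * rank[u] / len(outs)
                for v in outs:
                    new[v] += share
            else:
                # Dangling node: spread its mass uniformly over all nodes.
                share = damping * rank[u] / n
                for v in range(n):
                    new[v] += share
        rank = new
    return rank

# Toy graph (assumed): edges 0->1, 0->2, 1->2, 2->0.
directed = [[1, 2], [2], [0]]
# The same edges with direction discarded.
undirected = [[1, 2], [0, 2], [0, 1]]

print(pagerank(directed))
print(pagerank(undirected))
```

On the undirected version all three nodes are equivalent, whereas the directed version concentrates more stationary mass on node 2 than on node 1, so the two treatments yield different propagation weights.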

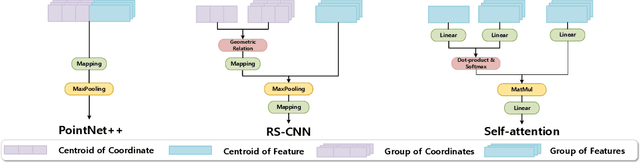

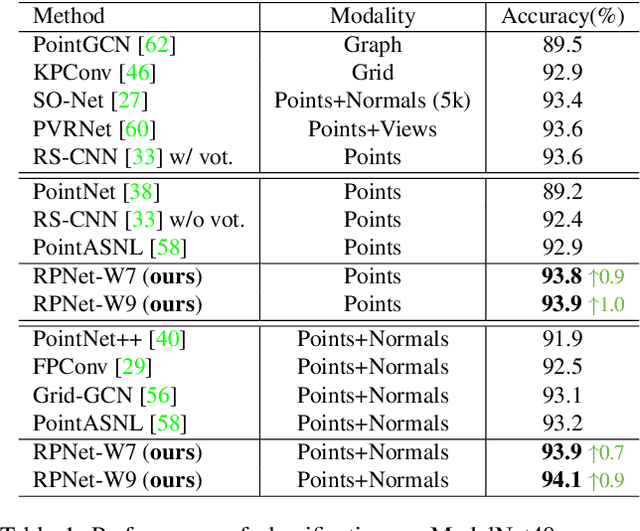

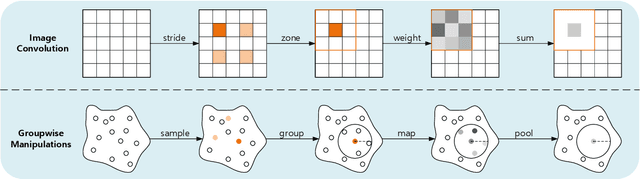

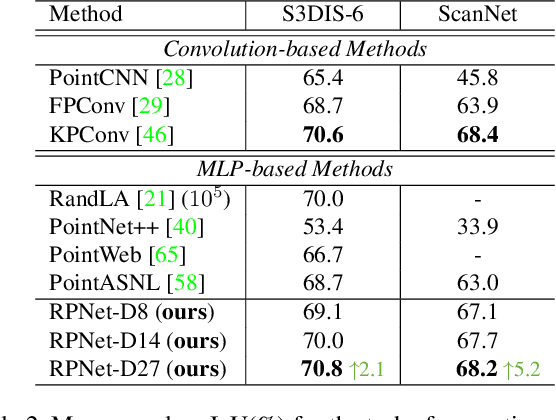

Learning Inner-Group Relations on Point Clouds

Aug 27, 2021

Abstract: The prevalence of relation networks in computer vision stands in stark contrast to underexplored point-based methods. In this paper, we explore the possibilities of local relation operators and survey their feasibility. We propose a scalable and efficient module called the group relation aggregator. The module computes the feature of a group by aggregating the features of the inner-group points, weighted by geometric relations and semantic relations. We adopt this module to design our RPNet. We further verify the expandability of RPNet, in terms of both depth and width, on the tasks of classification and segmentation. Surprisingly, empirical results show that a wider RPNet is better suited to classification, while a deeper RPNet works better on segmentation. RPNet achieves state-of-the-art results for classification and segmentation on challenging benchmarks. We also compare our local aggregator with PointNet++, saving around 30% of the parameters and 50% of the computation. Finally, we conduct experiments to reveal the robustness of RPNet with regard to rigid transformation and noise.
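As a rough picture of aggregating inner-group point features "weighted by geometric relations and semantic relations", the sketch below scores each neighbor by negative distance to the group center (geometric) plus a feature dot product (semantic), then takes a softmax-weighted average. The scoring functions and the name `aggregate_group` are assumptions made for illustration; the abstract does not specify how RPNet computes these relations.

```python
import math

def aggregate_group(center_xyz, center_feat, group_xyz, group_feats):
    """Softmax-weighted average of neighbor features around one center point."""
    scores = []
    for xyz, feat in zip(group_xyz, group_feats):
        geometric = -math.dist(center_xyz, xyz)  # closer points score higher
        semantic = sum(a * b for a, b in zip(center_feat, feat))  # similar features score higher
        scores.append(geometric + semantic)
    # Numerically stable softmax over the relation scores.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(center_feat)
    pooled = [sum(w * f[d] for w, f in zip(weights, group_feats)) for d in range(dim)]
    return pooled, weights

# Toy group (assumed): one near, similar neighbor and one far, dissimilar neighbor.
pooled, weights = aggregate_group(
    center_xyz=(0.0, 0.0, 0.0),
    center_feat=[1.0, 0.0],
    group_xyz=[(0.1, 0.0, 0.0), (2.0, 0.0, 0.0)],
    group_feats=[[1.0, 0.0], [0.0, 1.0]],
)
print(weights)
```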

A Behavior-aware Graph Convolution Network Model for Video Recommendation

Jun 27, 2021

Abstract: Interactions between users and videos are the major data source for video recommendation. Despite the many existing recommendation methods, user behaviors on videos, which imply complex relations between users and videos, are still far from being fully explored. In this paper, we present a model named Sagittarius. Sagittarius adopts a graph convolutional neural network to capture the influence between users and videos. In particular, Sagittarius differentiates between user behaviors by weighting them and fuses the semantics of user behaviors into the embeddings of users and videos. Moreover, Sagittarius combines multiple optimization objectives to learn user and video embeddings and then produces video recommendations from the learned embeddings. The experimental results on multiple datasets show that Sagittarius outperforms several state-of-the-art models in terms of recall, unique recall and NDCG.
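Two of the reported metrics, recall and NDCG, have standard top-k definitions that are easy to state in code. The sketch below assumes binary relevance and a cutoff `k`; the paper's exact evaluation protocol (and its "unique recall" variant) is not described in the abstract, so this shows only the common textbook form.

```python
import math

def recall_at_k(ranked, relevant, k):
    """Fraction of the relevant items that appear in the top-k of the ranking."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / len(relevant)

def ndcg_at_k(ranked, relevant, k):
    """Binary-relevance NDCG: DCG of the ranking divided by the best possible DCG."""
    dcg = sum(
        1.0 / math.log2(pos + 2)  # position 0 gets discount log2(2) = 1
        for pos, item in enumerate(ranked[:k])
        if item in relevant
    )
    ideal_hits = min(k, len(relevant))
    idcg = sum(1.0 / math.log2(pos + 2) for pos in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0

# Toy example (assumed): videos "a" and "c" are the ones the user actually watched.
ranked = ["a", "b", "c", "d"]
relevant = {"a", "c"}
print(recall_at_k(ranked, relevant, 3), ndcg_at_k(ranked, relevant, 3))
```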

Bootstrap Representation Learning for Segmentation on Medical Volumes and Sequences

Jun 23, 2021

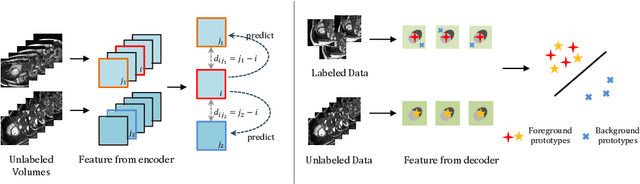

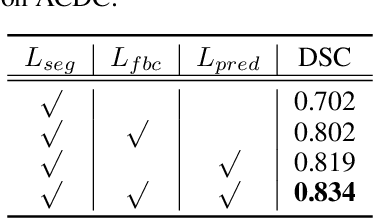

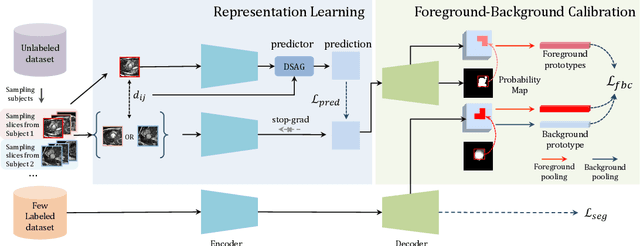

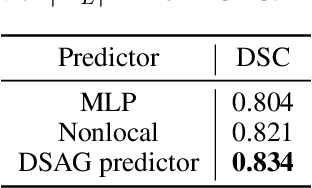

Abstract: In this work, we propose a novel, straightforward method for medical volume and sequence segmentation with limited annotations. To avoid laborious annotation, the recent success of self-supervised learning (SSL) motivates pre-training on unlabeled data. Despite its success, it is still challenging to adapt typical SSL methods to volume/sequence segmentation, because they neither mine local semantic discrimination nor adequately exploit volume and sequence structures. Based on the continuity between slices/frames and the common spatial layout of organs across volumes/sequences, we introduce a novel bootstrap self-supervised representation learning method that leverages the predictability of neighboring slices. At the core of our method is a simple and straightforward dense self-supervision on the predictions of local representations and a strategy of predicting local representations based on global context, which enables stable and reliable supervision for both global and local representation mining among volumes. Specifically, we first propose an asymmetric network with an attention-guided predictor to enforce distance-specific prediction and supervision on slices within and across volumes/sequences. Second, we introduce a novel prototype-based foreground-background calibration module to enhance representation consistency. The two parts are trained jointly on labeled and unlabeled data. When evaluated on three benchmark datasets of medical volumes and sequences, our model outperforms existing methods by a large margin of 4.5% DSC on ACDC, 1.7% on Prostate, and 2.3% on CAMUS. Intensive evaluations reveal the effectiveness and superiority of our method.
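The results above are reported in DSC, the Dice similarity coefficient, defined for a predicted mask A and a reference mask B as 2|A ∩ B| / (|A| + |B|). A minimal sketch for flat binary masks follows; the convention of returning 1.0 when both masks are empty is an assumption, since evaluation toolkits differ on that case.

```python
def dice_coefficient(pred, target):
    """Dice similarity coefficient between two flat binary (0/1) masks."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / total

# Toy masks (assumed): the prediction covers the target plus one extra pixel.
print(dice_coefficient([1, 1, 0, 0], [1, 0, 0, 0]))
```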

ST++: Make Self-training Work Better for Semi-supervised Semantic Segmentation

Jun 09, 2021

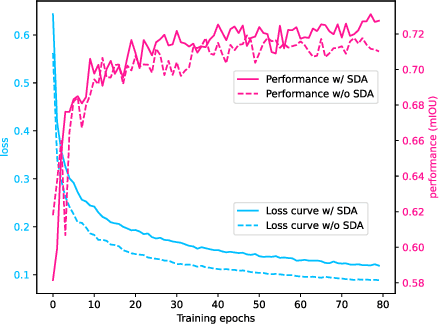

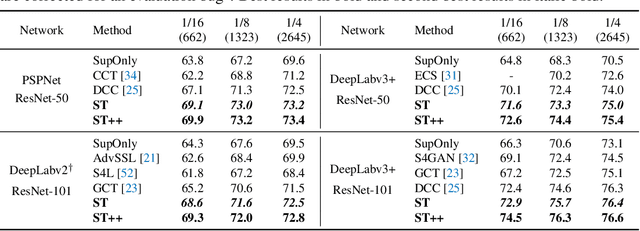

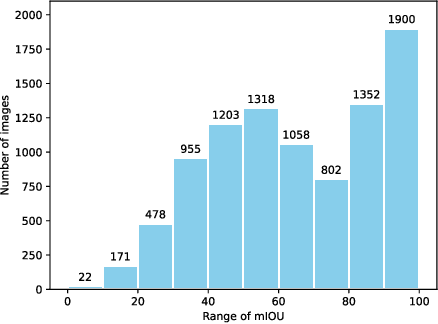

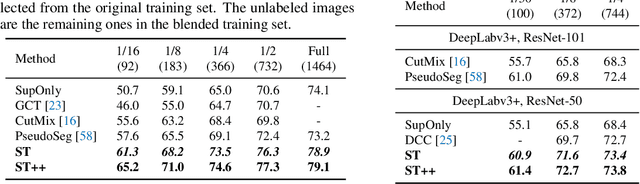

Abstract: In this paper, we investigate whether we can make self-training, a simple but popular framework, work better for semi-supervised segmentation. Since the core issue in the semi-supervised setting lies in the effective and efficient utilization of unlabeled data, we observe that increasing the diversity and hardness of unlabeled data is crucial to performance improvement. With this in mind, we propose to adopt the plainest self-training scheme coupled with appropriate strong data augmentations on unlabeled data (namely ST) for this task, which surprisingly outperforms previous methods under various settings without any bells and whistles. Moreover, to alleviate the negative impact of incorrectly pseudo-labeled images, we further propose an advanced self-training framework (namely ST++) that performs selective re-training by selecting and prioritizing the more reliable unlabeled images. As a result, the proposed ST++ significantly boosts the performance of the semi-supervised model and surpasses existing methods by a large margin on the Pascal VOC 2012 and Cityscapes benchmarks. Overall, we hope this straightforward and simple framework will serve as a strong baseline or competitor for future work. Code is available at https://github.com/LiheYoung/ST-PlusPlus.
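The idea of "selecting and prioritizing the more reliable unlabeled images" can be sketched as a ranking step followed by a split. The reliability score used below, the average pixel agreement between pseudo masks from earlier checkpoints and the final one, is an assumption chosen for illustration, as are all function names; consult the linked repository for the criterion the authors actually use.

```python
def pixel_agreement(mask_a, mask_b):
    """Fraction of pixels on which two flat label masks agree."""
    same = sum(1 for a, b in zip(mask_a, mask_b) if a == b)
    return same / len(mask_a)

def rank_by_reliability(predictions):
    """Sort image ids from most to least stable pseudo labels.

    predictions maps image_id -> [mask_ckpt_1, ..., mask_final] and needs
    at least two masks per image.
    """
    scores = {}
    for image_id, masks in predictions.items():
        final, earlier = masks[-1], masks[:-1]
        scores[image_id] = sum(pixel_agreement(m, final) for m in earlier) / len(earlier)
    return sorted(scores, key=scores.get, reverse=True)

def split_reliable(ranked_ids, fraction=0.5):
    """Split a ranked id list into (reliable, unreliable) parts."""
    cut = int(len(ranked_ids) * fraction)
    return ranked_ids[:cut], ranked_ids[cut:]

# Toy predictions (assumed): img_a is stable across checkpoints, img_b is not.
predictions = {
    "img_a": [[0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 1, 1]],
    "img_b": [[0, 1, 1, 0], [1, 1, 0, 0], [0, 0, 1, 1]],
}
reliable, unreliable = split_reliable(rank_by_reliability(predictions))
print(reliable, unreliable)
```

Under this sketch, a first re-training round would use only the reliable subset, and the remaining images would be pseudo-labeled again by the improved model before a second round.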

Self-Supervised Graph Learning with Proximity-based Views and Channel Contrast

Jun 07, 2021

Abstract: We consider graph representation learning in a self-supervised manner. Graph neural networks (GNNs) use neighborhood aggregation as a core component, which results in feature smoothing among nodes in proximity. While successful in various prediction tasks, such a paradigm falls short of capturing nodes' similarities over a long distance, which proves to be important for high-quality learning. To tackle this problem, we strengthen the graph with two additional graph views, in which nodes are directly linked to those with the most similar features or local structures. Not restricted by connectivity in the original graph, the generated views allow the model to enhance its expressive power with new and complementary perspectives from which to look at the relationship between nodes. Following a contrastive learning approach, we propose a method that aims to maximize the agreement between representations across the generated views and the original graph. We also propose a channel-level contrast approach that greatly reduces computation cost compared to the commonly used node-level contrast, whose computation cost is quadratic in the number of nodes. Extensive experiments on seven assortative graphs and four disassortative graphs demonstrate the effectiveness of our approach.
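The cost argument for channel-level contrast can be made concrete: comparing n node embeddings pairwise yields an n x n similarity matrix, while comparing the d embedding channels yields a d x d matrix whose size does not grow with the graph. The sketch below builds such a channel-by-channel cosine similarity matrix between two views; treating matching channels (the diagonal) as positives and the rest as negatives is an assumption for illustration, not the paper's stated loss.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def channel_similarity(view_a, view_b):
    """Return the d x d similarity matrix between channels of two n x d embeddings."""
    d = len(view_a[0])
    cols_a = [[row[c] for row in view_a] for c in range(d)]
    cols_b = [[row[c] for row in view_b] for c in range(d)]
    return [[cosine(ca, cb) for cb in cols_b] for ca in cols_a]

# Toy embeddings (assumed): n = 4 nodes, d = 2 channels, two identical views.
view_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
view_b = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
sim = channel_similarity(view_a, view_b)
print(len(sim), len(sim[0]))  # the matrix is d x d, independent of n
```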

Mining Latent Classes for Few-shot Segmentation

Mar 29, 2021

Abstract: Few-shot segmentation (FSS) aims to segment unseen classes given only a few annotated samples. Existing methods suffer from the problem of feature undermining, i.e., potential novel classes are treated as background during the training phase. Our method aims to alleviate this problem and enhance the feature embedding of latent novel classes. In our work, we propose a novel joint-training framework. On top of conventional episodic training on support-query pairs, we add a mining branch that exploits latent novel classes via transferable sub-clusters, and a new rectification technique on both background and foreground categories to enforce more stable prototypes. In addition, our transferable sub-clusters can leverage extra unlabeled data for further feature enhancement. Extensive experiments on two FSS benchmarks demonstrate that our method outperforms the previous state of the art by a large margin of 3.7% mIOU on PASCAL-5i and 7.0% mIOU on COCO-20i, while using 74% fewer parameters and running inference 2.5x faster.
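For readers unfamiliar with the prototypes mentioned above, the sketch below shows a common baseline formulation in few-shot segmentation: a class prototype is the average of support features under the annotation mask, and each query pixel takes the label of the most similar prototype. This is a generic illustration with hypothetical function names and toy features; it does not include the paper's mining branch or rectification technique.

```python
import math

def masked_average_prototype(features, mask):
    """Average the per-pixel feature vectors where mask == 1."""
    selected = [f for f, m in zip(features, mask) if m]
    if not selected:
        raise ValueError("mask selects no pixels")
    dim = len(selected[0])
    return [sum(f[d] for f in selected) / len(selected) for d in range(dim)]

def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def segment_query(query_features, fg_proto, bg_proto):
    """Label a query pixel 1 if it is closer to the foreground prototype, else 0."""
    return [1 if cosine(f, fg_proto) > cosine(f, bg_proto) else 0 for f in query_features]

# Toy support image (assumed): the first two pixels belong to the annotated class.
support = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
mask = [1, 1, 0, 0]
fg = masked_average_prototype(support, mask)
bg = masked_average_prototype(support, [1 - m for m in mask])
print(segment_query([[0.8, 0.2], [0.2, 0.8]], fg, bg))
```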

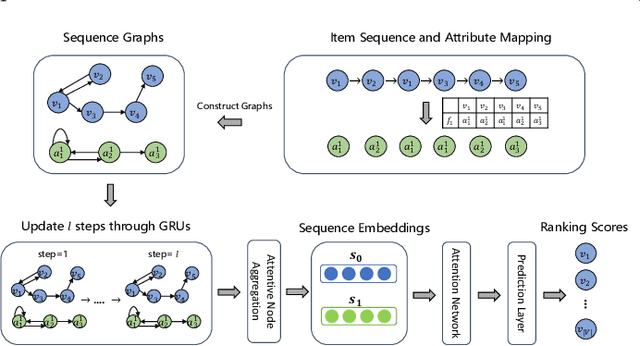

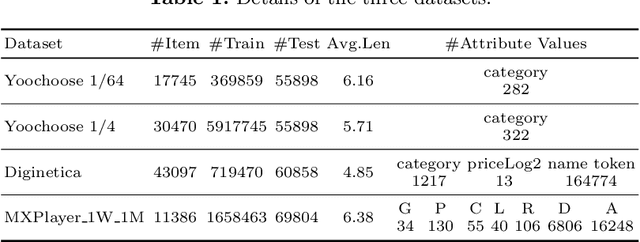

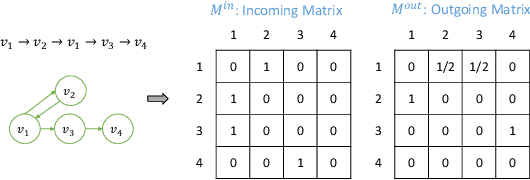

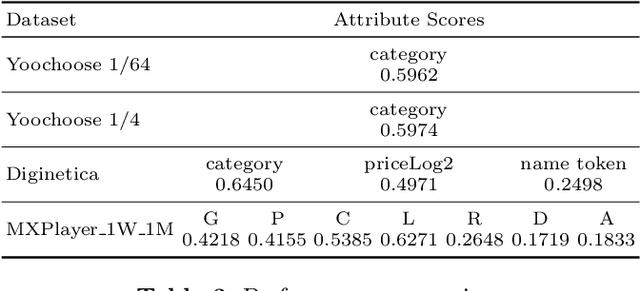

Improving Sequential Recommendation with Attribute-augmented Graph Neural Networks

Mar 10, 2021

Abstract: Many practical recommender systems provide item recommendations for different users solely by mining user-item interactions, while ignoring the rich attribute information of the items that users interact with. In this paper, we propose an attribute-augmented graph neural network model named Murzim. Murzim takes as input the graphs constructed from the user-item interaction sequences and the corresponding item attribute sequences. By combining GNNs with node aggregation and an attention network, Murzim can capture user preference patterns, generate embeddings for user-item interaction sequences, and then generate recommendations through next-item prediction. We conduct extensive experiments on multiple datasets. Experimental results show that Murzim outperforms several state-of-the-art methods in terms of recall and MRR, which illustrates that Murzim can make use of item attribute information to produce better recommendations. At present, Murzim has been deployed in MX Player, one of India's largest streaming platforms, and is recommending videos to tens of thousands of users.
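MRR (mean reciprocal rank), one of the metrics cited above, is a natural fit for next-item prediction: each test sequence has a single target item, and the score is the reciprocal of the position at which that item appears in the ranked recommendations. A minimal sketch follows, assuming a target missing from the list contributes zero; the paper's exact cutoff and protocol are not given in the abstract.

```python
def mean_reciprocal_rank(ranked_lists, targets):
    """Average of 1/rank of each target in its ranked list (0 if absent)."""
    if not targets:
        return 0.0
    total = 0.0
    for ranked, target in zip(ranked_lists, targets):
        if target in ranked:
            total += 1.0 / (ranked.index(target) + 1)  # ranks are 1-based
    return total / len(targets)

# Toy example (assumed): targets found at rank 1, at rank 2, and not at all.
ranked_lists = [["a", "b", "c"], ["b", "a", "c"], ["c", "b", "a"]]
targets = ["a", "a", "z"]
print(mean_reciprocal_rank(ranked_lists, targets))
```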

Few-Shot Object Detection with Attention-RPN and Multi-Relation Detector

Aug 06, 2019

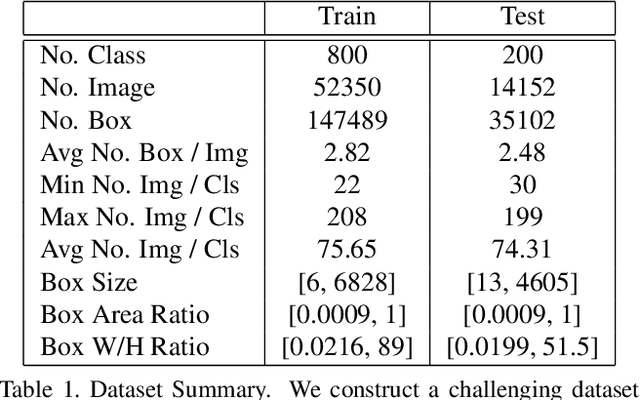

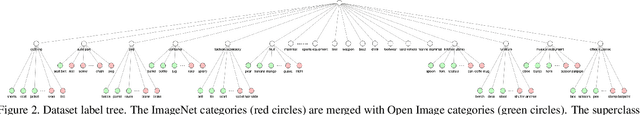

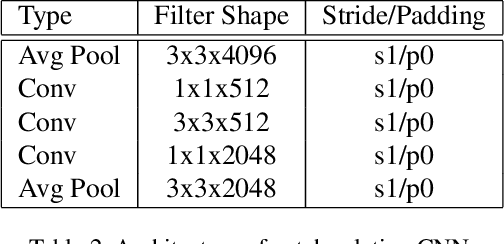

Abstract: Conventional methods for object detection usually require a substantial amount of training data, and preparing such high-quality training data is labor intensive. In this paper, we propose few-shot object detection, which aims to detect objects of unseen classes with only a few training examples. Central to our method are the Attention-RPN and the multi-relation module, which fully exploit the similarity between the few-shot training examples and the test set to detect novel objects while suppressing false detections in the background. To train our network, we have prepared a new dataset that contains 1000 categories of various objects with high-quality annotations. To the best of our knowledge, this is also the first dataset specifically designed for few-shot object detection. Once our network is trained, we can apply object detection to unseen classes without further training or fine-tuning. This is also the major advantage of few-shot object detection. Our method is general and has a wide range of applications. We demonstrate the effectiveness of our method quantitatively and qualitatively on different datasets. The dataset link is: https://github.com/fanq15/Few-Shot-Object-Detection-Dataset.
