Tomasz Piotr Kucner

Efficient Human-Aware Task Allocation for Multi-Robot Systems in Shared Environments

Aug 27, 2025

Abstract:Multi-robot systems are increasingly deployed in applications, such as intralogistics or autonomous delivery, where multiple robots collaborate to complete tasks efficiently. One of the key factors enabling their efficient cooperation is Multi-Robot Task Allocation (MRTA). Algorithms solving this problem optimize task distribution among robots to minimize the overall execution time. In shared environments, apart from the relative distance between the robots and the tasks, the execution time is also significantly impacted by the delay caused by navigating around moving people. However, most existing MRTA approaches are dynamics-agnostic, relying on static maps and neglecting human motion patterns, leading to inefficiencies and delays. In this paper, we introduce \acrfull{method name}. This method leverages Maps of Dynamics (MoDs), spatio-temporal queryable models designed to capture historical human movement patterns, to estimate the impact of humans on the task execution time during deployment. \acrshort{method name} utilizes a stochastic cost function that includes MoDs. Experimental results show that integrating MoDs enhances task allocation performance, resulting in reduced mission completion times by up to $26\%$ compared to the dynamics-agnostic method and up to $19\%$ compared to the baseline. This work underscores the importance of considering human dynamics in MRTA within shared environments and presents an efficient framework for deploying multi-robot systems in environments populated by humans.
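The idea of adding a human-induced delay to the allocation cost can be illustrated with a minimal sketch. This is not the paper's method: the function name `allocate`, the two cost tables, and the brute-force search over assignments are all assumptions made for illustration, and the expected delays are hard-coded numbers standing in for values that would be queried from a map of dynamics. The sketch assumes there are at least as many tasks as robots and that each robot takes exactly one task.

```python
from itertools import permutations


def allocate(travel_time, expected_delay):
    """Return (assignment, makespan) minimising the slowest robot's time.

    travel_time[r][t]: nominal time for robot r to complete task t,
        as estimated from a static map (hypothetical values).
    expected_delay[r][t]: extra time expected from navigating around
        people on that route (hypothetical stand-in for a MoD query).
    assignment[r] is the index of the task given to robot r.
    """
    n_robots = len(travel_time)
    n_tasks = len(travel_time[0])
    best, best_cost = None, float("inf")
    # Brute force over all one-task-per-robot assignments (small cases only).
    for perm in permutations(range(n_tasks), n_robots):
        cost = max(
            travel_time[r][t] + expected_delay[r][t]
            for r, t in enumerate(perm)
        )
        if cost < best_cost:
            best, best_cost = perm, cost
    return best, best_cost


travel = [[10, 20], [20, 10]]
no_people = [[0, 0], [0, 0]]
busy_corridors = [[15, 0], [0, 15]]

print(allocate(travel, no_people))       # dynamics-agnostic choice
print(allocate(travel, busy_corridors))  # choice once delays are included
```

In this toy case the dynamics-agnostic cost sends each robot to its nearest task, while the delay-aware cost swaps the assignment because the short routes are the crowded ones.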

A Lightweight Crowd Model for Robot Social Navigation

Aug 27, 2025

Abstract:Robots operating in human-populated environments must navigate safely and efficiently while minimizing social disruption. Achieving this requires estimating crowd movement to avoid congested areas in real time. Traditional microscopic models struggle to scale in dense crowds due to high computational cost, while existing macroscopic crowd prediction models tend to be either overly simplistic or computationally intensive. In this work, we propose a lightweight, real-time macroscopic crowd prediction model tailored for human motion, which balances prediction accuracy and computational efficiency. Our approach simplifies both spatial and temporal processing based on the inherent characteristics of pedestrian flow, enabling robust generalization without the overhead of complex architectures. We demonstrate a 3.6 times reduction in inference time, while improving prediction accuracy by 3.1%. Integrated into a socially aware planning framework, the model enables efficient and socially compliant robot navigation in dynamic environments. This work highlights that efficient human crowd modeling enables robots to navigate dense environments without costly computations.

Discrete Contrastive Learning for Diffusion Policies in Autonomous Driving

Mar 07, 2025

Abstract:Learning to perform accurate and rich simulations of human driving behaviors from data for autonomous vehicle testing remains challenging due to the high diversity and variance of human driving styles. We address this challenge by proposing a novel approach that leverages contrastive learning to extract a dictionary of driving styles from pre-existing human driving data. We discretize these styles with quantization, and the styles are used to learn a conditional diffusion policy for simulating human drivers. Our empirical evaluation confirms that the behaviors generated by our approach are both safer and more human-like than those of the machine-learning-based baseline methods. We believe this has the potential to enable higher realism and more effective techniques for evaluating and improving the performance of autonomous vehicles.

Context-aware Multi-task Learning for Pedestrian Intent and Trajectory Prediction

Jul 24, 2024

Abstract:The advancement of socially-aware autonomous vehicles hinges on precise modeling of human behavior. Within this broad paradigm, the specific challenge lies in accurately predicting pedestrians' trajectories and intentions. Traditional methodologies have leaned heavily on historical trajectory data, frequently overlooking vital contextual cues such as pedestrian-specific traits and environmental factors. Furthermore, there's a notable knowledge gap as trajectory and intention prediction have largely been approached as separate problems, despite their mutual dependence. To bridge this gap, we introduce PTINet (Pedestrian Trajectory and Intention Prediction Network), which jointly learns the trajectory and intention prediction by combining past trajectory observations, local contextual features (individual pedestrian behaviors), and global features (signs, markings, etc.). The efficacy of our approach is evaluated on widely used public datasets: JAAD and PIE, where it has demonstrated superior performance over existing state-of-the-art models in trajectory and intention prediction. The results from our experiments and ablation studies robustly validate PTINet's effectiveness in jointly exploring intention and trajectory prediction for pedestrian behaviour modelling. The experimental evaluation indicates the advantage of using global and local contextual features for pedestrian trajectory and intention prediction. The effectiveness of PTINet in predicting pedestrian behavior paves the way for the development of automated systems capable of seamlessly interacting with pedestrians in urban settings.

Learning State-Space Models for Mapping Spatial Motion Patterns

Sep 01, 2023

Abstract:Mapping the surrounding environment is essential for the successful operation of autonomous robots. While extensive research has focused on mapping geometric structures and static objects, the environment is also influenced by the movement of dynamic objects. Incorporating information about spatial motion patterns can allow mobile robots to navigate and operate successfully in populated areas. In this paper, we propose a deep state-space model that learns the map representations of spatial motion patterns and how they change over time at a certain place. To evaluate our methods, we use two different datasets: one generated dataset with specific motion patterns and another with real-world pedestrian data. We test the performance of our model by evaluating its learning ability, mapping quality, and application to downstream tasks. The results demonstrate that our model can effectively learn the corresponding motion pattern, and has the potential to be applied to robotic application tasks.

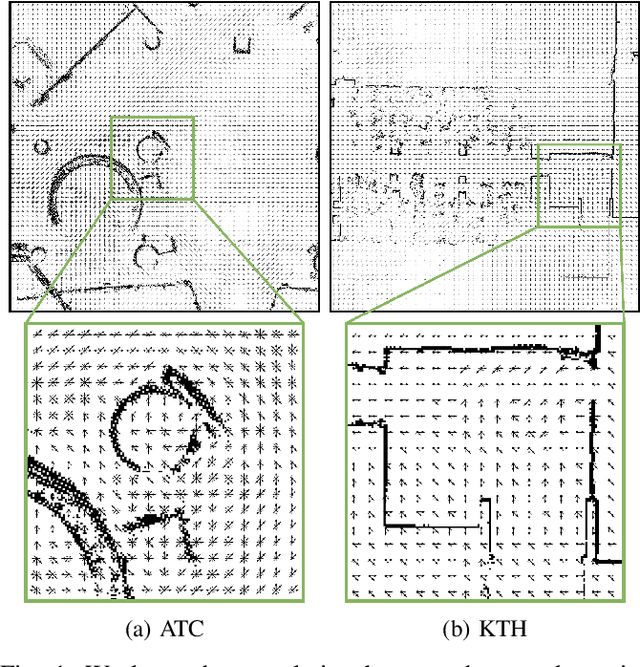

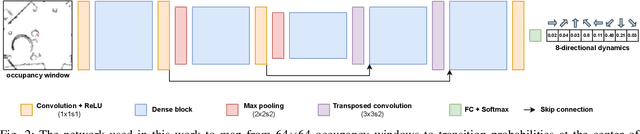

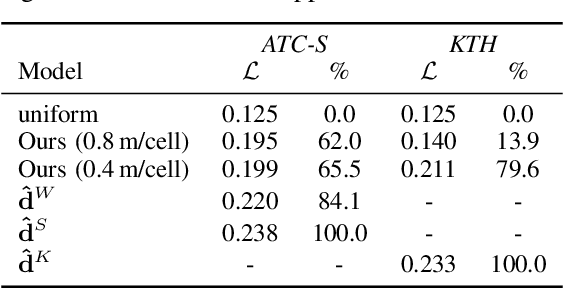

Generating people flow from architecture of real unseen environments

Aug 23, 2022

Abstract:Mapping people dynamics is a crucial skill, because it enables robots to coexist in human-inhabited environments. However, learning a model of people dynamics is a time-consuming process which requires observation of a large number of people moving in an environment. Moreover, approaches for mapping dynamics are unable to transfer the learned models across environments: each model is only able to describe the dynamics of the environment it has been built in. However, the effect of architectural geometry on people movement can be used to estimate their dynamics, and recent work has looked into learning maps of dynamics from geometry. So far, however, these methods have evaluated their performance only on small-size synthetic data, leaving the actual ability of these approaches to generalize to real conditions unexplored. In this work we propose a novel approach to learn people dynamics from geometry, where a model is trained and evaluated on real human trajectories in large-scale environments. We then show the ability of our method to generalize to unseen environments, which is unprecedented for maps of dynamics.

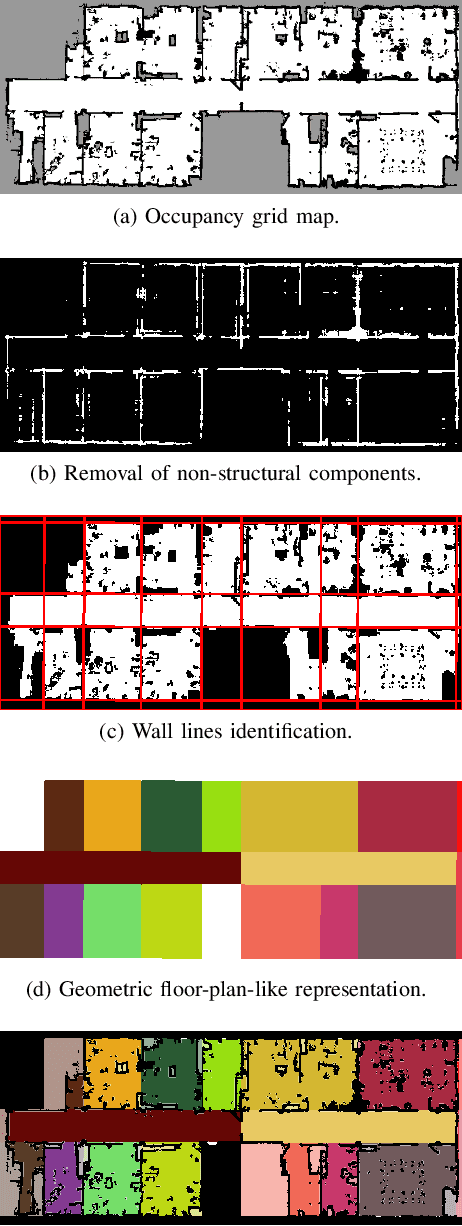

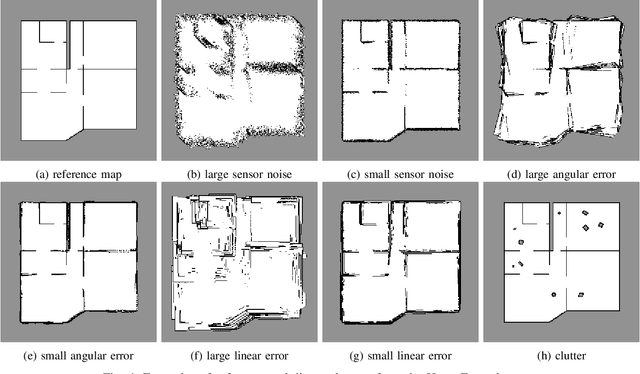

Robust Structure Identification and Room Segmentation of Cluttered Indoor Environments from Occupancy Grid Maps

Mar 07, 2022

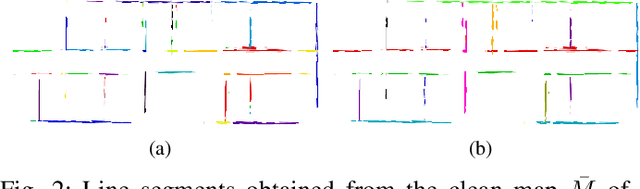

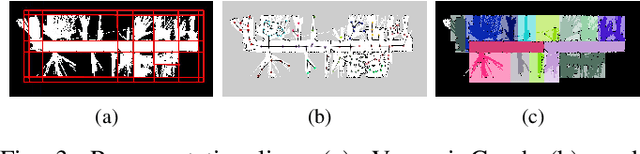

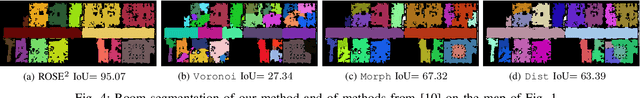

Abstract:Identifying the environment's structure, i.e., detecting core components such as rooms and walls, can facilitate several tasks fundamental for the successful operation of indoor autonomous mobile robots, including semantic environment understanding. These robots often rely on 2D occupancy maps for core tasks such as localisation and motion and task planning. However, reliable identification of structure and room segmentation from 2D occupancy maps is still an open problem due to clutter (e.g., furniture and movable objects), occlusions, and partial coverage. We propose a method for the RObust StructurE identification and ROom SEgmentation (ROSE^2) of 2D occupancy maps, which may be cluttered and incomplete. ROSE^2 identifies the main directions of walls and is resilient to clutter and partial observations, allowing it to extract a clean, abstract geometrical floor-plan-like description of the environment, which is used to segment, i.e., to identify rooms in, the original occupancy grid map. ROSE^2 is tested on several real-world publicly available cluttered maps obtained in different conditions. The results show how it can robustly identify the environment structure in 2D occupancy maps suffering from clutter and partial observations, while significantly improving room segmentation accuracy. Thanks to the combination of clutter removal and robust room segmentation, ROSE^2 consistently achieves higher performance than the state-of-the-art methods against which it is compared.

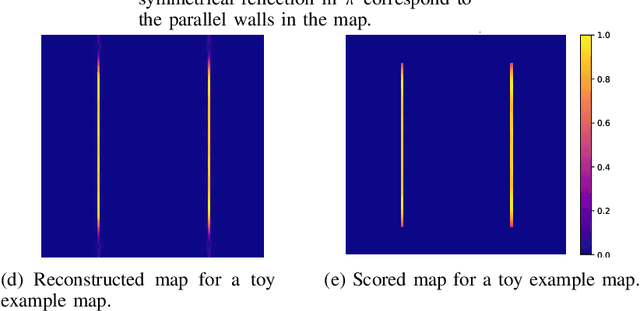

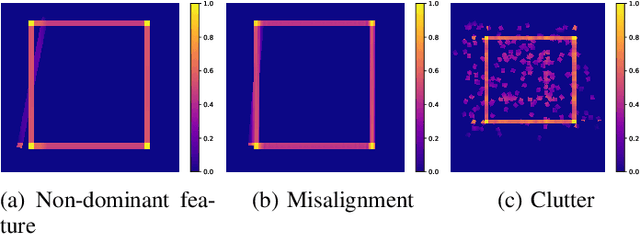

Robust Frequency-Based Structure Extraction

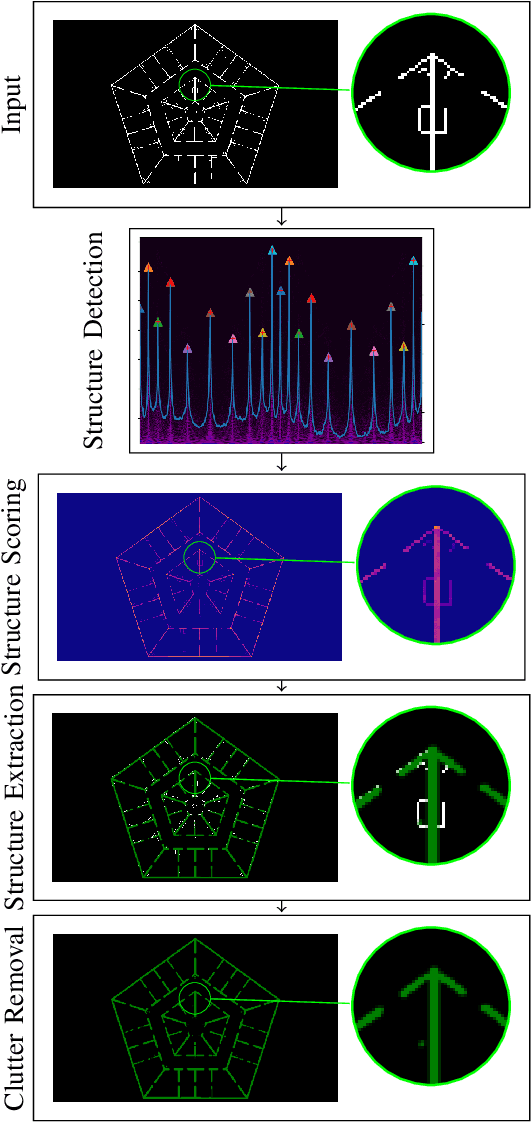

Apr 19, 2020

Abstract:We propose a method for measuring how well each point in an indoor 2D robot map agrees with the underlying structure that governs the construction of the environment. This structure scoring has applications for, e.g., easier robot deployment and cleaning of maps. In particular, we demonstrate its effectiveness for removing clutter and artifacts from real-world maps, which in turn is an enabler for other map processing components, e.g., room segmentation. Starting from the Fourier transform, we detect peaks in the unfolded frequency spectrum that correspond to a set of dominant directions. This allows us to reconstruct a nominal reference map and score the input map through its correspondence with this reference, without requiring access to a ground-truth map.

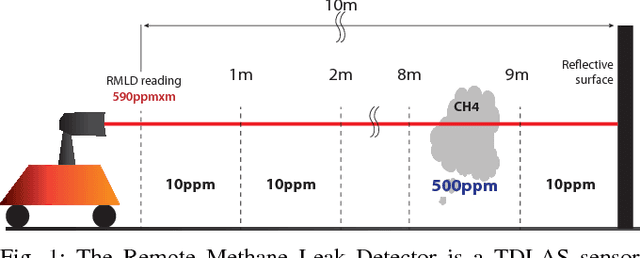

A Next-Best-Smell Approach for Remote Gas Detection with a Mobile Robot

Jan 21, 2018

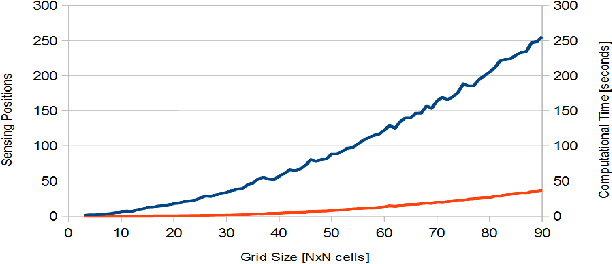

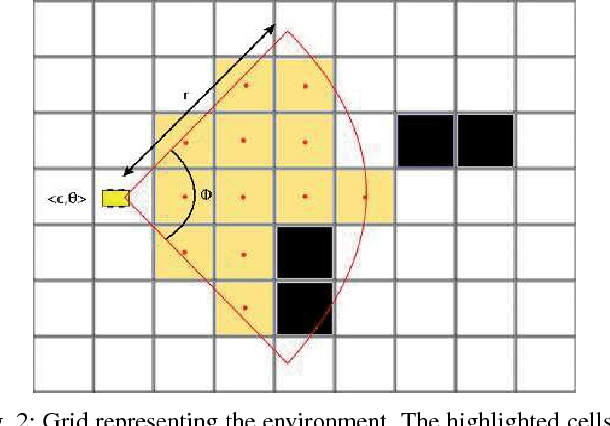

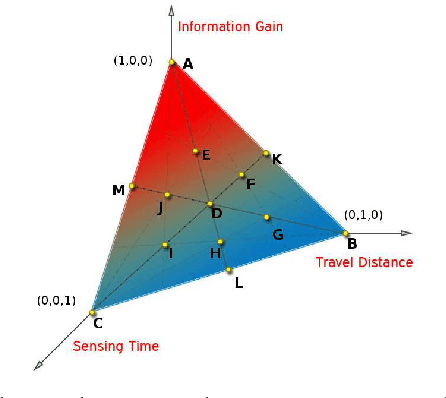

Abstract:The problem of gas detection is relevant to many real-world applications, such as leak detection in industrial settings and landfill monitoring. Using mobile robots for gas detection has several advantages and can reduce danger for humans. In our work, we address the problem of planning a path for a mobile robotic platform equipped with a remote gas sensor, which minimizes the time to detect all gas sources in a given environment. We cast this problem as a coverage planning problem by defining a basic sensing operation -- a scan with the remote gas sensor -- as the field of "view" of the sensor. Given the computing effort required by previously proposed offline approaches, in this paper we suggest an online coverage algorithm, called Next-Best-Smell, adapted from the Next-Best-View class of exploration algorithms. Our algorithm evaluates candidate locations with a global utility function, which combines utility values for travel distance, information gain, and sensing time, using Multi-Criteria Decision Making. In our experiments, conducted both in simulation and with a real robot, we found the performance of the Next-Best-Smell approach to be comparable with that of the state-of-the-art offline algorithm, at much lower computational cost.
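How a global utility might rank candidate locations can be shown with a rough sketch. It uses a plain weighted sum of normalised criteria as a simple stand-in, which is not necessarily the Multi-Criteria Decision Making aggregation used in the paper. The function name `best_candidate`, the weights, and the candidate values are all hypothetical.

```python
def best_candidate(candidates, weights=(0.2, 0.6, 0.2)):
    """Pick the candidate pose with the highest combined utility.

    candidates: dict mapping a name to a tuple
        (travel_distance, information_gain, sensing_time).
    weights: hypothetical importance of distance, gain, and sensing time.
    Returns (best_name, scores) where scores maps each name to its utility.
    """
    def normalise(values, higher_is_better):
        # Rescale to [0, 1] so that 1 is always the preferred end.
        lo, hi = min(values), max(values)
        if hi == lo:
            return [1.0] * len(values)
        if higher_is_better:
            return [(v - lo) / (hi - lo) for v in values]
        return [(hi - v) / (hi - lo) for v in values]

    names = list(candidates)
    dist = normalise([candidates[n][0] for n in names], False)
    gain = normalise([candidates[n][1] for n in names], True)
    time = normalise([candidates[n][2] for n in names], False)
    scores = {
        n: weights[0] * d + weights[1] * g + weights[2] * t
        for n, d, g, t in zip(names, dist, gain, time)
    }
    return max(scores, key=scores.get), scores


poses = {
    "A": (2.0, 10.0, 5.0),   # close, but little new area to sense
    "B": (8.0, 40.0, 5.0),   # far, but high information gain
    "C": (5.0, 15.0, 9.0),   # middling distance, slow scan
}
best, scores = best_candidate(poses)
print(best, scores)
```

With these weights the high-gain pose wins despite being the farthest. Shifting weight toward travel distance would favour the nearby pose instead, which is the trade-off the utility function is meant to expose.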
