Tianshui Chen

RestoreFormer++: Towards Real-World Blind Face Restoration from Undegraded Key-Value Pairs

Aug 14, 2023

Abstract:Blind face restoration aims at recovering high-quality face images from those with unknown degradations. Current algorithms mainly introduce priors to complement high-quality details and achieve impressive progress. However, most of these algorithms ignore abundant contextual information in the face and its interplay with the priors, leading to sub-optimal performance. Moreover, they pay less attention to the gap between the synthetic and real-world scenarios, limiting the robustness and generalization to real-world applications. In this work, we propose RestoreFormer++, which on the one hand introduces fully-spatial attention mechanisms to model the contextual information and the interplay with the priors, and on the other hand, explores an extending degrading model to help generate more realistic degraded face images to alleviate the synthetic-to-real-world gap. Compared with current algorithms, RestoreFormer++ has several crucial benefits. First, instead of using a multi-head self-attention mechanism like the traditional visual transformer, we introduce multi-head cross-attention over multi-scale features to fully explore spatial interactions between corrupted information and high-quality priors. In this way, it can facilitate RestoreFormer++ to restore face images with higher realness and fidelity. Second, in contrast to the recognition-oriented dictionary, we learn a reconstruction-oriented dictionary as priors, which contains more diverse high-quality facial details and better accords with the restoration target. Third, we introduce an extending degrading model that contains more realistic degraded scenarios for training data synthesizing, and thus helps to enhance the robustness and generalization of our RestoreFormer++ model. Extensive experiments show that RestoreFormer++ outperforms state-of-the-art algorithms on both synthetic and real-world datasets.
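The cross-attention idea in the abstract above can be illustrated with a minimal sketch. This is not the RestoreFormer++ implementation: it uses a single head, no learned projections, and no multi-scale features, and the names `cross_attention`, `degraded`, `prior_keys`, and `prior_values` are hypothetical. It only shows how queries taken from degraded features can pull information from high-quality key-value pairs.

```python
import math

def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Single-head scaled dot-product cross-attention (toy version).

    queries: one feature vector per location of the degraded input.
    keys/values: entries of a hypothetical high-quality prior dictionary.
    """
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum of prior values: the "restored" feature for this location.
        fused = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        out.append(fused)
    return out

# Toy data (assumed): two degraded locations, two prior entries.
degraded = [[1.0, 0.0], [0.0, 1.0]]
prior_keys = [[10.0, 0.0], [0.0, 10.0]]
prior_values = [[1.0, 2.0], [3.0, 4.0]]
restored = cross_attention(degraded, prior_keys, prior_values)
```

In this toy setting each degraded location is matched almost entirely to the prior entry whose key it resembles, so `restored` stays close to the corresponding rows of `prior_values`. In self-attention, by contrast, keys and values would come from the degraded features themselves.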

Perception and Semantic Aware Regularization for Sequential Confidence Calibration

May 31, 2023

Abstract:Deep sequence recognition (DSR) models receive increasing attention due to their strong performance across various applications. Most DSR models use merely the target sequences as supervision without considering other related sequences, leading to over-confidence in their predictions. DSR models trained with label smoothing regularize labels by equally and independently smoothing each token, reallocating a small value to other tokens to mitigate over-confidence. However, they do not consider token/sequence correlations that may provide more effective information to regularize training, and thus lead to sub-optimal performance. In this work, we find that tokens/sequences with high perceptual and semantic correlations with the target ones contain more correlated and effective information and thus facilitate more effective regularization. To this end, we propose a Perception and Semantic aware Sequence Regularization framework, which explores perceptually and semantically correlated tokens/sequences as regularization. Specifically, we introduce a semantic context-free recognition model and a language model to acquire similar sequences with high perceptual similarity and semantic correlation, respectively. Moreover, the degree of over-confidence varies across samples according to their difficulty. Thus, we further design an adaptive calibration intensity module to compute a difficulty score for each sample to obtain finer-grained regularization. Extensive experiments on canonical sequence recognition tasks, including scene text and speech recognition, demonstrate that our method sets new state-of-the-art results. Code is available at https://github.com/husterpzh/PSSR.
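For context, the baseline that the abstract above contrasts against, uniform label smoothing, can be sketched as follows. This is a generic illustration for a single token, not the proposed framework; the function names and the convention of spreading `epsilon` evenly over the non-target classes are assumptions made for this example.

```python
import math

def smooth_labels(target_index, num_classes, epsilon=0.1):
    # Assumed convention: the target keeps 1 - epsilon, and epsilon is
    # split equally and independently over all other classes.
    off_value = epsilon / (num_classes - 1)
    dist = [off_value] * num_classes
    dist[target_index] = 1.0 - epsilon
    return dist

def cross_entropy(target_dist, predicted_probs):
    # Cross-entropy between a soft target and predicted probabilities.
    return -sum(t * math.log(p) for t, p in zip(target_dist, predicted_probs) if t > 0)

smoothed = smooth_labels(target_index=2, num_classes=5, epsilon=0.1)

# Two hypothetical predictions for the same token.
confident = [0.001, 0.001, 0.996, 0.001, 0.001]
moderate = [0.025, 0.025, 0.9, 0.025, 0.025]

loss_confident = cross_entropy(smoothed, confident)
loss_moderate = cross_entropy(smoothed, moderate)
```

Under the smoothed target, the extremely confident prediction incurs the higher loss, which is how smoothing discourages over-confidence. Because every non-target token receives the same mass, the scheme ignores which tokens are actually similar to the target, which is the limitation the abstract points out.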

Open-World Pose Transfer via Sequential Test-Time Adaption

Mar 20, 2023

Abstract:Pose transfer, which aims to transfer a given person into a specified posture, has recently attracted considerable attention. A typical pose transfer framework usually employs representative datasets to train a discriminative model, whose assumptions are often violated by out-of-distribution (OOD) instances. Recently, test-time adaption (TTA) offers a feasible solution for OOD data by using a pre-trained model that learns essential features with self-supervision. However, those methods implicitly assume that all test distributions have a unified signal that can be learned directly. In open-world conditions, the pose transfer task raises various independent signals: OOD appearance and skeleton, which need to be extracted and distributed in speciality. To address this point, we develop a SEquential Test-time Adaption (SETA). In the test-time phase, SETA extracts and distributes external appearance texture by augmenting OOD data for self-supervised training. To make non-Euclidean similarity among different postures explicit, SETA uses the image representations derived from a person re-identification (Re-ID) model for similarity computation. By addressing implicit posture representation sequentially at test time, SETA greatly improves the generalization performance of current pose transfer models. In our experiment, we first show that pose transfer can be applied to open-world applications, including TikTok reenactment and celebrity motion synthesis.

OccluMix: Towards De-Occlusion Virtual Try-on by Semantically-Guided Mixup

Jan 03, 2023

Abstract:Image virtual try-on aims at replacing the clothes on a person image with a garment image (in-shop clothes), and has attracted increasing attention from the multimedia and computer vision communities. Prior methods successfully preserve the character of clothing images; however, occlusion remains a pernicious effect for realistic virtual try-on. In this work, we first present a comprehensive analysis of the occlusions and categorize them into two aspects: i) Inherent-Occlusion: the ghost of the former cloth still exists in the try-on image; ii) Acquired-Occlusion: the target cloth warps onto an unreasonable body part. Based on the in-depth analysis, we find that the occlusions can be simulated by a novel semantically-guided mixup module, which can generate semantic-specific occluded images that work together with the try-on images to facilitate training a de-occlusion try-on (DOC-VTON) framework. Specifically, DOC-VTON first conducts a sharpened semantic parsing on the try-on person. Aided by semantic guidance and a pose prior, textures of varying complexity are selectively blended with human parts in a copy-and-paste manner. Then, the Generative Module (GM) takes charge of synthesizing the final try-on image while jointly learning to de-occlude. In comparison to the state-of-the-art methods, DOC-VTON achieves better perceptual quality by reducing occlusion effects.

Category-Adaptive Label Discovery and Noise Rejection for Multi-label Image Recognition with Partial Positive Labels

Nov 15, 2022

Abstract:As a promising solution for reducing annotation cost, training multi-label models with partial positive labels (MLR-PPL), in which only a few positive labels are known while the others are missing, attracts increasing attention. Due to the absence of any negative labels, previous works regard unknown labels as negative and adopt traditional MLR algorithms. To reject noisy labels, recent works regard large-loss samples as noise but ignore the semantic correlation among different multi-label images. In this work, we propose to explore semantic correlation among different images to facilitate the MLR-PPL task. Specifically, we design a unified framework, Category-Adaptive Label Discovery and Noise Rejection, that discovers unknown labels and rejects noisy labels for each category in an adaptive manner. The framework consists of two complementary modules: (1) the Category-Adaptive Label Discovery module first measures the semantic similarity between positive samples and then complements unknown labels with high similarities; (2) the Category-Adaptive Noise Rejection module first computes the sample weights based on semantic similarities from different samples and then discards noisy labels with low weights. Besides, we propose a novel category-adaptive threshold updating scheme that adaptively adjusts the threshold to avoid the time-consuming manual tuning process. Extensive experiments demonstrate that our proposed method consistently outperforms current leading algorithms.
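The label discovery step described above can be pictured with a small sketch. The abstract does not specify the similarity measure or the threshold rule, so cosine similarity, the fixed `threshold`, and every name below are assumptions for illustration rather than the authors' method.

```python
import math

def cosine(a, b):
    # Cosine similarity between two feature vectors.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def discover_labels(known_positive_feats, unknown_feats, threshold):
    """Return indices of unknown samples judged positive for one category.

    A sample is marked pseudo-positive when its best similarity to any
    known positive sample of the category reaches the threshold.
    """
    discovered = []
    for i, feat in enumerate(unknown_feats):
        best = max(cosine(feat, pos) for pos in known_positive_feats)
        if best >= threshold:
            discovered.append(i)
    return discovered

# Hypothetical category-specific features for a single category.
known_positive = [[1.0, 0.1], [0.9, 0.2]]
unknown = [[0.95, 0.15], [0.1, 1.0]]
pseudo_positive = discover_labels(known_positive, unknown, threshold=0.9)
```

Here only the first unknown sample is close enough to the known positives to receive a pseudo label. In the framework described by the abstract, the threshold is adjusted per category during training instead of being fixed by hand as it is in this sketch.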

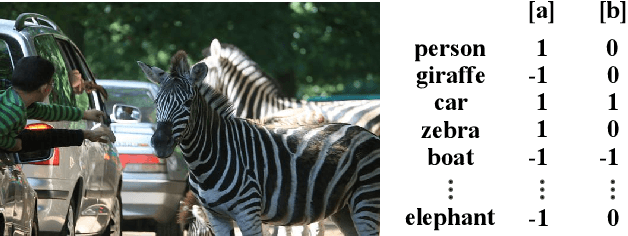

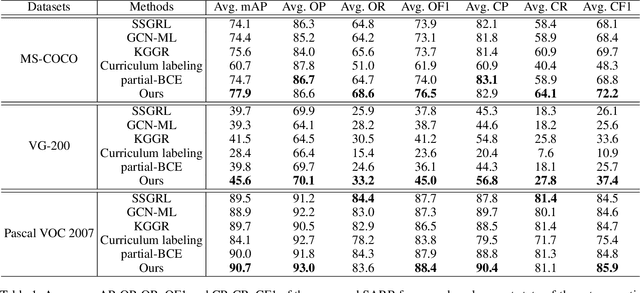

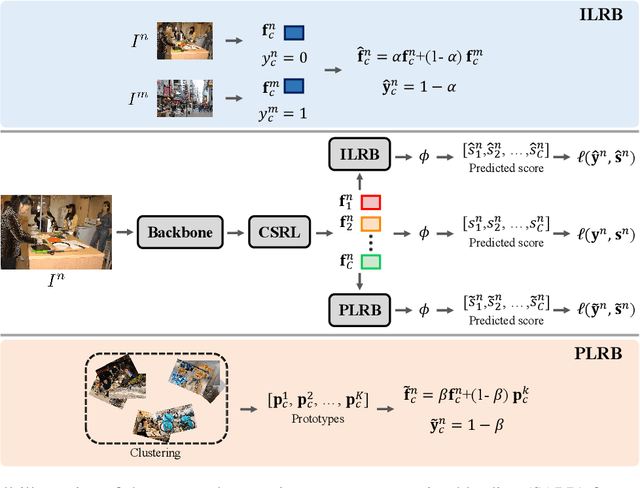

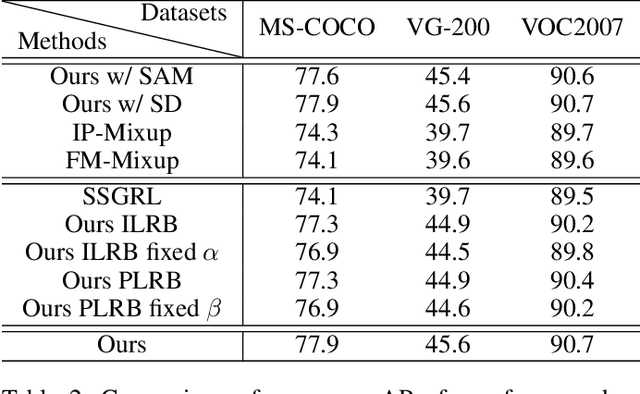

Semantic-Aware Representation Blending for Multi-Label Image Recognition with Partial Labels

May 26, 2022

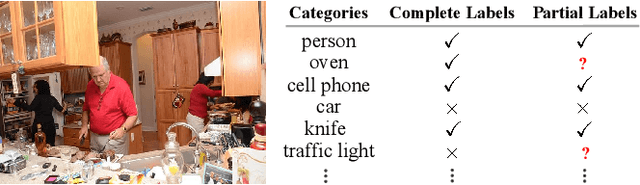

Abstract:Despite achieving impressive progress, current multi-label image recognition (MLR) algorithms heavily depend on large-scale datasets with complete labels, making collecting large-scale datasets extremely time-consuming and labor-intensive. Training the multi-label image recognition models with partial labels (MLR-PL) is an alternative way to address this issue, in which merely some labels are known while others are unknown for each image (see Figure 1). However, current MLR-PL algorithms mainly rely on pre-trained image classification or similarity models to generate pseudo labels for the unknown labels. Thus, they depend on a certain amount of data annotations and inevitably suffer from obvious performance drops, especially when the known label proportion is low. To address this dilemma, we propose a unified semantic-aware representation blending (SARB) framework that consists of two crucial modules to blend multi-granularity category-specific semantic representations across different images, transferring information of known labels to complement unknown labels. Extensive experiments on the MS-COCO, Visual Genome, and Pascal VOC 2007 datasets show that the proposed SARB consistently outperforms current state-of-the-art algorithms on all known label proportion settings. Concretely, it obtains average mAP improvements of 1.9%, 4.5%, and 1.0% on the three benchmark datasets compared with the second-best algorithm.

Heterogeneous Semantic Transfer for Multi-label Recognition with Partial Labels

May 23, 2022

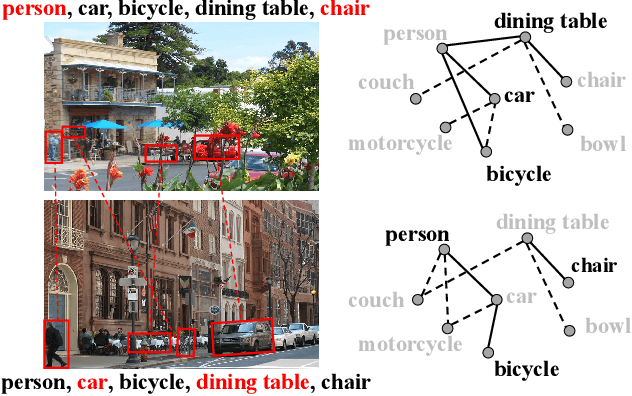

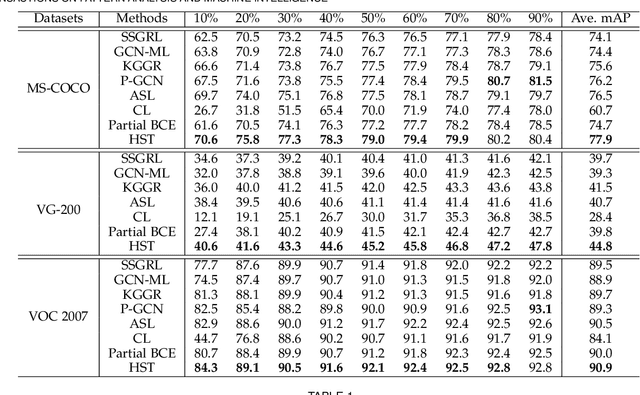

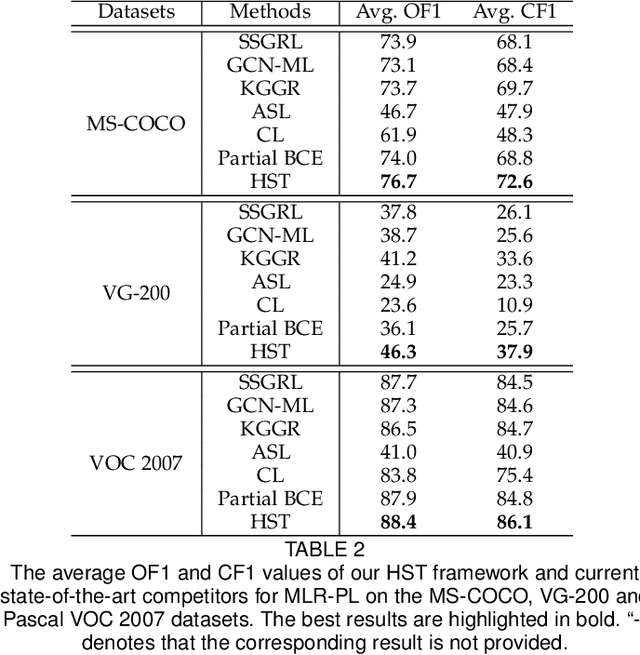

Abstract:Multi-label image recognition with partial labels (MLR-PL), in which some labels are known while others are unknown for each image, may greatly reduce the cost of annotation and thus facilitate large-scale MLR. We find that strong semantic correlations exist within each image and across different images, and these correlations can help transfer the knowledge possessed by the known labels to retrieve the unknown labels and thus improve the performance of the MLR-PL task (see Figure 1). In this work, we propose a novel heterogeneous semantic transfer (HST) framework that consists of two complementary transfer modules that explore both within-image and cross-image semantic correlations to transfer the knowledge possessed by known labels to generate pseudo labels for the unknown labels. Specifically, an intra-image semantic transfer (IST) module learns an image-specific label co-occurrence matrix for each image and maps the known labels to complement the unknown labels based on these matrices. Additionally, a cross-image transfer (CST) module learns category-specific feature-prototype similarities and then helps complement the unknown labels that have high degrees of similarity with the corresponding prototypes. Finally, both the known and generated pseudo labels are used to train MLR models. Extensive experiments conducted on the Microsoft COCO, Visual Genome, and Pascal VOC 2007 datasets show that the proposed HST framework achieves superior performance to that of current state-of-the-art algorithms. Specifically, it obtains mean average precision (mAP) improvements of 1.4%, 3.3%, and 0.4% on the three datasets over the results of the best-performing previously developed algorithm.
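The intra-image transfer idea above, mapping known labels to unknown ones through a label co-occurrence matrix, can be sketched as follows. In the abstract the matrix is learned per image; here it is a hand-written toy matrix, and the category names, the max-over-positives rule, and the `threshold` are all assumptions made for illustration.

```python
def transfer_labels(labels, cooccurrence, threshold=0.7):
    """Complete unknown labels from known positives.

    labels: 1 = known positive, -1 = known negative, 0 = unknown.
    cooccurrence[i][j]: assumed score that category j is present
    given that category i is present.
    """
    completed = list(labels)
    positives = [i for i, y in enumerate(labels) if y == 1]
    for j, y in enumerate(labels):
        if y != 0:
            continue  # known labels are never overwritten
        score = max((cooccurrence[i][j] for i in positives), default=0.0)
        if score >= threshold:
            completed[j] = 1  # pseudo positive label
    return completed

# Hypothetical categories and co-occurrence scores.
categories = ["person", "surfboard", "sea", "oven"]
cooccurrence = [
    [1.0, 0.2, 0.3, 0.1],
    [0.9, 1.0, 0.8, 0.0],
    [0.4, 0.3, 1.0, 0.0],
    [0.5, 0.0, 0.0, 1.0],
]
labels = [0, 1, 0, -1]  # only "surfboard" is a known positive
completed = transfer_labels(labels, cooccurrence)
```

With "surfboard" known to be present, "person" and "sea" receive pseudo positive labels, while the known negative "oven" is left alone. The known labels and the generated pseudo labels would then be used together to train the recognition model, as the abstract describes.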

Exploring Negatives in Contrastive Learning for Unpaired Image-to-Image Translation

Apr 23, 2022

Abstract:Unpaired image-to-image translation aims to find a mapping between the source domain and the target domain. To alleviate the lack of supervised labels for the source images, cycle-consistency based methods have been proposed for image structure preservation by assuming a reversible relationship between unpaired images. However, this assumption only uses limited correspondence between image pairs. Recently, contrastive learning (CL) has been used to further investigate the image correspondence in unpaired image translation by using patch-based positive/negative learning. Patch-based contrastive routines obtain the positives by self-similarity computation and treat the remaining patches as negatives. This flexible learning paradigm obtains auxiliary contextualized information at a low cost. Since the negatives are very numerous, we investigate a natural question: are all negatives necessary for feature contrastive learning? Unlike previous CL approaches that use as many negatives as possible, in this paper, we study the negatives from an information-theoretic perspective and introduce a new negative Pruning technology for Unpaired image-to-image Translation (PUT) by sparsifying and ranking the patches. The proposed algorithm is efficient and flexible, and enables the model to stably learn essential information between corresponding patches. By putting quality over quantity, only a few negative patches are required to achieve better results. Lastly, we validate the superiority, stability, and versatility of our model through comparative experiments.
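The question of whether all negatives are needed can be made concrete with a toy InfoNCE computation. The abstract does not spell out the pruning criterion, so keeping the `keep` negatives most similar to the query is an assumption, as are the function names, the temperature value, and the toy patch features.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def info_nce(query, positive, negatives, temperature=0.07):
    # InfoNCE loss for one query patch, computed with a stable log-sum-exp.
    pos_logit = dot(query, positive) / temperature
    neg_logits = [dot(query, n) / temperature for n in negatives]
    m = max([pos_logit] + neg_logits)
    denom = math.exp(pos_logit - m) + sum(math.exp(l - m) for l in neg_logits)
    return -(pos_logit - m - math.log(denom))

def prune_negatives(query, negatives, keep):
    # Assumed criterion: rank negatives by similarity to the query, keep the top ones.
    ranked = sorted(negatives, key=lambda n: dot(query, n), reverse=True)
    return ranked[:keep]

# Hypothetical patch features.
query = [1.0, 0.0]
positive = [0.9, 0.1]
negatives = [[0.8, 0.2], [0.0, 1.0], [-1.0, 0.0], [0.1, 0.9]]

hard = prune_negatives(query, negatives, keep=2)
loss_all = info_nce(query, positive, negatives)
loss_pruned = info_nce(query, positive, hard)
```

In this example the loss computed from the two retained negatives is nearly identical to the loss over all four, because dissimilar negatives contribute almost nothing to the denominator. This is only an intuition for why a small set of negatives might suffice; it does not reproduce the information-theoretic analysis the abstract refers to.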

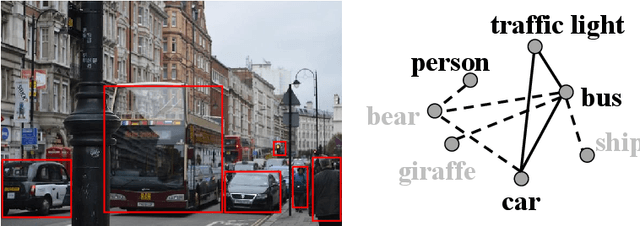

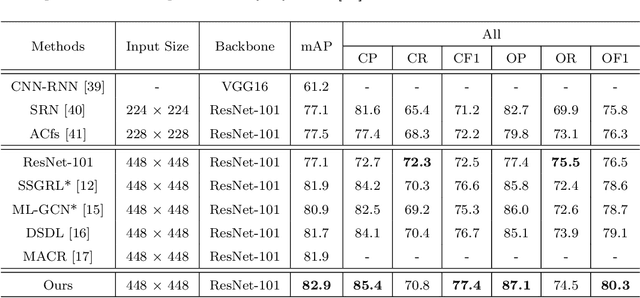

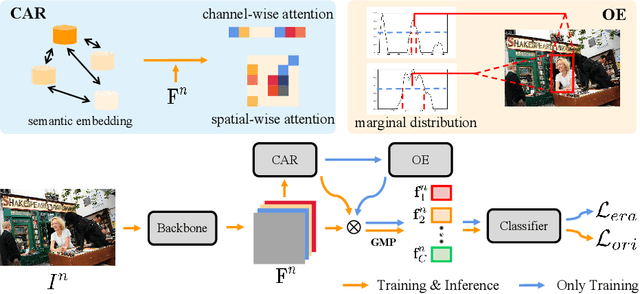

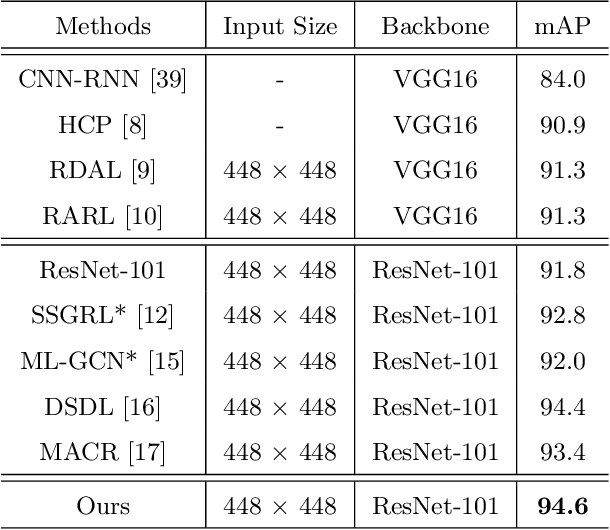

Semantic Representation and Dependency Learning for Multi-Label Image Recognition

Apr 08, 2022

Abstract:Recently, many multi-label image recognition (MLR) works have made significant progress by introducing pre-trained object detection models to generate many proposals or by utilizing statistical label co-occurrence to enhance the correlation among different categories. However, these works have some limitations: (1) the effectiveness of the network significantly depends on pre-trained object detection models that bring expensive and unaffordable computation; (2) the network performance degrades when images contain occasionally co-occurring objects, especially for the rare categories. To address these problems, we propose a novel and effective semantic representation and dependency learning (SRDL) framework to learn category-specific semantic representation for each category and capture semantic dependency among all categories. Specifically, we design a category-specific attentional regions (CAR) module to generate channel/spatial-wise attention matrices to guide the model to focus on semantic-aware regions. We also design an object erasing (OE) module to implicitly learn semantic dependency among categories by erasing semantic-aware regions to regularize the network training. Extensive experiments and comparisons on two popular MLR benchmark datasets (i.e., MS-COCO and Pascal VOC 2007) demonstrate the effectiveness of the proposed framework over current state-of-the-art algorithms.
