Tian Xia

Mitigating attribute amplification in counterfactual image generation

Mar 14, 2024

Abstract: Causal generative modelling is gaining interest in medical imaging due to its ability to answer interventional and counterfactual queries. Most work focuses on generating counterfactual images that look plausible, using auxiliary classifiers to enforce effectiveness of simulated interventions. We investigate pitfalls in this approach, discovering the issue of attribute amplification, where unrelated attributes are spuriously affected during interventions, leading to biases across protected characteristics and disease status. We show that attribute amplification is caused by the use of hard labels in the counterfactual training process and propose soft counterfactual fine-tuning to mitigate this issue. Our method substantially reduces the amplification effect while maintaining effectiveness of generated images, demonstrated on a large chest X-ray dataset. Our work makes an important advancement towards more faithful and unbiased causal modelling in medical imaging.
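The abstract does not give the training loss, so the following is only a minimal sketch of why hard labels could amplify an attribute that was not intervened on. It assumes a binary cross-entropy objective on an auxiliary classifier's output; the function names and the probability 0.7 are hypothetical and not taken from the paper.

```python
import math


def bce(pred: float, target: float) -> float:
    """Binary cross-entropy between a predicted probability and a target in [0, 1]."""
    eps = 1e-12
    pred = min(max(pred, eps), 1 - eps)
    return -(target * math.log(pred) + (1 - target) * math.log(1 - pred))


def bce_grad(pred: float, target: float) -> float:
    """Derivative of the binary cross-entropy with respect to the prediction."""
    eps = 1e-12
    pred = min(max(pred, eps), 1 - eps)
    return -(target / pred) + (1 - target) / (1 - pred)


# Hypothetical classifier probability for an attribute that was NOT intervened on.
original_prob = 0.7
counterfactual_prob = 0.7  # the counterfactual currently preserves the attribute

# Hard label: threshold the attribute to 0 or 1 (assumed setup).
hard_target = 1.0 if original_prob >= 0.5 else 0.0
# Soft label: reuse the probability inferred on the original image (assumed setup).
soft_target = original_prob

# Negative gradient: gradient descent pushes the prediction above 0.7.
hard_grad = bce_grad(counterfactual_prob, hard_target)
# Zero gradient: the attribute is left where it was.
soft_grad = bce_grad(counterfactual_prob, soft_target)

print(f"hard-label gradient: {hard_grad:.3f}")
print(f"soft-label gradient: {soft_grad:.3f}")
```

Under these assumptions the hard target keeps pulling the classifier output towards 1 even though the attribute is already preserved, whereas the soft target is satisfied once the counterfactual matches the original prediction.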

Counterfactual contrastive learning: robust representations via causal image synthesis

Mar 14, 2024

Abstract: Contrastive pretraining is well-known to improve downstream task performance and model generalisation, especially in limited label settings. However, it is sensitive to the choice of augmentation pipeline. Positive pairs should preserve semantic information while destroying domain-specific information. Standard augmentation pipelines emulate domain-specific changes with pre-defined photometric transformations, but what if we could simulate realistic domain changes instead? In this work, we show how to utilise recent progress in counterfactual image generation to this effect. We propose CF-SimCLR, a counterfactual contrastive learning approach which leverages approximate counterfactual inference for positive pair creation. Comprehensive evaluation across five datasets, on chest radiography and mammography, demonstrates that CF-SimCLR substantially improves robustness to acquisition shift with higher downstream performance on both in- and out-of-distribution data, particularly for domains which are under-represented during training.
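As a rough illustration of counterfactual positive pairs, the sketch below computes a simplified InfoNCE-style contrastive loss for a single anchor, where the positive is assumed to be the embedding of a counterfactual of the same image under a different acquisition domain. The embeddings, the temperature, and the single-anchor form are illustrative assumptions; the full SimCLR objective is computed symmetrically over a batch.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.5):
    """Simplified InfoNCE loss for one anchor, one positive, and a list of negatives."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


# Hypothetical 2-D embeddings.
real_image = [1.0, 0.0]             # embedding of the real image
counterfactual_image = [0.9, 0.1]   # same content, simulated different scanner
other_image = [0.0, 1.0]            # a different image, used as a negative

# Loss when the counterfactual is embedded close to its source image.
aligned_loss = info_nce(real_image, counterfactual_image, [other_image])
# Loss when the roles are swapped, i.e. the positive is embedded far away.
misaligned_loss = info_nce(real_image, other_image, [counterfactual_image])

print(f"aligned: {aligned_loss:.3f}, misaligned: {misaligned_loss:.3f}")
```

Minimising this loss pulls an image and its counterfactual together in embedding space, which is one way to encourage representations that ignore acquisition-specific appearance.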

UniCtrl: Improving the Spatiotemporal Consistency of Text-to-Video Diffusion Models via Training-Free Unified Attention Control

Mar 06, 2024

Abstract: Video Diffusion Models have been developed for video generation, usually integrating text and image conditioning to enhance control over the generated content. Despite the progress, ensuring consistency across frames remains a challenge, particularly when using text prompts as control conditions. To address this problem, we introduce UniCtrl, a novel, plug-and-play method that is universally applicable to improve the spatiotemporal consistency and motion diversity of videos generated by text-to-video models without additional training. UniCtrl ensures semantic consistency across different frames through cross-frame self-attention control, and meanwhile, enhances the motion quality and spatiotemporal consistency through motion injection and spatiotemporal synchronization. Our experimental results demonstrate UniCtrl's efficacy in enhancing various text-to-video models, confirming its effectiveness and universality.

Measuring Bargaining Abilities of LLMs: A Benchmark and A Buyer-Enhancement Method

Feb 29, 2024

Abstract: Bargaining is an important and unique part of negotiation between humans. As LLM-driven agents learn to negotiate and act like real humans, how to evaluate agents' bargaining abilities remains an open problem. For the first time, we formally describe the Bargaining task as an asymmetric incomplete information game, defining the gains of the Buyer and Seller in multiple bargaining processes. This formulation allows us to quantitatively assess an agent's performance in the Bargaining task. We collected a real product price dataset, AmazonHistoryPrice, and conducted evaluations of various LLM agents' bargaining abilities. We find that playing a Buyer is much harder than playing a Seller, and that increasing model size cannot effectively improve the Buyer's performance. To address the challenge, we propose a novel approach called OG-Narrator that integrates a deterministic Offer Generator to control the price range of the Buyer's offers, and an LLM Narrator to create natural language sentences for generated offers. Experimental results show that OG-Narrator improves the Buyer's deal rates from 26.67% to 88.88% and brings a tenfold increase in profits on all baselines, even for a model that has not been aligned.
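The split between a deterministic offer generator and a language component can be sketched as follows. The abstract does not describe the actual offer schedule, so the linear concession rule, the price fractions, and the template-based `narrate` function (a stand-in for the LLM Narrator) are all hypothetical.

```python
def generate_offer(listed_price: float, round_idx: int, total_rounds: int,
                   start_frac: float = 0.5, end_frac: float = 0.8) -> float:
    """Deterministic buyer offer: a linear concession from start_frac to
    end_frac of the listed price (hypothetical schedule)."""
    if total_rounds <= 1:
        progress = 0.0
    else:
        progress = round_idx / (total_rounds - 1)
    fraction = start_frac + (end_frac - start_frac) * progress
    return round(listed_price * fraction, 2)


def narrate(offer: float) -> str:
    """Stand-in for the LLM Narrator: wraps the numeric offer in a sentence."""
    return f"I can offer ${offer:.2f} for this item."


listed_price = 100.0
total_rounds = 5
offers = [generate_offer(listed_price, r, total_rounds) for r in range(total_rounds)]

for offer in offers:
    print(narrate(offer))
```

Because the numbers come from the generator rather than from free-form text generation, the offers stay inside a fixed price range regardless of how the language component phrases them.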

Sensor Misalignment-tolerant AUV Navigation with Passive DoA and Doppler Measurements

Feb 11, 2024

Abstract: We present a sensor misalignment-tolerant AUV navigation method that leverages measurements from an acoustic array and dead reckoned information. Recent studies have demonstrated the potential use of passive acoustic Direction of Arrival (DoA) measurements for AUV navigation without requiring ranging measurements. However, the sensor misalignment between the acoustic array and the attitude sensor was not accounted for. Such misalignment may deteriorate the navigation accuracy. This paper proposes a novel approach that allows simultaneous AUV navigation, beacon localization, and sensor alignment. An Unscented Kalman Filter (UKF) that enables the necessary calculations to be completed at an affordable computational load is developed. A Nonlinear Least Squares (NLS)-based technique is employed to find an initial solution for beacon localization and sensor alignment as early as possible using a short-term window of measurements. Experimental results demonstrate the performance of the proposed method.

R-Judge: Benchmarking Safety Risk Awareness for LLM Agents

Jan 18, 2024

Abstract: Large language models (LLMs) have exhibited great potential in autonomously completing tasks across real-world applications. Despite this, these LLM agents introduce unexpected safety risks when operating in interactive environments. Rather than centering on the safety of LLM-generated content, as most prior studies do, this work addresses the imperative need for benchmarking the behavioral safety of LLM agents within diverse environments. We introduce R-Judge, a benchmark crafted to evaluate the proficiency of LLMs in judging safety risks given agent interaction records. R-Judge comprises 162 agent interaction records, encompassing 27 key risk scenarios among 7 application categories and 10 risk types. It incorporates human consensus on safety with annotated safety risk labels and high-quality risk descriptions. Utilizing R-Judge, we conduct a comprehensive evaluation of 8 prominent LLMs commonly employed as the backbone for agents. The best-performing model, GPT-4, achieves 72.29% in contrast to the human score of 89.38%, showing considerable room for enhancing the risk awareness of LLMs. Notably, leveraging risk descriptions as environment feedback significantly improves model performance, revealing the importance of salient safety risk feedback. Furthermore, we design an effective chain of safety analysis technique to help the judgment of safety risks and conduct an in-depth case study to facilitate future research. R-Judge is publicly available at https://github.com/Lordog/R-Judge.

High Fidelity Image Counterfactuals with Probabilistic Causal Models

Jul 18, 2023

Abstract: We present a general causal generative modelling framework for accurate estimation of high fidelity image counterfactuals with deep structural causal models. Estimation of interventional and counterfactual queries for high-dimensional structured variables, such as images, remains a challenging task. We leverage ideas from causal mediation analysis and advances in generative modelling to design new deep causal mechanisms for structured variables in causal models. Our experiments demonstrate that our proposed mechanisms are capable of accurate abduction and estimation of direct, indirect and total effects as measured by axiomatic soundness of counterfactuals.

Penalty Gradient Normalization for Generative Adversarial Networks

Jun 23, 2023

Abstract: In this paper, we propose a novel normalization method called penalty gradient normalization (PGN) to tackle the training instability of Generative Adversarial Networks (GANs) caused by the sharp gradient space. Unlike existing work such as gradient penalty and spectral normalization, the proposed PGN only imposes a penalty gradient norm constraint on the discriminator function, which increases the capacity of the discriminator. Moreover, the proposed penalty gradient normalization can be applied to different GAN architectures with little modification. Extensive experiments on three datasets show that GANs trained with penalty gradient normalization outperform existing methods in terms of both Frechet Inception Distance and Inception Score.

Causal Machine Learning for Healthcare and Precision Medicine

May 31, 2022

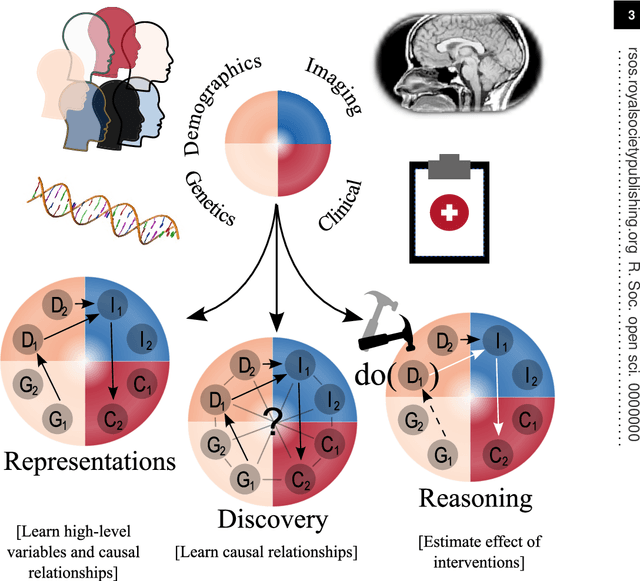

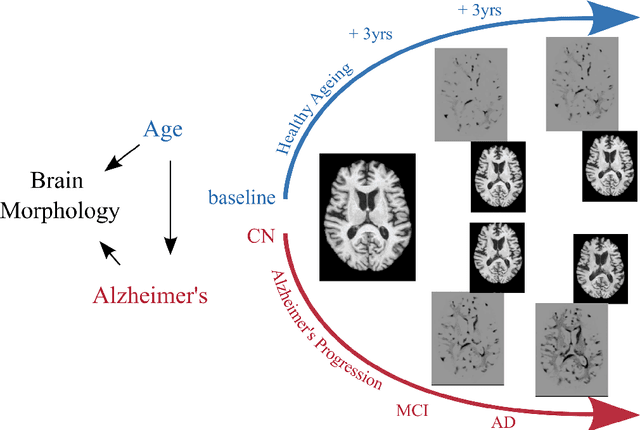

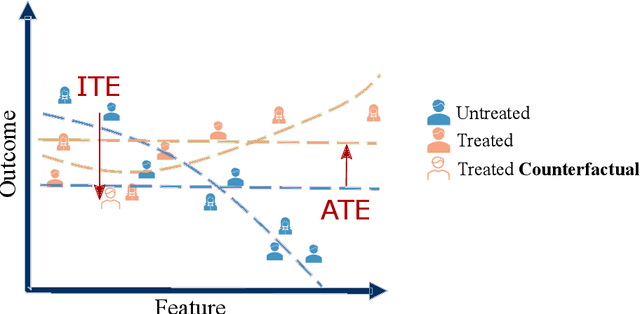

Abstract: Causal machine learning (CML) has experienced increasing popularity in healthcare. Beyond the inherent capabilities of adding domain knowledge into learning systems, CML provides a complete toolset for investigating how a system would react to an intervention (e.g. outcome given a treatment). Quantifying effects of interventions allows actionable decisions to be made whilst maintaining robustness in the presence of confounders. Here, we explore how causal inference can be incorporated into different aspects of clinical decision support (CDS) systems by using recent advances in machine learning. Throughout this paper, we use Alzheimer's disease (AD) to create examples for illustrating how CML can be advantageous in clinical scenarios. Furthermore, we discuss important challenges present in healthcare applications such as processing high-dimensional and unstructured data, generalisation to out-of-distribution samples, and temporal relationships, that despite the great effort from the research community remain to be solved. Finally, we review lines of research within causal representation learning, causal discovery and causal reasoning which offer the potential towards addressing the aforementioned challenges.

Adversarial Counterfactual Augmentation: Application in Alzheimer's Disease Classification

Mar 15, 2022

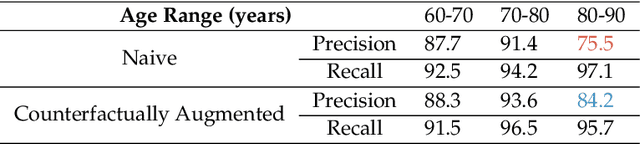

Abstract: Data augmentation has been widely used in deep learning to reduce over-fitting and improve the robustness of models. However, traditional data augmentation techniques, e.g., rotation, cropping, flipping, etc., do not consider semantic transformations, e.g., changing the age of a brain image. Previous works tried to achieve semantic augmentation by generating counterfactuals, but they focused on how to train deep generative models and randomly created counterfactuals with the generative models without considering which counterfactuals are most effective for improving downstream training. Different from these approaches, in this work, we propose a novel adversarial counterfactual augmentation scheme that aims to find the most effective counterfactuals to improve downstream tasks with a pre-trained generative model. Specifically, we construct an adversarial game where we update the input conditional factor of the generator and the downstream classifier with gradient backpropagation alternately and iteratively. The key idea is to find conditional factors that can result in hard counterfactuals for the classifier. This can be viewed as finding the "weakness" of the classifier and purposely forcing it to overcome its weakness via the generative model. To demonstrate the effectiveness of the proposed approach, we validate the method with the classification of Alzheimer's Disease (AD) as the downstream task based on a pre-trained brain ageing synthesis model. We show the proposed approach improves test accuracy and can alleviate spurious correlations. Code will be released upon acceptance.
