Shengdong Zhang

LMS-Net: A Learned Mumford-Shah Network For Few-Shot Medical Image Segmentation

Feb 08, 2025

Abstract: Few-shot semantic segmentation (FSS) methods have shown great promise in handling data-scarce scenarios, particularly in medical image segmentation tasks. However, most existing FSS architectures lack sufficient interpretability and fail to fully incorporate the underlying physical structures of semantic regions. To address these issues, in this paper, we propose a novel deep unfolding network, called the Learned Mumford-Shah Network (LMS-Net), for the FSS task. Specifically, motivated by the effectiveness of pixel-to-prototype comparison in prototypical FSS methods and the capability of deep priors to model complex spatial structures, we leverage our learned Mumford-Shah model (LMS model) as a mathematical foundation to integrate these insights into a unified framework. By reformulating the LMS model into prototype update and mask update tasks, we propose an alternating optimization algorithm to solve it efficiently. Further, the iterative steps of this algorithm are unfolded into corresponding network modules, resulting in LMS-Net with clear interpretability. Comprehensive experiments on three publicly available medical segmentation datasets verify the effectiveness of our method, demonstrating superior accuracy and robustness in handling complex structures and adapting to challenging segmentation scenarios. These results highlight the potential of LMS-Net to advance FSS in medical imaging applications. Our code will be available at: https://github.com/SDZhang01/LMSNet
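To make the alternation between a prototype update and a mask update concrete, here is a minimal sketch on a 1-D "image". It assumes the classical piecewise-constant two-region setting, with no learned priors and no regularization term, so it is not LMS-Net itself; the function name and all parameters are hypothetical.

```python
def alternate_prototypes_and_mask(pixels, proto_bg, proto_fg, num_iters=10):
    """Alternate a mask update and a prototype update on a 1-D 'image'.

    Assumption: plain two-region, piecewise-constant fitting. This only
    illustrates the alternating structure, not the learned model.
    """
    mask = [0] * len(pixels)
    for _ in range(num_iters):
        # Mask update: label each pixel with the closer prototype (1 = foreground).
        mask = [1 if abs(p - proto_fg) < abs(p - proto_bg) else 0 for p in pixels]
        # Prototype update: each prototype becomes the mean of its current region.
        fg = [p for p, m in zip(pixels, mask) if m == 1]
        bg = [p for p, m in zip(pixels, mask) if m == 0]
        if fg:
            proto_fg = sum(fg) / len(fg)
        if bg:
            proto_bg = sum(bg) / len(bg)
    return mask, proto_bg, proto_fg


pixels = [0.10, 0.20, 0.15, 0.90, 1.00, 0.95]
mask, proto_bg, proto_fg = alternate_prototypes_and_mask(pixels, proto_bg=0.0, proto_fg=1.0)
print(mask, proto_bg, proto_fg)
```

In a deep unfolding network, each pass of such a loop would correspond to one network stage; here the loop is simply run for a fixed number of iterations.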

Bringing RGB and IR Together: Hierarchical Multi-Modal Enhancement for Robust Transmission Line Detection

Jan 25, 2025

Abstract: Ensuring a stable power supply in rural areas relies heavily on effective inspection of power equipment, particularly transmission lines (TLs). However, detecting TLs from aerial imagery can be challenging when dealing with misalignments between visible light (RGB) and infrared (IR) images, as well as mismatched high- and low-level features in convolutional networks. To address these limitations, we propose a novel Hierarchical Multi-Modal Enhancement Network (HMMEN) that integrates RGB and IR data for robust and accurate TL detection. Our method introduces two key components: (1) a Mutual Multi-Modal Enhanced Block (MMEB), which fuses and enhances hierarchical RGB and IR feature maps in a coarse-to-fine manner, and (2) a Feature Alignment Block (FAB) that corrects misalignments between decoder outputs and IR feature maps by leveraging deformable convolutions. We employ MobileNet-based encoders for both RGB and IR inputs to accommodate edge-computing constraints and reduce computational overhead. Experimental results on diverse weather and lighting conditions (fog, night, snow, and daytime) demonstrate the superiority and robustness of our approach compared to state-of-the-art methods, resulting in fewer false positives, enhanced boundary delineation, and better overall detection performance. This framework thus shows promise for practical large-scale power line inspections with unmanned aerial vehicles.

Stochastic Whitening Batch Normalization

Jun 03, 2021

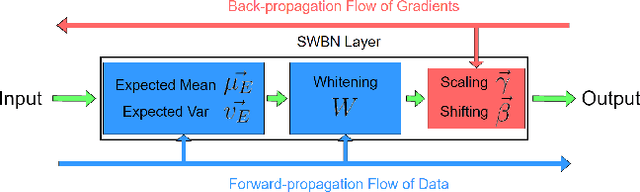

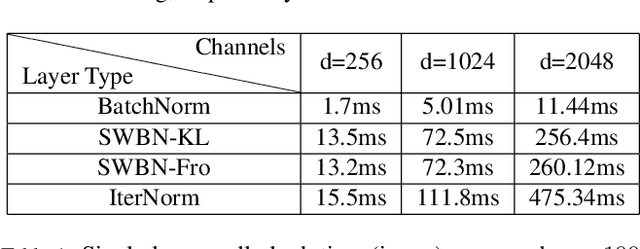

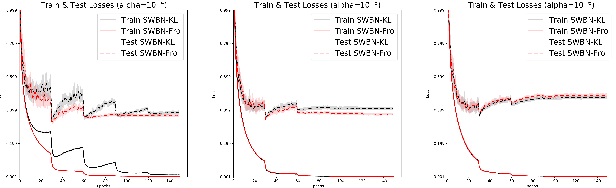

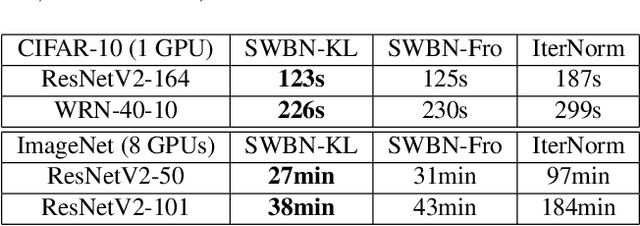

Abstract: Batch Normalization (BN) is a popular technique for training Deep Neural Networks (DNNs). BN uses scaling and shifting to normalize activations of mini-batches to accelerate convergence and improve generalization. The recently proposed Iterative Normalization (IterNorm) method improves these properties by whitening the activations iteratively using Newton's method. However, since Newton's method initializes the whitening matrix independently at each training step, no information is shared between consecutive steps. In this work, instead of computing the whitening matrix exactly at each training step, we estimate it gradually during training in an online fashion, using our proposed Stochastic Whitening Batch Normalization (SWBN) algorithm. We show that while SWBN improves the convergence rate and generalization of DNNs, its computational overhead is less than that of IterNorm. Due to the high efficiency of the proposed method, it can be easily employed in most DNN architectures with a large number of layers. We provide comprehensive experiments and comparisons between BN, IterNorm, and SWBN layers to demonstrate the effectiveness of the proposed technique in conventional (many-shot) image classification and few-shot classification tasks.
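For context on the per-step whitening that the abstract contrasts SWBN with, the following is a minimal sketch of a Newton-style iteration for the inverse square root of a covariance matrix. It assumes the commonly used trace-normalized form, restarted from the identity on every call; it is not SWBN's online update rule, which the abstract does not spell out. The helper names are hypothetical.

```python
def matmul(a, b):
    """Multiply two small dense matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def newton_whitening(cov, num_iters=10):
    """Approximate cov^(-1/2) with a Newton-style iteration.

    Assumption: cov is symmetric positive definite. The iteration starts
    from the identity each time, so nothing carries over between calls.
    """
    n = len(cov)
    trace = sum(cov[i][i] for i in range(n))
    # Normalize by the trace so all eigenvalues lie in (0, 1].
    cov_n = [[v / trace for v in row] for row in cov]
    p = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(num_iters):
        # P <- (3 P - P^3 cov_n) / 2
        p3_cov = matmul(matmul(matmul(p, p), p), cov_n)
        p = [[0.5 * (3.0 * p[i][j] - p3_cov[i][j]) for j in range(n)]
             for i in range(n)]
    # Undo the trace normalization.
    scale = trace ** -0.5
    return [[v * scale for v in row] for row in p]


cov = [[2.0, 0.6], [0.6, 1.0]]
w = newton_whitening(cov)
whitened_cov = matmul(matmul(w, cov), w)  # should be close to the identity
print(whitened_cov)
```

Because every call restarts from the identity, no information is shared between training steps, which is the limitation the abstract attributes to the Newton-based approach.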

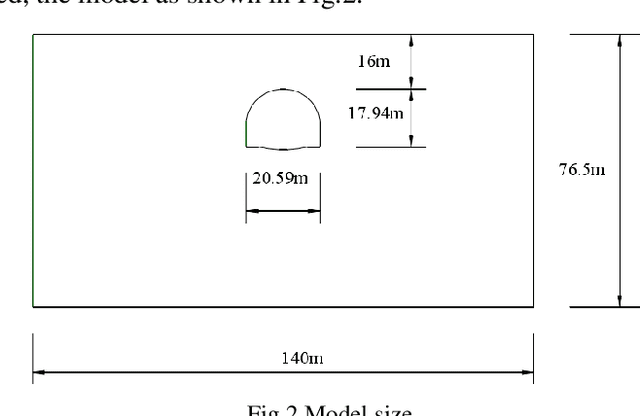

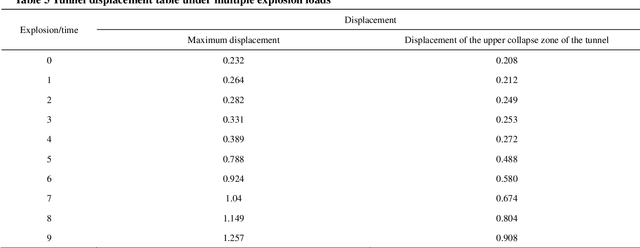

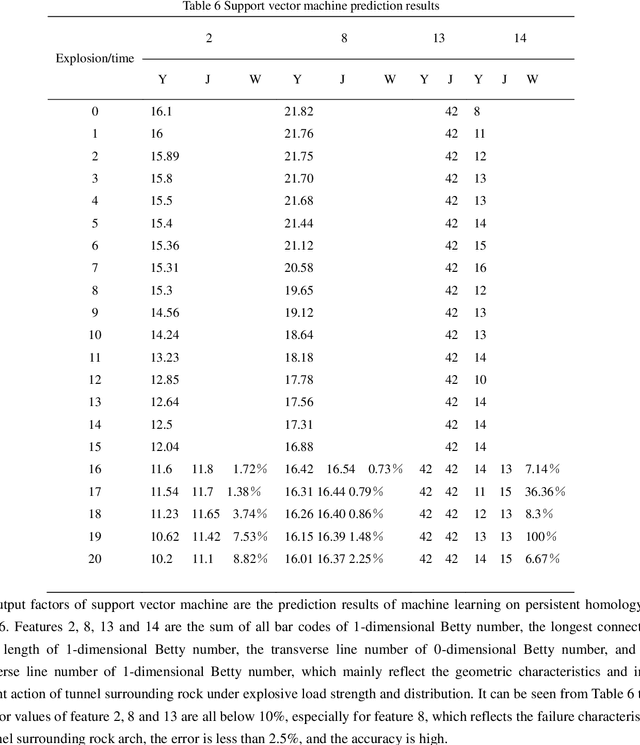

Analysis of tunnel failure characteristics under multiple explosion loads based on persistent homology-based machine learning

Sep 19, 2020

Abstract: Understanding how tunnels fail under loading from an external explosion source is an important problem in tunnel design and protection; in particular, constructing an intelligent topological description of the tunnel failure process is of great significance. This study describes the failure characteristics of tunnels under explosive loading using the discrete element method and persistent homology-based machine learning. First, a discrete element model of a shallow-buried tunnel is established in discrete element software. By Saint-Venant's principle, the explosive load is treated as equivalent to a series of uniformly distributed loads acting on the surface, and the dynamic response of the tunnel under multiple explosive loads is obtained through iterative calculation. The topological characteristics of the surrounding rock are then studied with persistent homology-based machine learning: the geometric, physical, and inter-unit characteristics of the tunnel subjected to explosive loading are extracted, a nonlinear mapping between the persistent homology topological quantities and the failure characteristics of the surrounding rock is established, and an intelligent description of the tunnel failure characteristics is obtained. The results show that the length of the longest bar in the Betti-1 barcode is closely related to tunnel stability and can be used for effective early warning of tunnel failure, and that an intelligent description of the tunnel failure process can be established, offering a new approach to protection in tunnel engineering.

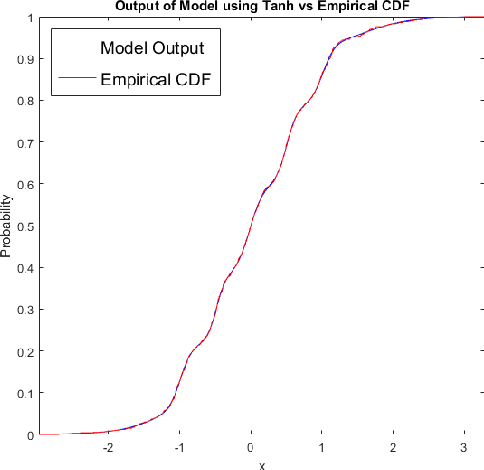

From CDF to --- A Density Estimation Method for High Dimensional Data

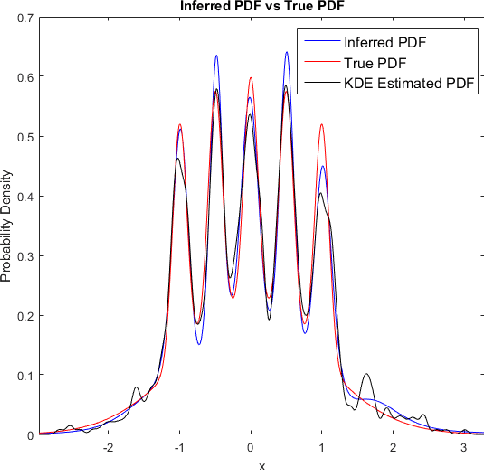

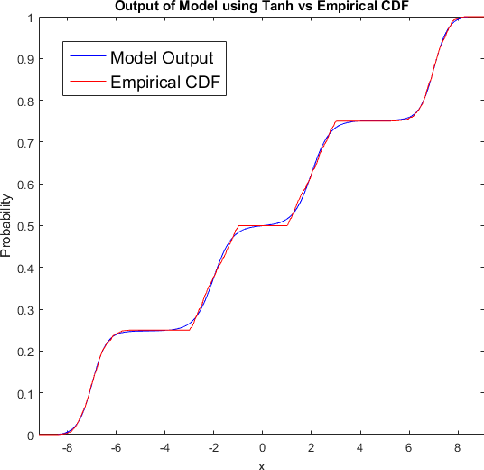

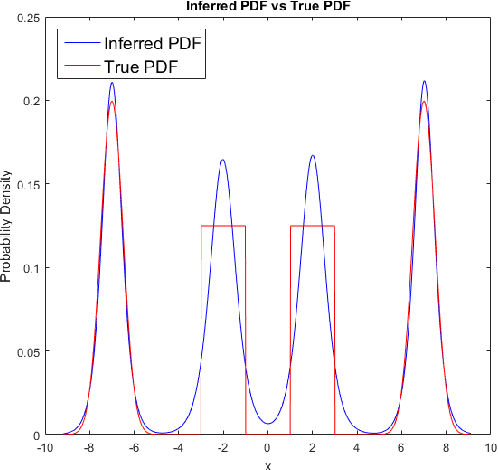

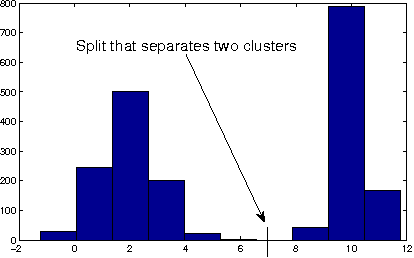

Apr 15, 2018

Abstract: CDF2PDF is a method for estimating a PDF by approximating the CDF. The original idea, called SIC, was previously proposed in [1]. However, SIC requires additional hyper-parameter tuning, and [1] provides no algorithm for computing higher-order derivatives from a trained NN. CDF2PDF improves on SIC by avoiding the time-consuming hyper-parameter tuning and by enabling higher-order derivatives to be computed in polynomial time. Experiments with this method on one-dimensional data show promising results.
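The core idea of recovering a PDF by differentiating a smooth CDF approximation can be sketched in one dimension as follows. This sketch swaps in a fixed mixture of logistic sigmoids centered on the samples in place of a trained neural network, and the bandwidth `h` is a hypothetical parameter introduced only for illustration, so it does not reproduce CDF2PDF or its tuning-free property.

```python
import math


def smooth_cdf(x, samples, h):
    """Smooth CDF model: average of logistic sigmoids centered on the samples.

    Assumption: this stands in for a learned CDF approximator.
    """
    return sum(1.0 / (1.0 + math.exp(-(x - s) / h)) for s in samples) / len(samples)


def pdf_from_cdf(x, samples, h):
    """Analytic derivative of smooth_cdf with respect to x."""
    total = 0.0
    for s in samples:
        sig = 1.0 / (1.0 + math.exp(-(x - s) / h))
        # d/dx sigmoid((x - s) / h) = sigmoid * (1 - sigmoid) / h
        total += sig * (1.0 - sig) / h
    return total / len(samples)


samples = [-1.0, -0.5, 0.0, 0.3, 1.2]
h = 0.5
step = 0.01
# Riemann sum of the density over [-10, 10]; should be close to 1.
area = sum(pdf_from_cdf(-10.0 + i * step, samples, h) for i in range(2000)) * step
print(pdf_from_cdf(0.0, samples, h), area)
```

The density is obtained in closed form from the CDF model, so no numerical differentiation is needed at evaluation time.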

Deep Symbolic Representation Learning for Heterogeneous Time-series Classification

Dec 05, 2016

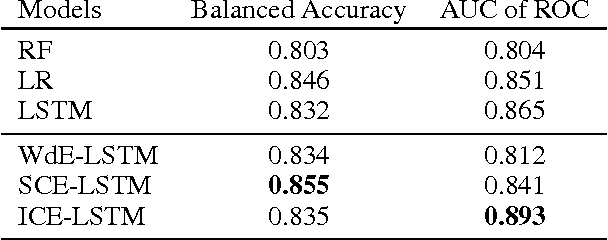

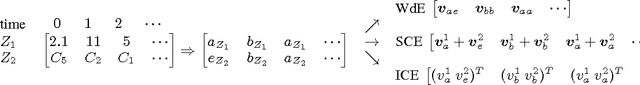

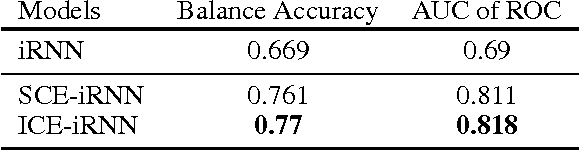

Abstract: In this paper, we consider the problem of event classification with multivariate time-series data consisting of heterogeneous (continuous and categorical) variables. The complex temporal dependencies between the variables, combined with the sparsity of the data, make the event classification problem particularly challenging. Most state-of-the-art approaches address this either by designing hand-engineered features or by breaking up the problem over homogeneous variates. In this work, we propose and compare three representation learning algorithms over symbolized sequences, which enable classification of heterogeneous time-series data using a deep architecture. The proposed representations are trained jointly with the rest of the network architecture in an end-to-end fashion, which makes the learned features discriminative for the given task. Experiments on three real-world datasets demonstrate the effectiveness of the proposed approaches.
