Pushpak Bhattacharyya

EmotionGIF-IITP-AINLPML: Ensemble-based Automated Deep Neural System for predicting category(ies) of a GIF response

Dec 23, 2020

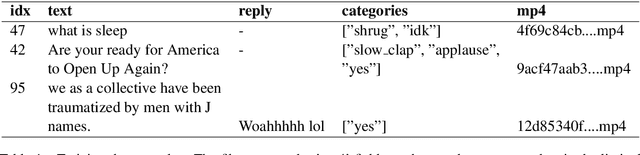

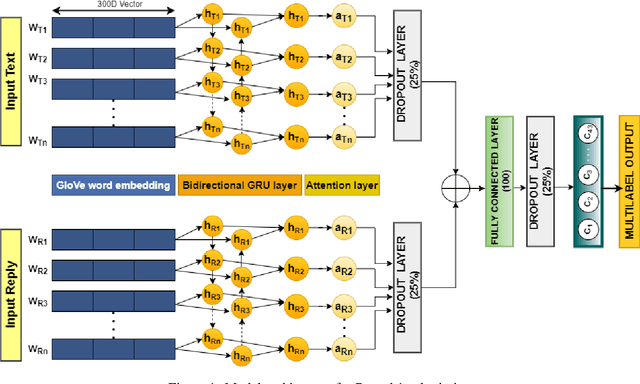

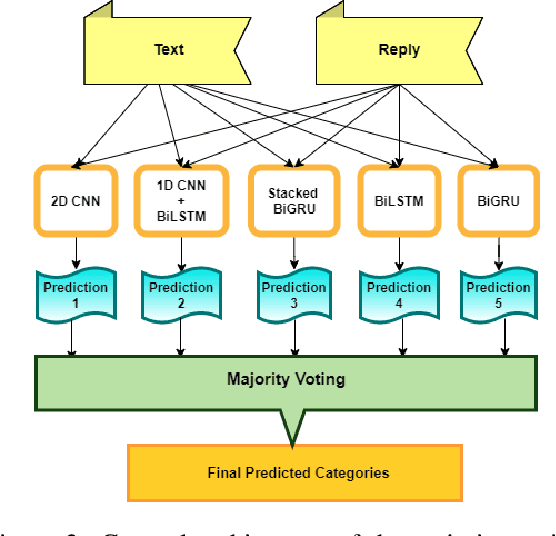

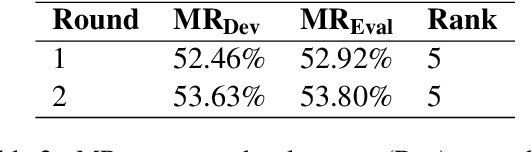

Abstract: In this paper, we describe the systems submitted by our IITP-AINLPML team to the EmotionGIF 2020 shared task of SocialNLP 2020, on predicting the category(ies) of a GIF response for a given unlabelled tweet. For round 1 of the task, we propose an attention-based bi-directional GRU network trained on both the tweets (text) and their replies (text, wherever available) and the given category(ies) for each GIF response. In round 2, we build several deep neural classifiers for the task and report the final predictions through a majority-voting-based ensemble technique. Our proposed models attain the best Mean Recall (MR) scores of 52.92% and 53.80% in round 1 and round 2, respectively.
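
The majority-voting ensemble mentioned in this abstract can be illustrated with a minimal sketch. The abstract does not say how votes are combined for multi-label output, so the rule below (keep a category when more than half of the classifiers predict it) is an assumption, and the function name `majority_vote` and the category names are purely illustrative.

```python
from collections import Counter
from typing import List, Set


def majority_vote(predictions: List[Set[str]]) -> Set[str]:
    """Keep each category predicted by more than half of the classifiers.

    `predictions` holds one set of predicted categories per classifier.
    This strict-majority rule is an assumption, not the paper's stated method.
    """
    if not predictions:
        return set()
    # Each classifier contributes at most one vote per category (sets are unique).
    counts = Counter(label for labels in predictions for label in labels)
    threshold = len(predictions) / 2
    return {label for label, n in counts.items() if n > threshold}


# Hypothetical outputs from three classifiers for one tweet.
votes = [{"applause", "yes"}, {"applause"}, {"yes", "hug"}]
ensemble = majority_vote(votes)
```

With three classifiers, a category needs at least two votes to survive, so here only the categories chosen by two of the three models are kept.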

Assessing the Severity of Health States based on Social Media Posts

Sep 21, 2020

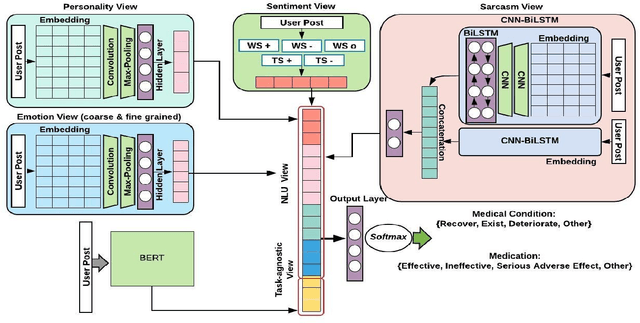

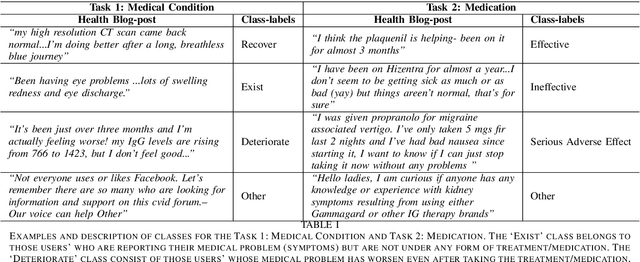

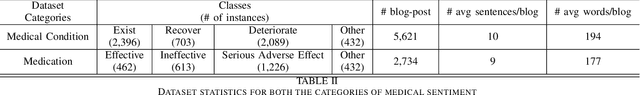

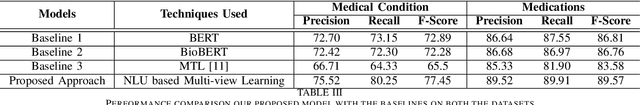

Abstract: The unprecedented growth of Internet users has resulted in an abundance of unstructured information on social media, including health forums, where patients request health-related information or opinions from other users. Previous studies have shown that online peer support has limited effectiveness without expert intervention. Therefore, a system capable of assessing the severity of a patient's health state from their social media posts can help health professionals (HP) prioritize users' posts. In this study, we inspect the efficacy of different aspects of Natural Language Understanding (NLU) in identifying the severity of the user's health state from two perspectives (tasks): (a) Medical Condition (i.e., Recover, Exist, Deteriorate, Other) and (b) Medication (i.e., Effective, Ineffective, Serious Adverse Effect, Other) in online health communities. We propose a multiview learning framework that models both the textual content and contextual information to assess the severity of the user's health state. Specifically, our model utilizes NLU views such as sentiment, emotions, personality, and use of figurative language to extract the contextual information. The diverse NLU views demonstrate their effectiveness on both tasks, as well as on individual diseases, in assessing a user's health.

Tweet to News Conversion: An Investigation into Unsupervised Controllable Text Generation

Aug 21, 2020

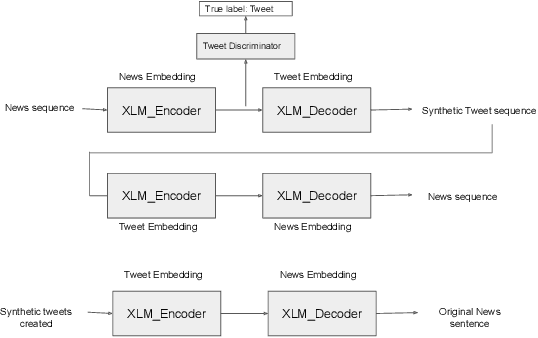

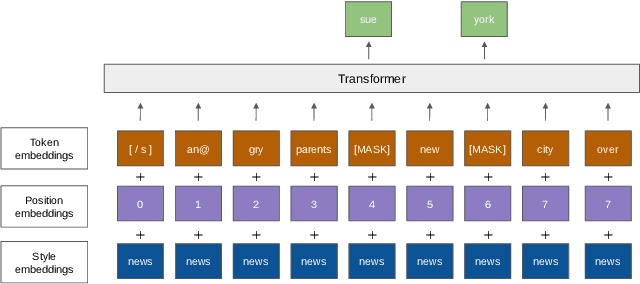

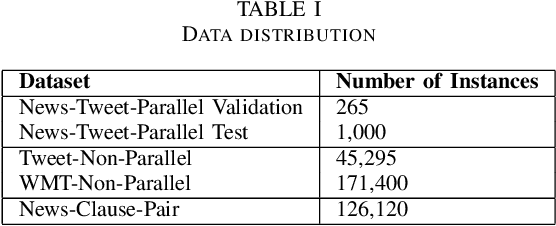

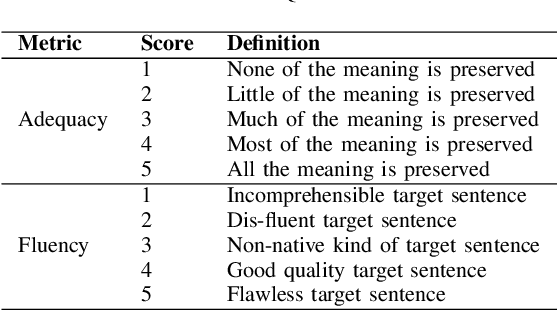

Abstract: Text generator systems have become extremely popular with the advent of recent deep learning models such as encoder-decoder architectures. Controlling the information and style of the generated output without supervision is an important and challenging Natural Language Processing (NLP) task. In this paper, we define the task of constructing a coherent paragraph from a set of disaster-domain tweets, without any parallel data. We tackle the problem by building two systems in a pipeline. The first system focuses on unsupervised style transfer and converts the individual tweets into news sentences. The second system stitches together the outputs from the first system to form a coherent news paragraph. We also propose a novel training mechanism, in which we split sentences into propositions and train the second system to merge them. We create a validation and test set consisting of tweet-sets and their equivalent news paragraphs to perform empirical evaluation. In a completely unsupervised setting, our model was able to achieve a BLEU score of 19.32, while successfully transferring styles and joining tweets to form a meaningful news paragraph.

Extracting N-ary Cross-sentence Relations using Constrained Subsequence Kernel

Jun 15, 2020

Abstract: Most of the past work in relation extraction deals with relations occurring within a sentence and having only two entity arguments. We propose a new formulation of the relation extraction task where the relations are more general than intra-sentence relations in the sense that they may span multiple sentences and may have more than two arguments. Moreover, the relations are more specific than corpus-level relations in the sense that their scope is limited to a single document and they are not valid globally throughout the corpus. We propose a novel sequence representation to characterize instances of such relations. We then explore various classifiers whose features are derived from this sequence representation. For the SVM classifier, we design a Constrained Subsequence Kernel, which is a variant of the Generalized Subsequence Kernel. We evaluate our approach on three datasets across two domains: biomedical and general.

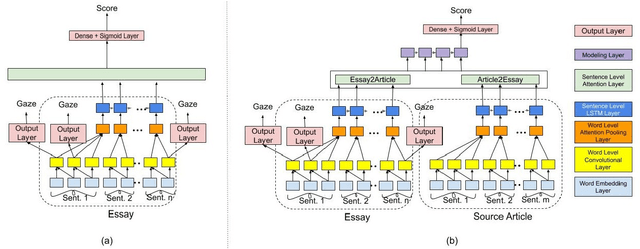

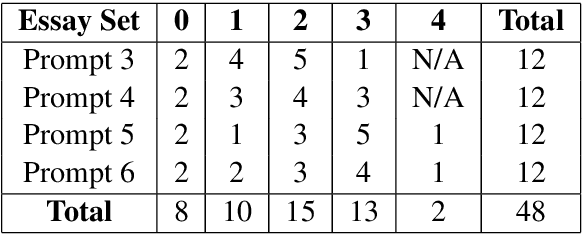

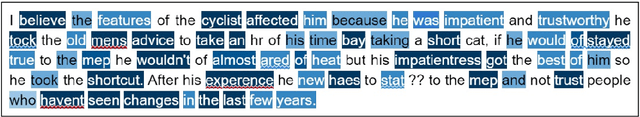

Happy Are Those Who Grade without Seeing: A Multi-Task Learning Approach to Grade Essays Using Gaze Behaviour

May 25, 2020

Abstract: The gaze behaviour of a reader is helpful in solving several NLP tasks such as automatic essay grading, named entity recognition, sarcasm detection, etc. However, collecting gaze behaviour from readers is costly in terms of time and money. In this paper, we propose a way to improve automatic essay grading using gaze behaviour, where the gaze features are learnt at run time using a multi-task learning framework. To demonstrate the efficacy of this multi-task learning based approach to automatic essay grading, we collect gaze behaviour for 48 essays across 4 essay sets, and learn gaze behaviour for the rest of the essays, numbering over 7000 essays. Using the learnt gaze behaviour, we can achieve a statistically significant improvement in performance over the state-of-the-art system for the essay sets where we have gaze data. We also achieve a statistically significant improvement for 4 other essay sets, numbering about 6000 essays, where we have no gaze behaviour data available. Our approach establishes that learning gaze behaviour improves automatic essay grading.

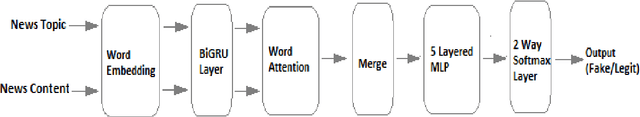

A Deep Learning Approach for Automatic Detection of Fake News

May 11, 2020

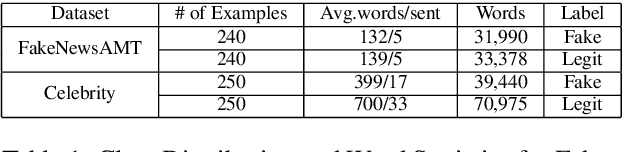

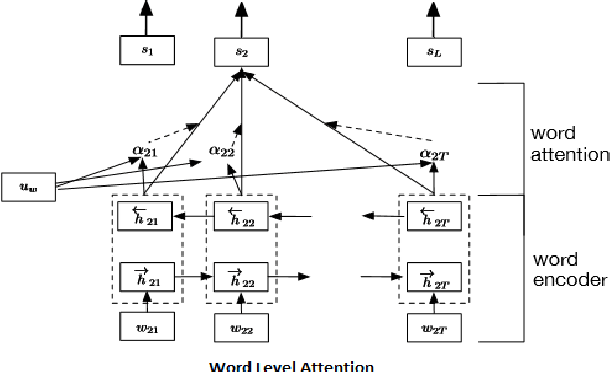

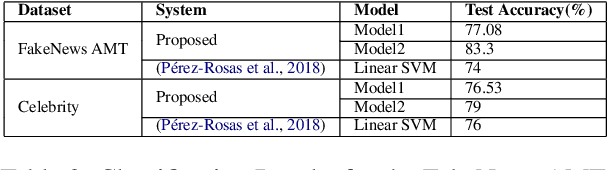

Abstract: Fake news detection is a very prominent and essential task in the field of journalism. This challenging problem has so far been seen in the field of politics, but it could be even more challenging in a multi-domain setting. In this paper, we propose two effective deep learning models for solving the fake news detection problem in online news content from multiple domains. We evaluate our techniques on two recently released datasets for fake news detection, namely FakeNews AMT and Celebrity. The proposed systems yield encouraging performance, outperforming the current state-of-the-art system based on handcrafted feature engineering by significant margins of 3.08% and 9.3% for the two models, respectively. To exploit the datasets available for related tasks, we perform cross-domain analysis (i.e., a model trained on FakeNews AMT and tested on Celebrity, and vice versa) to explore the applicability of our systems across domains.

Recommendation Chart of Domains for Cross-Domain Sentiment Analysis: Findings of A 20 Domain Study

Apr 09, 2020

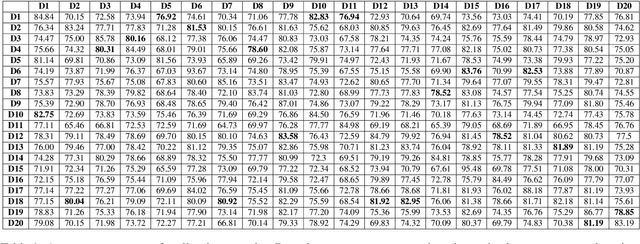

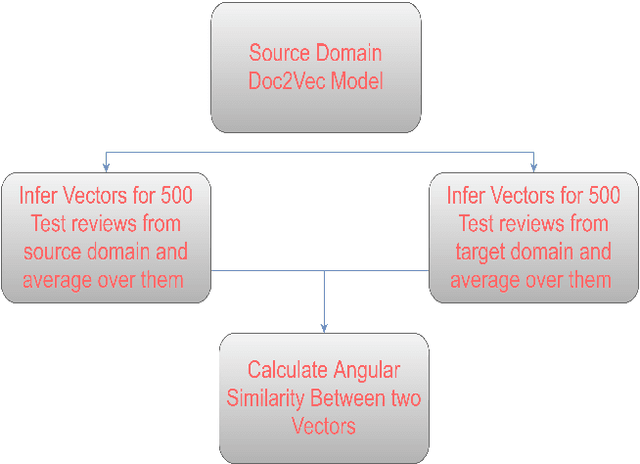

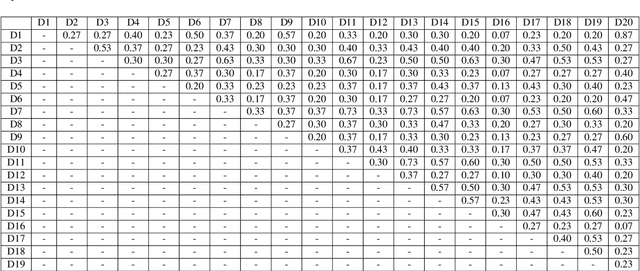

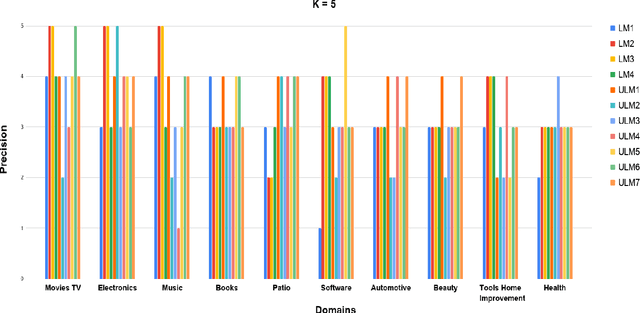

Abstract: Cross-domain sentiment analysis (CDSA) helps to address the problem of data scarcity in scenarios where labelled data for a domain (known as the target domain) is unavailable or insufficient. However, the choice of a domain (known as the source domain) to leverage is, at best, intuitive. In this paper, we investigate text similarity metrics to facilitate source domain selection for CDSA. We report results on 20 domains (all possible pairs) using 11 similarity metrics. Specifically, we compare CDSA performance with these metrics for different domain-pairs to enable the selection of a suitable source domain, given a target domain. These metrics include two novel metrics that evaluate domain adaptability to aid source domain selection when labelled data is available, and word- and sentence-based embeddings used as metrics for unlabelled data. The goal of our experiments is a recommendation chart that gives the K best source domains for CDSA for a given target domain. We show that the best K source domains returned by our similarity metrics have a precision of over 50%, for varying values of K.
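
The "precision of the best K source domains" reported in this abstract can be illustrated with a short sketch. The abstract does not define the measure exactly, so the version below assumes it is the fraction of the K domains recommended by a similarity metric that also appear among the K domains with the best actual CDSA performance. The function name `precision_at_k` and the domain names are hypothetical.

```python
from typing import Sequence


def precision_at_k(recommended: Sequence[str], best_actual: Sequence[str], k: int) -> float:
    """Fraction of the top-k recommended source domains that are truly top-k.

    `recommended` is ranked by a similarity metric; `best_actual` is ranked by
    observed cross-domain accuracy. Both rankings are assumed inputs.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    truly_best = set(best_actual[:k])
    hits = sum(1 for domain in recommended[:k] if domain in truly_best)
    return hits / k


# Hypothetical rankings for one target domain.
by_similarity = ["books", "music", "movies", "kitchen"]
by_accuracy = ["music", "electronics", "books", "movies"]

p_at_2 = precision_at_k(by_similarity, by_accuracy, k=2)
p_at_3 = precision_at_k(by_similarity, by_accuracy, k=3)
```

Under this definition, a recommendation chart would be judged by averaging such scores over all target domains for each value of K.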

Reinforced Multi-task Approach for Multi-hop Question Generation

Apr 07, 2020

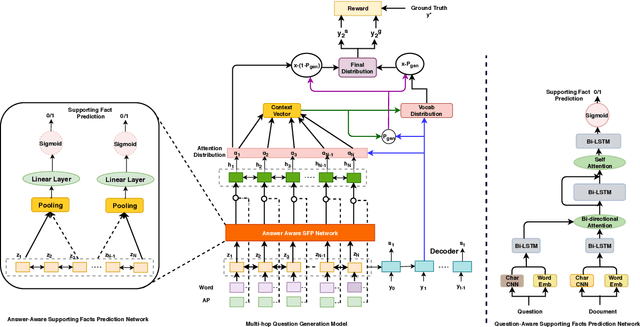

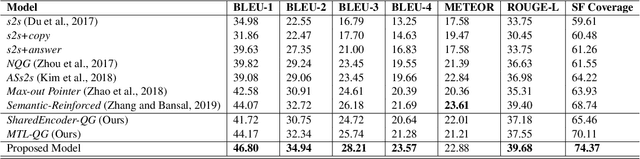

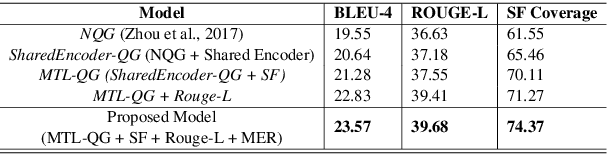

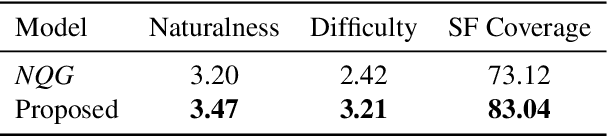

Abstract: Question generation (QG) attempts to solve the inverse of the question answering (QA) problem by generating a natural language question given a document and an answer. While sequence-to-sequence neural models surpass rule-based systems for QG, they are limited in their capacity to focus on more than one supporting fact. For QG, we often require multiple supporting facts to generate high-quality questions. Inspired by recent work on multi-hop reasoning in QA, we take up multi-hop question generation, which aims at generating relevant questions based on supporting facts in the context. We employ multi-task learning with the auxiliary task of answer-aware supporting fact prediction to guide the question generator. In addition, we propose a question-aware reward function in a Reinforcement Learning (RL) framework to maximize the utilization of the supporting facts. We demonstrate the effectiveness of our approach through experiments on the multi-hop question answering dataset, HotPotQA. Empirical evaluation shows that our model outperforms single-hop neural question generation models on both automatic evaluation metrics such as BLEU, METEOR, and ROUGE, and human evaluation metrics for quality and coverage of the generated questions.

Utilizing Language Relatedness to improve Machine Translation: A Case Study on Languages of the Indian Subcontinent

Mar 19, 2020

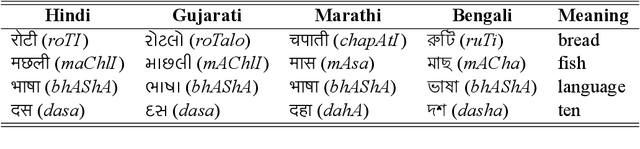

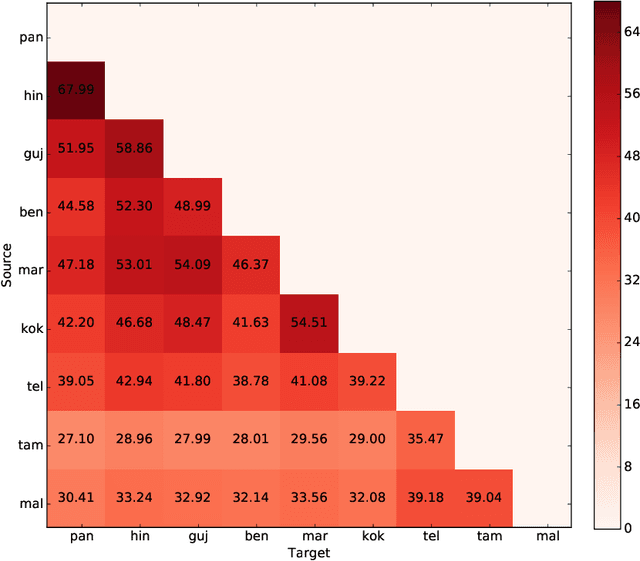

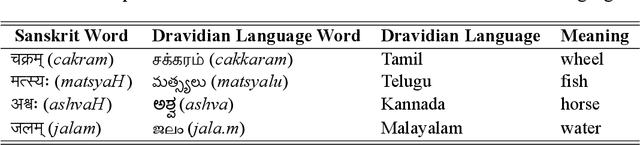

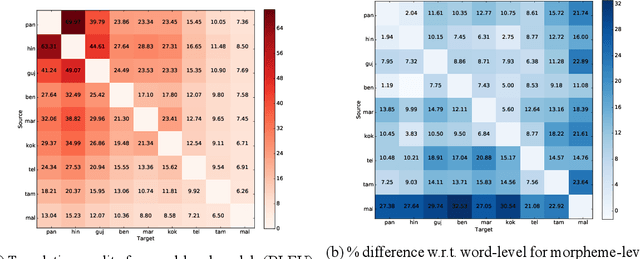

Abstract: In this work, we present an extensive study of statistical machine translation involving languages of the Indian subcontinent. These languages are related by genetic and contact relationships. We describe the similarities between Indic languages arising from these relationships. We explore how lexical and orthographic similarity among these languages can be utilized to improve translation quality between Indic languages when limited parallel corpora are available. We also explore how the structural correspondence between Indic languages can be utilized to re-use linguistic resources for English to Indic language translation. Our observations span 90 language pairs from 9 Indic languages and English. To the best of our knowledge, this is the first large-scale study specifically devoted to utilizing language relatedness to improve translation between related languages.

Related Tasks can Share! A Multi-task Framework for Affective language

Feb 06, 2020

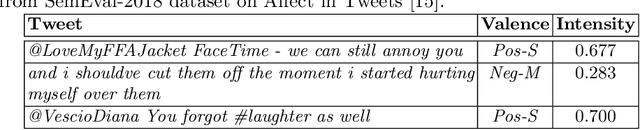

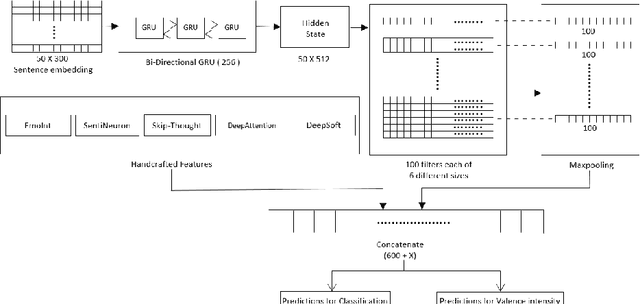

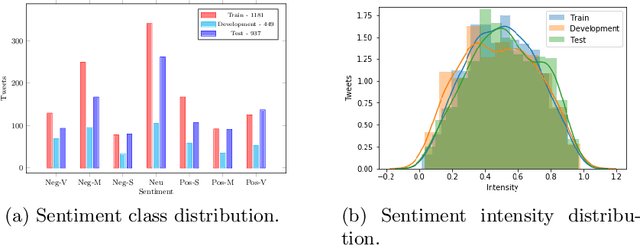

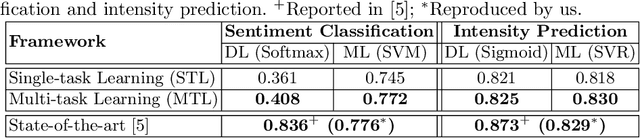

Abstract: Expressing the polarity of sentiment as 'positive' and 'negative' usually has limited scope compared with the intensity/degree of polarity. These two tasks (i.e., sentiment classification and sentiment intensity prediction) are closely related and may offer assistance to each other during the learning process. In this paper, we propose to leverage the relatedness of multiple tasks in a multi-task learning framework. Our multi-task model is based on a convolutional-Gated Recurrent Unit (GRU) framework, which is further assisted by a diverse hand-crafted feature set. Evaluation and analysis suggest that joint learning of the related tasks in a multi-task framework can outperform each of the individual tasks in the single-task frameworks.
