Pierre Merriaux

Unifying Appearance Codes and Bilateral Grids for Driving Scene Gaussian Splatting

Jun 06, 2025

Abstract: Neural rendering techniques, including NeRF and Gaussian Splatting (GS), rely on photometric consistency to produce high-quality reconstructions. However, in real-world scenarios, it is challenging to guarantee perfect photometric consistency in acquired images. Appearance codes have been widely used to address this issue, but their modeling capability is limited, as a single code is applied to the entire image. Recently, the bilateral grid was introduced to perform pixel-wise color mapping, but it is difficult to optimize and constrain effectively. In this paper, we propose a novel multi-scale bilateral grid that unifies appearance codes and bilateral grids. We demonstrate that this approach significantly improves geometric accuracy in dynamic, decoupled autonomous driving scene reconstruction, outperforming both appearance codes and bilateral grids. This is crucial for autonomous driving, where accurate geometry is important for obstacle avoidance and control. Our method shows strong results across four datasets: Waymo, NuScenes, Argoverse, and PandaSet. We further demonstrate that the improvement in geometry is driven by the multi-scale bilateral grid, which effectively reduces floaters caused by photometric inconsistency.

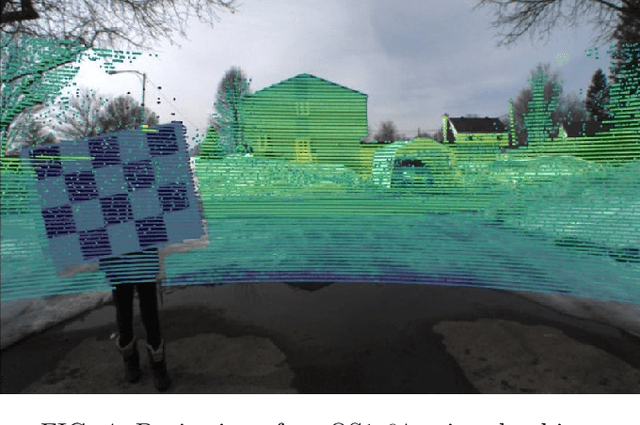

Living in a Material World: Learning Material Properties from Full-Waveform Flash Lidar Data for Semantic Segmentation

May 07, 2023

Abstract: Advances in lidar technology have made the collection of 3D point clouds fast and easy. While most lidar sensors return per-point intensity (or reflectance) values along with range measurements, flash lidar sensors are able to provide information about the shape of the return pulse. The shape of the return waveform is affected by many factors, including the distance that the light pulse travels and the angle of incidence with a surface. Importantly, the shape of the return waveform also depends on the material properties of the reflecting surface. In this paper, we investigate whether the material type or class can be determined from the full-waveform response. First, as a proof of concept, we demonstrate that the extra information about material class, if known accurately, can improve performance on scene understanding tasks such as semantic segmentation. Next, we learn two different full-waveform material classifiers: a random forest classifier and a temporal convolutional neural network (TCN) classifier. We find that, in some cases, material types can be distinguished, and that the TCN generally performs better across a wider range of materials. However, factors such as angle of incidence, material colour, and material similarity may hinder overall performance.

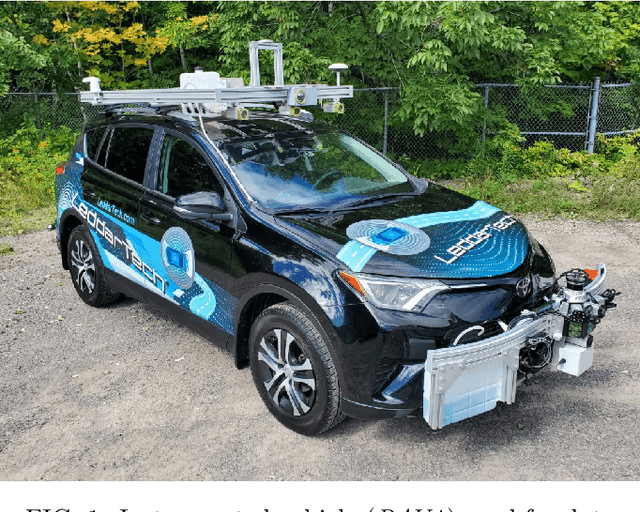

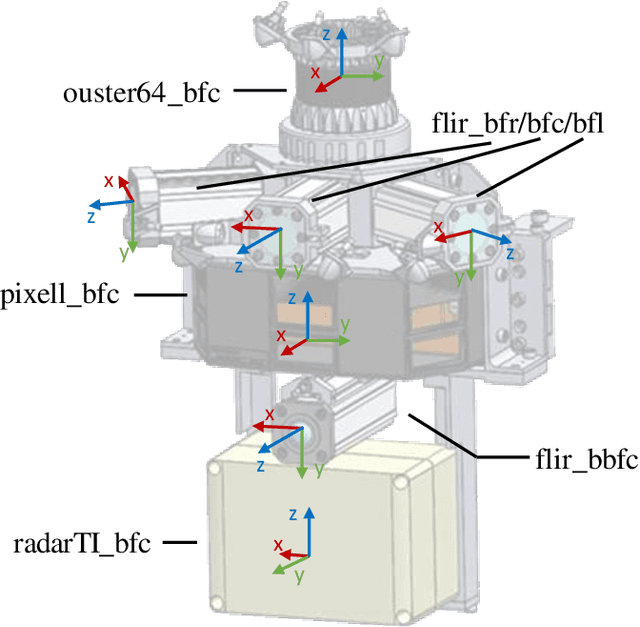

PixSet : An Opportunity for 3D Computer Vision to Go Beyond Point Clouds With a Full-Waveform LiDAR Dataset

Feb 24, 2021

Abstract: Leddar PixSet is a new publicly available dataset (dataset.leddartech.com) for autonomous driving research and development. One key novelty of this dataset is the presence of full-waveform data from the Leddar Pixell sensor, a solid-state flash LiDAR. Full-waveform data has been shown to improve the performance of perception algorithms in airborne applications, but this has yet to be demonstrated for terrestrial applications such as autonomous driving. The PixSet dataset contains approximately 29k frames from 97 sequences recorded in high-density urban areas, using a variety of sensors (cameras, LiDARs, radar, IMU, etc.). Each frame has been manually annotated with 3D bounding boxes.

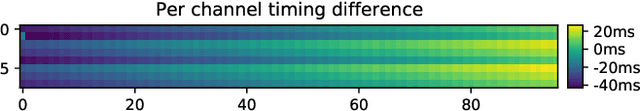

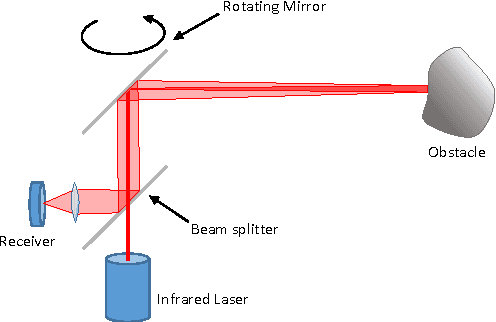

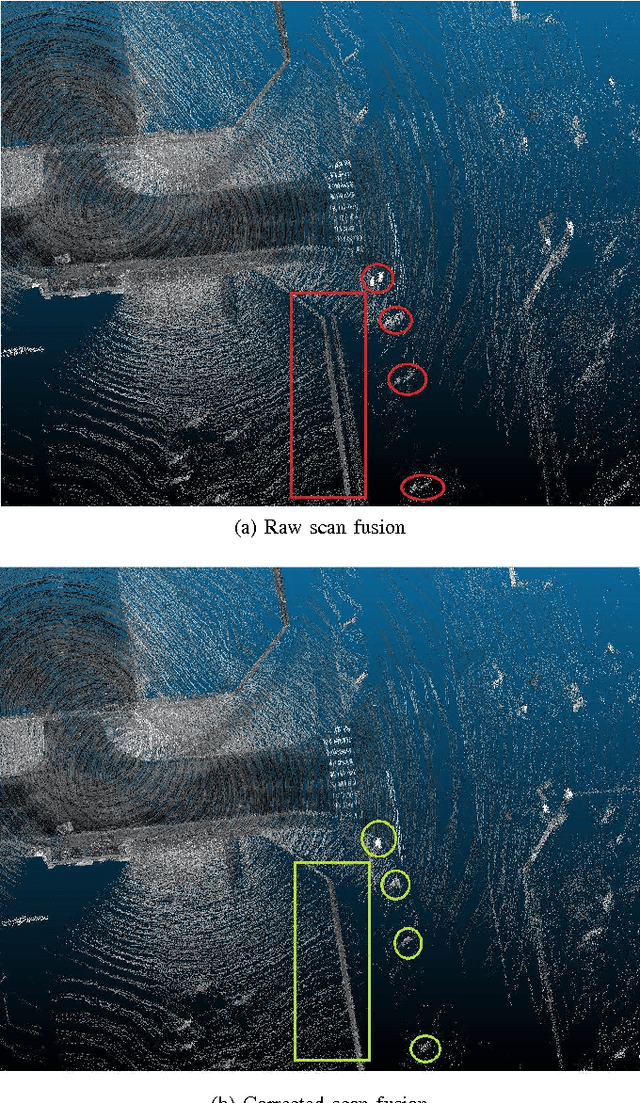

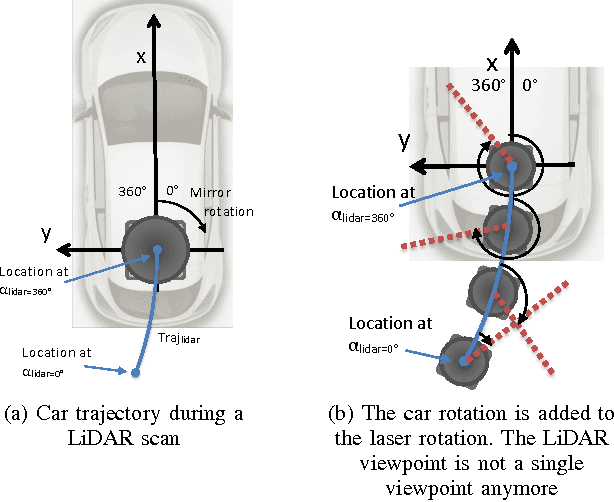

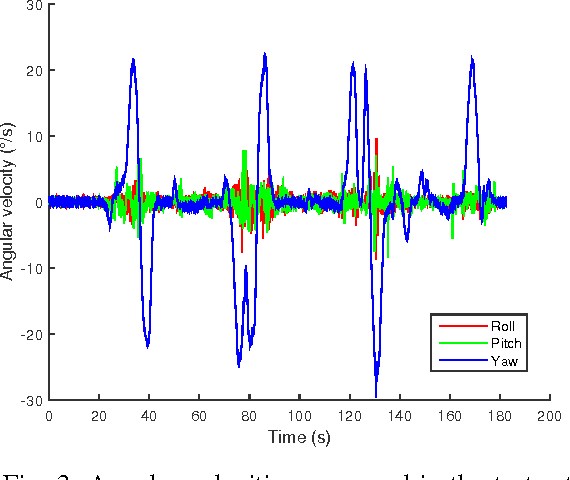

LiDAR point clouds correction acquired from a moving car based on CAN-bus data

Jun 19, 2017

Abstract: In this paper, we investigate the impact of different kinds of car trajectories on LiDAR scans. LiDAR scanning speeds are considerably slower than car speeds, which introduces distortions in the scans. We propose a method to overcome this issue, as well as new metrics based on CAN bus data. Our results suggest that the vehicle trajectory should be taken into account when building large-scale 3D maps from a LiDAR mounted on a moving vehicle.
