Weiqing Xiao

Unifying Appearance Codes and Bilateral Grids for Driving Scene Gaussian Splatting

Jun 06, 2025Abstract:Neural rendering techniques, including NeRF and Gaussian Splatting (GS), rely on photometric consistency to produce high-quality reconstructions. However, in real-world scenarios, it is challenging to guarantee perfect photometric consistency in acquired images. Appearance codes have been widely used to address this issue, but their modeling capability is limited, as a single code is applied to the entire image. Recently, the bilateral grid was introduced to perform pixel-wise color mapping, but it is difficult to optimize and constrain effectively. In this paper, we propose a novel multi-scale bilateral grid that unifies appearance codes and bilateral grids. We demonstrate that this approach significantly improves geometric accuracy in dynamic, decoupled autonomous driving scene reconstruction, outperforming both appearance codes and bilateral grids. This is crucial for autonomous driving, where accurate geometry is important for obstacle avoidance and control. Our method shows strong results across four datasets: Waymo, NuScenes, Argoverse, and PandaSet. We further demonstrate that the improvement in geometry is driven by the multi-scale bilateral grid, which effectively reduces floaters caused by photometric inconsistency.

Rectified Iterative Disparity for Stereo Matching

Jun 16, 2024

Abstract:Both uncertainty-assisted and iteration-based methods have achieved great success in stereo matching. However, existing uncertainty estimation methods take a single image and the corresponding disparity as input, which imposes higher demands on the estimation network. In this paper, we propose Cost volume-based disparity Uncertainty Estimation (UEC). Based on the rich similarity information in the cost volume coming from the image pairs, the proposed UEC can achieve competitive performance with low computational cost. Secondly, we propose two methods of uncertainty-assisted disparity estimation, Uncertainty-based Disparity Rectification (UDR) and Uncertainty-based Disparity update Conditioning (UDC). These two methods optimise the disparity update process of the iterative-based approach without adding extra parameters. In addition, we propose Disparity Rectification loss that significantly improves the accuracy of small amount of disparity updates. We present a high-performance stereo architecture, DR Stereo, which is a combination of the proposed methods. Experimental results from SceneFlow, KITTI, Middlebury 2014, and ETH3D show that DR-Stereo achieves very competitive disparity estimation performance.

Stepwise Regression and Pre-trained Edge for Robust Stereo Matching

Jun 11, 2024

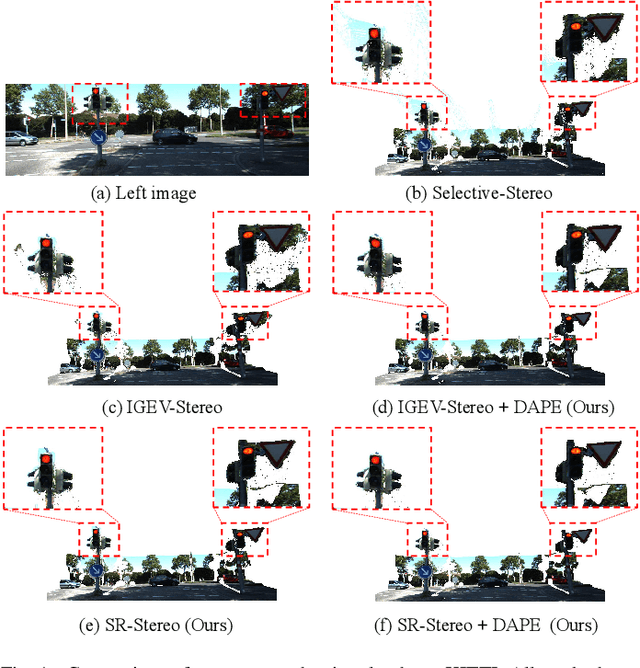

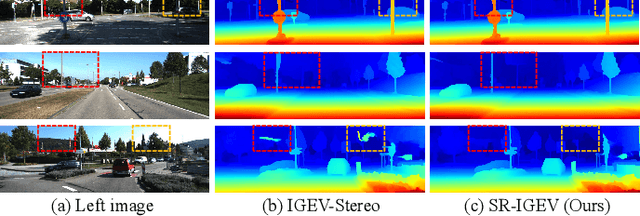

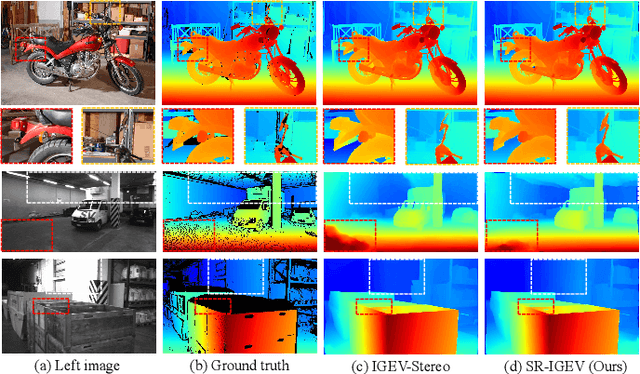

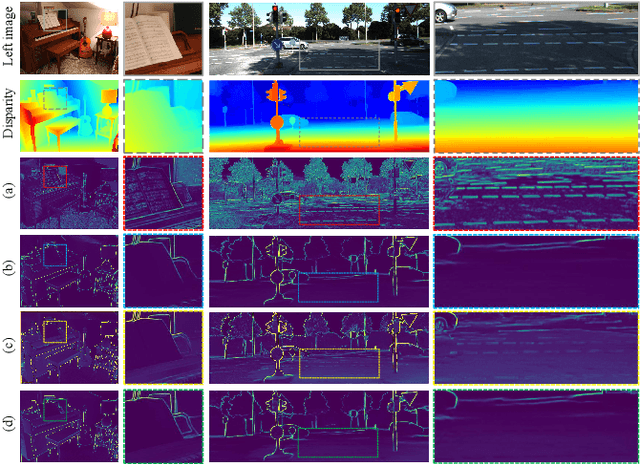

Abstract:Due to the difficulty in obtaining real samples and ground truth, the generalization performance and the fine-tuned performance are critical for the feasibility of stereo matching methods in real-world applications. However, the presence of substantial disparity distributions and density variations across different datasets presents significant challenges for the generalization and fine-tuning of the model. In this paper, we propose a novel stereo matching method, called SR-Stereo, which mitigates the distributional differences across different datasets by predicting the disparity clips and uses a loss weight related to the regression target scale to improve the accuracy of the disparity clips. Moreover, this stepwise regression architecture can be easily extended to existing iteration-based methods to improve the performance without changing the structure. In addition, to mitigate the edge blurring of the fine-tuned model on sparse ground truth, we propose Domain Adaptation Based on Pre-trained Edges (DAPE). Specifically, we use the predicted disparity and RGB image to estimate the edge map of the target domain image. The edge map is filtered to generate edge map background pseudo-labels, which together with the sparse ground truth disparity on the target domain are used as a supervision to jointly fine-tune the pre-trained stereo matching model. These proposed methods are extensively evaluated on SceneFlow, KITTI, Middbury 2014 and ETH3D. The SR-Stereo achieves competitive disparity estimation performance and state-of-the-art cross-domain generalisation performance. Meanwhile, the proposed DAPE significantly improves the disparity estimation performance of fine-tuned models, especially in the textureless and detail regions.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge