Yohan Dupuis

An Improved 3D Skeletons UP-Fall Dataset: Enhancing Data Quality for Efficient Impact Fall Detection

Feb 26, 2025

Abstract:Detecting the impact, the moment an individual makes contact with the ground during a fall event, is crucial in fall detection systems, particularly for elderly care where prompt intervention can prevent serious injuries. The UP-Fall dataset, a key resource in fall detection research, has proven valuable but suffers from limitations in data accuracy and comprehensiveness. These limitations cause confusion in distinguishing between non-impact events, such as sliding, and real falls with impact, where the person actually hits the ground. This confusion compromises the effectiveness of current fall detection systems. This study presents enhancements to the UP-Fall dataset aimed at improving it for impact fall detection by incorporating 3D skeleton data. Our preprocessing techniques ensure high data accuracy and comprehensiveness, enabling more reliable impact fall detection. Extensive experiments were conducted using various machine learning and deep learning algorithms to benchmark the improved 3D skeletons dataset. The results demonstrate substantial improvements in the performance of fall detection models trained on the enhanced dataset. This contribution aims to enhance the safety and well-being of the elderly population at risk. To support further research and development of more reliable impact fall detection systems, we have made the improved 3D skeletons UP-Fall dataset publicly available at https://zenodo.org/records/12773013.

Machine Learning and Feature Ranking for Impact Fall Detection Event Using Multisensor Data

Dec 21, 2023

Abstract:Falls among individuals, especially the elderly population, can lead to serious injuries and complications. Detecting impact moments within a fall event is crucial for providing timely assistance and minimizing the negative consequences. In this work, we aim to address this challenge by applying thorough preprocessing techniques to the multisensor dataset, with the goal of eliminating noise and improving data quality. Furthermore, we employ a feature selection process to identify the most relevant features derived from the multisensor UP-FALL dataset, which in turn enhances the performance and efficiency of machine learning models. We then evaluate the efficiency of various machine learning models in detecting the impact moment using the resulting data from multiple sensors. Through extensive experimentation, we assess the accuracy of our approach using various evaluation metrics. Our results show high accuracy rates in impact detection, showcasing the power of leveraging multisensor data for fall detection tasks. This highlights the potential of our approach to enhance fall detection systems and improve the overall safety and well-being of individuals at risk of falls.

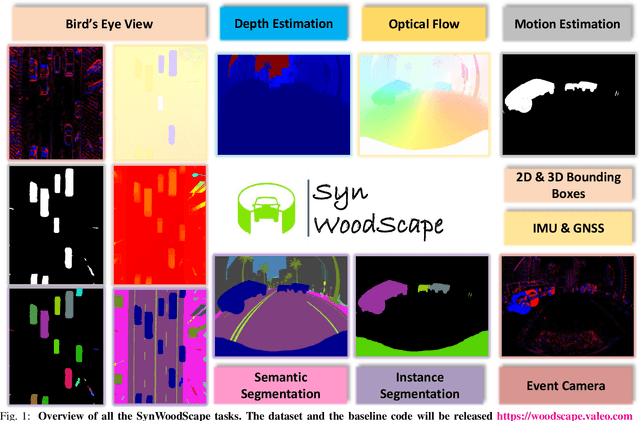

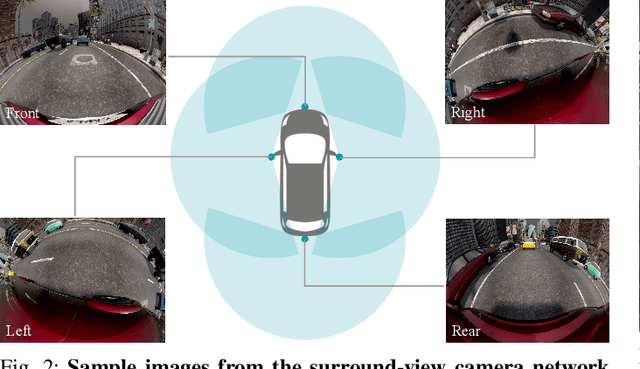

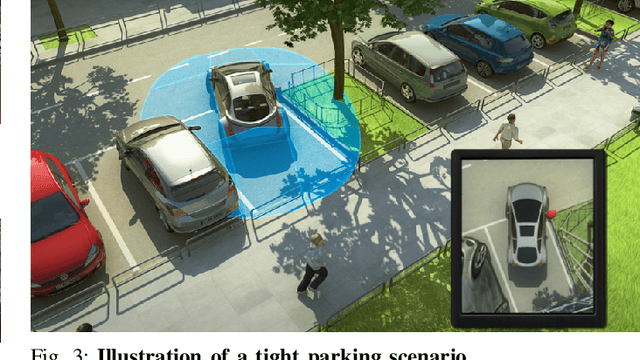

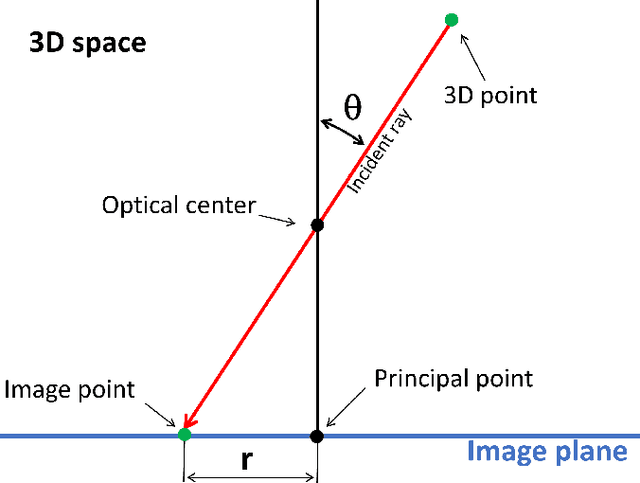

SynWoodScape: Synthetic Surround-view Fisheye Camera Dataset for Autonomous Driving

Mar 09, 2022

Abstract:Surround-view cameras are a primary sensor for automated driving, used for near-field perception. They are among the most commonly used sensors in commercial vehicles. Four fisheye cameras with a 190° field of view cover the 360° around the vehicle. Due to their high radial distortion, standard algorithms do not extend easily to them. Previously, we released the first public fisheye surround-view dataset, named WoodScape. In this work, we release a synthetic version of the surround-view dataset, addressing many of its weaknesses and extending it. Firstly, it is not possible to obtain ground truth for pixel-wise optical flow and depth. Secondly, WoodScape did not have all four cameras simultaneously in order to sample diverse frames. However, this means that multi-camera algorithms cannot be designed, which is enabled in the new dataset. We implemented surround-view fisheye geometric projections in CARLA Simulator matching WoodScape's configuration and created SynWoodScape. We release 80k images from the synthetic dataset with annotations for 10+ tasks. We also release the baseline code and supporting scripts.
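To illustrate why a 190° field of view does not fit standard camera models, the following minimal sketch compares the pinhole projection with an equidistant fisheye projection. The abstract does not state which projection model SynWoodScape uses, so the equidistant model, the function names, and the focal length of 300 pixels are assumptions chosen purely for illustration.

```python
import math


def pinhole_radius(theta_rad, focal_px):
    """Image radius under the pinhole model: r = f * tan(theta).

    Only meaningful for incidence angles below 90 degrees.
    """
    return focal_px * math.tan(theta_rad)


def equidistant_radius(theta_rad, focal_px):
    """Image radius under the equidistant fisheye model: r = f * theta.

    Assumed model for illustration; real fisheye lenses are often
    described by higher-order polynomials in theta.
    """
    return focal_px * theta_rad


# Hypothetical focal length in pixels, not taken from the dataset.
FOCAL_PX = 300.0

# A 190 degree field of view means rays arrive up to 95 degrees
# off the optical axis.
half_fov = math.radians(95.0)

fisheye_r = equidistant_radius(half_fov, FOCAL_PX)
pinhole_r = pinhole_radius(half_fov, FOCAL_PX)
```

Under the equidistant model the edge ray still lands at a finite, positive image radius, whereas the pinhole formula returns a negative radius past 90°, which is one way to see why algorithms built on the pinhole assumption do not transfer directly.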

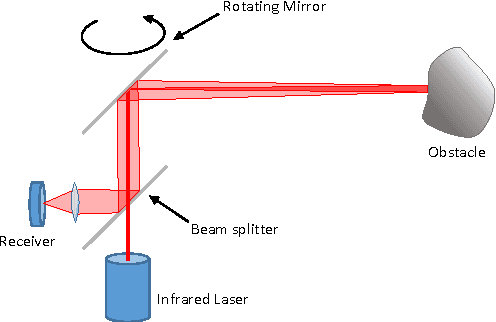

Efficient LiDAR data compression for embedded V2I or V2V data handling

Apr 11, 2019

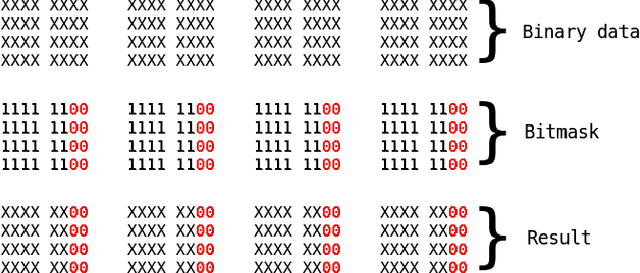

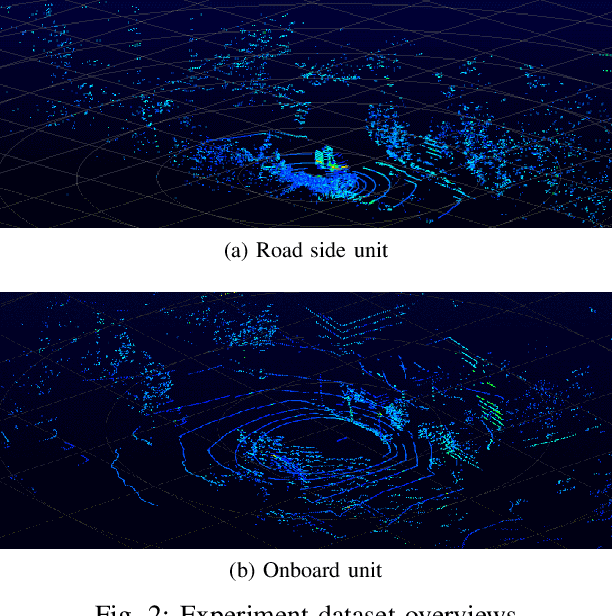

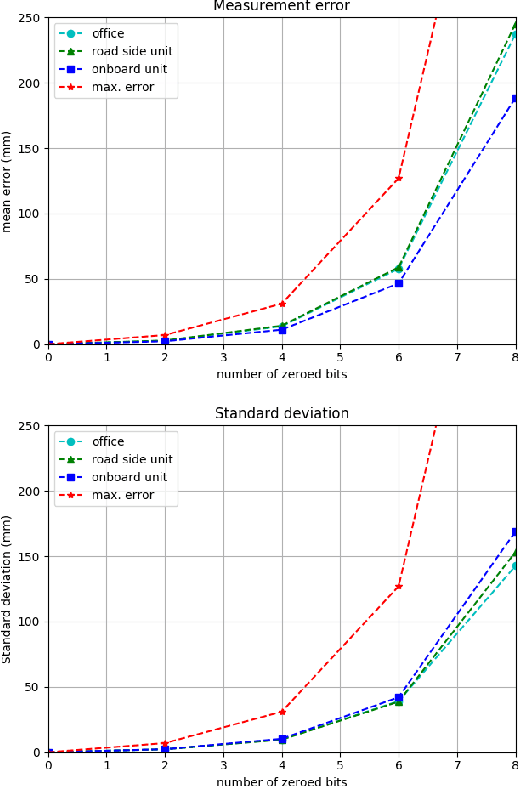

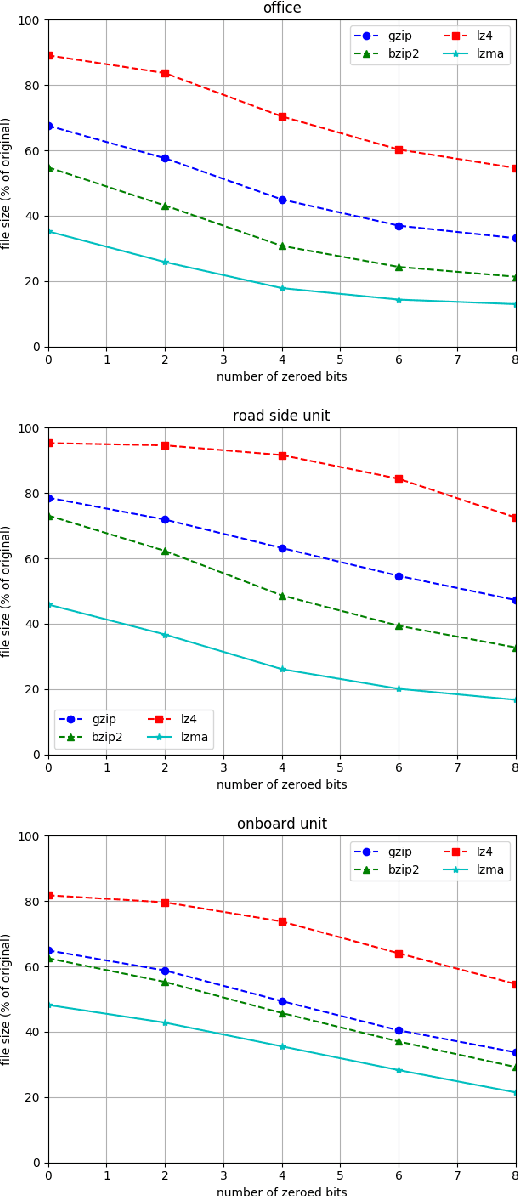

Abstract:LiDAR sensors are increasingly being used in intelligent vehicles (IV) and intelligent transportation systems (ITS). Storage and transmission of the data generated by LiDAR sensors is one of the most challenging aspects of their deployment. In this paper, we present a method that can be used to efficiently compress LiDAR data in order to facilitate storage and transmission in V2V or V2I applications. This method can be used to perform lossless or lossy compression and is specifically designed for embedded applications with low processing power. It is also designed to be easily applicable to existing processing chains by keeping the structure of the data stream intact. We benchmarked our method using several publicly available datasets and compared it with state-of-the-art LiDAR data compression methods from the literature.

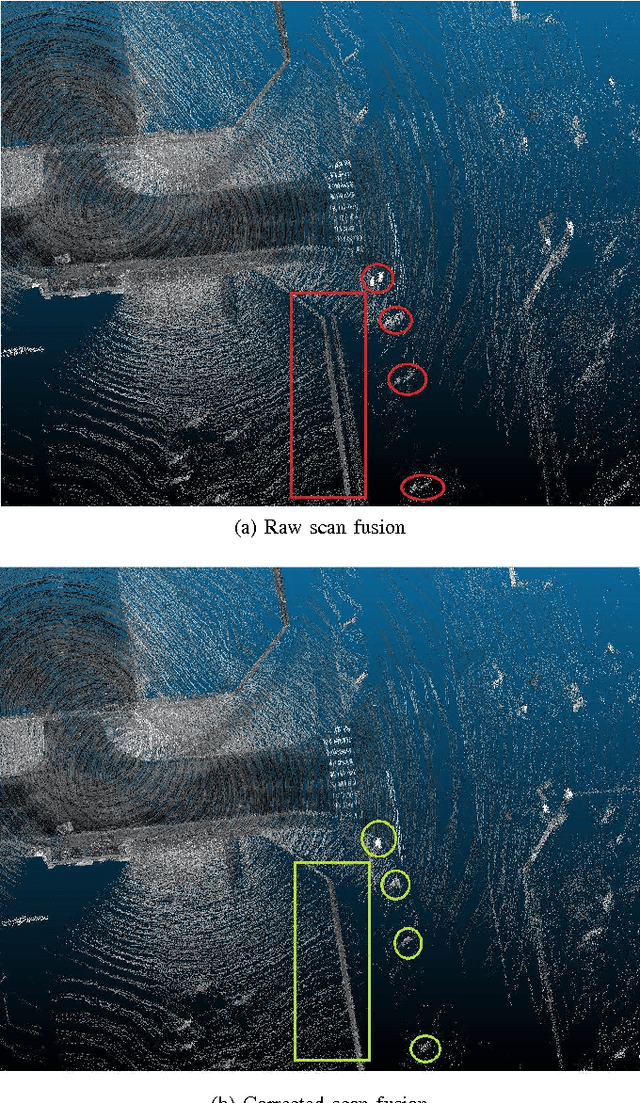

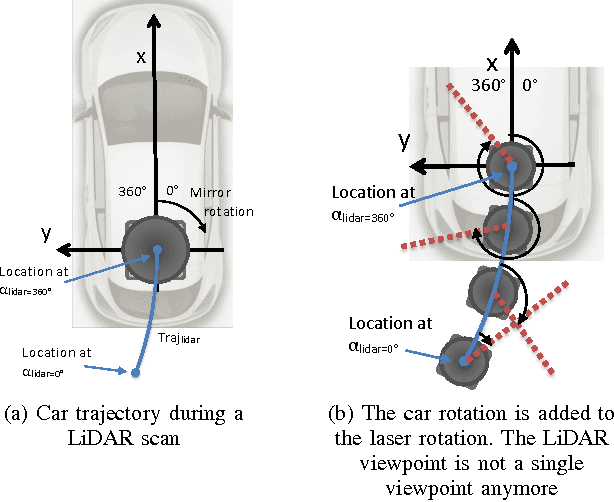

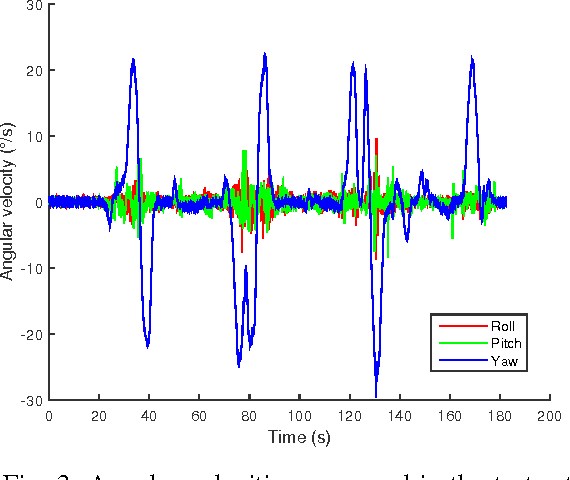

LiDAR point clouds correction acquired from a moving car based on CAN-bus data

Jun 19, 2017

Abstract:In this paper, we investigate the impact of different kinds of car trajectories on LiDAR scans. LiDAR scanning speeds are considerably slower than car speeds, which introduces distortions. We propose a method to overcome this issue, as well as new metrics based on CAN bus data. Our results suggest that the vehicle trajectory should be taken into account when building large-scale 3D maps from a LiDAR mounted on a moving vehicle.
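The distortion described in this abstract can be illustrated with a generic motion-compensation ("de-skewing") sketch. This is not the authors' method, which the abstract does not detail. It assumes planar motion with constant speed and yaw rate during one scan (values of the kind a CAN bus can provide), a per-point timestamp, and a sensor located at the vehicle origin; all function and parameter names are hypothetical.

```python
import math


def vehicle_pose(t, speed, yaw_rate):
    """Pose (x, y, heading) at time t relative to the pose at scan start.

    Assumes constant speed (m/s) and constant yaw rate (rad/s).
    """
    heading = yaw_rate * t
    if abs(yaw_rate) < 1e-9:
        # Straight-line motion.
        return speed * t, 0.0, heading
    radius = speed / yaw_rate
    return (radius * math.sin(heading),
            radius * (1.0 - math.cos(heading)),
            heading)


def deskew(points, speed, yaw_rate):
    """Express every point in the vehicle frame at scan start.

    points: iterable of (x, y, z, t), where (x, y, z) is measured in the
    vehicle frame at capture time and t is seconds since scan start.
    """
    corrected = []
    for x, y, z, t in points:
        px, py, heading = vehicle_pose(t, speed, yaw_rate)
        c, s = math.cos(heading), math.sin(heading)
        # Rotate by the heading change, then add the travelled offset.
        corrected.append((c * x - s * y + px, s * x + c * y + py, z))
    return corrected


# A static object 20 m ahead at scan start, observed 50 ms later while
# driving straight at 10 m/s, is measured only 19.5 m ahead.
straight = deskew([(19.5, 0.0, 0.0, 0.05)], speed=10.0, yaw_rate=0.0)
```

In the straight-line example the corrected point returns to 20 m ahead, the position the object had relative to the vehicle at scan start. A real pipeline would also need the sensor-to-vehicle mounting offset and time-varying speed and yaw rate, both of which this sketch leaves out.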
