Peihan Liu

Privately Fine-Tuned LLMs Preserve Temporal Dynamics in Tabular Data

Feb 02, 2026

Abstract:Research on differentially private synthetic tabular data has largely focused on independent and identically distributed rows where each record corresponds to a unique individual. This perspective neglects the temporal complexity in longitudinal datasets, such as electronic health records, where a user contributes an entire (sub) table of sequential events. While practitioners might attempt to model such data by flattening user histories into high-dimensional vectors for use with standard marginal-based mechanisms, we demonstrate that this strategy is insufficient. Flattening fails to preserve temporal coherence even when it maintains valid marginal distributions. We introduce PATH, a novel generative framework that treats the full table as the unit of synthesis and leverages the autoregressive capabilities of privately fine-tuned large language models. Extensive evaluations show that PATH effectively captures long-range dependencies that traditional methods miss. Empirically, our method reduces the distributional distance to real trajectories by over 60% and reduces state transition errors by nearly 50% compared to leading marginal mechanisms while achieving similar marginal fidelity.

Convex Relaxation for Solving Large-Margin Classifiers in Hyperbolic Space

May 27, 2024

Abstract:Hyperbolic spaces have increasingly been recognized for their outstanding performance in handling data with inherent hierarchical structures compared to their Euclidean counterparts. However, learning in hyperbolic spaces poses significant challenges. In particular, extending support vector machines to hyperbolic spaces is in general a constrained non-convex optimization problem. Previous and popular attempts to solve hyperbolic SVMs, primarily using projected gradient descent, are generally sensitive to hyperparameters and initializations, often leading to suboptimal solutions. In this work, by first rewriting the problem into a polynomial optimization, we apply semidefinite relaxation and sparse moment-sum-of-squares relaxation to effectively approximate the optima. From extensive empirical experiments, these methods are shown to perform better than the projected gradient descent approach.

Safeguarding Data in Multimodal AI: A Differentially Private Approach to CLIP Training

Jun 13, 2023

Abstract:The surge in multimodal AI's success has sparked concerns over data privacy in vision-and-language tasks. While CLIP has revolutionized multimodal learning through joint training on images and text, its potential to unintentionally disclose sensitive information necessitates the integration of privacy-preserving mechanisms. We introduce a differentially private adaptation of the Contrastive Language-Image Pretraining (CLIP) model that effectively addresses privacy concerns while retaining accuracy. Our proposed method, Dp-CLIP, is rigorously evaluated on benchmark datasets encompassing diverse vision-and-language tasks such as image classification and visual question answering. We demonstrate that our approach retains performance on par with the standard non-private CLIP model. Furthermore, we analyze our proposed algorithm under linear representation settings. We derive the convergence rate of our algorithm and show a trade-off between utility and privacy when gradients are clipped per-batch and the loss function does not satisfy smoothness conditions assumed in the literature for the analysis of DP-SGD.

Improving Adversarial Transferability with Scheduled Step Size and Dual Example

Jan 30, 2023

Abstract:Deep neural networks are widely known to be vulnerable to adversarial examples, especially showing significantly poor performance on adversarial examples generated under the white-box setting. However, most white-box attack methods rely heavily on the target model and quickly get stuck in local optima, resulting in poor adversarial transferability. The momentum-based methods and their variants are proposed to escape the local optima for better transferability. In this work, we notice that the transferability of adversarial examples generated by the iterative fast gradient sign method (I-FGSM) exhibits a decreasing trend when increasing the number of iterations. Motivated by this finding, we argue that the information of adversarial perturbations near the benign sample, especially the direction, contributes more to transferability. Thus, we propose a novel strategy, which uses the Scheduled step size and the Dual example (SD), to fully utilize the adversarial information near the benign sample. Our proposed strategy can be easily integrated with existing adversarial attack methods for better adversarial transferability. Empirical evaluations on the standard ImageNet dataset demonstrate that our proposed method can significantly enhance the transferability of existing adversarial attacks.

How Robust is your Fair Model? Exploring the Robustness of Diverse Fairness Strategies

Jul 12, 2022

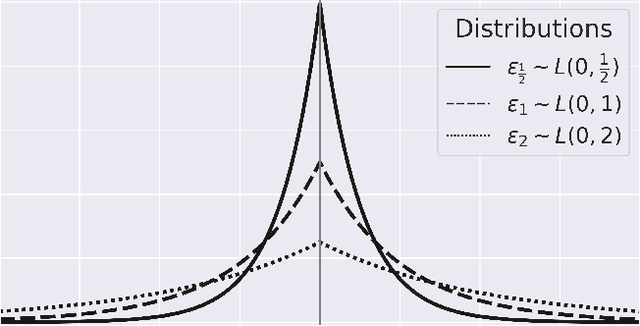

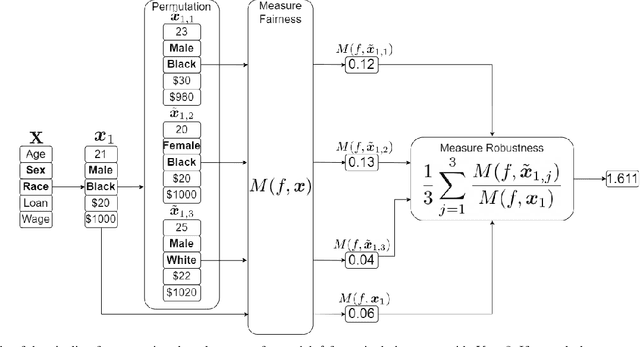

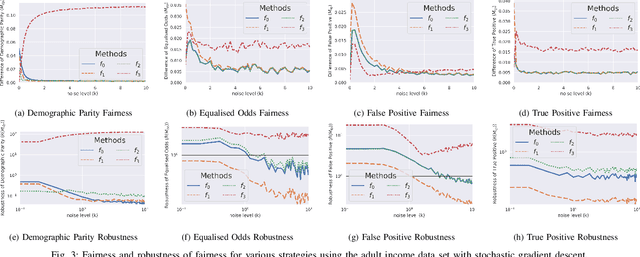

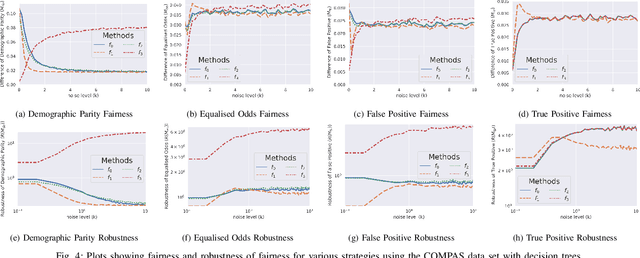

Abstract:With the introduction of machine learning in high-stakes decision making, ensuring algorithmic fairness has become an increasingly important problem to solve. In response to this, many mathematical definitions of fairness have been proposed, and a variety of optimisation techniques have been developed, all designed to maximise a defined notion of fairness. However, fair solutions are reliant on the quality of the training data, and can be highly sensitive to noise. Recent studies have shown that robustness (the ability of a model to perform well on unseen data) plays a significant role in the type of strategy that should be used when approaching a new problem and, hence, measuring the robustness of these strategies has become a fundamental problem. In this work, we therefore propose a new criterion to measure the robustness of various fairness optimisation strategies - the robustness ratio. We conduct multiple extensive experiments on five benchmark fairness data sets using three of the most popular fairness strategies with respect to four of the most popular definitions of fairness. Our experiments empirically show that fairness methods that rely on threshold optimisation are very sensitive to noise in all the evaluated data sets, despite mostly outperforming other methods. This is in contrast to the other two methods, which are less fair for low noise scenarios but fairer for high noise ones. To the best of our knowledge, we are the first to quantitatively evaluate the robustness of fairness optimisation strategies. This can potentially serve as a guideline in choosing the most suitable fairness strategy for various data sets.
