Pan Liu

CHIMERE

A Confidence-Variance Theory for Pseudo-Label Selection in Semi-Supervised Learning

Jan 16, 2026

Abstract:Most pseudo-label selection strategies in semi-supervised learning rely on fixed confidence thresholds, implicitly assuming that prediction confidence reliably indicates correctness. In practice, deep networks are often overconfident: high-confidence predictions can still be wrong, while informative low-confidence samples near decision boundaries are discarded. This paper introduces a Confidence-Variance (CoVar) theory framework that provides a principled joint reliability criterion for pseudo-label selection. Starting from the entropy minimization principle, we derive a reliability measure that combines maximum confidence (MC) with residual-class variance (RCV), which characterizes how probability mass is distributed over non-maximum classes. The derivation shows that reliable pseudo-labels should have both high MC and low RCV, and that the influence of RCV increases as confidence grows, thereby correcting overconfident but unstable predictions. From this perspective, we cast pseudo-label selection as a spectral relaxation problem that maximizes separability in a confidence-variance feature space, and design a threshold-free selection mechanism to distinguish high- from low-reliability predictions. We integrate CoVar as a plug-in module into representative semi-supervised semantic segmentation and image classification methods. Across PASCAL VOC 2012, Cityscapes, CIFAR-10, and Mini-ImageNet with varying label ratios and backbones, it consistently improves over strong baselines, indicating that combining confidence with residual-class variance provides a more reliable basis for pseudo-label selection than fixed confidence thresholds. (Code: https://github.com/ljs11528/CoVar_Pseudo_Label_Selection.git)
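To make the two quantities in this abstract concrete, the following is a minimal sketch, not the paper's implementation. It assumes RCV is the plain population variance of the non-maximum class probabilities; the abstract does not give the exact formula, so that definition, the function name `confidence_and_residual_variance`, and the example vectors are all illustrative assumptions.

```python
from statistics import pvariance


def confidence_and_residual_variance(probs):
    """Return (MC, RCV) for one softmax probability vector.

    MC is the largest class probability. RCV is computed here as the
    population variance of the remaining probabilities, which is an
    assumed definition used only for illustration.
    """
    if len(probs) < 3:
        raise ValueError("need at least three classes for a residual variance")
    top = max(range(len(probs)), key=probs.__getitem__)
    residual = [p for i, p in enumerate(probs) if i != top]
    return probs[top], pvariance(residual)


# Two hypothetical predictions with the same confidence but different
# distributions of the leftover probability mass.
even = [0.70, 0.10, 0.10, 0.10]    # leftover mass spread evenly
peaked = [0.70, 0.28, 0.01, 0.01]  # leftover mass concentrated on one rival class

mc_even, rcv_even = confidence_and_residual_variance(even)
mc_peaked, rcv_peaked = confidence_and_residual_variance(peaked)
```

Under this reading, a fixed confidence threshold treats both predictions identically, while the second one has a larger RCV and would be ranked as less reliable by a criterion that prefers high MC and low RCV.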

How vehicles change lanes after encountering crashes: Empirical analysis and modeling

Jan 13, 2026

Abstract:When a traffic crash occurs, following vehicles need to change lanes to bypass the obstruction. We define these maneuvers as post-crash lane changes (LCs). In such scenarios, vehicles in the target lane may refuse to yield even after the lane change has already begun, increasing the complexity and crash risk of post-crash LCs. However, the behavioral characteristics and motion patterns of post-crash LCs remain unknown. To address this gap, we construct a post-crash LC dataset by extracting vehicle trajectories from drone videos captured after crashes. Our empirical analysis reveals that, compared to mandatory LCs (MLCs) and discretionary LCs (DLCs), post-crash LCs exhibit longer durations, lower insertion speeds, and higher crash risks. Notably, 79.4% of post-crash LCs involve at least one instance of non-yielding behavior from the new follower, compared to 21.7% for DLCs and 28.6% for MLCs. Building on these findings, we develop a novel trajectory prediction framework for post-crash LCs. At its core is a graph-based attention module that explicitly models yielding behavior as an auxiliary interaction-aware task. This module is designed to guide both a conditional variational autoencoder and a Transformer-based decoder to predict the lane changer's trajectory. By incorporating the interaction-aware module, our model outperforms existing baselines in trajectory prediction performance by more than 10% in both average displacement error and final displacement error across different prediction horizons. Moreover, our model provides more reliable crash risk analysis by reducing false crash rates and improving conflict prediction accuracy. Finally, we validate the model's transferability using additional post-crash LC datasets collected from different sites.
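The two metrics named in this abstract have standard definitions: average displacement error (ADE) is the mean Euclidean distance between predicted and actual positions over the prediction horizon, and final displacement error (FDE) is that distance at the last time step. The sketch below illustrates them on made-up coordinates; the function name `displacement_errors` and the sample trajectories are hypothetical and not taken from the paper.

```python
import math


def displacement_errors(predicted, actual):
    """Return (ADE, FDE) for two equal-length lists of (x, y) positions."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("trajectories must be non-empty and equal in length")
    # Per-step Euclidean distance between prediction and ground truth.
    dists = [math.dist(p, a) for p, a in zip(predicted, actual)]
    return sum(dists) / len(dists), dists[-1]


# Hypothetical three-step trajectories (metres): the prediction drifts
# sideways by 0.3 m per step while the actual vehicle stays in its lane.
actual = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
predicted = [(0.0, 0.0), (1.0, 0.3), (2.0, 0.6)]

ade, fde = displacement_errors(predicted, actual)
```

Because FDE looks only at the final step, it penalizes accumulated drift more heavily than ADE, which is why the two are usually reported together.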

Software-Hardware Co-optimization for Modular E2E AV Paradigm: A Unified Framework of Optimization Approaches, Simulation Environment and Evaluation Metrics

Jan 12, 2026

Abstract:Modular end-to-end (ME2E) autonomous driving paradigms combine modular interpretability with global optimization capability and have demonstrated strong performance. However, existing studies mainly focus on accuracy improvement, while critical system-level factors such as inference latency and energy consumption are often overlooked, resulting in increasingly complex model designs that hinder practical deployment. Prior efforts on model compression and acceleration typically optimize either the software or hardware side in isolation. Software-only optimization cannot fundamentally remove intermediate tensor access and operator scheduling overheads, whereas hardware-only optimization is constrained by model structure and precision. As a result, the real-world benefits of such optimizations are often limited. To address these challenges, this paper proposes a reusable software and hardware co-optimization and closed-loop evaluation framework for ME2E autonomous driving inference. The framework jointly integrates software-level model optimization with hardware-level computation optimization under a unified system-level objective. In addition, a multidimensional evaluation metric is introduced to assess system performance by jointly considering safety, comfort, efficiency, latency, and energy, enabling quantitative comparison of different optimization strategies. Experiments across multiple ME2E autonomous driving stacks show that the proposed framework preserves baseline-level driving performance while significantly reducing inference latency and energy consumption, achieving substantial overall system-level improvements. These results demonstrate that the proposed framework provides practical and actionable guidance for efficient deployment of ME2E autonomous driving systems.

EDA-RoF: Elastic Digital-Analog Radio-Over-Fiber (RoF) Modulation and Demodulation Architecture Enabling Seamless Transition Between Analog RoF and Digital RoF

Dec 23, 2025

Abstract:We propose and demonstrate an elastic digital-analog radio-over-fiber (RoF) modulation and demodulation architecture that seamlessly bridges A-RoF and D-RoF solutions and achieves quasilinear SNR scaling with respect to 1/η, as evidenced by R^2 = 0.9908.

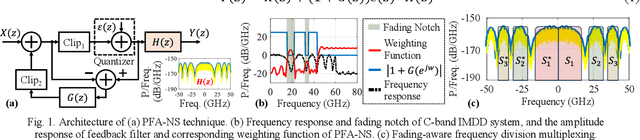

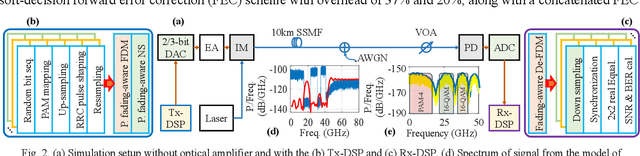

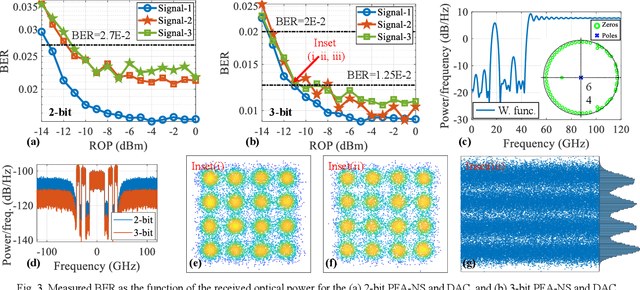

PFA-NS: Power-Fading-Aware Noise Shaping Enabled C-Band IMDD System with Low Resolution DAC

Dec 23, 2025

Abstract:We propose and demonstrate a power-fading-aware noise-shaping technique for C-band IMDD systems with a low-resolution DAC. The technique shapes and concentrates quantization noise within the fading-induced notch areas, yielding a 94% improvement in data rate over its traditional counterpart.

A Trajectory-free Crash Detection Framework with Generative Approach and Segment Map Diffusion

Nov 17, 2025

Abstract:Real-time crash detection is essential for developing proactive safety management strategies and enhancing overall traffic efficiency. To address the limitations associated with trajectory acquisition and vehicle tracking, road segment maps recording individual-level traffic dynamics are used directly for crash detection. A novel two-stage trajectory-free crash detection framework is presented to generate a plausible future road segment map and identify crashes. The first-stage diffusion-based segment map generation model, Mapfusion, conducts a noisy-to-normal process built on a forward process that progressively adds noise to the road segment map until the map is corrupted to pure Gaussian noise. The denoising process is guided by sequential embedding components that capture the temporal dynamics of segment map sequences. Furthermore, the generation model incorporates background context through ControlNet to enhance generation control. In the second stage, crash detection is achieved by comparing the monitored segment map with the maps generated by the diffusion model. Trained on non-crash vehicle motion data, Mapfusion successfully generates realistic road segment evolution maps based on learned motion patterns and remains robust across different sampling intervals. Experiments on real-world crashes indicate that the proposed two-stage method detects crashes accurately.

CheXWorld: Exploring Image World Modeling for Radiograph Representation Learning

Apr 18, 2025

Abstract:Humans can develop internal world models that encode common sense knowledge, telling them how the world works and predicting the consequences of their actions. This concept has emerged as a promising direction for establishing general-purpose machine-learning models in recent preliminary works, e.g., for visual representation learning. In this paper, we present CheXWorld, the first effort towards a self-supervised world model for radiographic images. Specifically, our work develops a unified framework that simultaneously models three aspects of medical knowledge essential for qualified radiologists, including 1) local anatomical structures describing the fine-grained characteristics of local tissues (e.g., architectures, shapes, and textures); 2) global anatomical layouts describing the global organization of the human body (e.g., layouts of organs and skeletons); and 3) domain variations that encourage CheXWorld to model the transitions across different appearance domains of radiographs (e.g., varying clarity, contrast, and exposure caused by collecting radiographs from different hospitals, devices, or patients). Empirically, we design tailored qualitative and quantitative analyses, revealing that CheXWorld successfully captures these three dimensions of medical knowledge. Furthermore, transfer learning experiments across eight medical image classification and segmentation benchmarks showcase that CheXWorld significantly outperforms existing SSL methods and large-scale medical foundation models. Code & pre-trained models are available at https://github.com/LeapLabTHU/CheXWorld.

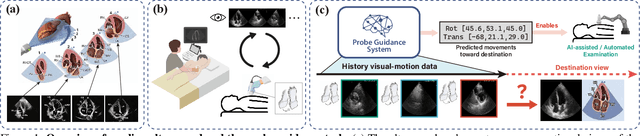

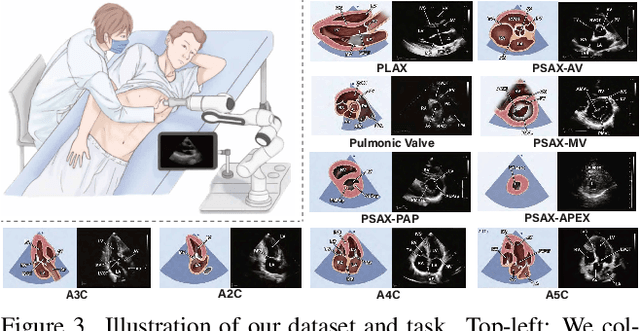

EchoWorld: Learning Motion-Aware World Models for Echocardiography Probe Guidance

Apr 17, 2025

Abstract:Echocardiography is crucial for cardiovascular disease detection but relies heavily on experienced sonographers. Echocardiography probe guidance systems, which provide real-time movement instructions for acquiring standard plane images, offer a promising solution for AI-assisted or fully autonomous scanning. However, developing effective machine learning models for this task remains challenging, as they must grasp heart anatomy and the intricate interplay between probe motion and visual signals. To address this, we present EchoWorld, a motion-aware world modeling framework for probe guidance that encodes anatomical knowledge and motion-induced visual dynamics, while effectively leveraging past visual-motion sequences to enhance guidance precision. EchoWorld employs a pre-training strategy inspired by world modeling principles, where the model predicts masked anatomical regions and simulates the visual outcomes of probe adjustments. Built upon this pre-trained model, we introduce a motion-aware attention mechanism in the fine-tuning stage that effectively integrates historical visual-motion data, enabling precise and adaptive probe guidance. Trained on more than one million ultrasound images from over 200 routine scans, EchoWorld effectively captures key echocardiographic knowledge, as validated by qualitative analysis. Moreover, our method significantly reduces guidance errors compared to existing visual backbones and guidance frameworks, excelling in both single-frame and sequential evaluation protocols. Code is available at https://github.com/LeapLabTHU/EchoWorld.

Non-Destructive Detection of Sub-Micron Imperceptible Scratches On Laser Chips Based On Consistent Texture Entropy Recursive Optimization Semi-Supervised Network

Mar 17, 2025

Abstract:Laser chips, the core components of semiconductor lasers, are extensively utilized in various industries and show great potential for future applications. Smooth emitting surfaces are crucial in chip production, as even imperceptible scratches can significantly degrade performance and lifespan, thus impeding production efficiency and yield. Therefore, non-destructively detecting these imperceptible scratches on the emitting surfaces is essential for enhancing yield and reducing costs. These sub-micron scratches, barely visible against the background, are extremely difficult to detect with conventional methods, a difficulty compounded by the lack of labeled datasets. To address this challenge, this paper introduces TexRecNet, a consistent texture entropy recursive optimization semi-supervised network. The network, based on a recursive optimization architecture, iteratively improves the detection accuracy of imperceptible scratch edges, using outputs from previous cycles to inform subsequent inputs and guide the network's positional encoding. It also introduces image texture entropy, utilizing a substantial amount of unlabeled data to expand the training set while maintaining training signal reliability. Finally, by analyzing the inconsistency of the network output sequences obtained during the recursive process, a semi-supervised training strategy with recursive consistency constraints is proposed, which uses outputs from the recursive process for non-destructive signal augmentation and consistently optimizes the loss function for efficient end-to-end training. Experimental results show that this method, utilizing a substantial amount of unlabeled data, achieves 75.6% accuracy and 74.8% recall in detecting imperceptible scratches, improvements of 8.5% and 33.6%, respectively, over a conventional U-Net, enhancing quality control in laser chips.

NsBM-GAT: A Non-stationary Block Maximum and Graph Attention Framework for General Traffic Crash Risk Prediction

Mar 06, 2025

Abstract:Accurate prediction of traffic crash risks for individual vehicles is essential for enhancing vehicle safety. While significant attention has been given to traffic crash risk prediction, existing studies face two main challenges. First, due to the scarcity of individual vehicle data before crashes, most models rely on hypothetical scenarios deemed dangerous by researchers. This raises doubts about their applicability to actual pre-crash conditions. Second, some crash risk prediction frameworks were learned from dashcam videos. Although such videos capture the pre-crash behavior of individual vehicles, they often lack critical information about the movements of surrounding vehicles, even though the interaction between a vehicle and its surrounding vehicles is highly influential in crash occurrences. To overcome these challenges, we propose a novel non-stationary extreme value theory (EVT) model in which the covariate function is optimized in a nonlinear fashion using a graph attention network. The EVT component captures the stochastic nature of crashes through a probability distribution, which enhances model interpretability. Notably, the nonlinear covariate function enables the model to capture the interactive behavior between the target vehicle and its multiple surrounding vehicles, facilitating crash risk prediction across different driving tasks. We train and test our model using 100 sets of vehicle trajectory data before real crashes, collected via drones over three years from merging and weaving segments. We demonstrate that our model successfully learns micro-level precursors of crashes and fits a more accurate distribution with the aid of the nonlinear covariate function. Our experiments on the testing dataset show that the proposed model outperforms existing models by providing more accurate predictions for both rear-end and sideswipe crashes simultaneously.
