Nikolaos Pappas

University of Washington

Backward Compatibility During Data Updates by Weight Interpolation

Jan 25, 2023

Abstract: Backward compatibility of model predictions is a desired property when updating a machine learning driven application. It allows the underlying model to be improved seamlessly without introducing regression bugs. In classification tasks these bugs occur in the form of negative flips: an instance that was correctly classified by the old model is classified incorrectly by the updated model. This has a direct negative impact on the user experience of such systems, e.g., a frequently used voice assistant query is suddenly misclassified. A common reason to update the model is when new training data becomes available and needs to be incorporated. Simply retraining the model with the updated data introduces the unwanted negative flips. We study the problem of regression during data updates and propose Backward Compatible Weight Interpolation (BCWI). This method interpolates between the weights of the old and new model, and we show in extensive experiments that it reduces negative flips without sacrificing the improved accuracy of the new model. BCWI is straightforward to implement and does not increase inference cost. We also explore the use of importance weighting during interpolation and averaging the weights of multiple new models in order to further reduce negative flips.
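As a rough illustration of the two ideas in this abstract, the sketch below interpolates two sets of weights and counts negative flips. It is a minimal sketch, not the authors' implementation: the names `interpolate_weights`, `negative_flip_rate`, and `alpha`, the convention that `alpha` weights the old model, the plain-dictionary weight format, and the choice to normalize flips by the total number of examples are all assumptions made for illustration.

```python
from typing import Dict, List, Sequence

# Hypothetical weight format: parameter name -> flat list of floats.
Weights = Dict[str, List[float]]


def interpolate_weights(old: Weights, new: Weights, alpha: float) -> Weights:
    """Return alpha * old + (1 - alpha) * new for every parameter.

    Assumption: alpha = 1 recovers the old model and alpha = 0 the new one.
    """
    if old.keys() != new.keys():
        raise ValueError("old and new models must share parameter names")
    return {
        name: [alpha * o + (1.0 - alpha) * n for o, n in zip(old[name], new[name])]
        for name in old
    }


def negative_flip_rate(
    labels: Sequence[int], old_preds: Sequence[int], new_preds: Sequence[int]
) -> float:
    """Fraction of examples the old model got right and the new model gets wrong.

    Assumption: the count is normalized by the total number of examples.
    """
    flips = sum(
        1 for y, o, n in zip(labels, old_preds, new_preds) if o == y and n != y
    )
    return flips / len(labels)


if __name__ == "__main__":
    old_w = {"w": [0.0, 2.0]}
    new_w = {"w": [1.0, 4.0]}
    print(interpolate_weights(old_w, new_w, alpha=0.5))
    print(negative_flip_rate([1, 0, 1, 1], [1, 0, 0, 1], [0, 0, 1, 1]))
```

Because the interpolated model has the same shape as either endpoint, it is served exactly like a single model, which is consistent with the abstract's remark that inference cost does not increase.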

Dialog2API: Task-Oriented Dialogue with API Description and Example Programs

Dec 20, 2022

Abstract: Functionality and dialogue experience are two important factors of task-oriented dialogue systems. Conventional approaches with closed schema (e.g., conversational semantic parsing) often fail as both the functionality and dialogue experience are strongly constrained by the underlying schema. We introduce a new paradigm for task-oriented dialogue - Dialog2API - to greatly expand the functionality and provide a seamless dialogue experience. The conversational model interacts with the environment by generating and executing programs triggering a set of pre-defined APIs. The model also manages the dialogue policy and interacts with the user by generating appropriate natural language responses. By allowing the generation of free-form programs, Dialog2API supports composite goals by combining different APIs, whereas unrestricted program revision provides a natural and robust dialogue experience. To facilitate Dialog2API, the core model is provided with API documents, an execution environment and optionally some example dialogues annotated with programs. We propose an approach tailored for Dialog2API, where the dialogue states are represented by a stack of programs, with the most recently mentioned program on the top of the stack. Dialog2API can work with many application scenarios such as software automation and customer service. In this paper, we construct a dataset for AWS S3 APIs and present evaluation results of in-context learning baselines.

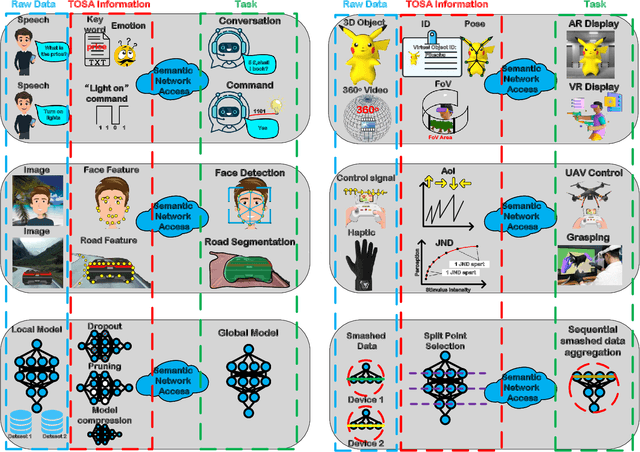

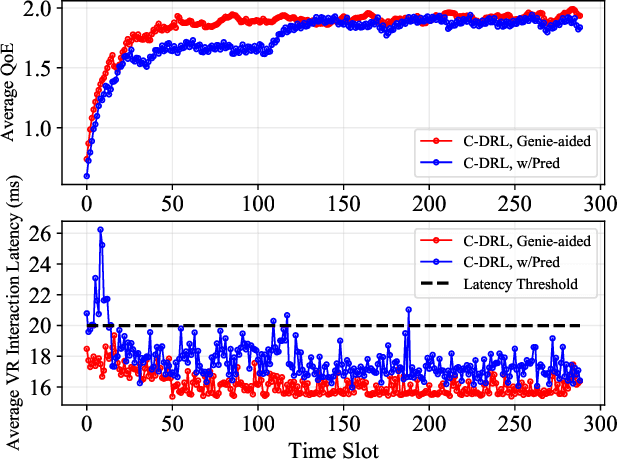

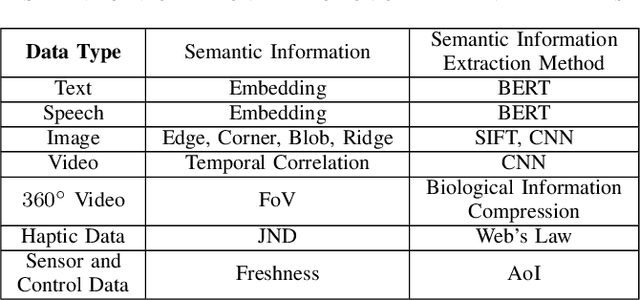

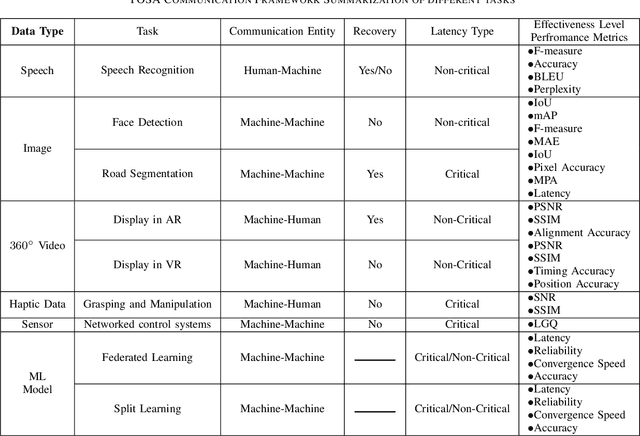

Task-Oriented and Semantics-Aware 6G Networks

Oct 17, 2022

Abstract: Upon the arrival of emerging devices, including Extended Reality (XR) and Unmanned Aerial Vehicles (UAVs), the traditional bit-oriented communication framework is approaching Shannon's physical capacity limit and fails to guarantee the massive amount of transmission within latency requirements. By jointly exploiting the context of data and its importance to the task, an emerging communication paradigm shift to the semantic level and the effectiveness level is envisioned to be a key revolution in Sixth Generation (6G) networks. However, an explicit and systematic communication framework incorporating both the semantic level and the effectiveness level has not been proposed yet. In this article, we propose a generic task-oriented and semantics-aware (TOSA) communication framework for various tasks with diverse data types, which incorporates both semantic level information and effectiveness level performance metrics. We first analyze the unique characteristics of all data types, and summarise the semantic information, along with corresponding extraction methods. We then propose a detailed TOSA communication framework for different time critical and non-critical tasks. In the TOSA framework, we present the TOSA information, extraction methods, recovery methods, and effectiveness level performance metrics. Last but not least, we present a TOSA framework tailored for Virtual Reality (VR) data with interactive VR tasks to validate the effectiveness of the proposed TOSA communication framework.

Modeling Context With Linear Attention for Scalable Document-Level Translation

Oct 16, 2022

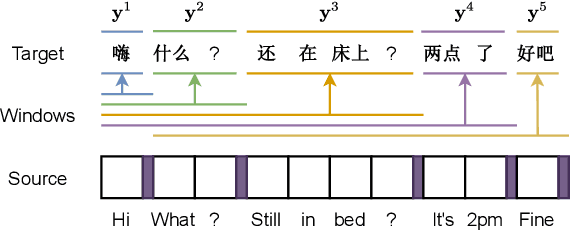

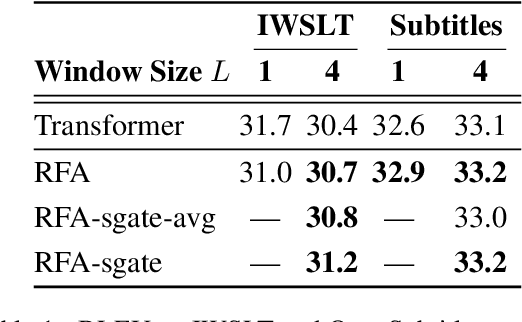

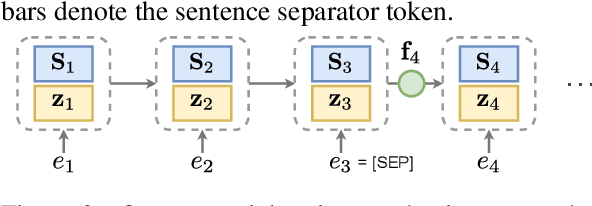

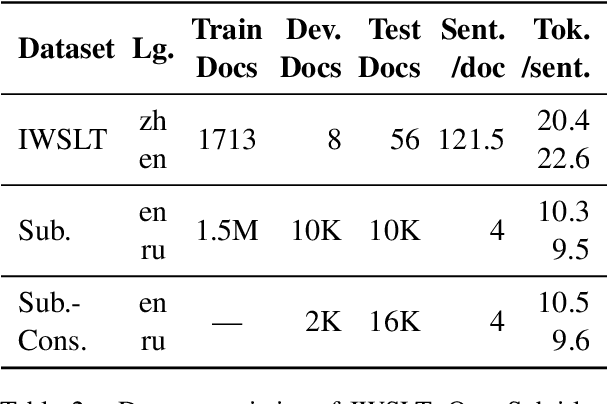

Abstract: Document-level machine translation leverages inter-sentence dependencies to produce more coherent and consistent translations. However, these models, predominantly based on transformers, are difficult to scale to long documents as their attention layers have quadratic complexity in the sequence length. Recent efforts on efficient attention improve scalability, but their effect on document translation remains unexplored. In this work, we investigate the efficacy of a recent linear attention model by Peng et al. (2021) on document translation and augment it with a sentential gate to promote a recency inductive bias. We evaluate the model on IWSLT 2015 and OpenSubtitles 2018 against the transformer, demonstrating substantially increased decoding speed on long sequences with similar or better BLEU scores. We show that sentential gating further improves translation quality on IWSLT.

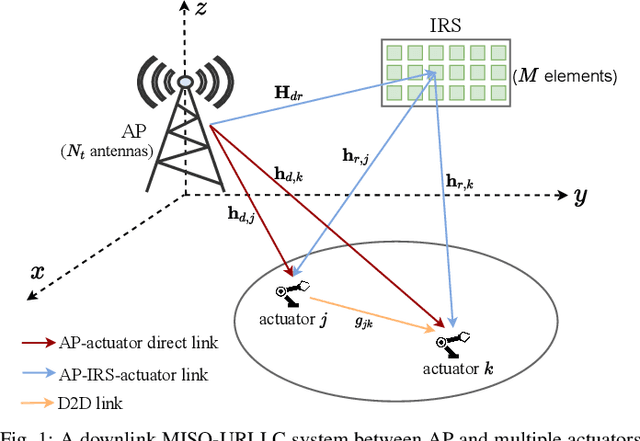

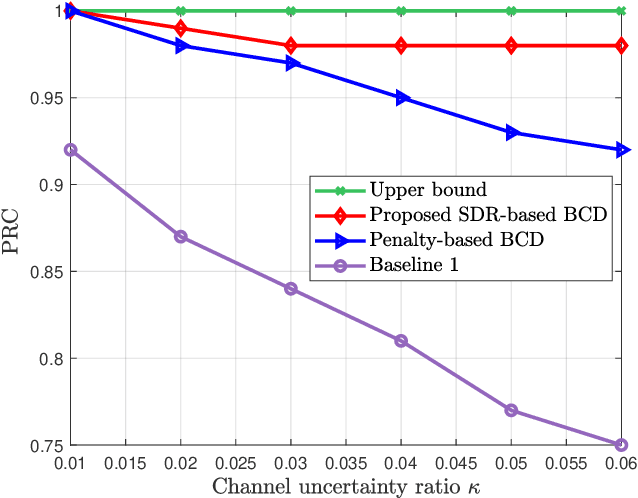

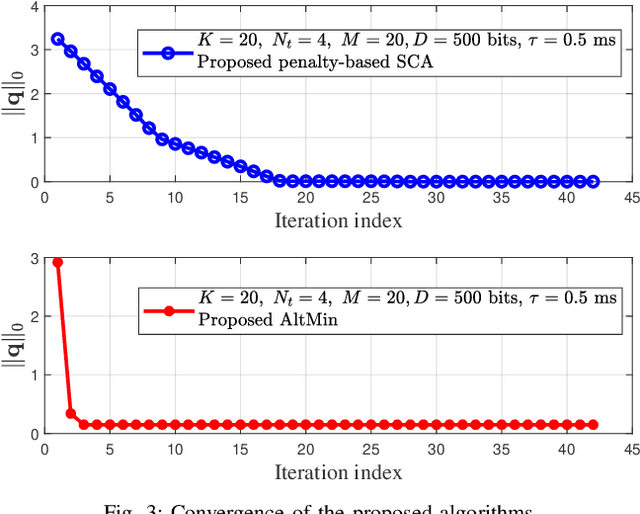

Robust Beamforming Design for IRS-Aided URLLC in D2D Networks

Jul 11, 2022

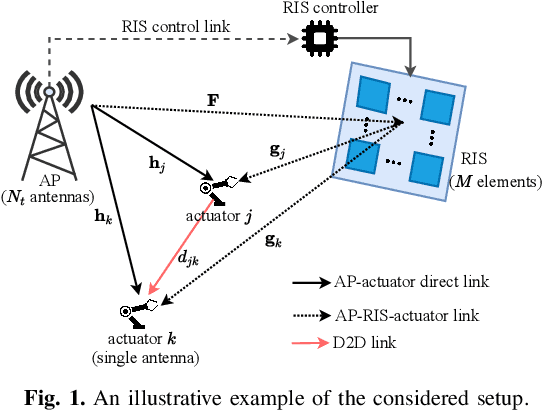

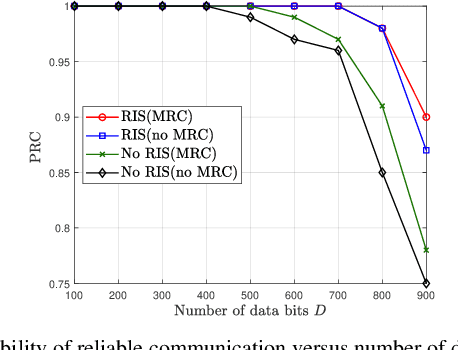

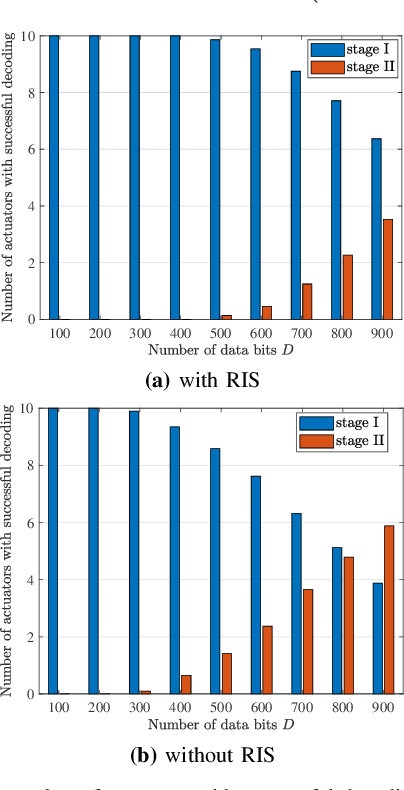

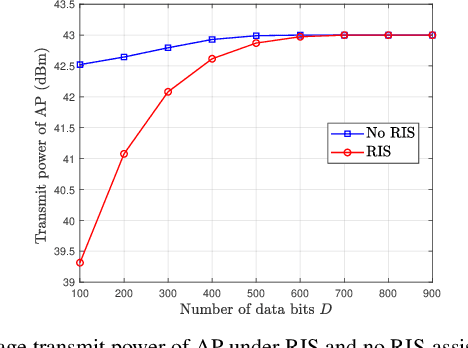

Abstract: Intelligent reflecting surface (IRS) and device-to-device (D2D) communication are two promising technologies for improving transmission reliability between transceivers in communication systems. In this paper, we consider the design of reliable communication between the access point (AP) and actuators for a downlink multiuser multiple-input single-output (MISO) system in the industrial IoT (IIoT) scenario. We propose a two-stage protocol combining IRS with D2D communication so that all actuators can successfully receive the message from the AP within a given delay. The advantage of the protocol is that the communication reliability between the AP and actuators is doubly augmented by the IRS-aided first-stage transmission and the second-stage D2D transmission. A joint optimization problem of active and passive beamforming is formulated, which aims to maximize the number of actuators with successful decoding. We study the joint beamforming problem for cases where the channel state information (CSI) is perfect and imperfect. For each case, we develop efficient algorithms that include convergence and complexity analysis. Simulation results demonstrate the necessity and role of IRS with a well-optimized reflection matrix, and the D2D network in promoting reliable communication. Moreover, the proposed protocol can enable reliable communication even in the presence of stringent latency requirements and CSI estimation errors.

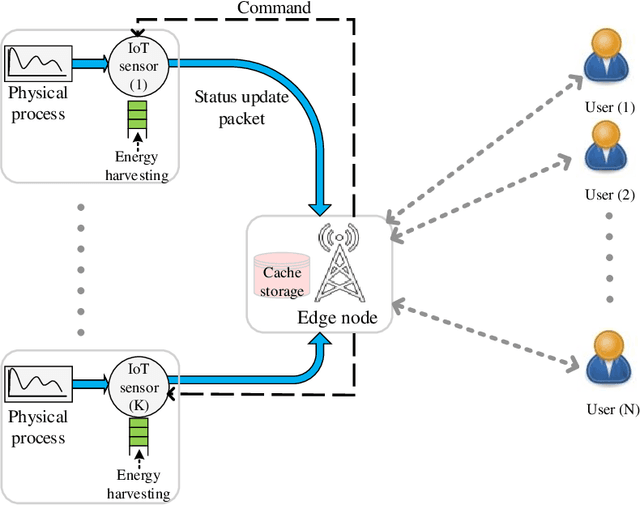

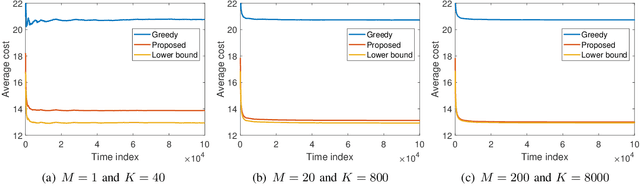

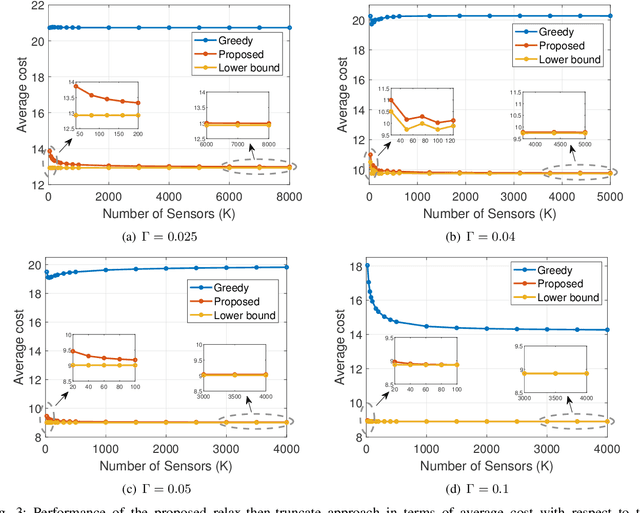

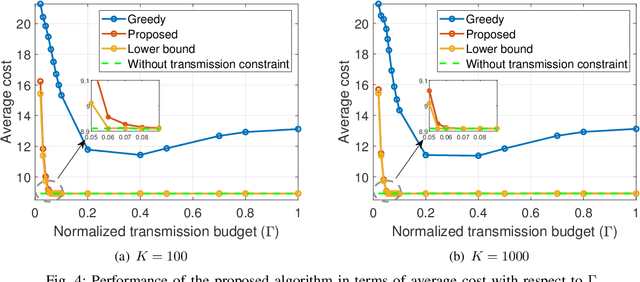

On-Demand AoI Minimization in Resource-Constrained Cache-Enabled IoT Networks with Energy Harvesting Sensors

Jan 28, 2022

Abstract: We consider a resource-constrained IoT network, where multiple users make on-demand requests to a cache-enabled edge node to send status updates about various random processes, each monitored by an energy harvesting sensor. The edge node serves users' requests by deciding whether to command the corresponding sensor to send a fresh status update or retrieve the most recently received measurement from the cache. Our objective is to find the best actions of the edge node to minimize the average age of information (AoI) of the received measurements upon request, i.e., average on-demand AoI, subject to per-slot transmission and energy constraints. First, we derive a Markov decision process model and propose an iterative algorithm that obtains an optimal policy. Then, we develop an asymptotically optimal low-complexity algorithm -- termed relax-then-truncate -- and prove that it is optimal as the number of sensors goes to infinity. Simulation results illustrate that the proposed relax-then-truncate approach significantly reduces the average on-demand AoI compared to a request-aware greedy (myopic) policy and show that it performs close to the optimal solution even for moderate numbers of sensors.

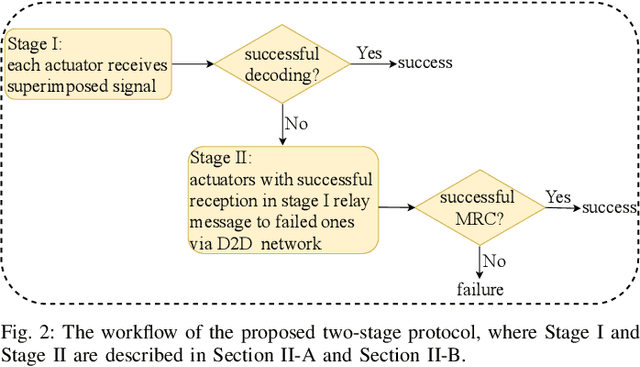

RIS-aided D2D Communication Design for URLLC Packet Delivery

Nov 26, 2021

Abstract: In this paper, we consider a smart factory scenario where a set of actuators receive critical control signals from an access point (AP) with reliability and low latency requirements. We jointly investigate active beamforming at the AP and passive phase shifting at the reconfigurable intelligent surface (RIS) for successfully delivering the control signals from the AP to the actuators within a required time duration. The transmission follows a two-stage design. In the first stage, each actuator can receive both the direct signal from the AP and the reflected signal from the RIS. In the second stage, the actuators with successful reception in the first stage relay the message through the D2D network to the actuators with failed receptions. We formulate a non-convex optimization problem where we first obtain an equivalent but more tractable form by addressing the problem with discrete indicator functions. Then, a Frobenius inner product based equality is applied for decoupling the optimization variables. Further, we adopt a penalty-based approach to resolve the rank-one constraints. Finally, we deal with the $\ell_0$-norm by $\ell_1$-norm approximation and add an extra term $\ell_1-\ell_2$ for sparsity. Numerical results reveal that the proposed two-stage RIS-aided D2D communication protocol is effective for enabling reliable communication with latency requirements.

ABC: Attention with Bounded-memory Control

Oct 06, 2021

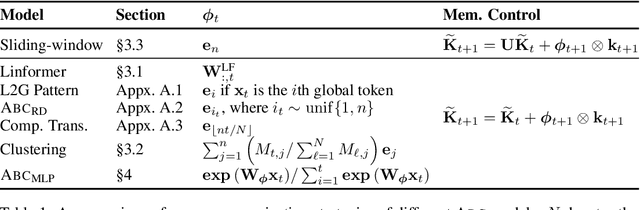

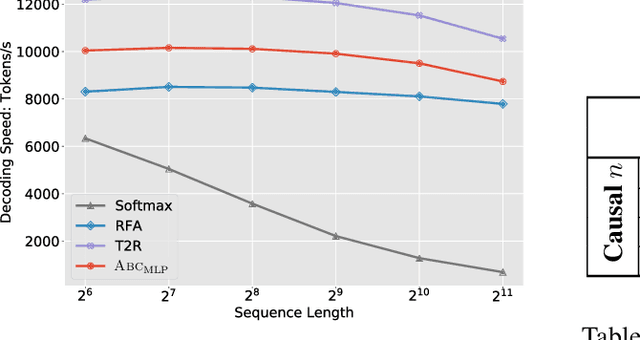

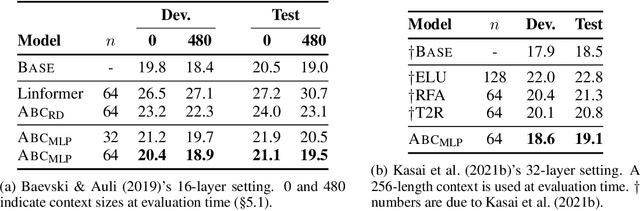

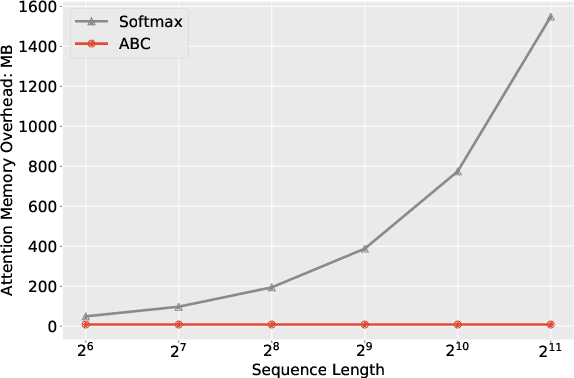

Abstract: Transformer architectures have achieved state-of-the-art results on a variety of sequence modeling tasks. However, their attention mechanism comes with a quadratic complexity in sequence lengths, making the computational overhead prohibitive, especially for long sequences. Attention context can be seen as a random-access memory with each token taking a slot. Under this perspective, the memory size grows linearly with the sequence length, and so does the overhead of reading from it. One way to improve the efficiency is to bound the memory size. We show that disparate approaches can be subsumed into one abstraction, attention with bounded-memory control (ABC), and they vary in their organization of the memory. ABC reveals new, unexplored possibilities. First, it connects several efficient attention variants that would otherwise seem apart. Second, this abstraction gives new insights--an established approach (Wang et al., 2020b) previously thought not to be applicable in causal attention actually is. Last, we present a new instance of ABC, which draws inspiration from existing ABC approaches, but replaces their heuristic memory-organizing functions with a learned, contextualized one. Our experiments on language modeling, machine translation, and masked language model finetuning show that our approach outperforms previous efficient attention models; compared to the strong transformer baselines, it significantly improves the inference time and space efficiency with no or negligible accuracy loss.

Sentence Bottleneck Autoencoders from Transformer Language Models

Aug 31, 2021

Abstract: Representation learning for text via pretraining a language model on a large corpus has become a standard starting point for building NLP systems. This approach stands in contrast to autoencoders, also trained on raw text, but with the objective of learning to encode each input as a vector that allows full reconstruction. Autoencoders are attractive because of their latent space structure and generative properties. We therefore explore the construction of a sentence-level autoencoder from a pretrained, frozen transformer language model. We adapt the masked language modeling objective as a generative, denoising one, while only training a sentence bottleneck and a single-layer modified transformer decoder. We demonstrate that the sentence representations discovered by our model achieve better quality than previous methods that extract representations from pretrained transformers on text similarity tasks, style transfer (an example of controlled generation), and single-sentence classification tasks in the GLUE benchmark, while using fewer parameters than large pretrained models.

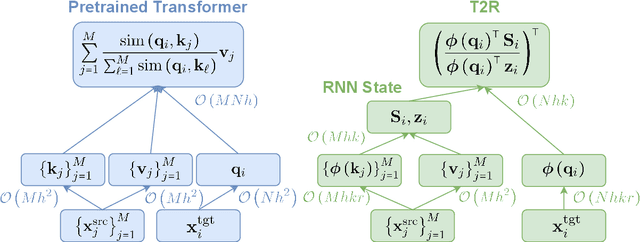

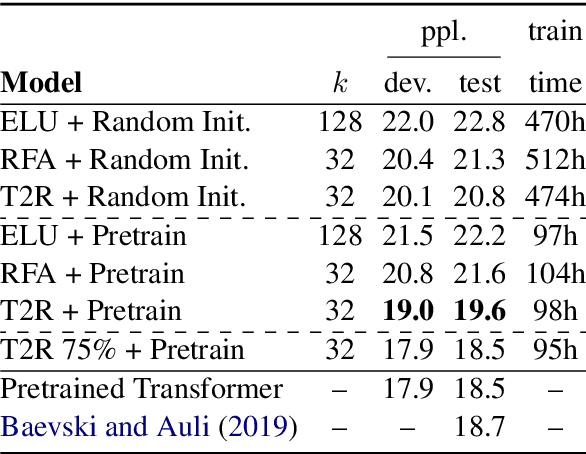

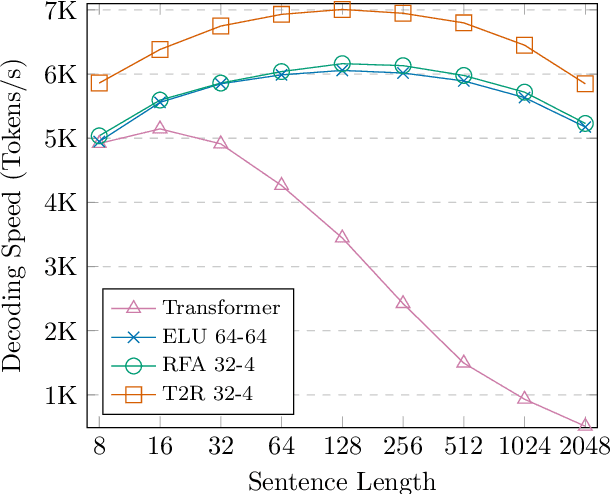

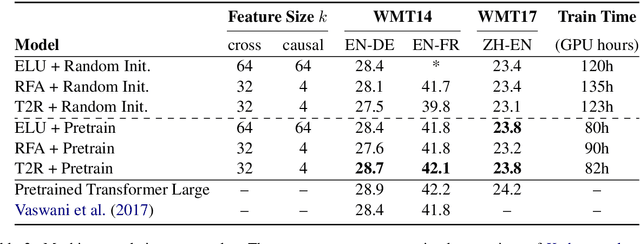

Finetuning Pretrained Transformers into RNNs

Mar 24, 2021

Abstract: Transformers have outperformed recurrent neural networks (RNNs) in natural language generation. This comes with a significant computational overhead, as the attention mechanism scales with a quadratic complexity in sequence length. Efficient transformer variants have received increasing interest in recent work. Among them, a linear-complexity recurrent variant has proven well suited for autoregressive generation. It approximates the softmax attention with randomized or heuristic feature maps, but can be difficult to train or yield suboptimal accuracy. This work aims to convert a pretrained transformer into its efficient recurrent counterpart, improving the efficiency while retaining the accuracy. Specifically, we propose a swap-then-finetune procedure: in an off-the-shelf pretrained transformer, we replace the softmax attention with its linear-complexity recurrent alternative and then finetune. With a learned feature map, our approach provides an improved tradeoff between efficiency and accuracy over the standard transformer and other recurrent variants. We also show that the finetuning process incurs a lower training cost than training these recurrent variants from scratch. As many recent models for natural language tasks are increasingly dependent on large-scale pretrained transformers, this work presents a viable approach to improving inference efficiency without repeating the expensive pretraining process.
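The linear-complexity recurrent attention mentioned in this abstract can be sketched, under simplifying assumptions, as a running state that is updated once per token. The code below is illustrative only: it handles a single head in pure Python, and `feature_map` is a fixed placeholder (`elu(x) + 1`) chosen here for positivity, whereas the abstract describes a learned feature map. The function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def feature_map(x: Vector) -> Vector:
    # Placeholder positive feature map, elu(x) + 1. This is an assumption;
    # the abstract's approach learns the feature map during finetuning.
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]


def recurrent_linear_attention(
    queries: List[Vector], keys: List[Vector], values: List[Vector]
) -> List[Vector]:
    """Causal linear attention computed step by step with a fixed-size state."""
    d_k, d_v = len(keys[0]), len(values[0])
    state = [[0.0] * d_v for _ in range(d_k)]  # running sum of phi(k) v^T
    normalizer = [0.0] * d_k                   # running sum of phi(k)
    outputs: List[Vector] = []
    for q, k, v in zip(queries, keys, values):
        phi_q, phi_k = feature_map(q), feature_map(k)
        # Fold the current token into the state; cost is independent of position.
        for i in range(d_k):
            normalizer[i] += phi_k[i]
            for j in range(d_v):
                state[i][j] += phi_k[i] * v[j]
        # Read from the state with the current query and normalize.
        denom = sum(phi_q[i] * normalizer[i] for i in range(d_k))
        outputs.append(
            [sum(phi_q[i] * state[i][j] for i in range(d_k)) / denom for j in range(d_v)]
        )
    return outputs


if __name__ == "__main__":
    out = recurrent_linear_attention(
        queries=[[0.1, -0.2], [0.3, 0.4]],
        keys=[[0.5, 0.0], [-1.0, 2.0]],
        values=[[1.0, 2.0], [3.0, 4.0]],
    )
    print(out)
```

Each step touches only the fixed-size `state` and `normalizer`, so per-token cost does not grow with the number of previous tokens, in contrast to softmax attention, which revisits every earlier position.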
