Mirko Marras

FRCSyn Challenge at WACV 2024: Face Recognition Challenge in the Era of Synthetic Data

Nov 17, 2023

Abstract: Despite the widespread adoption of face recognition technology around the world, and its remarkable performance on current benchmarks, there are still several challenges that must be covered in more detail. This paper offers an overview of the Face Recognition Challenge in the Era of Synthetic Data (FRCSyn) organized at WACV 2024. This is the first international challenge aiming to explore the use of synthetic data in face recognition to address existing limitations in the technology. Specifically, the FRCSyn Challenge targets concerns related to data privacy issues, demographic biases, generalization to unseen scenarios, and performance limitations in challenging scenarios, including significant age disparities between enrollment and testing, pose variations, and occlusions. The results achieved in the FRCSyn Challenge, together with the proposed benchmark, contribute significantly to the application of synthetic data to improve face recognition technology.

Faithful Path Language Modelling for Explainable Recommendation over Knowledge Graph

Oct 25, 2023

Abstract: Path reasoning methods over knowledge graphs have gained popularity for their potential to improve transparency in recommender systems. However, the resulting models still rely on pre-trained knowledge graph embeddings, fail to fully exploit the interdependence between entities and relations in the KG for recommendation, and may generate inaccurate explanations. In this paper, we introduce PEARLM, a novel approach that efficiently captures user behaviour and product-side knowledge through language modelling. With our approach, knowledge graph embeddings are directly learned from paths over the KG by the language model, which also unifies entities and relations in the same optimisation space. Constraints on the sequence decoding additionally guarantee path faithfulness with respect to the KG. Experiments on two datasets show the effectiveness of our approach compared to state-of-the-art baselines. Source code and datasets will be made available upon acceptance.

Counterfactual Graph Augmentation for Consumer Unfairness Mitigation in Recommender Systems

Aug 23, 2023

Abstract: In recommendation literature, explainability and fairness are becoming two prominent perspectives to consider. However, prior works have mostly addressed them separately, for instance by explaining to consumers why a certain item was recommended or mitigating disparate impacts in recommendation utility. None of them has leveraged explainability techniques to inform unfairness mitigation. In this paper, we propose an approach that relies on counterfactual explanations to augment the set of user-item interactions, such that using them while inferring recommendations leads to fairer outcomes. Modeling user-item interactions as a bipartite graph, our approach augments the latter by identifying new user-item edges that not only can explain the original unfairness by design, but can also mitigate it. Experiments on two public data sets show that our approach effectively leads to a better trade-off between fairness and recommendation utility compared with state-of-the-art mitigation procedures. We further analyze the characteristics of added edges to highlight key unfairness patterns. Source code available at https://github.com/jackmedda/RS-BGExplainer/tree/cikm2023.

(Un)fair Exposure in Deep Face Rankings at a Distance

Aug 22, 2023

Abstract: Law enforcement regularly faces the challenge of ranking suspects from their facial images. Deep face models aid this process but frequently introduce biases that disproportionately affect certain demographic segments. While bias investigation is common in domains like job candidate ranking, the field of forensic face rankings remains underexplored. In this paper, we propose a novel experimental framework, encompassing six state-of-the-art face encoders and two public data sets, designed to scrutinize the extent to which demographic groups suffer from biases in exposure in the context of forensic face rankings. Through comprehensive experiments that cover both re-identification and identification tasks, we show that exposure biases within this domain are far from being countered, demanding attention towards establishing ad-hoc policies and corrective measures. The source code is available at https://github.com/atzoriandrea/ijcb2023-unfair-face-rankings.

GNNUERS: Fairness Explanation in GNNs for Recommendation via Counterfactual Reasoning

Apr 14, 2023

Abstract: In recent years, personalization research has been delving into issues of explainability and fairness. While some techniques have emerged to provide post-hoc and self-explanatory individual recommendations, there is still a lack of methods aimed at uncovering unfairness in recommendation systems beyond identifying biased user and item features. This paper proposes a new algorithm, GNNUERS, which uses counterfactuals to pinpoint user unfairness explanations in terms of user-item interactions within a bipartite graph. By perturbing the graph topology, GNNUERS reduces differences in utility between protected and unprotected demographic groups. The paper evaluates the approach using four real-world graphs from different domains and demonstrates its ability to systematically explain user unfairness in three state-of-the-art GNN-based recommendation models. This perturbed network analysis reveals insightful patterns that confirm the nature of the unfairness underlying the explanations. The source code and preprocessed datasets are available at https://github.com/jackmedda/RS-BGExplainer.

Knowledge is Power, Understanding is Impact: Utility and Beyond Goals, Explanation Quality, and Fairness in Path Reasoning Recommendation

Jan 14, 2023

Abstract: Path reasoning is a notable recommendation approach that models high-order user-product relations, based on a Knowledge Graph (KG). This approach can extract reasoning paths between recommended products and already experienced products and, then, turn such paths into textual explanations for the user. Unfortunately, evaluation protocols in this field appear heterogeneous and limited, making it hard to contextualize the impact of the existing methods. In this paper, we replicated three state-of-the-art relevant path reasoning recommendation methods proposed in top-tier conferences. Under a common evaluation protocol, based on two public data sets and in comparison with other knowledge-aware methods, we then studied the extent to which they meet recommendation utility and beyond objectives, explanation quality, and consumer and provider fairness. Our study provides a picture of the progress in this field, highlighting open issues and future directions. Source code: https://github.com/giacoballoccu/rep-path-reasoning-recsys.

Trusting the Explainers: Teacher Validation of Explainable Artificial Intelligence for Course Design

Dec 26, 2022

Abstract: Deep learning models for learning analytics have become increasingly popular over the last few years; however, these approaches are still not widely adopted in real-world settings, likely due to a lack of trust and transparency. In this paper, we tackle this issue by implementing explainable AI methods for black-box neural networks. This work focuses on the context of online and blended learning and the use case of student success prediction models. We use a pairwise study design, enabling us to investigate controlled differences between pairs of courses. Our analyses cover five course pairs that differ in one educationally relevant aspect and two popular instance-based explainable AI methods (LIME and SHAP). We quantitatively compare the distances between the explanations across courses and methods. We then validate the explanations of LIME and SHAP with 26 semi-structured interviews of university-level educators regarding which features they believe contribute most to student success, which explanations they trust most, and how they could transform these insights into actionable course design decisions. Our results show that quantitatively, explainers significantly disagree with each other about what is important, and qualitatively, experts themselves do not agree on which explanations are most trustworthy. All code, extended results, and the interview protocol are provided at https://github.com/epfl-ml4ed/trusting-explainers.

Do Not Trust a Model Because It is Confident: Uncovering and Characterizing Unknown Unknowns to Student Success Predictors in Online-Based Learning

Dec 16, 2022

Abstract: Student success models might be prone to develop weak spots, i.e., examples that are hard to classify accurately due to insufficient representation during model creation. This weakness is one of the main factors undermining users' trust, since model predictions could, for instance, lead an instructor to not intervene on a student in need. In this paper, we unveil the need to detect and characterize unknown unknowns in student success prediction in order to better understand when models may fail. Unknown unknowns include the students for which the model is highly confident in its predictions, but is actually wrong. Therefore, we cannot rely solely on the model's confidence when evaluating prediction quality. We first introduce a framework for the identification and characterization of unknown unknowns. We then assess its informativeness on log data collected from flipped courses and online courses using quantitative analyses and interviews with instructors. Our results show that unknown unknowns are a critical issue in this domain and that our framework can be applied to support their detection. The source code is available at https://github.com/epfl-ml4ed/unknown-unknowns.

Ripple: Concept-Based Interpretation for Raw Time Series Models in Education

Dec 13, 2022

Abstract: Time series is the most prevalent form of input data for educational prediction tasks. The vast majority of research using time series data focuses on hand-crafted features, designed by experts for predictive performance and interpretability. However, extracting these features is labor-intensive for humans and computers. In this paper, we propose an approach that utilizes irregular multivariate time series modeling with graph neural networks to achieve comparable or better accuracy with raw time series clickstreams in comparison to hand-crafted features. Furthermore, we extend concept activation vectors for interpretability in raw time series models. We analyze these advances in the education domain, addressing the task of early student performance prediction for downstream targeted interventions and instructional support. Our experimental analysis on 23 MOOCs with millions of combined interactions over six behavioral dimensions shows that models designed with our approach can (i) beat state-of-the-art educational time series baselines with no feature extraction and (ii) provide interpretable insights for personalized interventions. Source code: https://github.com/epfl-ml4ed/ripple/.

The More Secure, The Less Equally Usable: Gender and Ethnicity (Un)fairness of Deep Face Recognition along Security Thresholds

Sep 30, 2022

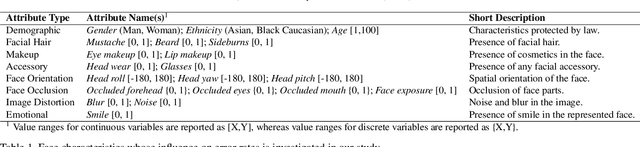

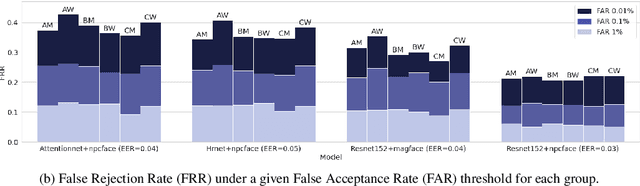

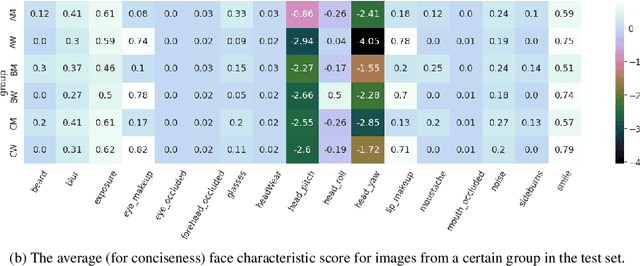

Abstract: Face biometrics are playing a key role in making modern smart city applications more secure and usable. Commonly, the recognition threshold of a face recognition system is adjusted based on the degree of security for the considered use case. The likelihood of a match can, for instance, be decreased by setting a high threshold in the case of payment transaction verification. Prior work in face recognition has unfortunately shown that error rates are usually higher for certain demographic groups. These disparities have hence brought into question the fairness of systems empowered with face biometrics. In this paper, we investigate the extent to which disparities among demographic groups change under different security levels. Our analysis includes ten face recognition models, three security thresholds, and six demographic groups based on gender and ethnicity. Experiments show that the higher the security of the system is, the higher the disparities in usability among demographic groups are. Compelling unfairness issues hence exist and urge countermeasures in real-world high-stakes environments requiring severe security levels.