Minghan Li

Generate, Filter, and Fuse: Query Expansion via Multi-Step Keyword Generation for Zero-Shot Neural Rankers

Nov 15, 2023

Abstract:Query expansion has been proved to be effective in improving recall and precision of first-stage retrievers, and yet its influence on a complicated, state-of-the-art cross-encoder ranker remains under-explored. We first show that directly applying the expansion techniques in the current literature to state-of-the-art neural rankers can result in deteriorated zero-shot performance. To this end, we propose GFF, a pipeline that includes a large language model and a neural ranker, to Generate, Filter, and Fuse query expansions more effectively in order to improve the zero-shot ranking metrics such as nDCG@10. Specifically, GFF first calls an instruction-following language model to generate query-related keywords through a reasoning chain. Leveraging self-consistency and reciprocal rank weighting, GFF further filters and combines the ranking results of each expanded query dynamically. By utilizing this pipeline, we show that GFF can improve the zero-shot nDCG@10 on BEIR and TREC DL 2019/2020. We also analyze different modelling choices in the GFF pipeline and shed light on the future directions in query expansion for zero-shot neural rankers.
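
The fusion step mentioned in the abstract above can be illustrated with a small sketch. It uses standard reciprocal rank fusion (RRF) as a stand-in, since the abstract does not give the exact weighting formula used by GFF. The function name, the smoothing constant `k=60`, and the toy rankings are all assumptions made for illustration.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document ids into one ranking.

    Assumption: plain RRF, score(d) = sum over lists of 1 / (k + rank).
    This is not claimed to be the exact GFF weighting.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by fused score (descending); break ties by doc id for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical rankings, one per expanded query.
rankings = [
    ["d1", "d2", "d3"],
    ["d2", "d1", "d4"],
    ["d2", "d3", "d1"],
]
fused = reciprocal_rank_fusion(rankings)
print(fused)  # ['d2', 'd1', 'd3', 'd4']
```

Documents ranked highly by several expanded queries accumulate the largest fused score, which is the intuition behind rank-based fusion of expansion results.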

BoxVIS: Video Instance Segmentation with Box Annotations

Mar 26, 2023

Abstract:It is expensive and labour-intensive to label the pixel-wise object masks in a video. As a result, the amount of pixel-wise annotations in existing video instance segmentation (VIS) datasets is small, limiting the generalization capability of trained VIS models. An alternative but much cheaper solution is to use bounding boxes to label instances in videos. Inspired by the recent success of box-supervised image instance segmentation, we first adapt the state-of-the-art pixel-supervised VIS models to a box-supervised VIS (BoxVIS) baseline, and observe only slight performance degradation. We consequently propose to improve BoxVIS performance from two aspects. First, we propose a box-center guided spatial-temporal pairwise affinity (STPA) loss to predict instance masks for better spatial and temporal consistency. Second, we collect a larger scale box-annotated VIS dataset (BVISD) by consolidating the videos from current VIS benchmarks and converting images from the COCO dataset to short pseudo video clips. With the proposed BVISD and the STPA loss, our trained BoxVIS model demonstrates promising instance mask prediction performance. Specifically, it achieves 43.2% and 29.0% mask AP on the YouTube-VIS 2021 and OVIS valid sets, respectively, exhibiting comparable or even better generalization performance than state-of-the-art pixel-supervised VIS models by using only 16% annotation time and cost. Code and data of BoxVIS can be found at https://github.com/MinghanLi/BoxVIS.

MDQE: Mining Discriminative Query Embeddings to Segment Occluded Instances on Challenging Videos

Mar 25, 2023

Abstract:While impressive progress has been achieved, video instance segmentation (VIS) methods with per-clip input often fail on challenging videos with occluded objects and crowded scenes. This is mainly because instance queries in these methods cannot encode the discriminative embeddings of instances well, making it difficult for the query-based segmenter to distinguish those 'hard' instances. To address these issues, we propose to mine discriminative query embeddings (MDQE) to segment occluded instances on challenging videos. First, we initialize the positional embeddings and content features of object queries by considering their spatial contextual information and the inter-frame object motion. Second, we propose an inter-instance mask repulsion loss to distance each instance from its nearby non-target instances. The proposed MDQE is the first VIS method with per-clip input that achieves state-of-the-art results on challenging videos and competitive performance on simple videos. Specifically, MDQE with ResNet50 achieves 33.0% and 44.5% mask AP on OVIS and YouTube-VIS 2021, respectively. Code of MDQE can be found at https://github.com/MinghanLi/MDQE_CVPR2023.

One-to-Few Label Assignment for End-to-End Dense Detection

Mar 21, 2023

Abstract:One-to-one (o2o) label assignment plays a key role in transformer-based end-to-end detection, and it has recently been introduced in fully convolutional detectors for end-to-end dense detection. However, o2o can degrade the feature learning efficiency due to the limited number of positive samples. Though extra positive samples are introduced to mitigate this issue in recent DETRs, the computation of self- and cross-attention in the decoder limits its practical application to dense and fully convolutional detectors. In this work, we propose a simple yet effective one-to-few (o2f) label assignment strategy for end-to-end dense detection. Apart from defining one positive and many negative anchors for each object, we define several soft anchors, which serve as positive and negative samples simultaneously. The positive and negative weights of these soft anchors are dynamically adjusted during training so that they can contribute more to "representation learning" in the early training stage, and contribute more to "duplicated prediction removal" in the later stage. The detector trained in this way can not only learn a strong feature representation but also perform end-to-end dense detection. Experiments on COCO and CrowdHuman datasets demonstrate the effectiveness of the o2f scheme. Code is available at https://github.com/strongwolf/o2f.

How to Train Your DRAGON: Diverse Augmentation Towards Generalizable Dense Retrieval

Feb 15, 2023

Abstract:Various techniques have been developed in recent years to improve dense retrieval (DR), such as unsupervised contrastive learning and pseudo-query generation. Existing DRs, however, often suffer from effectiveness tradeoffs between supervised and zero-shot retrieval, which some argue are due to limited model capacity. We challenge this hypothesis and show that a generalizable DR can be trained to achieve high accuracy in both supervised and zero-shot retrieval without increasing model size. In particular, we systematically examine the contrastive learning of DRs under the framework of Data Augmentation (DA). Our study shows that common DA practices, such as query augmentation with generative models and pseudo-relevance label creation using a cross-encoder, are often inefficient and sub-optimal. We hence propose a new DA approach with diverse queries and sources of supervision to progressively train a generalizable DR. As a result, DRAGON, our dense retriever trained with diverse augmentation, is the first BERT-base-sized DR to achieve state-of-the-art effectiveness in both supervised and zero-shot evaluations and even competes with models using more complex late interaction (ColBERTv2 and SPLADE++).

Improving Out-of-Distribution Generalization of Neural Rerankers with Contextualized Late Interaction

Feb 13, 2023

Abstract:Recent progress in information retrieval finds that embedding query and document representations into multiple vectors yields a robust bi-encoder retriever on out-of-distribution datasets. In this paper, we explore whether late interaction, the simplest form of multi-vector representation, is also helpful to neural rerankers that only use the [CLS] vector to compute the similarity score. Although intuitively, the attention mechanism of rerankers in the earlier layers already gathers the token-level information, we find that adding late interaction still brings an extra 5% improvement on average on out-of-distribution datasets, with little increase in latency and no degradation in in-domain effectiveness. Through extensive experiments and analysis, we show that the finding is consistent across different model sizes and first-stage retrievers of diverse natures and that the improvement is more prominent on longer queries.
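
As a rough illustration of the late interaction mentioned in the abstract above, the sketch below computes a ColBERT-style MaxSim score: each query token vector is matched to its most similar document token vector, and the maxima are summed. The helper names and toy vectors are assumptions for illustration. How the paper combines this score with the [CLS] score is not stated in the abstract and is not modelled here.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def late_interaction_score(query_vecs, doc_vecs):
    """MaxSim: for each query token, take the best-matching document
    token, then sum these maxima over all query tokens."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)


# Toy 2-dimensional token embeddings (hypothetical values).
query_vecs = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 1.0]]  # covers both query tokens
doc_b = [[1.0, 0.0], [1.0, 0.0]]  # covers only the first query token

score_a = late_interaction_score(query_vecs, doc_a)
score_b = late_interaction_score(query_vecs, doc_b)
print(score_a, score_b)  # 2.0 1.0
```

Because every query token contributes its own maximum, a document that matches all query tokens scores higher than one that matches a single token repeatedly.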

SLIM: Sparsified Late Interaction for Multi-Vector Retrieval with Inverted Indexes

Feb 13, 2023

Abstract:This paper introduces a method called Sparsified Late Interaction for Multi-vector retrieval with inverted indexes (SLIM). Although multi-vector models have demonstrated their effectiveness in various information retrieval tasks, most of their pipelines require custom optimization to be efficient in both time and space. Among them, ColBERT is probably the most established method, which is based on the late interaction of contextualized token embeddings of pre-trained language models. Unlike ColBERT, where all token embeddings are low-dimensional and dense, SLIM projects each token embedding into a high-dimensional, sparse lexical space before performing late interaction. In practice, we further propose to approximate SLIM using the lower and upper bounds of the late interaction to reduce latency and storage. In this way, the sparse outputs can be easily incorporated into an inverted search index and are fully compatible with off-the-shelf search tools such as Pyserini and Elasticsearch. SLIM has competitive accuracy on information retrieval benchmarks such as MS MARCO Passages and BEIR compared to ColBERT while being much smaller and faster on CPUs. Source code and data will be available at https://github.com/castorini/pyserini/blob/master/docs/experiments-slim.md.

Domain Adaptation for Dense Retrieval through Self-Supervision by Pseudo-Relevance Labeling

Dec 13, 2022

Abstract:Although neural information retrieval has witnessed great improvements, recent works showed that the generalization ability of dense retrieval models on target domains with different distributions is limited, which contrasts with the results obtained with interaction-based models. To address this issue, researchers have resorted to adversarial learning and query generation approaches; both approaches nevertheless resulted in limited improvements. In this paper, we propose to use a self-supervision approach in which pseudo-relevance labels are automatically generated on the target domain. To do so, we first use the standard BM25 model on the target domain to obtain a first ranking of documents, and then use the interaction-based model T53B to re-rank top documents. We further combine this approach with knowledge distillation relying on an interaction-based teacher model trained on the source domain. Our experiments reveal that pseudo-relevance labeling using T53B and the MiniLM teacher performs on average better than other approaches and helps improve the state-of-the-art query generation approach GPL when it is fine-tuned on the pseudo-relevance labeled data.

CITADEL: Conditional Token Interaction via Dynamic Lexical Routing for Efficient and Effective Multi-Vector Retrieval

Nov 18, 2022

Abstract:Multi-vector retrieval methods combine the merits of sparse (e.g. BM25) and dense (e.g. DPR) retrievers and have achieved state-of-the-art performance on various retrieval tasks. These methods, however, are orders of magnitude slower and need much more space to store their indices compared to their single-vector counterparts. In this paper, we unify different multi-vector retrieval models from a token routing viewpoint and propose conditional token interaction via dynamic lexical routing, namely CITADEL, for efficient and effective multi-vector retrieval. CITADEL learns to route different token vectors to the predicted lexical "keys" such that a query token vector only interacts with document token vectors routed to the same key. This design significantly reduces the computation cost while maintaining high accuracy. Notably, CITADEL achieves the same or slightly better performance than the previous state of the art, ColBERT-v2, on both in-domain (MS MARCO) and out-of-domain (BEIR) evaluations, while being nearly 40 times faster. Code and data are available at https://github.com/facebookresearch/dpr-scale.
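
The routing idea in the abstract above can be sketched with a toy inverted index keyed by lexical key, so that a query token vector is only compared with document token vectors stored under the same key. This is a simplified illustration, not the CITADEL implementation: the keys here are assigned by hand rather than predicted by a learned router, and the max-then-sum aggregation and all names are assumptions.

```python
from collections import defaultdict


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def build_index(doc_tokens):
    """Group document token vectors by their (hand-assigned) lexical key.

    doc_tokens: iterable of (doc_id, key, vector) triples.
    """
    index = defaultdict(list)
    for doc_id, key, vec in doc_tokens:
        index[key].append((doc_id, vec))
    return index


def score_documents(query_tokens, index):
    """Score documents using only same-key token interactions.

    Assumption: for each query token, keep the best match per document
    under that token's key, then sum over query tokens.
    """
    scores = defaultdict(float)
    for key, q_vec in query_tokens:
        best = {}
        for doc_id, d_vec in index.get(key, []):
            s = dot(q_vec, d_vec)
            if s > best.get(doc_id, float("-inf")):
                best[doc_id] = s
        for doc_id, s in best.items():
            scores[doc_id] += s
    return dict(scores)


# Hypothetical routed tokens: (doc_id, key, vector).
index = build_index([
    ("d1", "apple", [1.0, 0.0]),
    ("d1", "pie", [0.0, 1.0]),
    ("d2", "apple", [0.5, 0.5]),
    ("d2", "car", [1.0, 1.0]),
])
scores = score_documents([("apple", [1.0, 0.0]), ("pie", [0.0, 1.0])], index)
print(scores)  # {'d1': 2.0, 'd2': 0.5}
```

The "car" vector of `d2` is never touched, because no query token is routed to that key. Skipping such comparisons is the source of the computational savings the abstract describes.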

Query Expansion Using Contextual Clue Sampling with Language Models

Oct 13, 2022

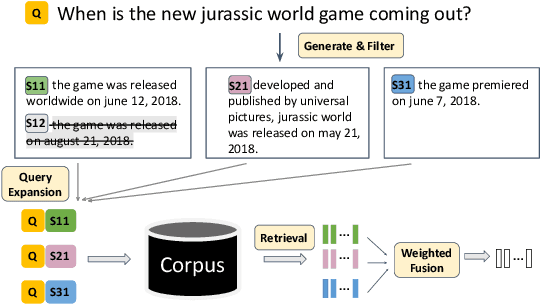

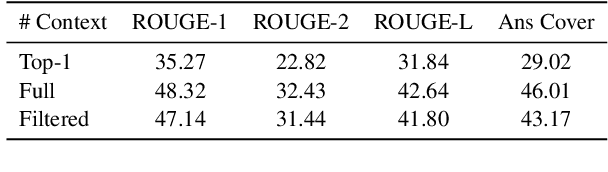

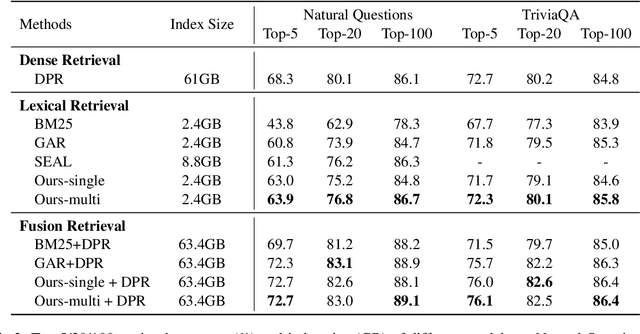

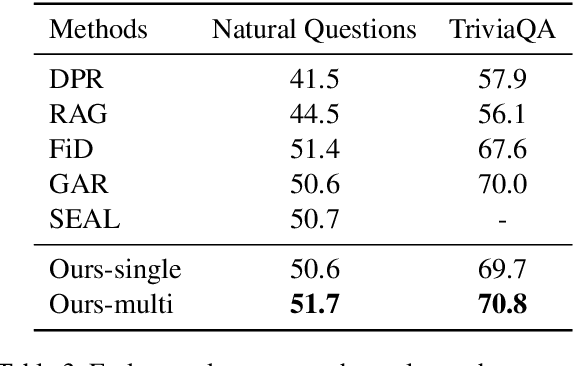

Abstract:Query expansion is an effective approach for mitigating vocabulary mismatch between queries and documents in information retrieval. One recent line of research uses language models to generate query-related contexts for expansion. Along this line, we argue that expansion terms from these contexts should balance two key aspects: diversity and relevance. The obvious way to increase diversity is to sample multiple contexts from the language model. However, this comes at the cost of relevance, because there is a well-known tendency of models to hallucinate incorrect or irrelevant contexts. To balance these two considerations, we propose a combination of an effective filtering strategy and fusion of the retrieved documents based on the generation probability of each context. Our lexical matching based approach achieves a similar top-5/top-20 retrieval accuracy and higher top-100 accuracy compared with the well-established dense retrieval model DPR, while reducing the index size by more than 96%. For end-to-end QA, the reader model also benefits from our method and achieves the highest Exact-Match score against several competitive baselines.
