Mennatallah El-Assady

Explainable Mapper: Charting LLM Embedding Spaces Using Perturbation-Based Explanation and Verification Agents

Jul 24, 2025

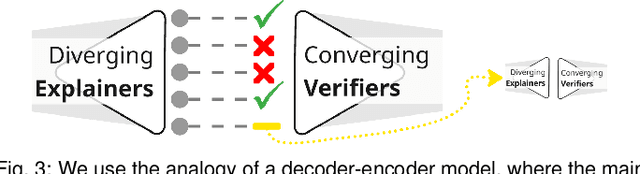

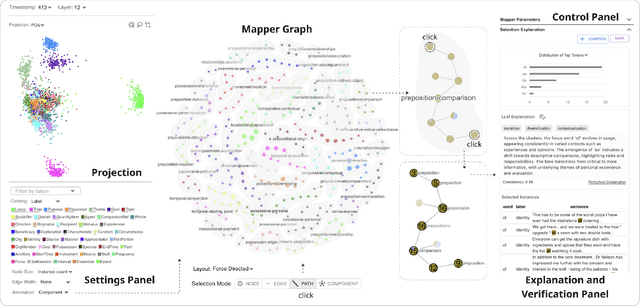

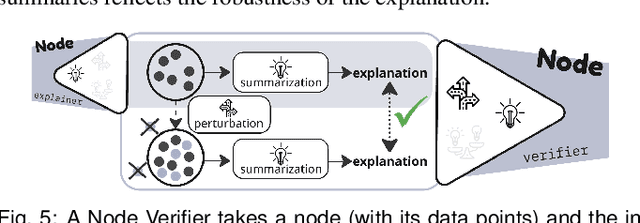

Abstract: Large language models (LLMs) produce high-dimensional embeddings that capture rich semantic and syntactic relationships between words, sentences, and concepts. Investigating the topological structures of LLM embedding spaces via mapper graphs enables us to understand their underlying structures. Specifically, a mapper graph summarizes the topological structure of the embedding space, where each node represents a topological neighborhood (containing a cluster of embeddings), and an edge connects two nodes if their corresponding neighborhoods overlap. However, manually exploring these embedding spaces to uncover encoded linguistic properties requires considerable human effort. To address this challenge, we introduce a framework for semi-automatic annotation of these embedding properties. To organize the exploration process, we first define a taxonomy of explorable elements within a mapper graph such as nodes, edges, paths, components, and trajectories. The annotation of these elements is executed through two types of customizable LLM-based agents that employ perturbation techniques for scalable and automated analysis. These agents help to explore and explain the characteristics of mapper elements and verify the robustness of the generated explanations. We instantiate the framework within a visual analytics workspace and demonstrate its effectiveness through case studies. In particular, we replicate findings from prior research on BERT's embedding properties across various layers of its architecture and provide further observations into the linguistic properties of topological neighborhoods.
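The node-and-edge definition in the abstract above can be illustrated with a minimal sketch of a generic mapper construction. This is not the authors' implementation: the function names (`mapper_graph`, `_cluster`), the one-dimensional lens, the interval cover, and the single-linkage clustering with threshold `eps` are all assumptions chosen for brevity.

```python
import math
from itertools import combinations


def _cluster(points, members, eps):
    """Single-linkage clusters: connected components of the eps-neighborhood graph."""
    unvisited = set(members)
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        cluster, stack = {seed}, [seed]
        while stack:
            cur = stack.pop()
            near = {j for j in unvisited
                    if math.dist(points[cur], points[j]) <= eps}
            unvisited -= near
            cluster |= near
            stack.extend(near)
        clusters.append(cluster)
    return clusters


def mapper_graph(points, lens, n_intervals=4, overlap=0.3, eps=1.0):
    """Return (nodes, edges); each node is a set of point indices.

    lens maps a point to a scalar (hypothetical choice; any filter works).
    The lens range is covered by n_intervals overlapping intervals, the
    points falling into each interval are clustered, and every cluster
    becomes a node. Two nodes are linked when they share at least one point.
    """
    values = [lens(p) for p in points]
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_intervals or 1.0  # avoid zero width for constant lens
    nodes = []
    for i in range(n_intervals):
        start = lo + i * width - overlap * width
        end = lo + (i + 1) * width + overlap * width
        members = [j for j, v in enumerate(values) if start <= v <= end]
        nodes.extend(_cluster(points, members, eps))
    edges = {(a, b) for a, b in combinations(range(len(nodes)), 2)
             if nodes[a] & nodes[b]}
    return nodes, edges


# Five points on a line, lens = x-coordinate, two overlapping intervals.
pts = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (4.0, 0.0)]
nodes, edges = mapper_graph(pts, lambda p: p[0], n_intervals=2, eps=1.0)
print(nodes, edges)
```

In this toy run the point with x = 2 falls into both intervals, so the two resulting nodes share it and are joined by an edge. For real embeddings the lens, cover, and clustering method would need to be chosen with far more care.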

Concept-Level Explainability for Auditing & Steering LLM Responses

May 12, 2025

Abstract: As large language models (LLMs) become widely deployed, concerns about their safety and alignment grow. An approach to steer LLM behavior, such as mitigating biases or defending against jailbreaks, is to identify which parts of a prompt influence specific aspects of the model's output. Token-level attribution methods offer a promising solution, but still struggle in text generation, explaining the presence of each token in the output separately, rather than the underlying semantics of the entire LLM response. We introduce ConceptX, a model-agnostic, concept-level explainability method that identifies the concepts, i.e., semantically rich tokens in the prompt, and assigns them importance based on the outputs' semantic similarity. Unlike current token-level methods, ConceptX also offers to preserve context integrity through in-place token replacements and supports flexible explanation goals, e.g., gender bias. ConceptX enables both auditing, by uncovering sources of bias, and steering, by modifying prompts to shift the sentiment or reduce the harmfulness of LLM responses, without requiring retraining. Across three LLMs, ConceptX outperforms token-level methods like TokenSHAP in both faithfulness and human alignment. Steering tasks boost sentiment shift by 0.252 versus 0.131 for random edits and lower attack success rates from 0.463 to 0.242, outperforming attribution and paraphrasing baselines. While prompt engineering and self-explaining methods sometimes yield safer responses, ConceptX offers a transparent and faithful alternative for improving LLM safety and alignment, demonstrating the practical value of attribution-based explainability in guiding LLM behavior.

LayerFlow: Layer-wise Exploration of LLM Embeddings using Uncertainty-aware Interlinked Projections

Apr 09, 2025

Abstract: Large language models (LLMs) represent words through contextual word embeddings encoding different language properties like semantics and syntax. Understanding these properties is crucial, especially for researchers investigating language model capabilities, employing embeddings for tasks related to text similarity, or evaluating the reasons behind token importance as measured through attribution methods. Applications for embedding exploration frequently involve dimensionality reduction techniques, which reduce high-dimensional vectors to two dimensions used as coordinates in a scatterplot. This data transformation step introduces uncertainty that can be propagated to the visual representation and influence users' interpretation of the data. To communicate such uncertainties, we present LayerFlow - a visual analytics workspace that displays embeddings in an interlinked projection design and communicates the transformation, representation, and interpretation uncertainty. In particular, to hint at potential data distortions and uncertainties, the workspace includes several visual components, such as convex hulls showing 2D and HD clusters, data point pairwise distances, cluster summaries, and projection quality metrics. We show the usability of the presented workspace through replication and expert case studies that highlight the need to communicate uncertainty through multiple visual components and different data perspectives.

Reward Learning from Multiple Feedback Types

Feb 28, 2025

Abstract: Learning rewards from preference feedback has become an important tool in the alignment of agentic models. Preference-based feedback, often implemented as a binary comparison between multiple completions, is an established method to acquire large-scale human feedback. However, human feedback in other contexts is often much more diverse. Such diverse feedback can better support the goals of a human annotator, and the simultaneous use of multiple sources might be mutually informative for the learning process or carry type-dependent biases for the reward learning process. Despite these potential benefits, learning from different feedback types has yet to be explored extensively. In this paper, we bridge this gap by enabling experimentation and evaluating multi-type feedback in a broad set of environments. We present a process to generate high-quality simulated feedback of six different types. Then, we implement reward models and downstream RL training for all six feedback types. Based on the simulated feedback, we investigate the use of types of feedback across ten RL environments and compare them to pure preference-based baselines. We show empirically that diverse types of feedback can be utilized and lead to strong reward modeling performance. This work is the first strong indicator of the potential of multi-type feedback for RLHF.

Mapping out the Space of Human Feedback for Reinforcement Learning: A Conceptual Framework

Nov 18, 2024

Abstract: Reinforcement Learning from Human Feedback (RLHF) has become a powerful tool to fine-tune or train agentic machine learning models. Similar to how humans interact in social contexts, we can use many types of feedback to communicate our preferences, intentions, and knowledge to an RL agent. However, applications of human feedback in RL are often limited in scope and disregard human factors. In this work, we bridge the gap between machine learning and human-computer interaction efforts by developing a shared understanding of human feedback in interactive learning scenarios. We first introduce a taxonomy of feedback types for reward-based learning from human feedback based on nine key dimensions. Our taxonomy allows for unifying human-centered, interface-centered, and model-centered aspects. In addition, we identify seven quality metrics of human feedback influencing both the human ability to express feedback and the agent's ability to learn from the feedback. Based on the feedback taxonomy and quality criteria, we derive requirements and design choices for systems learning from human feedback. We relate these requirements and design choices to existing work in interactive machine learning. In the process, we identify gaps in existing work and future research opportunities. We call for interdisciplinary collaboration to harness the full potential of reinforcement learning with data-driven co-adaptive modeling and varied interaction mechanics.

Why context matters in VQA and Reasoning: Semantic interventions for VLM input modalities

Oct 02, 2024

Abstract: The various limitations of Generative AI, such as hallucinations and model failures, have made it crucial to understand the role of different modalities in Visual Language Model (VLM) predictions. Our work investigates how the integration of information from image and text modalities influences the performance and behavior of VLMs in visual question answering (VQA) and reasoning tasks. We measure this effect through answer accuracy, reasoning quality, model uncertainty, and modality relevance. We study the interplay between text and image modalities in different configurations where visual content is essential for solving the VQA task. Our contributions include (1) the Semantic Interventions (SI)-VQA dataset, (2) a benchmark study of various VLM architectures under different modality configurations, and (3) the Interactive Semantic Interventions (ISI) tool. The SI-VQA dataset serves as the foundation for the benchmark, while the ISI tool provides an interface to test and apply semantic interventions in image and text inputs, enabling more fine-grained analysis. Our results show that complementary information between modalities improves answer and reasoning quality, while contradictory information harms model performance and confidence. Image text annotations have minimal impact on accuracy and uncertainty, slightly increasing image relevance. Attention analysis confirms the dominant role of image inputs over text in VQA tasks. In this study, we evaluate state-of-the-art VLMs that allow us to extract attention coefficients for each modality. A key finding is PaliGemma's harmful overconfidence, which poses a higher risk of silent failures compared to the LLaVA models. This work sets the foundation for rigorous analysis of modality integration, supported by datasets specifically designed for this purpose.

Navigating the Maze of Explainable AI: A Systematic Approach to Evaluating Methods and Metrics

Sep 25, 2024

Abstract: Explainable AI (XAI) is a rapidly growing domain with a myriad of proposed methods as well as metrics aiming to evaluate their efficacy. However, current studies are often of limited scope, examining only a handful of XAI methods and ignoring underlying design parameters for performance, such as the model architecture or the nature of input data. Moreover, they often rely on one or a few metrics and neglect thorough validation, increasing the risk of selection bias and ignoring discrepancies among metrics. These shortcomings leave practitioners confused about which method to choose for their problem. In response, we introduce LATEC, a large-scale benchmark that critically evaluates 17 prominent XAI methods using 20 distinct metrics. We systematically incorporate vital design parameters like varied architectures and diverse input modalities, resulting in 7,560 examined combinations. Through LATEC, we showcase the high risk of conflicting metrics leading to unreliable rankings and consequently propose a more robust evaluation scheme. Further, we comprehensively evaluate various XAI methods to assist practitioners in selecting appropriate methods aligning with their needs. Curiously, the emerging top-performing method, Expected Gradients, is not examined in any relevant related study. LATEC reinforces its role in future XAI research by publicly releasing all 326k saliency maps and 378k metric scores as a (meta-)evaluation dataset.

iNNspector: Visual, Interactive Deep Model Debugging

Jul 25, 2024

Abstract: Deep learning model design, development, and debugging is a process driven by best practices, guidelines, trial-and-error, and the personal experiences of model developers. At multiple stages of this process, performance and internal model data can be logged and made available. However, due to the sheer complexity and scale of this data and process, model developers often resort to evaluating their model performance based on abstract metrics like accuracy and loss. We argue that a structured analysis of data along the model's architecture and at multiple abstraction levels can considerably streamline the debugging process. Such a systematic analysis can further connect the developer's design choices to their impacts on the model behavior, facilitating the understanding, diagnosis, and refinement of deep learning models. Hence, in this paper, we (1) contribute a conceptual framework structuring the data space of deep learning experiments. Our framework, grounded in literature analysis and requirements interviews, captures design dimensions and proposes mechanisms to make this data explorable and tractable. To operationalize our framework in a ready-to-use application, we (2) present the iNNspector system. iNNspector enables tracking of deep learning experiments and provides interactive visualizations of the data on all levels of abstraction from multiple models to individual neurons. Finally, we (3) evaluate our approach with three real-world use-cases and a user study with deep learning developers and data analysts, proving its effectiveness and usability.

MelodyVis: Visual Analytics for Melodic Patterns in Sheet Music

Jul 07, 2024

Abstract: Manual melody detection is a tedious task requiring a high level of expertise, while automatic detection is often not expressive or powerful enough. Thus, we present MelodyVis, a visual application designed in collaboration with musicology experts to explore melodic patterns in digital sheet music. MelodyVis features five connected views, including a Melody Operator Graph and a Voicing Timeline. The system utilizes eight atomic operators, such as transposition and mirroring, to capture melody repetitions and variations. Users can start their analysis by manually selecting patterns in the sheet view, and then identifying other patterns based on the selected samples through an interactive exploration process. We conducted a user study to investigate the effectiveness and usefulness of our approach and its integrated melodic operators, including usability and mental load questions. We compared the analysis executed by 25 participants with and without the operators. The study results indicate that the participants could identify at least twice as many patterns with activated operators. MelodyVis allows analysts to steer the analysis process and interpret results. Our study also confirms the usefulness of MelodyVis in supporting common analytical tasks in melodic analysis, with participants reporting improved pattern identification and interpretation. Thus, MelodyVis addresses the limitations of fully-automated approaches, enabling music analysts to step into the analysis process and uncover and understand intricate melodic patterns and transformations in sheet music.

On Affine Homotopy between Language Encoders

Jun 04, 2024

Abstract: Pre-trained language encoders, functions that represent text as vectors, are an integral component of many NLP tasks. We tackle a natural question in language encoder analysis: What does it mean for two encoders to be similar? We contend that a faithful measure of similarity needs to be intrinsic, that is, task-independent, yet still be informative of extrinsic similarity, i.e., the performance on downstream tasks. It is common to consider two encoders similar if they are homotopic, i.e., if they can be aligned through some transformation. In this spirit, we study the properties of affine alignment of language encoders and its implications on extrinsic similarity. We find that while affine alignment is fundamentally an asymmetric notion of similarity, it is still informative of extrinsic similarity. We confirm this on datasets of natural language representations. Beyond providing useful bounds on extrinsic similarity, affine intrinsic similarity also allows us to begin uncovering the structure of the space of pre-trained encoders by defining an order over them.
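The asymmetry of affine alignment mentioned in the abstract above can be illustrated with a small sketch. It is not the paper's similarity measure: the least-squares fit, the function name `affine_alignment_error`, and the synthetic "encoders" are all assumptions made for illustration, and NumPy is assumed to be installed.

```python
import numpy as np


def affine_alignment_error(src, dst):
    """Mean squared residual of the best least-squares affine map src -> dst."""
    ones = np.ones((src.shape[0], 1))
    design = np.hstack([src, ones])  # extra column of ones models the offset
    coef, *_ = np.linalg.lstsq(design, dst, rcond=None)
    residual = design @ coef - dst
    return float(np.mean(residual ** 2))


# Synthetic stand-ins for two encoders' outputs on the same 200 inputs.
rng = np.random.default_rng(0)
enc_a = rng.normal(size=(200, 3))   # "encoder A": three informative dimensions
enc_b = enc_a[:, :1] * 2.0 + 1.0    # "encoder B": keeps only one direction of A

err_ab = affine_alignment_error(enc_a, enc_b)  # A can be mapped onto B
err_ba = affine_alignment_error(enc_b, enc_a)  # B cannot recover all of A
print(err_ab, err_ba)
```

Here A maps onto B almost exactly because B is an affine function of A, while the reverse fit leaves a large residual because B has discarded two of A's dimensions. That gap is one way to see why affine alignment is directional.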
