Manash Pratim Das

Preprocessing-based Kinodynamic Motion Planning Framework for Intercepting Projectiles using a Robot Manipulator

Jan 16, 2024

Abstract: We are interested in studying sports with robots and start with the problem of intercepting a projectile moving toward a robot manipulator equipped with a shield. To successfully perform this task, the robot needs to (i) detect the incoming projectile, (ii) predict the projectile's future motion, (iii) plan a minimum-time rapid trajectory that can evade obstacles and intercept the projectile, and (iv) execute the planned trajectory. These four steps must be performed under the manipulator's dynamic limits and extreme time constraints (<350ms in our setting) to successfully intercept the projectile. In addition, we want these trajectories to be smooth to reduce the robot's joint torques and the impulse on the platform on which it is mounted. To this end, we propose a kinodynamic motion planning framework that preprocesses smooth trajectories offline to allow real-time collision-free executions online. We present an end-to-end pipeline along with our planning framework, including perception, prediction, and execution modules. We evaluate our framework experimentally in simulation and show that it has a higher blocking success rate than the baselines. Further, we deploy our pipeline on a robotic system comprising an industrial arm (ABB IRB-1600) and an onboard stereo camera (ZED 2i), which achieves a 78% success rate in projectile interceptions.
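To make step (ii) and the time budget concrete, here is a minimal sketch of a drag-free ballistic prediction. It is not the paper's prediction module: the `State` type, the function names, the choice of a vertical interception plane at `x = x_plane`, and the gravity-only model are all assumptions made for illustration. Only the 350 ms budget comes from the abstract.

```python
from dataclasses import dataclass

G = 9.81          # m/s^2, gravity along -z (assumed; air drag is ignored)
BUDGET_S = 0.350  # time budget quoted in the abstract (<350 ms)


@dataclass
class State:
    """Hypothetical projectile state estimate from the perception step."""
    pos: tuple  # (x, y, z) in metres
    vel: tuple  # (vx, vy, vz) in metres per second


def predict(state, t):
    """Position after t seconds under constant gravity."""
    x, y, z = state.pos
    vx, vy, vz = state.vel
    return (x + vx * t, y + vy * t, z + vz * t - 0.5 * G * t * t)


def time_to_plane(state, x_plane):
    """Time until the projectile crosses the plane x = x_plane, or None."""
    vx = state.vel[0]
    if vx == 0:
        return None  # moving parallel to the plane, never crosses it
    t = (x_plane - state.pos[0]) / vx
    return t if t > 0 else None  # a crossing in the past is not useful


# Projectile detected 3 m in front of the robot, flying toward it at 10 m/s.
state = State(pos=(3.0, 0.0, 1.0), vel=(-10.0, 0.0, 2.0))
t_hit = time_to_plane(state, x_plane=0.0)
hit_point = predict(state, t_hit)
within_budget = t_hit < BUDGET_S
```

In this toy setup the whole detect, predict, plan, and execute cycle would have to fit inside `t_hit`, which is why trajectories preprocessed offline are attractive.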

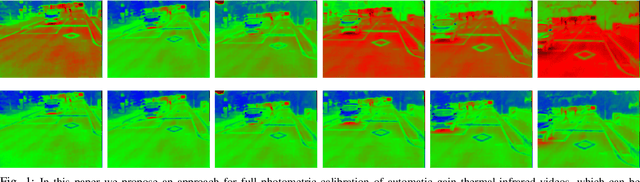

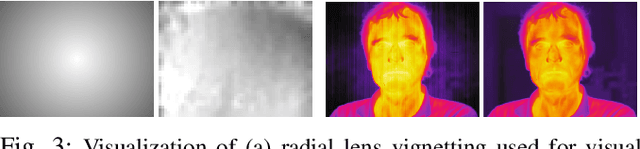

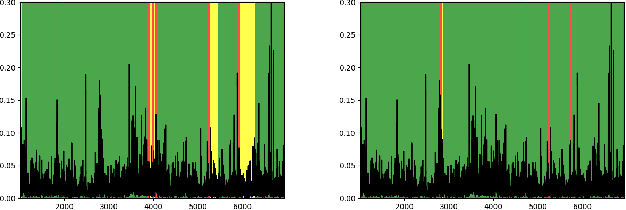

Online Photometric Calibration of Automatic Gain Thermal Infrared Cameras

Jan 11, 2021

Abstract: Thermal infrared cameras are increasingly being used in various applications such as robot vision, industrial inspection and medical imaging, thanks to their improved resolution and portability. However, the performance of traditional computer vision techniques developed for electro-optical imagery does not directly translate to the thermal domain for two major reasons: these algorithms require photometric assumptions to hold, and methods for photometric calibration of RGB cameras cannot be applied to thermal-infrared cameras due to differences in data acquisition and sensor phenomenology. In this paper, we take a step in this direction and introduce a novel algorithm for online photometric calibration of thermal-infrared cameras. Our proposed method does not require any specific driver/hardware support and hence can be applied to any commercial off-the-shelf thermal IR camera. We present this in the context of visual odometry and SLAM algorithms, and demonstrate the efficacy of our proposed system through extensive experiments on both standard benchmark datasets and real-world field tests with a thermal-infrared camera in natural outdoor environments.
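The abstract does not state the calibration model, so the following is only an illustrative sketch of one common way to compensate for automatic gain: assume an affine relation `dst ≈ a * src + b` between the intensities of corresponding pixels in two frames and fit `a` and `b` by ordinary least squares. The function name, the affine model, and the sample values are assumptions, not the paper's algorithm.

```python
def fit_gain_offset(src, dst):
    """Least-squares fit of dst ≈ a * src + b over corresponding intensities.

    src, dst: equal-length sequences of intensities sampled at matched
    pixels in two frames (how the matches are found is out of scope here).
    Returns (a, b).
    """
    n = len(src)
    if n != len(dst) or n < 2:
        raise ValueError("need at least two matched intensity pairs")
    mean_s = sum(src) / n
    mean_d = sum(dst) / n
    var_s = sum((s - mean_s) ** 2 for s in src)
    if var_s == 0:
        raise ValueError("source intensities are constant; gain is unobservable")
    cov = sum((s - mean_s) * (d - mean_d) for s, d in zip(src, dst))
    a = cov / var_s
    b = mean_d - a * mean_s
    return a, b


# Synthetic check: the second frame is the first with gain 1.5 and offset 5.
frame1 = [10.0, 20.0, 30.0, 40.0]
frame2 = [1.5 * v + 5.0 for v in frame1]
gain, offset = fit_gain_offset(frame1, frame2)

# Map frame2 back into frame1's photometric scale.
corrected = [(v - offset) / gain for v in frame2]
```

Once the intensities are brought to a common scale like this, the photometric consistency assumed by visual odometry and SLAM methods is closer to holding across frames.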

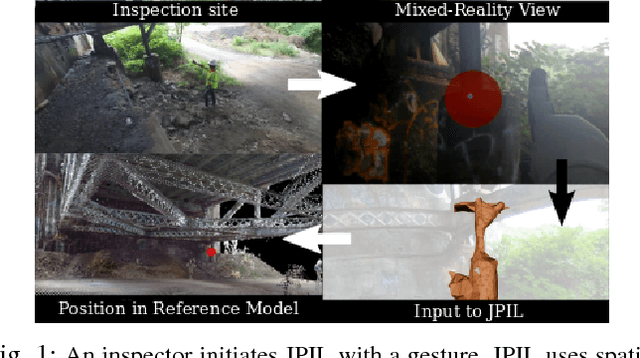

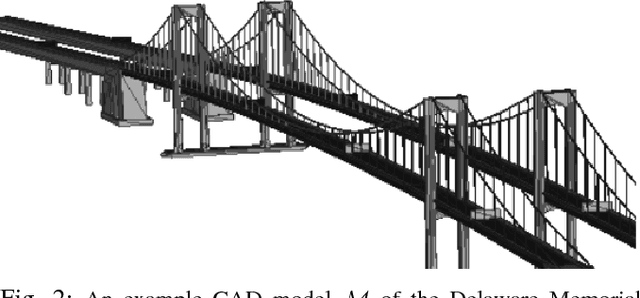

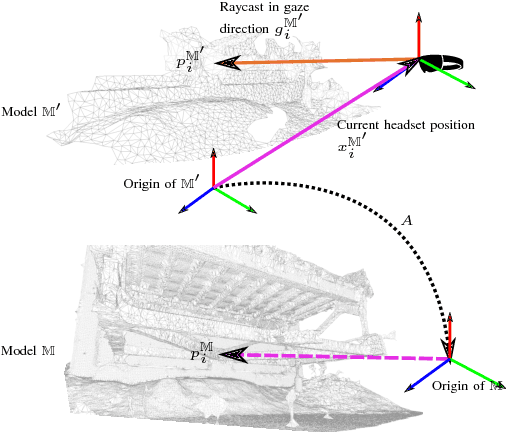

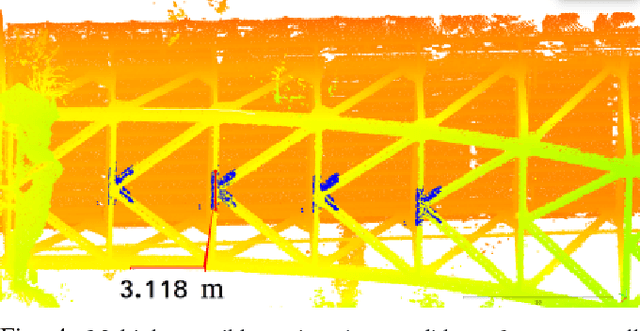

Joint Point Cloud and Image Based Localization For Efficient Inspection in Mixed Reality

Nov 05, 2018

Abstract: This paper introduces a method of structure inspection using mixed-reality headsets to reduce the human effort in reporting accurate inspection information such as fault locations in 3D coordinates. Prior to every inspection, the headset needs to be localized. While external pose estimation and fiducial marker based localization would require setup, maintenance, and manual calibration, marker-free self-localization can be achieved using the onboard depth sensor and camera. However, due to the limited depth sensor range of portable mixed-reality headsets like Microsoft HoloLens, localization based on simple point cloud registration (sPCR) would require extensive mapping of the environment. Also, localization based on camera images would face issues such as stereo ambiguities and hence depend on the viewpoint. We thus introduce a novel approach to Joint Point Cloud and Image-based Localization (JPIL) for mixed-reality headsets that uses visual cues and headset orientation to register small, partially overlapped point clouds and save significant manual labor and time in environment mapping. Our empirical results compared to sPCR show an average 10-fold reduction in the required overlap surface area, which could potentially save on average 20 minutes per inspection. JPIL is not restricted to inspection tasks; it can also be essential in enabling intuitive human-robot interaction for spatial mapping and scene understanding in conjunction with other agents like autonomous robotic systems that are increasingly being deployed in outdoor environments for applications like structural inspection.

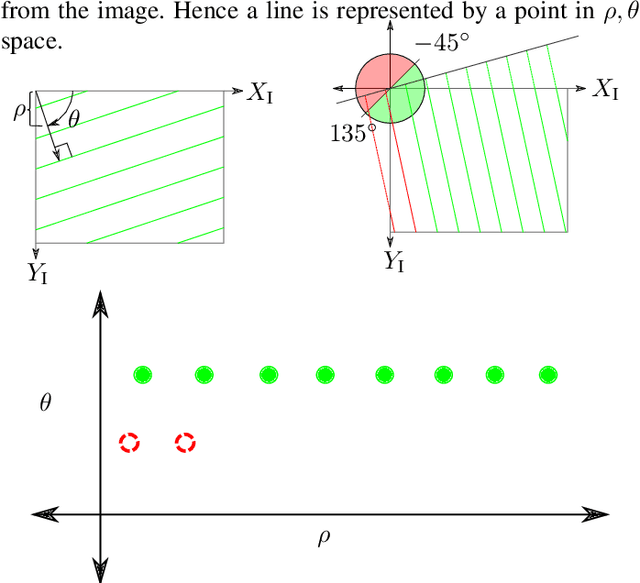

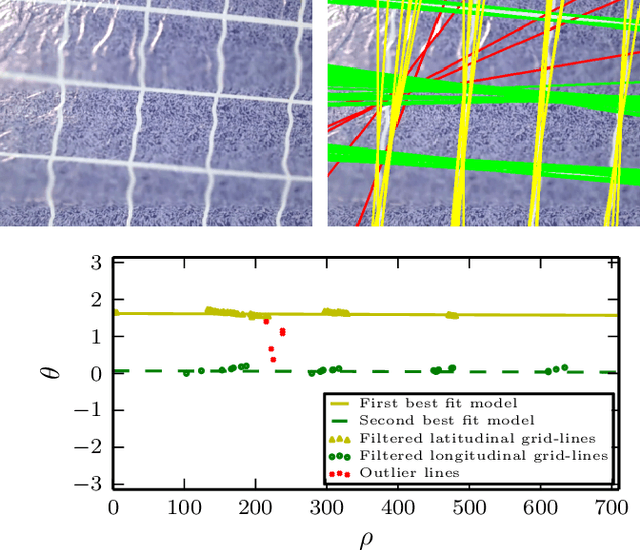

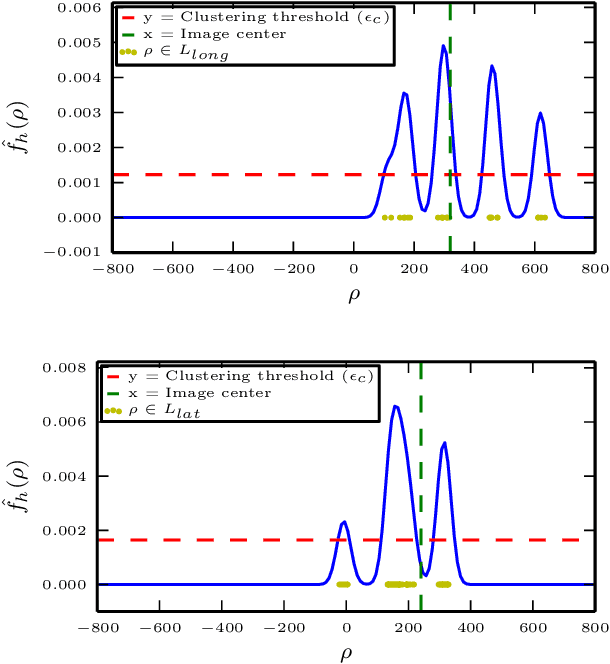

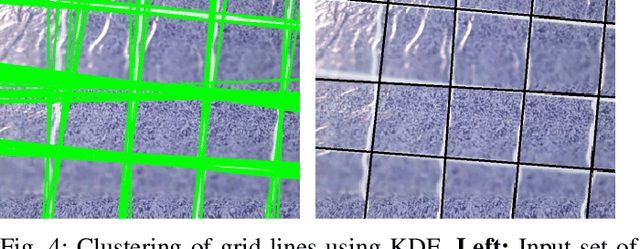

5-DoF Monocular Visual Localization Over Grid Based Floor

Sep 14, 2017

Abstract: Reliable localization is one of the most important parts of an MAV system. Localization in an indoor GPS-denied environment is a relatively difficult problem. Current vision-based algorithms track optical features to calculate odometry. We present a novel localization method which can be applied in an environment having orthogonal sets of equally spaced lines that form a grid. With the help of a monocular camera and using the properties of the grid lines below, the MAV is localized inside each sub-cell of the grid and consequently over the entire grid, giving a relative localization over the grid. We demonstrate the effectiveness of our system onboard a customized MAV platform. The experimental results show that our method provides accurate 5-DoF localization over grid lines and that it can be performed in real time.
