Luis Sentis

On the Performance of Jerk-Constrained Time-Optimal Trajectory Planning for Industrial Manipulators

Apr 11, 2024

Abstract:Jerk-constrained trajectories offer a wide range of advantages that collectively improve the performance of robotic systems, including increased energy efficiency, durability, and safety. In this paper, we present a novel approach to jerk-constrained time-optimal trajectory planning (TOTP), which follows a specified path while satisfying up to third-order constraints to ensure safety and smooth motion. One significant challenge in jerk-constrained TOTP is a non-convex formulation arising from the inclusion of third-order constraints. Approximating inequality constraints can be particularly challenging because the resulting solutions may violate the actual constraints. We address this problem by leveraging convexity within the proposed formulation to form conservative inequality constraints. We then obtain the desired trajectories by solving an $\boldsymbol n$-dimensional Sequential Linear Program (SLP) iteratively until convergence. Lastly, we evaluate in a real robot the performance of trajectories generated with and without jerk limits in terms of peak power, torque efficiency, and tracking capability.

Human Stress Response and Perceived Safety during Encounters with Quadruped Robots

Mar 25, 2024

Abstract:Despite the rise of mobile robot deployments in home and work settings, perceived safety of users and bystanders is understudied in the human-robot interaction (HRI) literature. To address this, we present a study designed to identify elements of a human-robot encounter that correlate with observed stress response. Stress is a key component of perceived safety and is strongly associated with human physiological response. In this study a Boston Dynamics Spot and a Unitree Go1 navigate autonomously through a shared environment occupied by human participants wearing multimodal physiological sensors to track their electrocardiography (ECG) and electrodermal activity (EDA). The encounters are varied through several trials and participants self-rate their stress levels after each encounter. The study resulted in a multidimensional dataset archiving various objective and subjective aspects of a human-robot encounter, containing insights for understanding perceived safety in such encounters. To this end, acute stress responses were decoded from the human participants' ECG and EDA and compared across different human-robot encounter conditions. Statistical analysis of data indicate that on average (1) participants feel more stress during encounters compared to baselines, (2) participants feel more stress encountering multiple robots compared to a single robot and (3) participants stress increases during navigation behavior compared with search behavior.

LIVE: Lidar Informed Visual Search for Multiple Objects with Multiple Robots

Oct 06, 2023Abstract:This paper introduces LIVE: Lidar Informed Visual Search focused on the problem of multi-robot (MR) planning and execution for robust visual detection of multiple objects. We perform extensive real-world experiments with a two-robot team in an indoor apartment setting. LIVE acts as a perception module that detects unmapped obstacles, or Short Term Features (STFs), in Lidar observations. STFs are filtered, resulting in regions to be visually inspected by modifying plans online. Lidar Coverage Path Planning (CPP) is employed for generating highly efficient global plans for heterogeneous robot teams. Finally, we present a data model and a demonstration dataset, which can be found by visiting our project website https://sites.google.com/view/live-iros2023/home.

Learned Contextual LiDAR Informed Visual Search in Unseen Environments

Sep 25, 2023

Abstract:This paper presents LIVES: LiDAR Informed Visual Search, an autonomous planner for unknown environments. We consider the pixel-wise environment perception problem where one is given 2D range data from LiDAR scans and must label points contextually as map or non-map in the surroundings for visual planning. LIVES classifies incoming 2D scans from the wide Field of View (FoV) LiDAR in unseen environments without prior map information. The map-generalizable classifier is trained from expert data collected using a simple cart platform equipped with a map-based classifier in real environments. A visual planner takes contextual data from scans and uses this information to plan viewpoints more likely to yield detection of the search target. While conventional frontier based methods for LiDAR and multi sensor exploration effectively map environments, they are not tailored to search for people indoors, which we investigate in this paper. LIVES is baselined against several existing exploration methods in simulation to verify its performance. Finally, it is validated in real-world experiments with a Spot robot in a 20x30m indoor apartment setting. Videos of experimental validation can be found on our project website at https://sites.google.com/view/lives-icra-2024/home.

Deep Imitation Learning for Humanoid Loco-manipulation through Human Teleoperation

Sep 05, 2023Abstract:We tackle the problem of developing humanoid loco-manipulation skills with deep imitation learning. The difficulty of collecting task demonstrations and training policies for humanoids with a high degree of freedom presents substantial challenges. We introduce TRILL, a data-efficient framework for training humanoid loco-manipulation policies from human demonstrations. In this framework, we collect human demonstration data through an intuitive Virtual Reality (VR) interface. We employ the whole-body control formulation to transform task-space commands by human operators into the robot's joint-torque actuation while stabilizing its dynamics. By employing high-level action abstractions tailored for humanoid loco-manipulation, our method can efficiently learn complex sensorimotor skills. We demonstrate the effectiveness of TRILL in simulation and on a real-world robot for performing various loco-manipulation tasks. Videos and additional materials can be found on the project page: https://ut-austin-rpl.github.io/TRILL.

Learning Contact-based Navigation in Crowds

Mar 02, 2023

Abstract:Navigation strategies that intentionally incorporate contact with humans (i.e. "contact-based" social navigation) in crowded environments are largely unexplored even though collision-free social navigation is a well studied problem. Traditional social navigation frameworks require the robot to stop suddenly or "freeze" whenever a collision is imminent. This paradigm poses two problems: 1) freezing while navigating a crowd may cause people to trip and fall over the robot, resulting in more harm than the collision itself, and 2) in very dense social environments where collisions are unavoidable, such a control scheme would render the robot unable to move and preclude the opportunity to study how humans incorporate robots into these environments. However, if robots are to be meaningfully included in crowded social spaces, such as busy streets, subways, stores, or other densely populated locales, there may not exist trajectories that can guarantee zero collisions. Thus, adoption of robots in these environments requires the development of minimally disruptive navigation plans that can safely plan for and respond to contacts. We propose a learning-based motion planner and control scheme to navigate dense social environments using safe contacts for an omnidirectional mobile robot. The planner is evaluated in simulation over 360 trials with crowd densities varying between 0.0 and 1.6 people per square meter. Our navigation scheme is able to use contact to safely navigate in crowds of higher density than has been previously reported, to our knowledge.

Sample Efficient Dynamics Learning for Symmetrical Legged Robots:Leveraging Physics Invariance and Geometric Symmetries

Oct 13, 2022

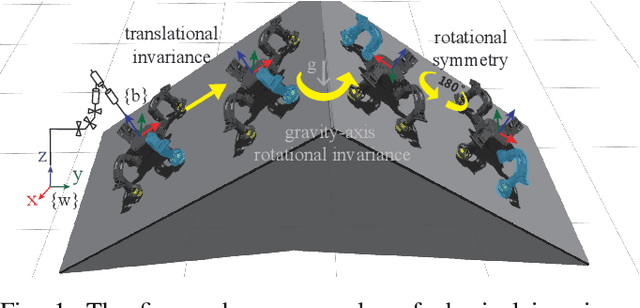

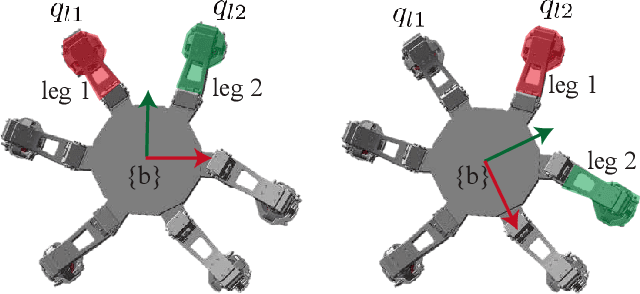

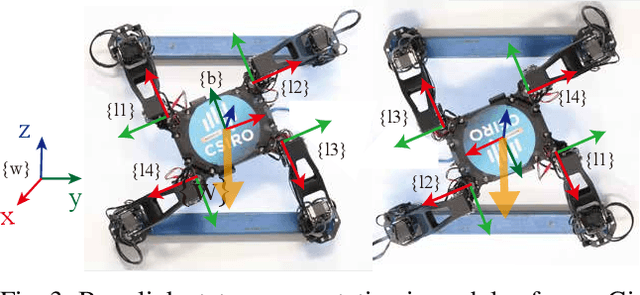

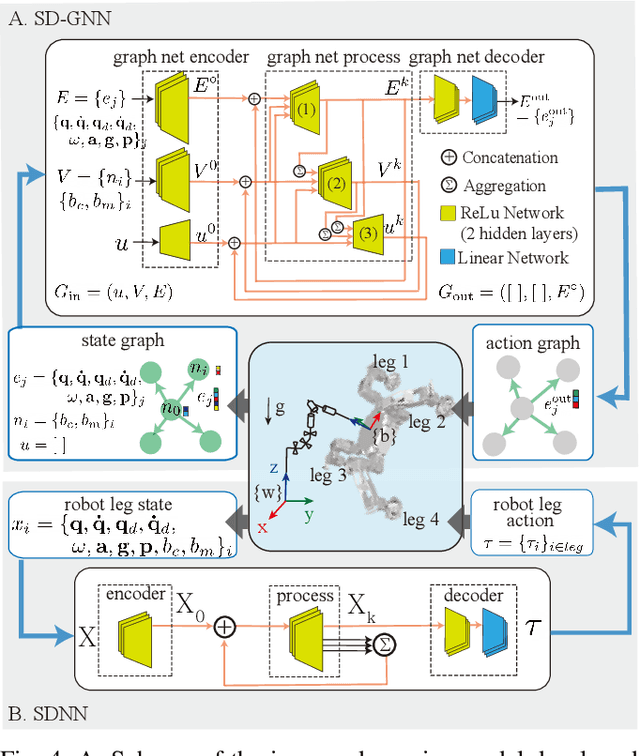

Abstract:Model generalization of the underlying dynamics is critical for achieving data efficiency when learning for robot control. This paper proposes a novel approach for learning dynamics leveraging the symmetry in the underlying robotic system, which allows for robust extrapolation from fewer samples. Existing frameworks that represent all data in vector space fail to consider the structured information of the robot, such as leg symmetry, rotational symmetry, and physics invariance. As a result, these schemes require vast amounts of training data to learn the system's redundant elements because they are learned independently. Instead, we propose considering the geometric prior by representing the system in symmetrical object groups and designing neural network architecture to assess invariance and equivariance between the objects. Finally, we demonstrate the effectiveness of our approach by comparing the generalization to unseen data of the proposed model and the existing models. We also implement a controller of a climbing robot based on learned inverse dynamics models. The results show that our method generates accurate control inputs that help the robot reach the desired state while requiring less training data than existing methods.

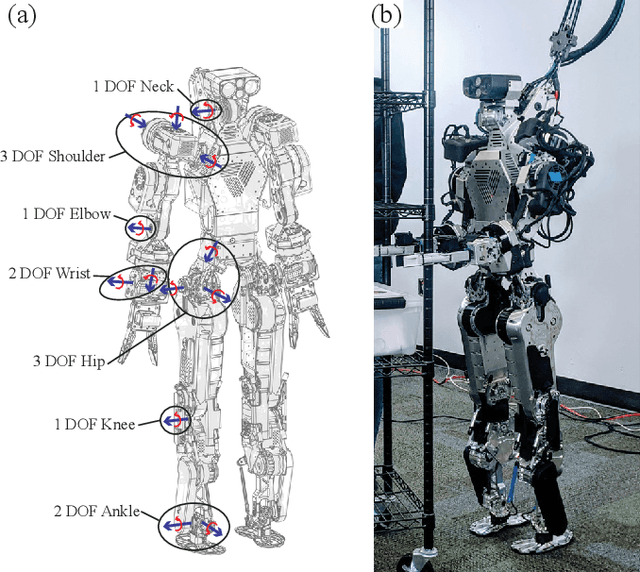

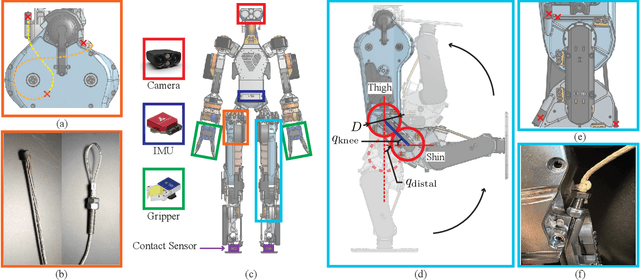

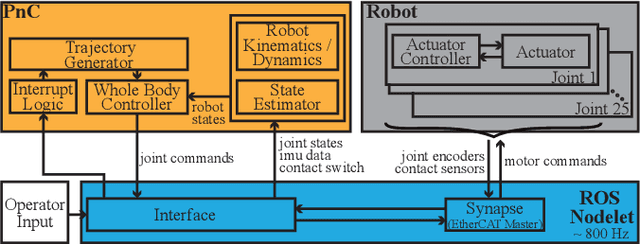

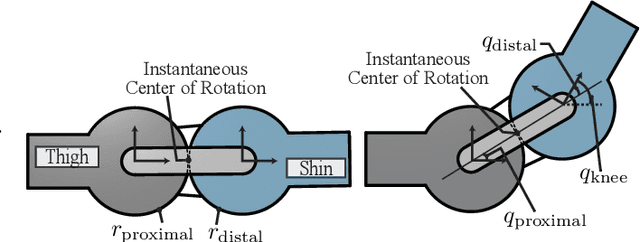

Control and Evaluation of a Humanoid Robot with Rolling Contact Knees

Oct 03, 2022

Abstract:In this paper, we introduce the humanoid robot DRACO 3 by providing a high-level description of its design and control. This robot features proximal actuation and mechanical artifacts to provide a high range of hip, knee and ankle motion. Its versatile design brings interesting problems as it requires a more elaborate control system to perform its motions. For this reason, we introduce a whole body controller (WBC) with support for rolling contact joints and show how it can be easily integrated into our previously presented open-source Planning and Control (PnC) framework. We then validate our controller experimentally on DRACO 3 by showing preliminary results carrying out two postural tasks. Lastly, we analyze the impact of the proximal actuation design and show where it stands in comparison to other adult-size humanoids.

Real-Time Model Predictive Control for Industrial Manipulators with Singularity-Tolerant Hierarchical Task Control

Sep 23, 2022

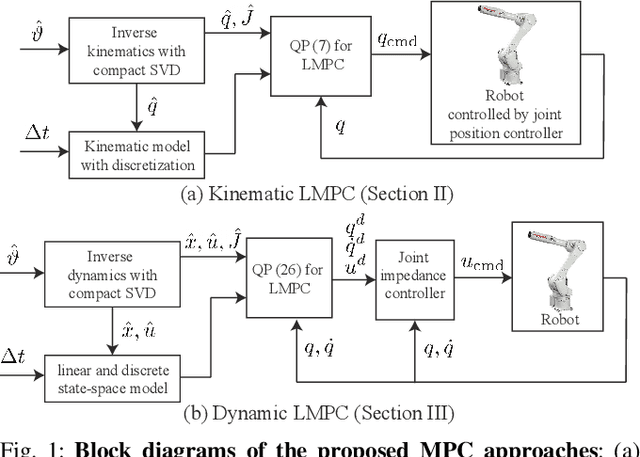

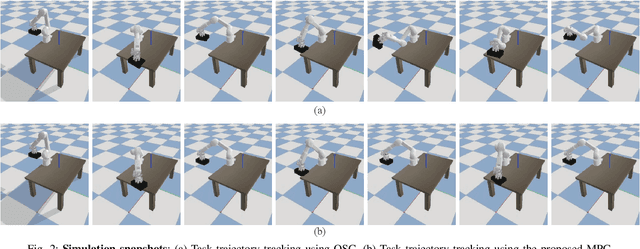

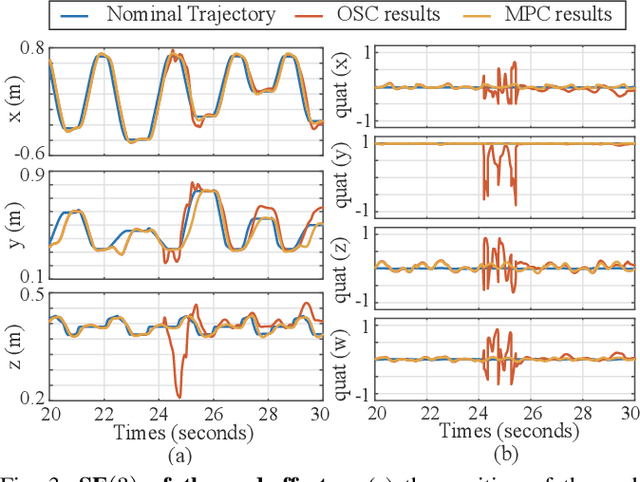

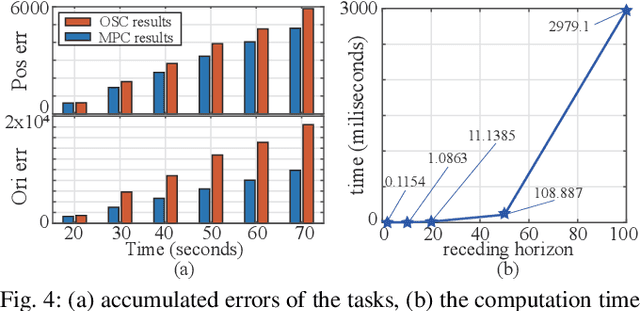

Abstract:This paper proposes a real-time model predictive control (MPC) scheme to execute multiple tasks using robots over a finite-time horizon. In industrial robotic applications, we must carefully consider multiple constraints for avoiding joint position, velocity, and torque limits. In addition, singularity-free and smooth motions require executing tasks continuously and safely. Instead of formulating nonlinear MPC problems, we devise linear MPC problems using kinematic and dynamic models linearized along nominal trajectories produced by hierarchical controllers. These linear MPC problems are solvable via the use of Quadratic Programming; therefore, we significantly reduce the computation time of the proposed MPC framework so the resulting update frequency is higher than 1 kHz. Our proposed MPC framework is more efficient in reducing task tracking errors than a baseline based on operational space control (OSC). We validate our approach in numerical simulations and in real experiments using an industrial manipulator. More specifically, we deploy our method in two practical scenarios for robotic logistics: 1) controlling a robot carrying heavy payloads while accounting for torque limits, and 2) controlling the end-effector while avoiding singularities.

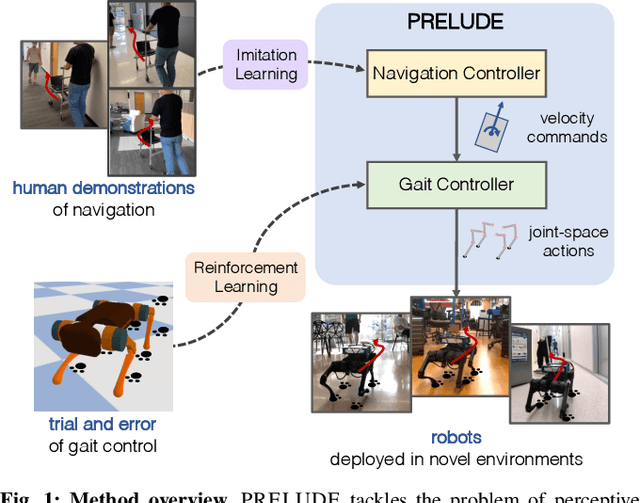

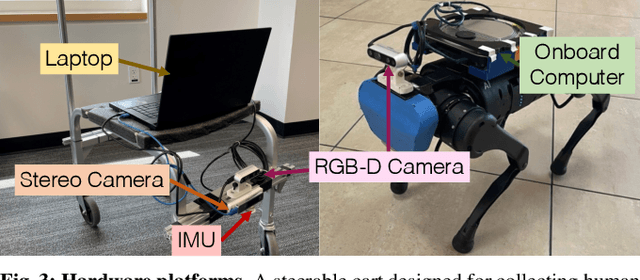

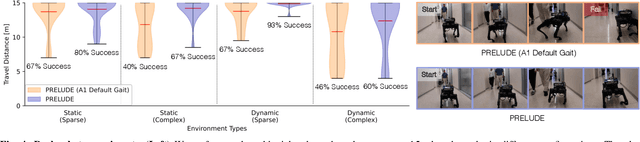

Learning to Walk by Steering: Perceptive Quadrupedal Locomotion in Dynamic Environments

Sep 19, 2022

Abstract:We tackle the problem of perceptive locomotion in dynamic environments. In this problem, a quadrupedal robot must exhibit robust and agile walking behaviors in response to environmental clutter and moving obstacles. We present a hierarchical learning framework, named PRELUDE, which decomposes the problem of perceptive locomotion into high-level decision-making to predict navigation commands and low-level gait generation to realize the target commands. In this framework, we train the high-level navigation controller with imitation learning on human demonstrations collected on a steerable cart and the low-level gait controller with reinforcement learning (RL). Therefore, our method can acquire complex navigation behaviors from human supervision and discover versatile gaits from trial and error. We demonstrate the effectiveness of our approach in simulation and with hardware experiments. Video and code can be found on https://ut-austin-rpl.github.io/PRELUDE.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge