Li Niu

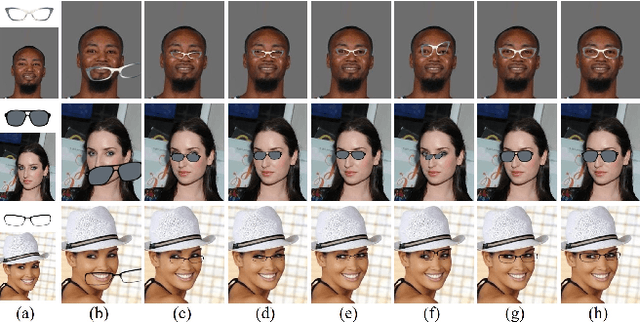

Few-shot Image Generation Using Discrete Content Representation

Jul 22, 2022

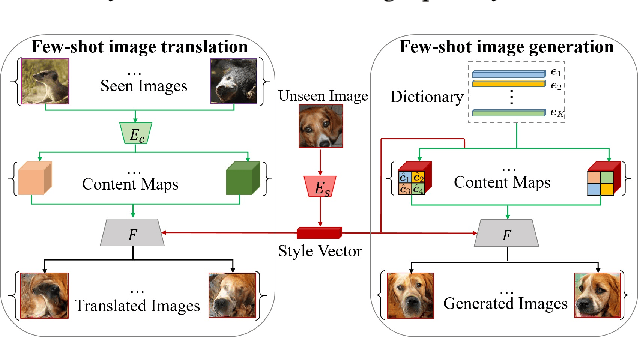

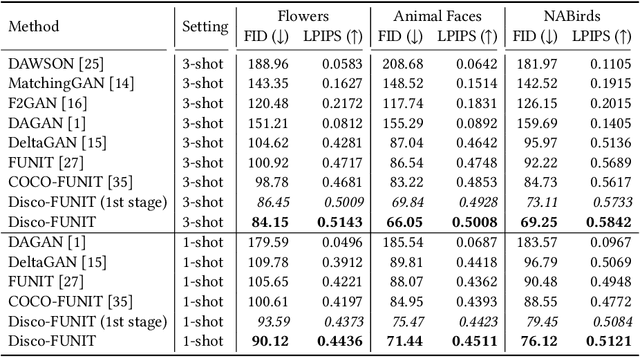

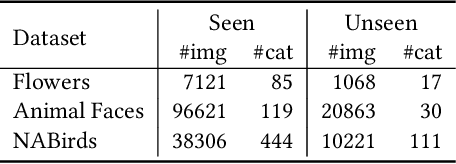

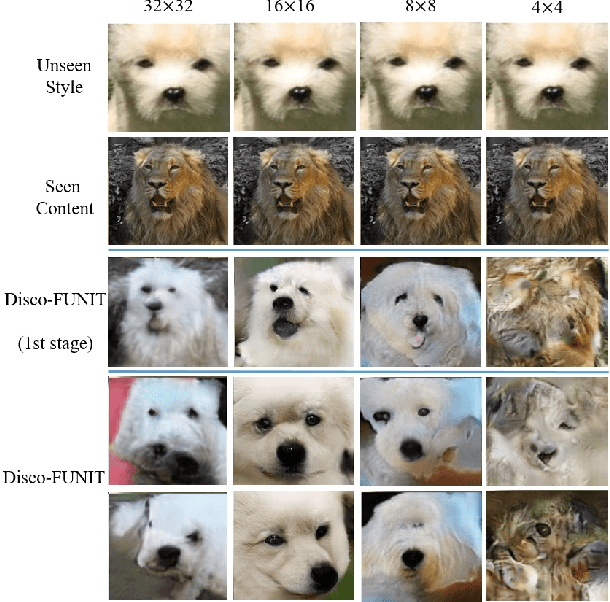

Abstract: Few-shot image generation and few-shot image translation are two related tasks, both of which aim to generate new images for an unseen category from only a few images. In this work, we make the first attempt to adapt a few-shot image translation method to the few-shot image generation task. Few-shot image translation disentangles an image into a style vector and a content map. An unseen style vector can be combined with different seen content maps to produce different images. However, this approach needs to store seen images to provide content maps, and the unseen style vector may be incompatible with the seen content maps. To adapt it to the few-shot image generation task, we learn a compact dictionary of local content vectors by quantizing continuous content maps into discrete content maps instead of storing seen images. Furthermore, we model the autoregressive distribution of the discrete content map conditioned on the style vector, which can alleviate the incompatibility between content map and style vector. Qualitative and quantitative results on three real datasets demonstrate that our model can produce images of higher diversity and fidelity for unseen categories than previous methods.
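The quantization step mentioned in the abstract can be illustrated with a minimal sketch. It assumes a plain nearest-neighbor lookup under squared Euclidean distance, which is a common way to quantize against a learned dictionary; the abstract does not state the exact rule the authors use. The function name `quantize`, the toy `codebook`, and the 2x2 `content_map` are all hypothetical.

```python
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def quantize(content_map: Sequence[Sequence[Vector]],
             codebook: Sequence[Vector]) -> List[List[int]]:
    """Replace each local content vector with the index of its nearest codebook entry.

    Assumption: nearest means smallest squared Euclidean distance.
    """
    def sq_dist(a: Vector, b: Vector) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return [
        [min(range(len(codebook)), key=lambda k: sq_dist(vec, codebook[k]))
         for vec in row]
        for row in content_map
    ]


# Toy dictionary with three 2-D entries (hypothetical values).
codebook = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0)]

# Toy 2x2 continuous content map; each cell is one local content vector.
content_map = [
    [(0.1, -0.1), (0.9, 1.2)],
    [(0.2, 0.8), (0.6, 0.7)],
]

# The discrete content map is a grid of dictionary indices.
indices = quantize(content_map, codebook)
print(indices)
```

Storing only the index grid and the dictionary is what removes the need to keep seen images around; a sequence model over these indices could then be conditioned on a style vector, as the abstract describes.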

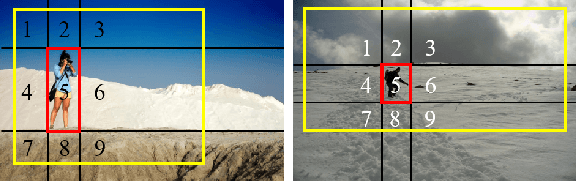

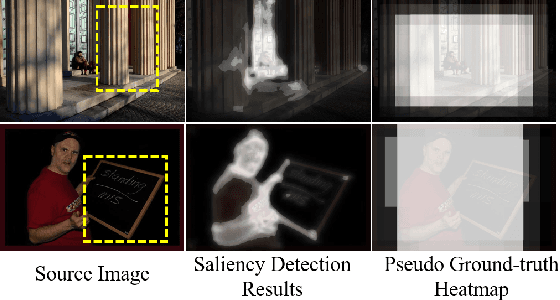

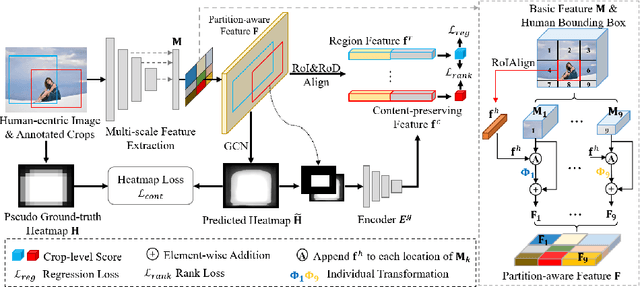

Human-centric Image Cropping with Partition-aware and Content-preserving Features

Jul 21, 2022

Abstract: Image cropping aims to find visually appealing crops in an image, which is an important yet challenging task. In this paper, we consider a specific and practical application: human-centric image cropping, which focuses on the depiction of a person. To this end, we propose a human-centric image cropping method with two novel feature designs for the candidate crop: a partition-aware feature and a content-preserving feature. For the partition-aware feature, we divide the whole image into nine partitions based on the human bounding box and treat different partitions in a candidate crop differently, conditioned on the human information. For the content-preserving feature, we predict a heatmap indicating the important content to be included in a good crop, and extract the geometric relation between the heatmap and a candidate crop. Extensive experiments demonstrate that our method performs favorably against state-of-the-art image cropping methods on the human-centric image cropping task. Code is available at https://github.com/bcmi/Human-Centric-Image-Cropping.
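One plausible reading of "nine partitions based on the human bounding box" is a 3x3 grid obtained by extending the four edges of the box across the image. The sketch below assumes that reading; the abstract does not spell out the scheme, and the function name `nine_partitions` and the example sizes are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), with x2 and y2 exclusive


def nine_partitions(width: int, height: int, human_box: Box) -> List[Box]:
    """Split an image into a 3x3 grid by extending the edges of the human box.

    Partitions are returned in row-major order, so index 4 is the human box itself.
    """
    x1, y1, x2, y2 = human_box
    if not (0 <= x1 < x2 <= width and 0 <= y1 < y2 <= height):
        raise ValueError("human_box must lie inside the image")
    xs = [0, x1, x2, width]
    ys = [0, y1, y2, height]
    return [
        (xs[col], ys[row], xs[col + 1], ys[row + 1])
        for row in range(3)
        for col in range(3)
    ]


# Hypothetical 100x80 image with a person occupying (30, 20) to (60, 70).
partitions = nine_partitions(100, 80, (30, 20, 60, 70))
for box in partitions:
    print(box)
```

When the human box touches an image border, some partitions have zero area; a real implementation would need to decide how to treat them.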

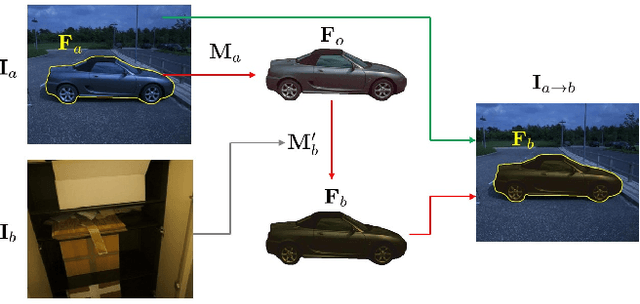

Spatial Transformation for Image Composition via Correspondence Learning

Jul 06, 2022

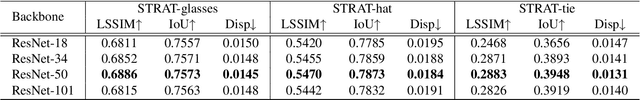

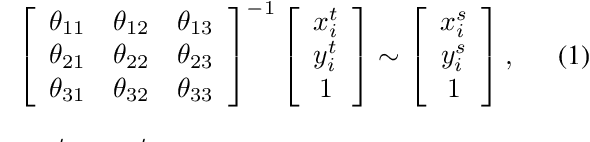

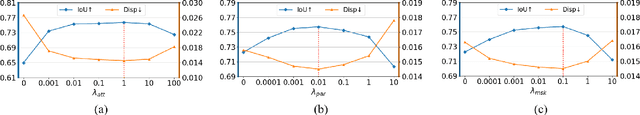

Abstract: When using cut-and-paste to acquire a composite image, the geometry inconsistency between foreground and background may severely harm its fidelity. To address the geometry inconsistency in composite images, several existing works learned to warp the foreground object for geometric correction. However, the absence of an annotated dataset results in unsatisfactory performance and unreliable evaluation. In this work, we contribute a Spatial TRAnsformation for virtual Try-on (STRAT) dataset covering three typical application scenarios. Moreover, previous works simply concatenate foreground and background as input without considering their mutual correspondence. Instead, we propose a novel correspondence learning network (CorrelNet) to model the correspondence between foreground and background using cross-attention maps, based on which we can predict the target coordinate on the background that each source coordinate of the foreground should be mapped to. Then, the warping parameters of the foreground object can be derived from pairs of source and target coordinates. Additionally, we learn a filtering mask to eliminate noisy pairs of coordinates in order to estimate more accurate warping parameters. Extensive experiments on our STRAT dataset demonstrate that our proposed CorrelNet performs favorably against previous methods.
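The idea of deriving warping parameters from filtered coordinate pairs can be sketched as a small least-squares fit. The abstract does not say which transformation family is used, so this example assumes the simplest useful one: an independent scale and translation per axis. It also assumes a hard 0/1 mask. The function name `fit_scale_translation` and all sample coordinates are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def fit_scale_translation(src: Sequence[Point],
                          dst: Sequence[Point],
                          mask: Sequence[int]) -> List[Tuple[float, float]]:
    """Least-squares fit of dst = scale * src + offset, separately for x and y.

    Pairs whose mask entry is 0 are treated as noisy and ignored.
    Returns [(scale_x, offset_x), (scale_y, offset_y)].
    """
    kept = [(s, d) for s, d, m in zip(src, dst, mask) if m]
    if len(kept) < 2:
        raise ValueError("need at least two unmasked coordinate pairs")

    params = []
    for axis in (0, 1):
        xs = [s[axis] for s, _ in kept]
        ys = [d[axis] for _, d in kept]
        mean_x = sum(xs) / len(xs)
        mean_y = sum(ys) / len(ys)
        var = sum((x - mean_x) ** 2 for x in xs)
        if var == 0:
            raise ValueError("source coordinates are degenerate along one axis")
        cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        scale = cov / var
        params.append((scale, mean_y - scale * mean_x))
    return params


# Four clean pairs generated by x' = 2x + 3, y' = 2y - 1, plus one noisy pair.
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (0.5, 0.5)]
dst = [(3.0, -1.0), (5.0, -1.0), (3.0, 1.0), (5.0, 1.0), (40.0, 40.0)]
mask = [1, 1, 1, 1, 0]  # the last pair is filtered out

(scale_x, offset_x), (scale_y, offset_y) = fit_scale_translation(src, dst, mask)
print(scale_x, offset_x, scale_y, offset_y)
```

With the noisy pair masked out the fit recovers the generating parameters; leaving it in would pull the estimate toward the outlier, which is the motivation the abstract gives for learning a filtering mask.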

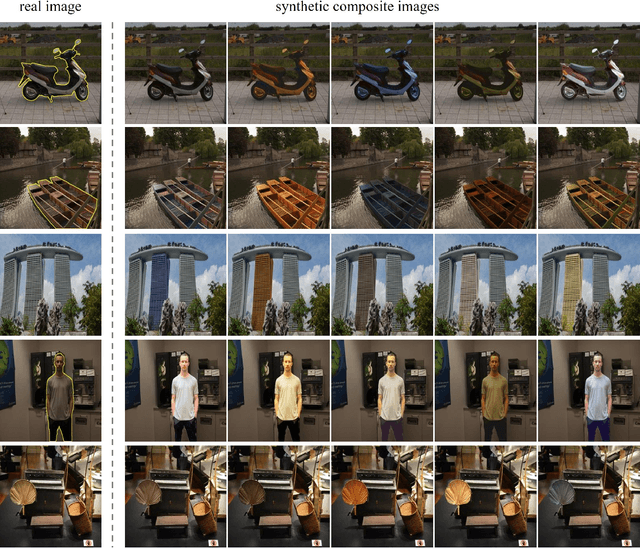

CcHarmony: Color-checker based Image Harmonization Dataset

Jun 01, 2022

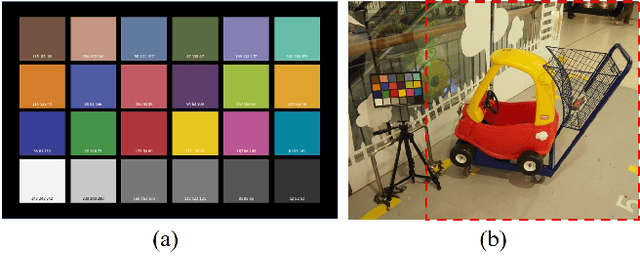

Abstract: Image harmonization aims to adjust the foreground in a composite image to make it compatible with the background, producing a more realistic and harmonious image. Training a deep image harmonization network requires abundant training data, but it is extremely difficult to acquire training pairs of composite images and ground-truth harmonious images. Therefore, existing works resort to adjusting the foreground appearance in a real image to create a synthetic composite image. However, such adjustment may not faithfully reflect the natural illumination change of the foreground. In this work, we explore a novel transitive way to construct an image harmonization dataset. Specifically, based on existing datasets with recorded illumination information, we first convert the foreground in a real image to the standard illumination condition, and then convert it to another illumination condition; the result is combined with the original background to form a synthetic composite image. In this manner, we construct an image harmonization dataset called ccHarmony, which is named after the color checker (cc). The dataset is available at https://github.com/bcmi/Image-Harmonization-Dataset-ccHarmony.

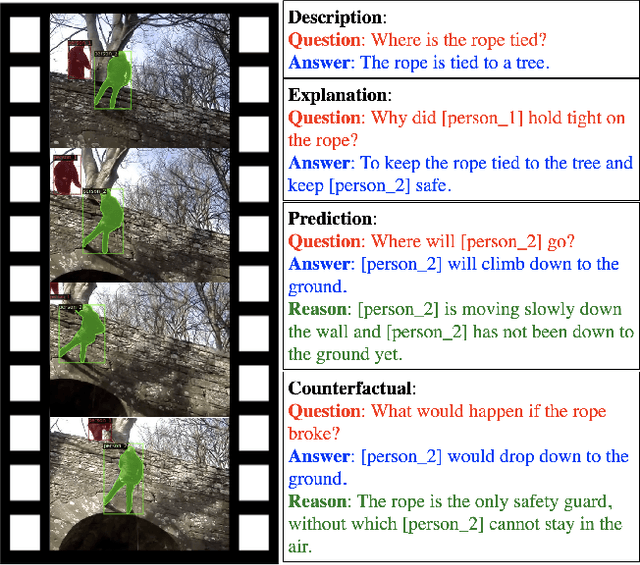

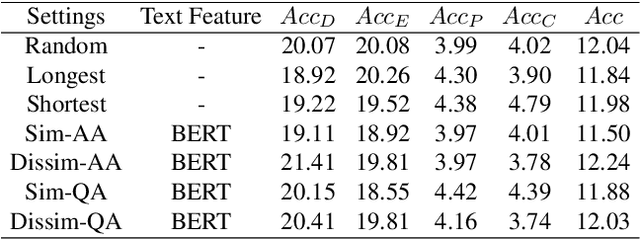

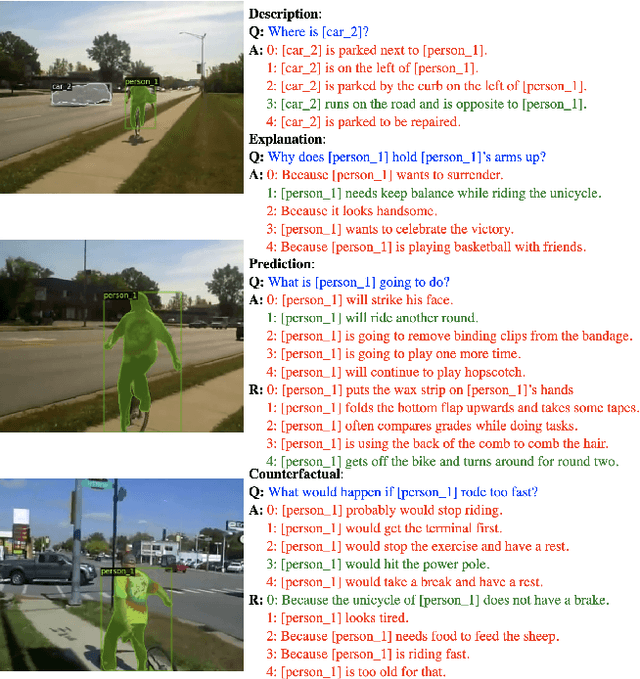

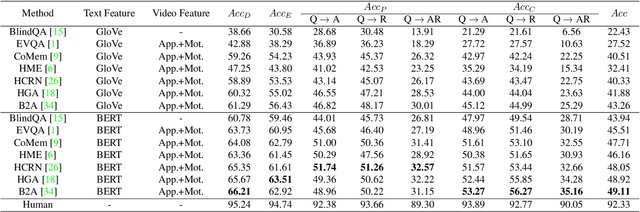

From Representation to Reasoning: Towards both Evidence and Commonsense Reasoning for Video Question-Answering

May 30, 2022

Abstract: Video understanding has achieved great success in representation learning, such as video captioning, video object grounding, and descriptive video question answering. However, current methods still struggle with video reasoning, including evidence reasoning and commonsense reasoning. To facilitate deeper video understanding towards video reasoning, we present the task of Causal-VidQA, which includes four types of questions ranging from scene description (description) to evidence reasoning (explanation) and commonsense reasoning (prediction and counterfactual). For commonsense reasoning, we set up a two-step solution: answering the question and providing a proper reason. Through extensive experiments on existing VideoQA methods, we find that the state-of-the-art methods are strong in description but weak in reasoning. We hope that Causal-VidQA can guide the research of video understanding from representation learning to deeper reasoning. The dataset and related resources are available at https://github.com/bcmi/Causal-VidQA.git.

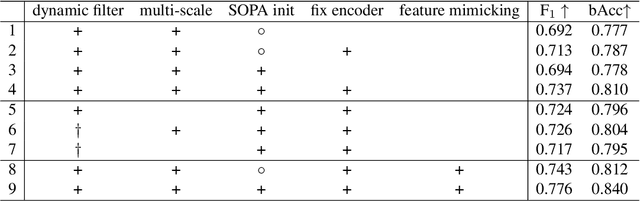

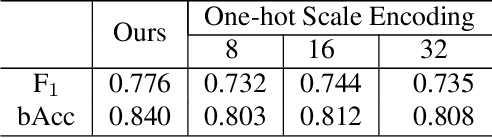

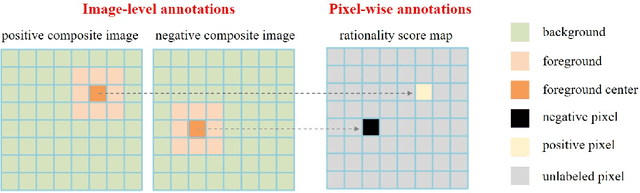

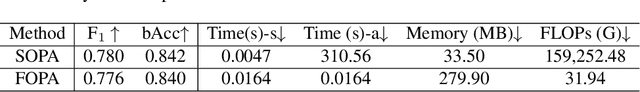

Fast Object Placement Assessment

May 28, 2022

Abstract: Object placement assessment (OPA) aims to predict the rationality score of a composite image in terms of the placement (e.g., scale, location) of the inserted foreground object. However, given a pair of scaled foreground and background, to enumerate all the reasonable locations, an existing OPA model needs to place the foreground at each location on the background and pass the obtained composite images through the model one at a time, which is very time-consuming. In this work, we investigate a new task named fast OPA. Specifically, provided with a scaled foreground and a background, we pass them through the model only once and predict the rationality scores for all locations. To accomplish this task, we propose a pioneering fast OPA model with several innovations (i.e., foreground dynamic filter, background prior transfer, and composite feature mimicking) to bridge the performance gap between the slow OPA model and the fast OPA model. Extensive experiments on the OPA dataset show that our proposed fast OPA model performs on par with the slow OPA model but runs significantly faster.

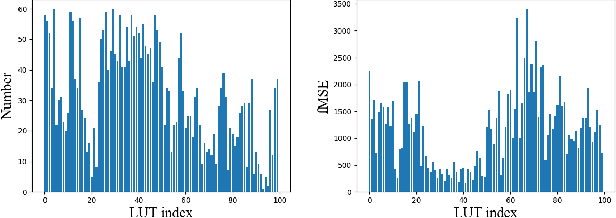

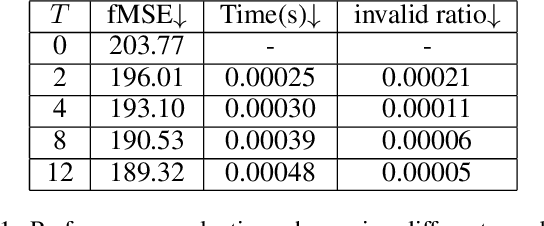

Deep Video Harmonization with Color Mapping Consistency

May 02, 2022

Abstract: Video harmonization aims to adjust the foreground of a composite video to make it compatible with the background. So far, video harmonization has received only limited attention, and there is no public dataset for video harmonization. In this work, we construct a new video harmonization dataset, HYouTube, by adjusting the foreground of real videos to create synthetic composite videos. Moreover, we consider temporal consistency in the video harmonization task. Unlike previous works, which establish spatial correspondence, we design a novel framework based on the assumption of color mapping consistency, which leverages the color mapping of neighboring frames to refine the current frame. Extensive experiments on our HYouTube dataset demonstrate the effectiveness of our proposed framework. Our dataset and code are available at https://github.com/bcmi/Video-Harmonization-Dataset-HYouTube.

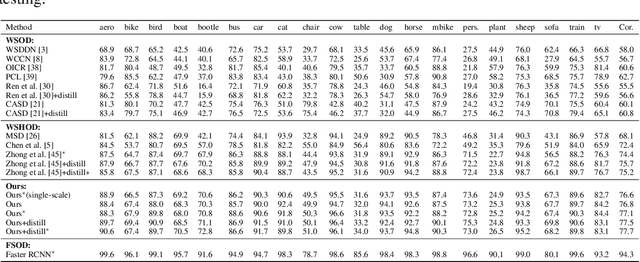

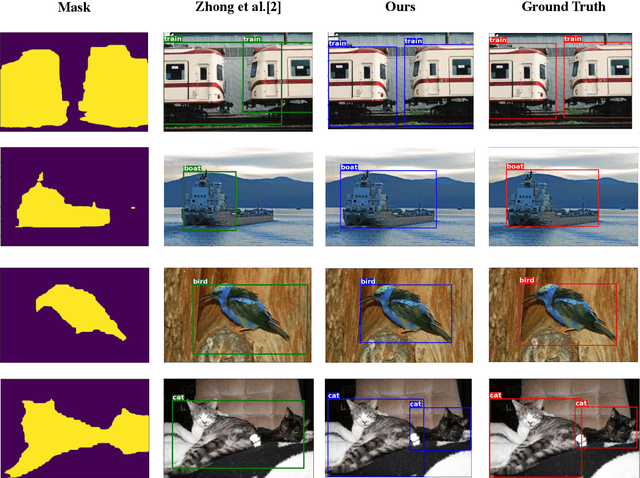

Mixed Supervised Object Detection by Transferring Mask Prior and Semantic Similarity

Oct 27, 2021

Abstract: Object detection has achieved promising success, but requires large-scale fully-annotated data, which is time-consuming and labor-intensive to collect. Therefore, we consider object detection with mixed supervision, which learns novel object categories using weak annotations with the help of full annotations of existing base object categories. Previous works using mixed supervision mainly learn class-agnostic objectness from fully-annotated categories, which can be transferred to upgrade the weak annotations to pseudo full annotations for novel categories. In this paper, we further transfer mask prior and semantic similarity to bridge the gap between novel categories and base categories. Specifically, the ability to use mask prior to help detect objects is learned from base categories and transferred to novel categories. Moreover, the semantic similarity between objects learned from base categories is transferred to denoise the pseudo full annotations for novel categories. Experimental results on three benchmark datasets demonstrate the effectiveness of our method over existing methods. Code is available at https://github.com/bcmi/TraMaS-Weak-Shot-Object-Detection.

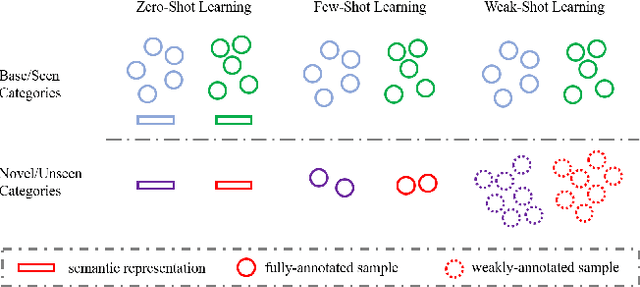

Weak Novel Categories without Tears: A Survey on Weak-Shot Learning

Oct 16, 2021

Abstract: Deep learning is a data-hungry approach, which requires massive training data. However, it is time-consuming and labor-intensive to collect abundant fully-annotated training data for all categories. Assuming the existence of base categories with adequate fully-annotated training samples, different paradigms requiring fewer training samples or weaker annotations for novel categories have attracted growing research interest. Among them, zero-shot (resp., few-shot) learning explores using zero (resp., a few) training samples for novel categories, which lowers the quantity requirement for novel categories. In contrast, weak-shot learning lowers the quality requirement for novel categories. Specifically, sufficient training samples are collected for novel categories, but they only have weak annotations. In different tasks, weak annotations take different forms (e.g., noisy labels for image classification, image labels for object detection, bounding boxes for segmentation), similar to the definitions in weakly supervised learning. Therefore, weak-shot learning can also be treated as weakly supervised learning with auxiliary fully supervised categories. In this paper, we discuss the existing weak-shot learning methodologies in different tasks and summarize the code at https://github.com/bcmi/Awesome-Weak-Shot-Learning.

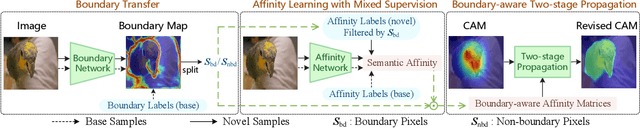

Weak-shot Semantic Segmentation by Transferring Semantic Affinity and Boundary

Oct 04, 2021

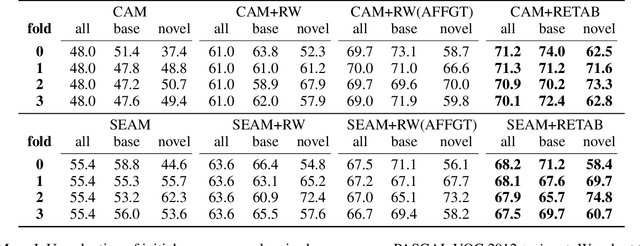

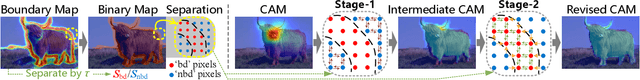

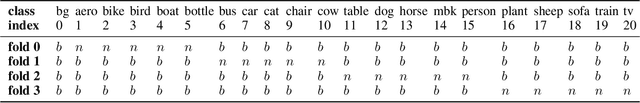

Abstract: Weakly-supervised semantic segmentation (WSSS) with image-level labels has been widely studied to relieve the annotation burden of the traditional segmentation task. In this paper, we show that existing fully-annotated base categories can help segment objects of novel categories with only image-level labels, even if base and novel categories have no overlap. We refer to this task as weak-shot semantic segmentation, which could also be treated as WSSS with auxiliary fully-annotated categories. Recent advanced WSSS methods usually obtain class activation maps (CAMs) and refine them by affinity propagation. Based on the observation that semantic affinity and boundary are class-agnostic, we propose a method under the WSSS framework to transfer semantic affinity and boundary from base categories to novel ones. As a result, we find that pixel-level annotation of base categories can facilitate affinity learning and propagation, leading to higher-quality CAMs of novel categories. Extensive experiments on PASCAL VOC 2012 dataset demonstrate that our method significantly outperforms WSSS baselines on novel categories.