DeepGAR: Deep Graph Learning for Analogical Reasoning

Nov 19, 2022 · Chen Ling, Tanmoy Chowdhury, Junji Jiang, Junxiang Wang, Xuchao Zhang, Haifeng Chen, Liang Zhao

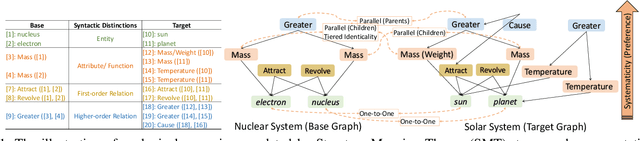

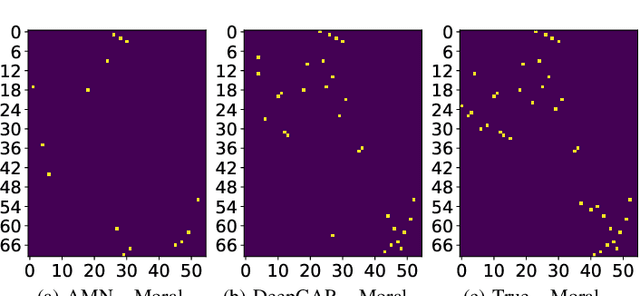

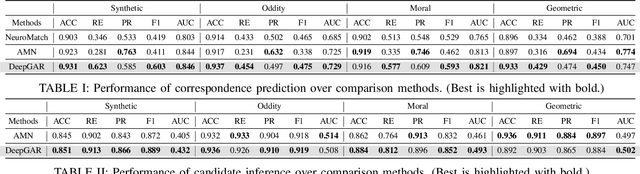

Analogical reasoning is the process of discovering and mapping correspondences from a target subject to a base subject. As the most well-known computational method of analogical reasoning, Structure-Mapping Theory (SMT) abstracts both target and base subjects into relational graphs and models the cognitive process of analogical reasoning as finding a corresponding subgraph (i.e., correspondence) in the target graph that is aligned with the base graph. However, incorporating deep learning into SMT is still under-explored due to two obstacles: 1) the combinatorial complexity of searching for the correspondence in the target graph; 2) the restriction of correspondence mining by various cognitive theory-driven constraints. To address both challenges, we propose a novel framework for Analogical Reasoning (DeepGAR) that identifies the correspondence between source and target domains while enforcing cognitive theory-driven constraints. Specifically, we design a geometric constraint embedding space to induce subgraph relations from node embeddings for efficient subgraph search. Furthermore, we develop novel learning and optimization strategies that identify, end-to-end, correspondences that are strictly consistent with the constraints driven by the cognitive theory. Extensive experiments on synthetic and real-world datasets demonstrate the effectiveness of the proposed DeepGAR over existing methods.
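The abstract does not say which geometry the constraint embedding space uses. As one hedged illustration, the sketch below assumes an order-embedding style constraint, in which a graph is treated as a subgraph of another when its embedding is elementwise no larger. The function name `order_violation` and the example vectors are hypothetical and are not taken from DeepGAR.

```python
def order_violation(sub_embedding, super_embedding):
    """Squared hinge penalty for breaking the assumed order constraint.

    ASSUMPTION (not from the paper): "sub is a subgraph of super" is encoded as
    sub_embedding[i] <= super_embedding[i] for every coordinate i.
    The penalty is 0 when the constraint holds and grows with each violation.
    """
    return sum(max(0.0, s - t) ** 2 for s, t in zip(sub_embedding, super_embedding))


# Hypothetical embeddings: the first pair satisfies the constraint, the second does not.
consistent = order_violation([1.0, 2.0], [2.0, 3.0])
violating = order_violation([3.0, 1.0], [2.0, 3.0])
print(consistent, violating)
```

With such a penalty, checking a candidate subgraph relation reduces to a coordinate-wise comparison of embeddings rather than a combinatorial search, which is the kind of efficiency the abstract alludes to.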

Source Localization of Graph Diffusion via Variational Autoencoders for Graph Inverse Problems

Jun 24, 2022 · Chen Ling, Junji Jiang, Junxiang Wang, Liang Zhao

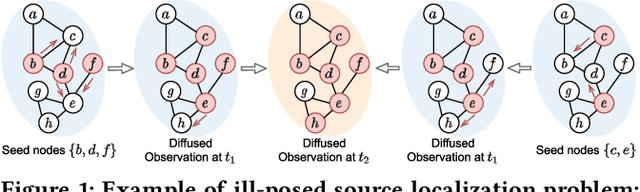

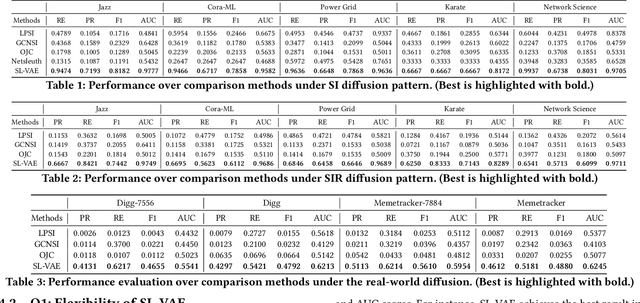

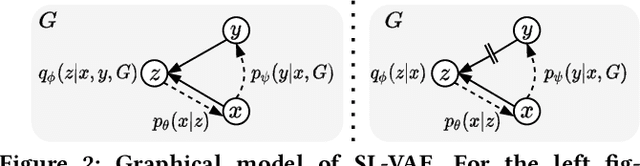

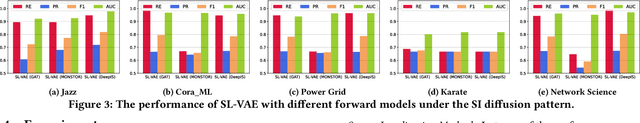

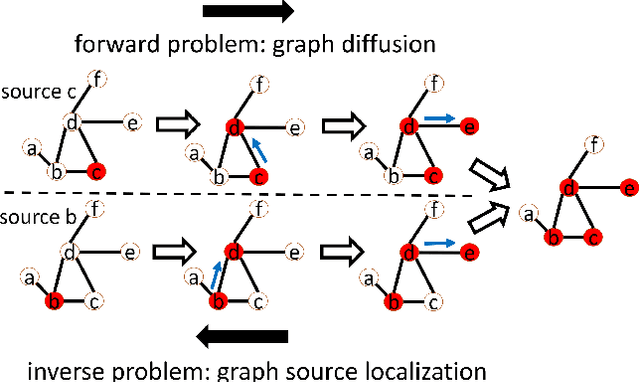

Graph diffusion problems, such as the propagation of rumors, computer viruses, or smart grid failures, are ubiquitous and have broad societal impact. Hence, it is often crucial to identify diffusion sources from the current graph diffusion observations. Despite its practical necessity and significance, source localization, as the inverse problem of graph diffusion, is extremely challenging because it is ill-posed: different sources may lead to the same graph diffusion patterns. Unlike most traditional source localization methods, this paper takes a probabilistic approach to account for the uncertainty of different candidate sources. This requires overcoming three challenges: 1) the uncertainty in graph diffusion source localization is hard to quantify; 2) the complex patterns of the graph diffusion sources are difficult to characterize probabilistically; 3) generalization under arbitrary underlying diffusion patterns is hard to impose. To solve these challenges, this paper presents a generic framework, Source Localization Variational AutoEncoder (SL-VAE), for locating the diffusion sources under arbitrary diffusion patterns. In particular, we propose a probabilistic model that leverages the forward diffusion estimation model along with deep generative models to approximate the diffusion source distribution for quantifying the uncertainty. SL-VAE further utilizes prior knowledge of the source-observation pairs to characterize the complex patterns of diffusion sources by a learned generative prior. Lastly, a unified objective that integrates the forward diffusion estimation model is derived to force the model to generalize under arbitrary diffusion patterns. Extensive experiments on 7 real-world datasets demonstrate the superiority of SL-VAE in reconstructing the diffusion sources, outperforming other methods by 20% on average in AUC score.
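The ill-posedness described above can be seen on a toy example. The sketch below is not SL-VAE; it assumes a deliberately simple deterministic diffusion rule (every infected node infects all of its neighbours at each step) on a hypothetical five-node path graph, and shows two different sources producing the same observation.

```python
def spread(adjacency, sources, steps):
    """Toy deterministic diffusion: each step, infected nodes infect all neighbours."""
    infected = set(sources)
    frontier = set(sources)
    for _ in range(steps):
        newly_infected = set()
        for node in frontier:
            for neighbour in adjacency[node]:
                if neighbour not in infected:
                    newly_infected.add(neighbour)
        infected |= newly_infected
        frontier = newly_infected
    return infected


# Hypothetical path graph 0 - 1 - 2 - 3 - 4.
adjacency = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}

observed_from_1 = spread(adjacency, {1}, steps=3)
observed_from_3 = spread(adjacency, {3}, steps=3)

# Both sources yield the same infected set, so the observation alone
# cannot tell them apart.
print(observed_from_1 == observed_from_3)
```

Because the final observation is identical for both candidate sources, a point estimate is arbitrary here, which motivates modelling a distribution over sources instead.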

An Invertible Graph Diffusion Neural Network for Source Localization

Jun 18, 2022 · Junxiang Wang, Junji Jiang, Liang Zhao

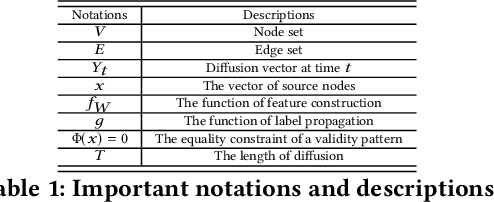

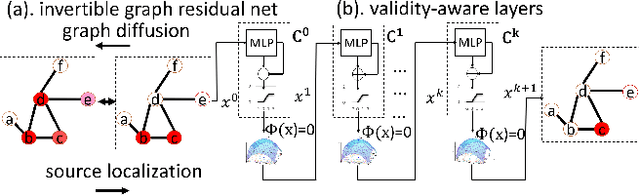

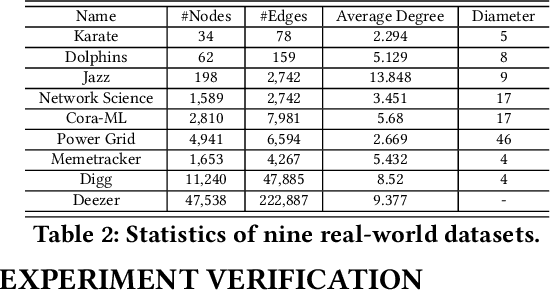

Localizing the source of graph diffusion phenomena, such as misinformation propagation, is an important yet extremely challenging task. Existing source localization models typically depend heavily on hand-crafted rules. Unfortunately, a large portion of the graph diffusion process in many applications is still unknown, so it is important to have expressive models that learn the underlying rules automatically. This paper aims to establish a generic framework of invertible graph diffusion models for source localization on graphs, namely Invertible Validity-aware Graph Diffusion (IVGD), to handle three major challenges: 1) the difficulty of leveraging knowledge in graph diffusion models to model their inverse processes in an end-to-end fashion, 2) the difficulty of ensuring the validity of the inferred sources, and 3) efficiency and scalability in source inference. Specifically, first, to inversely infer sources of graph diffusion, we propose a graph residual scenario to make existing graph diffusion models invertible with theoretical guarantees. Second, we develop a novel error compensation mechanism that learns to offset the errors of the inferred sources. Finally, to ensure the validity of the inferred sources, a new set of validity-aware layers has been devised to project inferred sources onto feasible regions by flexibly encoding constraints with unrolled optimization techniques. A linearization technique is proposed to improve the efficiency of the proposed layers. The convergence of the proposed IVGD is proven theoretically. Extensive experiments on nine real-world datasets demonstrate that IVGD significantly outperforms state-of-the-art comparison methods. We have released our code at https://github.com/xianggebenben/IVGD.
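One common way a residual map y = x + f(x) can be made invertible is to keep f contractive and recover x by fixed-point iteration. The sketch below illustrates that general idea only; it is not the IVGD implementation, and the function `residual`, the scaling factor 0.4, and the three-node path graph are assumptions chosen so that f has Lipschitz constant below 1.

```python
import math

# Hypothetical 3-node path graph 0 - 1 - 2, stored as neighbour lists.
NEIGHBOURS = {0: [1], 1: [0, 2], 2: [1]}


def residual(x):
    """Assumed contractive update: 0.4 * tanh(mean of neighbour values)."""
    return [
        0.4 * math.tanh(sum(x[j] for j in NEIGHBOURS[i]) / len(NEIGHBOURS[i]))
        for i in range(len(x))
    ]


def forward(x):
    """Residual diffusion step: y = x + f(x)."""
    return [xi + fi for xi, fi in zip(x, residual(x))]


def invert(y, iterations=50):
    """Recover x from y by iterating x <- y - f(x), which converges when f is contractive."""
    x = list(y)
    for _ in range(iterations):
        x = [yi - fi for yi, fi in zip(y, residual(x))]
    return x


source = [0.3, -1.2, 2.0]
observation = forward(source)
recovered = invert(observation)
reconstruction_error = max(abs(a - b) for a, b in zip(source, recovered))
print(reconstruction_error)
```

The iteration error shrinks by at least the contraction factor at every step, so a few dozen iterations are enough in this toy setting.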

Do Multi-Lingual Pre-trained Language Models Reveal Consistent Token Attributions in Different Languages?

Dec 23, 2021 · Junxiang Wang, Xuchao Zhang, Bo Zong, Yanchi Liu, Wei Cheng, Jingchao Ni, Haifeng Chen, Liang Zhao

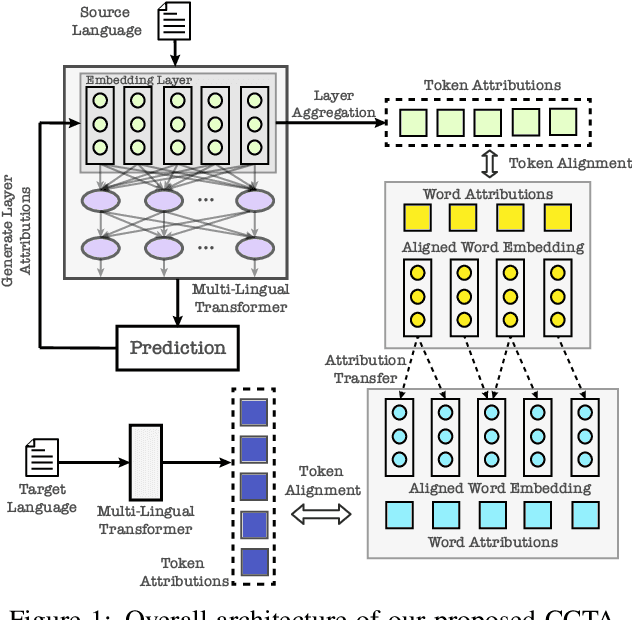

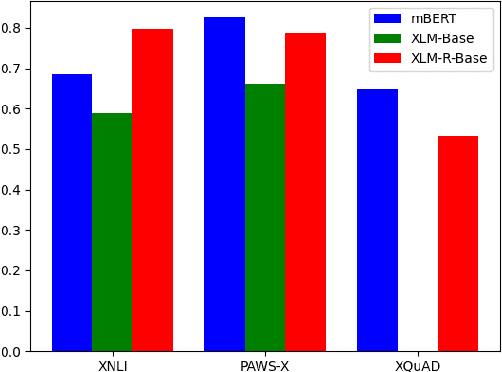

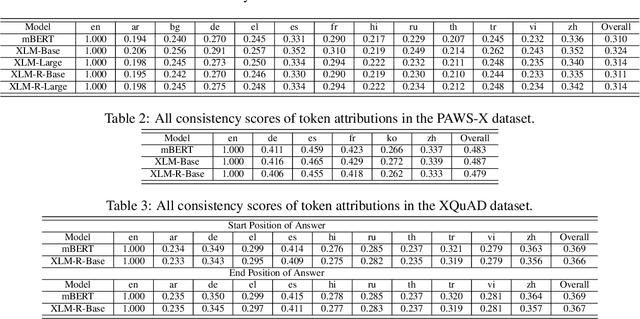

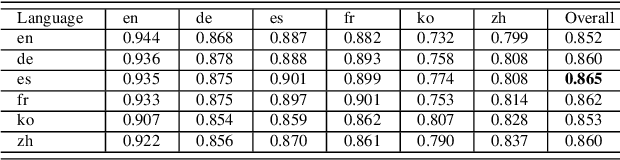

During the past several years, a surge of multi-lingual Pre-trained Language Models (PLMs) has been proposed to achieve state-of-the-art performance in many cross-lingual downstream tasks. However, why multi-lingual PLMs perform well is still an open question. For example, it is unclear whether multi-lingual PLMs reveal consistent token attributions in different languages. To address this, we propose a Cross-lingual Consistency of Token Attributions (CCTA) evaluation framework. Extensive experiments on three downstream tasks demonstrate that multi-lingual PLMs assign significantly different attributions to multi-lingual synonyms. Moreover, we make the following observations: 1) Spanish achieves the most consistent token attributions across languages when it is used for training PLMs; 2) the consistency of token attributions strongly correlates with performance in downstream tasks.

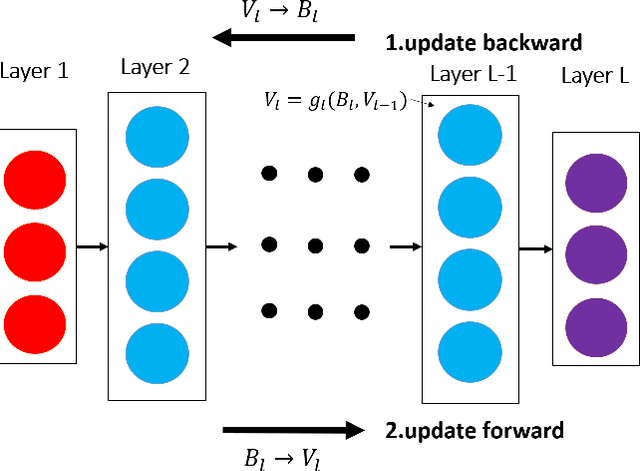

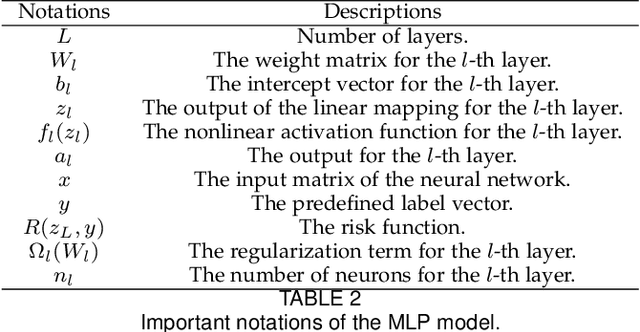

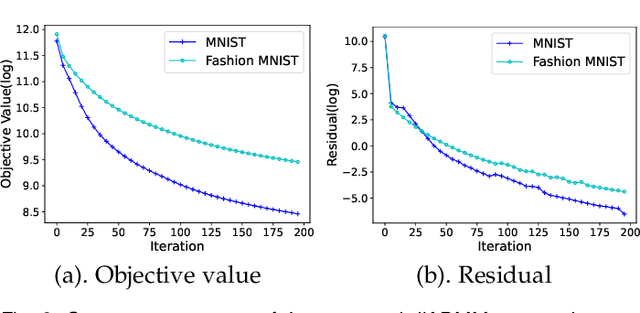

A Convergent ADMM Framework for Efficient Neural Network Training

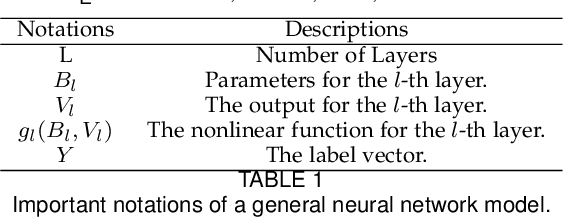

Dec 22, 2021 · Junxiang Wang, Hongyi Li, Liang Zhao

As a well-known optimization framework, the Alternating Direction Method of Multipliers (ADMM) has achieved tremendous success in many classification and regression applications. Recently, it has attracted the attention of deep learning researchers and is considered a potential substitute for Gradient Descent (GD). However, as an emerging domain, it still faces several unsolved challenges, including 1) the lack of global convergence guarantees, 2) slow convergence towards solutions, and 3) cubic time complexity with regard to feature dimensions. In this paper, we propose a novel optimization framework that solves a general neural network training problem via ADMM (dlADMM) to address these challenges simultaneously. Specifically, the parameters in each layer are updated backward and then forward so that parameter information in each layer is exchanged efficiently. When dlADMM is applied to specific architectures, the time complexity of subproblems is reduced from cubic to quadratic via a dedicated algorithm design utilizing quadratic approximations and backtracking techniques. Last but not least, we provide the first proof of sublinear convergence to a critical point for an ADMM-type method (dlADMM) under mild conditions. Experiments on seven benchmark datasets demonstrate the convergence, efficiency, and effectiveness of our proposed dlADMM algorithm.
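For readers unfamiliar with ADMM itself, the sketch below runs the textbook scaled-form ADMM on a tiny convex problem, minimising 0.5 * (x - a)^2 + lam * |z| subject to x = z, whose known solution is the soft-thresholded value of a. It is not dlADMM and does not include the backward-forward layer updates or quadratic approximations described above; the function names and the choices rho = 1 and 200 iterations are assumptions for illustration.

```python
def soft_threshold(value, threshold):
    """Proximal operator of threshold * |.|: shrink value towards zero."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def admm_scalar_lasso(a, lam, rho=1.0, iterations=200):
    """Scaled-form ADMM for min 0.5*(x - a)**2 + lam*|z| subject to x = z."""
    x = z = u = 0.0
    for _ in range(iterations):
        # x-update: closed-form minimiser of the quadratic subproblem.
        x = (a + rho * (z - u)) / (1.0 + rho)
        # z-update: proximal step on the L1 term.
        z = soft_threshold(x + u, lam / rho)
        # Scaled dual update accumulates the constraint residual x - z.
        u = u + x - z
    return z


solution = admm_scalar_lasso(a=3.0, lam=1.0)
print(solution)
```

The three alternating updates (primal block, second primal block, dual variable) are the pattern that ADMM-based training methods apply per layer, with the subproblems replaced by layer-specific ones.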

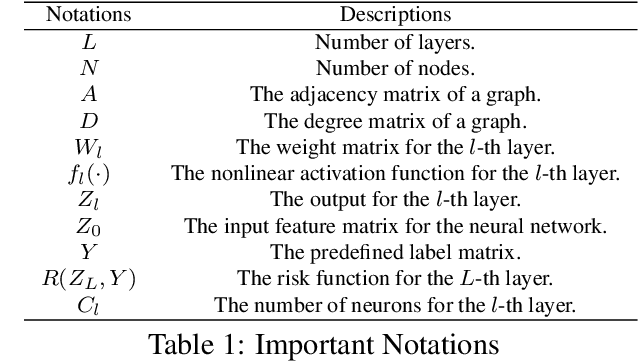

Community-based Layerwise Distributed Training of Graph Convolutional Networks

Dec 17, 2021 · Hongyi Li, Junxiang Wang, Yongchao Wang, Yue Cheng, Liang Zhao

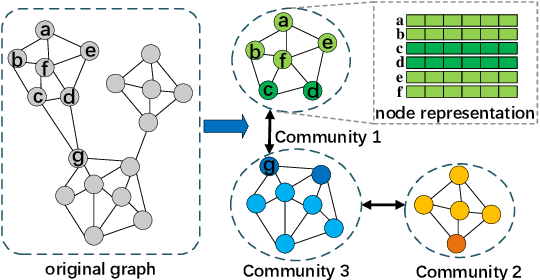

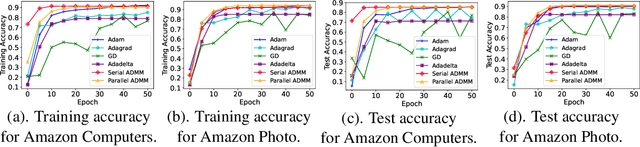

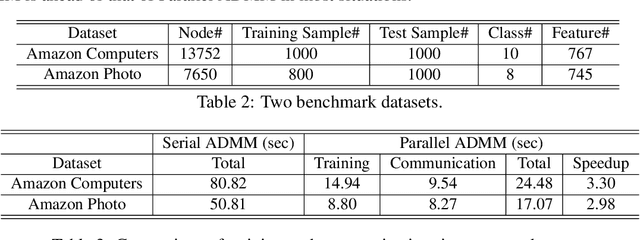

The Graph Convolutional Network (GCN) has been successfully applied to many graph-based applications. Training a large-scale GCN model, however, is still challenging: due to the node dependency and layer dependency of the GCN architecture, a huge amount of computational time and memory is required in the training process. In this paper, we propose a parallel and distributed GCN training algorithm based on the Alternating Direction Method of Multipliers (ADMM) to tackle the two challenges simultaneously. We first split GCN layers into independent blocks to achieve layer parallelism. Furthermore, we reduce node dependency by dividing the graph into several dense communities such that each of them can be trained with an agent in parallel. Finally, we provide solutions for all subproblems in the community-based ADMM algorithm. Preliminary results demonstrate that our proposed community-based ADMM training algorithm can lead to more than a threefold speedup while achieving the best performance compared with state-of-the-art methods.

Towards Quantized Model Parallelism for Graph-Augmented MLPs Based on Gradient-Free ADMM framework

May 20, 2021 · Junxiang Wang, Hongyi Li, Zheng Chai, Yongchao Wang, Yue Cheng, Liang Zhao

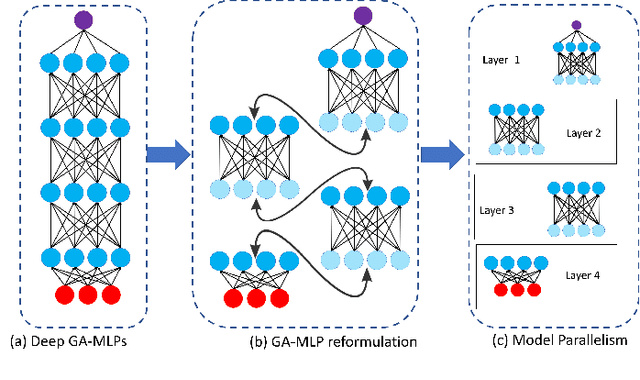

The Graph Augmented Multi-layer Perceptron (GA-MLP) model is an attractive alternative to Graph Neural Networks (GNNs) because it is resistant to the over-smoothing problem, and deeper GA-MLP models yield better performance. GA-MLP models are traditionally optimized by Stochastic Gradient Descent (SGD). However, SGD suffers from the layer dependency problem, which prevents the gradients of different layers of GA-MLP models from being calculated in parallel. In this paper, we propose a parallel deep learning Alternating Direction Method of Multipliers (pdADMM) framework to achieve model parallelism: parameters in each layer of GA-MLP models can be updated in parallel. The extended pdADMM-Q algorithm reduces communication cost by utilizing a quantization technique. We prove that both the pdADMM algorithm and the pdADMM-Q algorithm converge to a critical point with a sublinear convergence rate $o(1/k)$. Extensive experiments on six benchmark datasets demonstrate that pdADMM can lead to high speedup and outperforms all the existing state-of-the-art comparison methods.
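The abstract does not specify how pdADMM-Q quantizes the values exchanged between layers. As a rough illustration of why quantization reduces communication cost, the sketch below assumes the simplest scheme, mapping each value to the nearest element of a small discrete set. The function names and the level set (integers from -1 to 20) are hypothetical.

```python
import math


def quantize(values, levels):
    """Map each value to the nearest element of a finite level set."""
    return [min(levels, key=lambda level: abs(level - v)) for v in values]


def bits_per_value(levels):
    """Bits needed to send an index into the level set instead of a float."""
    return math.ceil(math.log2(len(levels)))


# Hypothetical level set; not taken from the paper.
LEVELS = list(range(-1, 21))

activations = [0.2, 1.7, -3.0, 25.4]
quantized = quantize(activations, LEVELS)
print(quantized, bits_per_value(LEVELS))
```

Under these assumptions each transmitted value needs an index of a few bits rather than a 32-bit float, at the cost of rounding error that the optimization method has to tolerate.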

Sign-regularized Multi-task Learning

Feb 22, 2021 · Johnny Torres, Guangji Bai, Junxiang Wang, Liang Zhao, Carmen Vaca, Cristina Abad

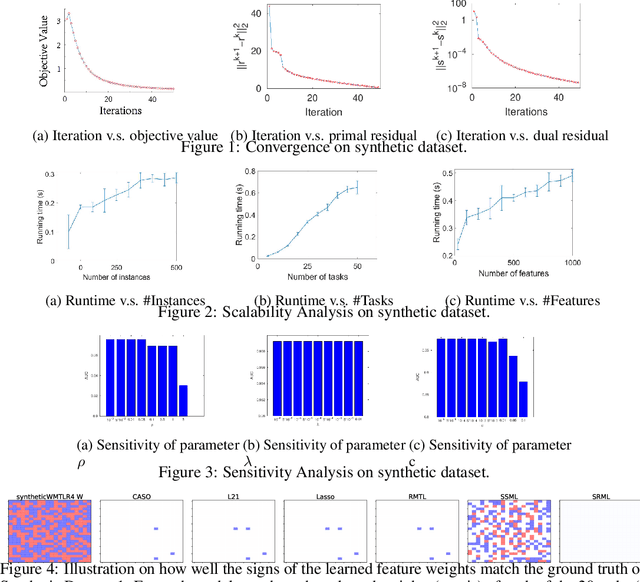

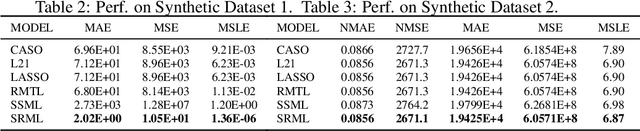

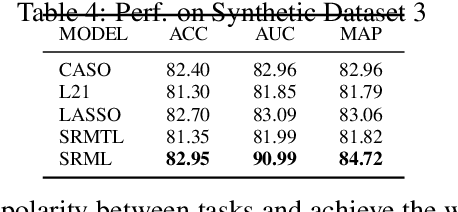

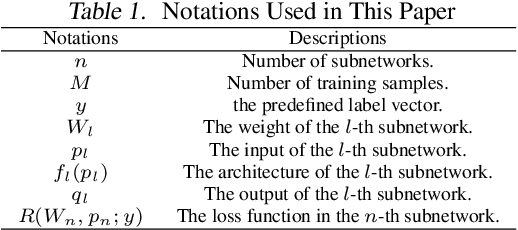

Multi-task learning is a framework that makes different learning tasks share their knowledge to improve their generalization performance. It is an active research area that strives to handle several core issues, in particular which tasks are correlated and similar, and how to share knowledge among correlated tasks. Existing works usually do not distinguish the polarity and magnitude of feature weights and commonly rely on linear correlation, due to three major technical challenges: 1) optimizing models that regularize feature weight polarity, 2) deciding whether to regularize sign or magnitude, and 3) identifying which tasks should share their sign and/or magnitude patterns. To address them, this paper proposes a new multi-task learning framework that can regularize feature weight signs across tasks. We formulate it as a biconvex inequality-constrained optimization with slacks and propose a new efficient algorithm for the optimization with theoretical guarantees on generalization performance and convergence. Extensive experiments on multiple datasets demonstrate the effectiveness and efficiency of the proposed methods and the reasonableness of the regularized feature weight patterns.

Tunable Subnetwork Splitting for Model-parallelism of Neural Network Training

Sep 16, 2020 · Junxiang Wang, Zheng Chai, Yue Cheng, Liang Zhao

Alternating minimization methods have recently been proposed as alternatives to gradient descent for deep neural network optimization. They can typically decompose a deep neural network into layerwise subproblems, which can then be optimized in parallel. Despite this significant parallelism, alternating minimization methods are rarely explored for training deep neural networks because of severe accuracy degradation. In this paper, we analyze the reason for this degradation and propose to achieve a compelling trade-off between parallelism and accuracy through a reformulation called the Tunable Subnetwork Splitting Method (TSSM), which can tune the decomposition granularity of deep neural networks. Two methods, gradient splitting Alternating Direction Method of Multipliers (gsADMM) and gradient splitting Alternating Minimization (gsAM), are proposed to solve the TSSM formulation. Experiments on five benchmark datasets show that our proposed TSSM can achieve significant speedup without observable loss of training accuracy. The code has been released at https://github.com/xianggebenben/TSSM.
