Jiaying Li

ATLAS: A High-Difficulty, Multidisciplinary Benchmark for Frontier Scientific Reasoning

Nov 18, 2025

Abstract:The rapid advancement of Large Language Models (LLMs) has led to performance saturation on many established benchmarks, calling into question these benchmarks' ability to distinguish among frontier models. Concurrently, existing high-difficulty benchmarks often suffer from a narrow disciplinary focus, oversimplified answer formats, and vulnerability to data contamination, creating a fidelity gap with real-world scientific inquiry. To address these challenges, we introduce ATLAS (AGI-Oriented Testbed for Logical Application in Science), a large-scale, high-difficulty, and cross-disciplinary evaluation suite composed of approximately 800 original problems. Developed by domain experts (PhD-level and above), ATLAS spans seven core scientific fields: mathematics, physics, chemistry, biology, computer science, earth science, and materials science. Its key features include: (1) High Originality and Contamination Resistance, with all questions newly created or substantially adapted to prevent test data leakage; (2) Cross-Disciplinary Focus, designed to assess models' ability to integrate knowledge and reason across scientific domains; (3) High-Fidelity Answers, prioritizing complex, open-ended answers involving multi-step reasoning and LaTeX-formatted expressions over simple multiple-choice questions; and (4) Rigorous Quality Control, employing a multi-stage process of expert peer review and adversarial testing to ensure question difficulty, scientific value, and correctness. We also propose a robust evaluation paradigm that uses a panel of LLM judges for automated, nuanced assessment of complex answers. Preliminary results on leading models demonstrate ATLAS's effectiveness in differentiating their advanced scientific reasoning capabilities. We plan to develop ATLAS into a long-term, open, community-driven platform that provides a reliable "ruler" for measuring progress toward Artificial General Intelligence.
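The abstract does not say how the verdicts of the LLM judge panel are combined. As a minimal sketch, assuming each judge returns a binary correct/incorrect verdict per answer, a strict majority vote (ties counted as incorrect) could aggregate them into a benchmark score. The function names and the verdict data below are hypothetical and are not part of ATLAS.

```python
from collections import Counter
from typing import Dict, List


def panel_verdict(verdicts: List[bool]) -> bool:
    """Strict majority vote: an answer counts as correct only if more than
    half of the judges accept it (ties and empty panels count as incorrect)."""
    votes = Counter(verdicts)
    return votes[True] > len(verdicts) / 2


def panel_accuracy(judgements: Dict[str, List[bool]]) -> float:
    """Fraction of problems whose aggregated panel verdict is 'correct'."""
    if not judgements:
        return 0.0
    correct = sum(panel_verdict(v) for v in judgements.values())
    return correct / len(judgements)


# Hypothetical verdicts from a three-judge panel on three problems
judgements = {
    "prob-001": [True, True, False],
    "prob-002": [True, False, False],
    "prob-003": [True, True, True],
}
print(panel_accuracy(judgements))
```

Treating ties as incorrect is a conservative design choice; a real panel might instead use weighted votes or graded (partial-credit) scores.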

Unsupervised Learning for AoD Estimation in MISO Downlink LoS Transmissions

Mar 15, 2025

Abstract:With the emergence of simultaneous localization and communication (SLAC), it has become increasingly attractive to perform angle of departure (AoD) estimation at the receiving Internet of Things (IoT) user end for improved positioning accuracy, flexibility, and user privacy. Conventional AoD estimation methods face challenges such as the large number of real-time measurements required for latency-critical applications and the enormous data collection needed to train deep learning models. To address these challenges, we propose in this letter an unsupervised learning framework that unifies training for both deterministic maximum likelihood (DML) and stochastic maximum likelihood (SML) based AoD estimation in multiple-input single-output (MISO) downlink (DL) wireless transmissions. Specifically, under the line-of-sight (LoS) assumption, we incorporate both the received signals and the pilot-sequence information, according to its availability at the DL user, into the input of the deep learning model, and adopt a common neural network architecture compatible with the input data in both the DML and SML cases. Extensive numerical results validate that the proposed unsupervised-learning-based AoD estimation not only improves estimation accuracy but also significantly reduces the required number of observations, thereby reducing both estimation overhead and latency compared to various benchmarks.
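For context on the DML case, the sketch below shows the classical grid-search DML AoD estimator for a single LoS path with known pilots. It is the kind of conventional estimator the letter compares against, not the proposed unsupervised learning framework. It assumes a half-wavelength uniform linear array with steering vector a(θ)_n = exp(jπ n sin θ), received samples y_t = α a(θ)^H x_t + w_t with an unknown deterministic gain α, and random QPSK pilots; all names and parameter values are hypothetical. Concentrating out the least-squares estimate of α leaves the objective max over θ of |s(θ)^H y|^2 / ||s(θ)||^2, where s_t(θ) = a(θ)^H x_t.

```python
import cmath
import math
import random
from typing import List, Sequence

Pilots = Sequence[Sequence[complex]]  # T pilot vectors, each of length N


def steering(theta: float, n_ant: int) -> List[complex]:
    """Steering vector of an assumed half-wavelength uniform linear array."""
    return [cmath.exp(1j * math.pi * n * math.sin(theta)) for n in range(n_ant)]


def effective_pilots(theta: float, pilots: Pilots) -> List[complex]:
    """s_t(theta) = a(theta)^H x_t for every transmitted pilot vector x_t."""
    a = steering(theta, len(pilots[0]))
    return [sum(ai.conjugate() * xi for ai, xi in zip(a, x)) for x in pilots]


def simulate(theta: float, alpha: complex, pilots: Pilots,
             noise_std: float, rng: random.Random) -> List[complex]:
    """LoS samples y_t = alpha * a(theta)^H x_t + w_t with complex Gaussian noise."""
    s = effective_pilots(theta, pilots)
    return [alpha * st + complex(rng.gauss(0.0, noise_std), rng.gauss(0.0, noise_std))
            for st in s]


def dml_aod(ys: Sequence[complex], pilots: Pilots, grid_size: int = 721) -> float:
    """Grid-search DML over [-90, 90] degrees; alpha is replaced by its LS estimate,
    so minimizing the residual equals maximizing |s^H y|^2 / ||s||^2."""
    best_theta, best_score = 0.0, -math.inf
    for k in range(grid_size):
        theta = -math.pi / 2 + math.pi * k / (grid_size - 1)
        s = effective_pilots(theta, pilots)
        energy = sum(abs(st) ** 2 for st in s)
        if energy == 0.0:
            continue
        corr = sum(st.conjugate() * yt for st, yt in zip(s, ys))
        score = abs(corr) ** 2 / energy
        if score > best_score:
            best_theta, best_score = theta, score
    return best_theta


# Hypothetical setup: 8 transmit antennas, 16 pilot slots with random QPSK symbols
rng = random.Random(0)
n_ant, n_slots = 8, 16
pilots = [[cmath.exp(1j * math.pi / 2 * rng.randrange(4)) / math.sqrt(n_ant)
           for _ in range(n_ant)] for _ in range(n_slots)]
true_theta = math.radians(20.0)
alpha = 0.8 * cmath.exp(0.3j)
ys = simulate(true_theta, alpha, pilots, noise_std=0.05, rng=rng)
est = dml_aod(ys, pilots)
print(f"true {math.degrees(true_theta):.2f} deg, DML estimate {math.degrees(est):.2f} deg")
```

The grid search needs all T observations at each candidate angle, which illustrates why the observation count and latency matter for conventional estimators.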

SOCRATES: Towards a Unified Platform for Neural Network Verification

Jul 22, 2020

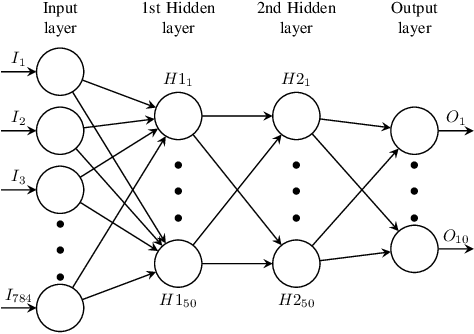

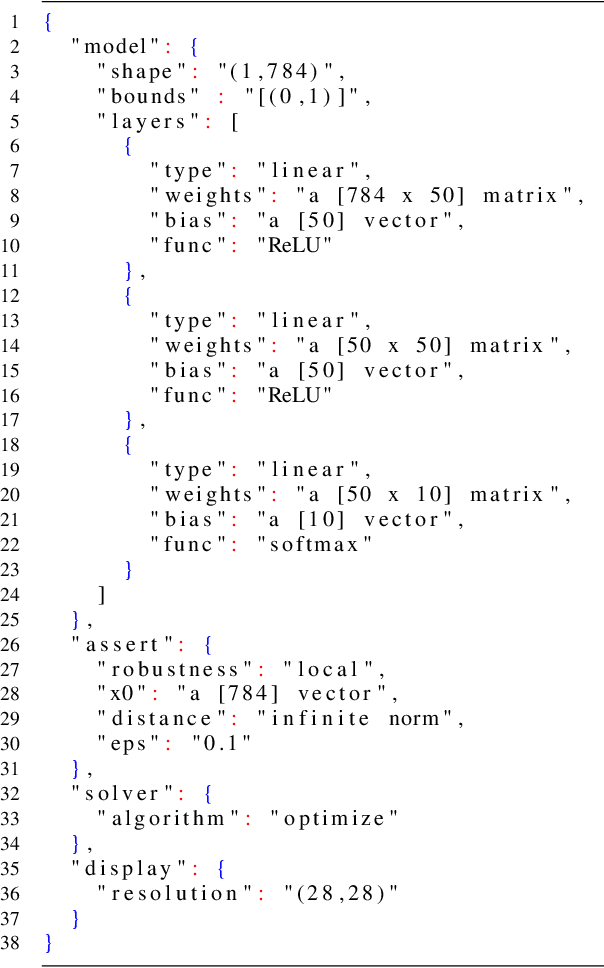

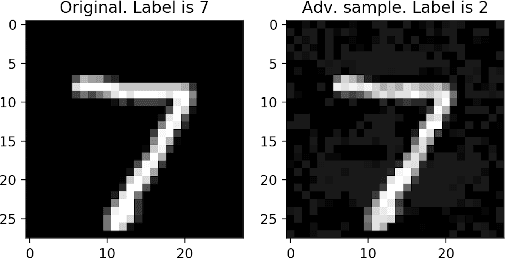

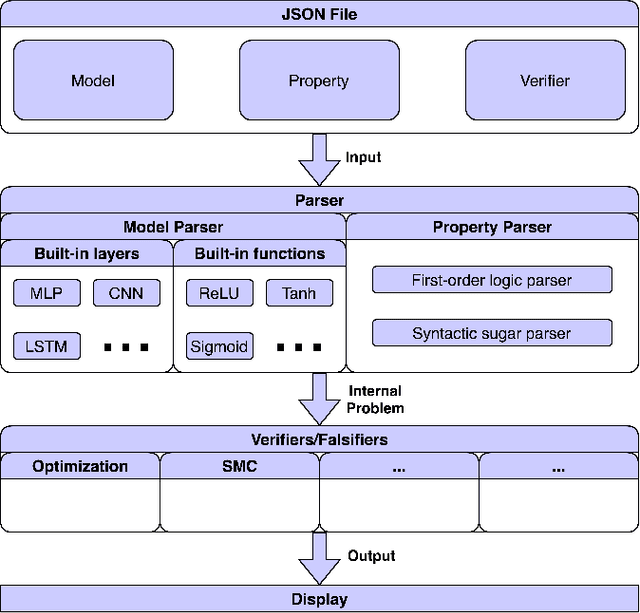

Abstract:Studies show that neural networks, not unlike traditional programs, are subject to bugs, e.g., adversarial samples that cause classification errors and discriminatory instances that demonstrate a lack of fairness. Given that neural networks are increasingly applied in critical applications (e.g., self-driving cars, face recognition systems, and personal credit rating systems), it is desirable to develop systematic methods to verify or falsify neural networks against desired properties. Recently, a number of approaches have been developed to verify neural networks. These efforts are, however, scattered (i.e., each approach tackles a restricted class of neural networks against particular properties), incomparable (i.e., each approach has its own assumptions and input format), and thus hard to apply, reuse, or extend. In this project, we aim to build a unified framework for developing verification techniques for neural networks. Towards this goal, we develop a platform called SOCRATES, which supports a standardized format for a variety of neural network models, an assertion language for property specification, and two novel algorithms for verifying or falsifying neural network models. SOCRATES is extensible, so existing approaches can be easily integrated. Experimental results show that our platform offers better or comparable performance relative to state-of-the-art approaches. More importantly, it provides a platform for synergistic research on neural network verification.
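The abstract does not describe SOCRATES's two algorithms or its assertion language, so the sketch below is not SOCRATES's API. It illustrates, under stated assumptions, what verifying versus falsifying a local robustness property means, using two generic techniques: interval bound propagation (sound but incomplete verification) and random-sampling falsification. The network weights, input, radii, and function names are hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple

Vector = List[float]
Layer = Tuple[List[List[float]], List[float]]  # (weight matrix W, bias b)


def affine(layer: Layer, x: Sequence[float]) -> Vector:
    W, b = layer
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]


def forward(layers: Sequence[Layer], x: Sequence[float]) -> Vector:
    """Concrete forward pass; ReLU after every layer except the output layer."""
    out = list(x)
    for i, layer in enumerate(layers):
        out = affine(layer, out)
        if i < len(layers) - 1:
            out = [max(0.0, v) for v in out]
    return out


def interval_affine(layer: Layer, lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    """Exact per-neuron bounds of W x + b over the box lo <= x <= hi."""
    W, b = layer
    new_lo, new_hi = [], []
    for row, bi in zip(W, b):
        new_lo.append(bi + sum(w * (l if w >= 0 else h) for w, l, h in zip(row, lo, hi)))
        new_hi.append(bi + sum(w * (h if w >= 0 else l) for w, l, h in zip(row, lo, hi)))
    return new_lo, new_hi


def output_bounds(layers: Sequence[Layer], lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    for i, layer in enumerate(layers):
        lo, hi = interval_affine(layer, lo, hi)
        if i < len(layers) - 1:  # ReLU is monotone, so apply it to both bounds
            lo = [max(0.0, v) for v in lo]
            hi = [max(0.0, v) for v in hi]
    return lo, hi


def verify_robust(layers: Sequence[Layer], x0: Vector, eps: float, label: int) -> bool:
    """True means every input in the L-infinity ball of radius eps keeps `label`;
    False only means 'unknown', because the interval bounds may be loose."""
    lo = [v - eps for v in x0]
    hi = [v + eps for v in x0]
    out_lo, out_hi = output_bounds(layers, lo, hi)
    return all(out_lo[label] > out_hi[j] for j in range(len(out_lo)) if j != label)


def falsify_robust(layers: Sequence[Layer], x0: Vector, eps: float, label: int,
                   trials: int = 1000, seed: int = 0) -> Optional[Vector]:
    """Random sampling inside the ball; returns a counterexample, or None if none found."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [v + rng.uniform(-eps, eps) for v in x0]
        out = forward(layers, x)
        if max(range(len(out)), key=out.__getitem__) != label:
            return x
    return None


# Hypothetical 2-2-2 ReLU network and input classified as label 0
layers: List[Layer] = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.2]),
]
x0 = [1.0, 0.0]
print(forward(layers, x0))
print(verify_robust(layers, x0, 0.05, label=0))
print(falsify_robust(layers, x0, 0.5, label=0))
```

With the small radius the interval bounds prove robustness, while with the large radius verification is inconclusive and sampling finds a concrete counterexample, which is why combining both directions is useful.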
