Jan-Willem van de Meent

Structured Disentangled Representations

May 29, 2018

Abstract: Deep latent-variable models learn representations of high-dimensional data in an unsupervised manner. A number of recent efforts have focused on learning representations that disentangle statistically independent axes of variation by introducing modifications to the standard objective function. These approaches generally assume a simple diagonal Gaussian prior and as a result are not able to reliably disentangle discrete factors of variation. We propose a two-level hierarchical objective to control the relative degree of statistical independence between blocks of variables and individual variables within blocks. We derive this objective as a generalization of the evidence lower bound, which allows us to explicitly represent the trade-offs between mutual information between data and representation, KL divergence between representation and prior, and coverage of the support of the empirical data distribution. Experiments on a variety of datasets demonstrate that our objective can not only disentangle discrete variables, but that doing so also improves disentanglement of other variables and, importantly, generalization even to unseen combinations of factors.

Learning Disentangled Representations with Semi-Supervised Deep Generative Models

Nov 13, 2017

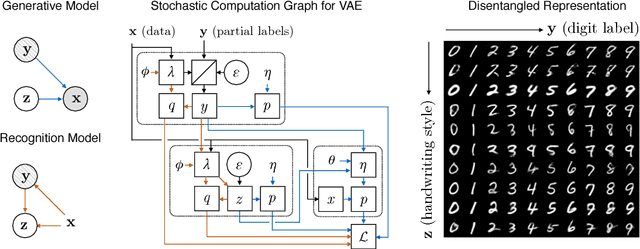

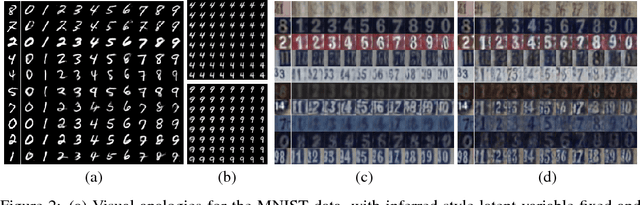

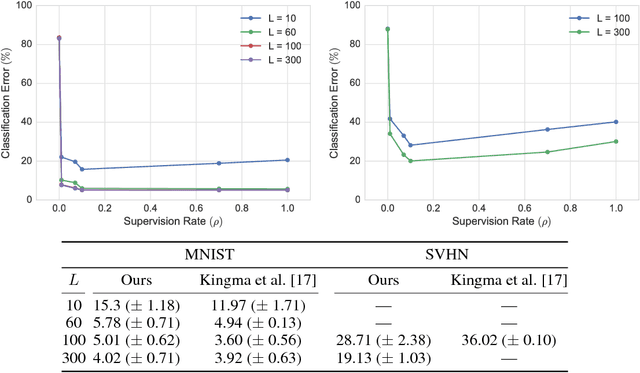

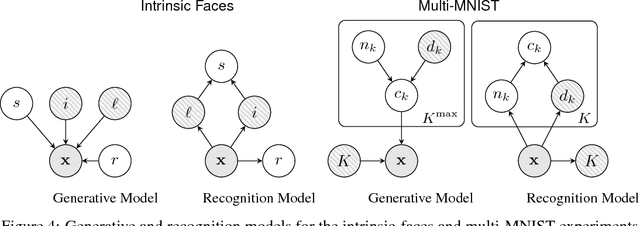

Abstract: Variational autoencoders (VAEs) learn representations of data by jointly training a probabilistic encoder and decoder network. Typically these models encode all features of the data into a single variable. Here we are interested in learning disentangled representations that encode distinct aspects of the data into separate variables. We propose to learn such representations using model architectures that generalise from standard VAEs, employing a general graphical model structure in the encoder and decoder. This allows us to train partially-specified models that make relatively strong assumptions about a subset of interpretable variables and rely on the flexibility of neural networks to learn representations for the remaining variables. We further define a general objective for semi-supervised learning in this model class, which can be approximated using an importance sampling procedure. We evaluate our framework's ability to learn disentangled representations, both by qualitative exploration of its generative capacity, and quantitative evaluation of its discriminative ability on a variety of models and datasets.

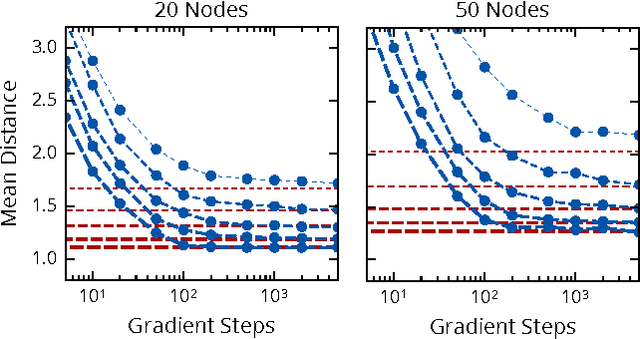

Bayesian Optimization for Probabilistic Programs

Jul 13, 2017

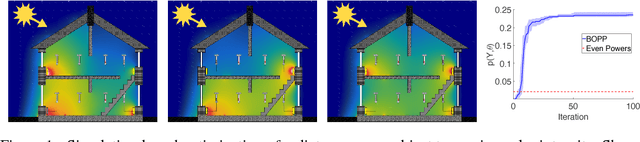

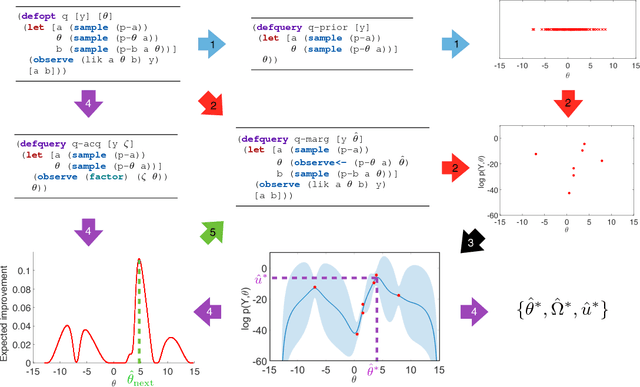

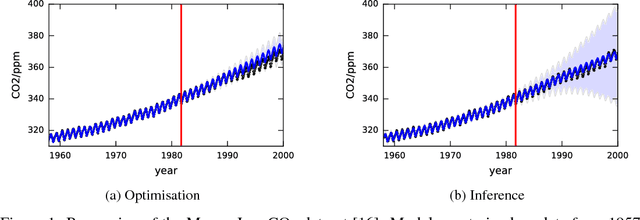

Abstract: We present the first general-purpose framework for marginal maximum a posteriori estimation of probabilistic program variables. By using a series of code transformations, the evidence of any probabilistic program, and therefore of any graphical model, can be optimized with respect to an arbitrary subset of its sampled variables. To carry out this optimization, we develop the first Bayesian optimization package to directly exploit the source code of its target, leading to innovations in problem-independent hyperpriors, unbounded optimization, and implicit constraint satisfaction, and delivering significant performance improvements over prominent existing packages. We present applications of our method to a number of tasks including engineering design and parameter optimization.

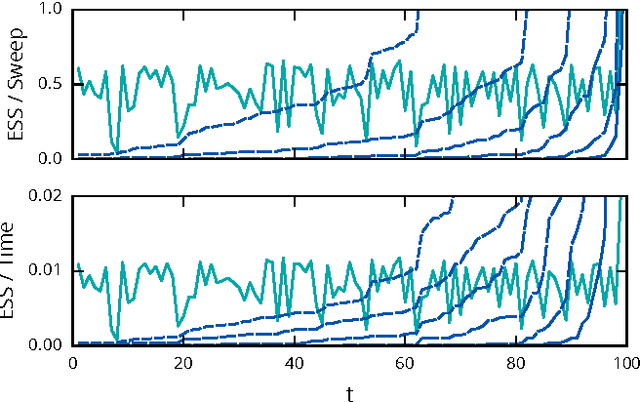

Interacting Particle Markov Chain Monte Carlo

Apr 12, 2017

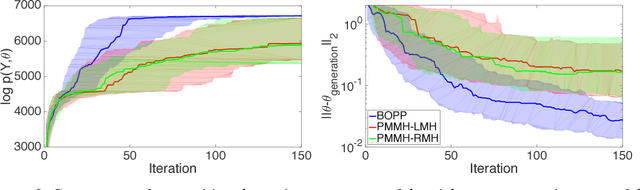

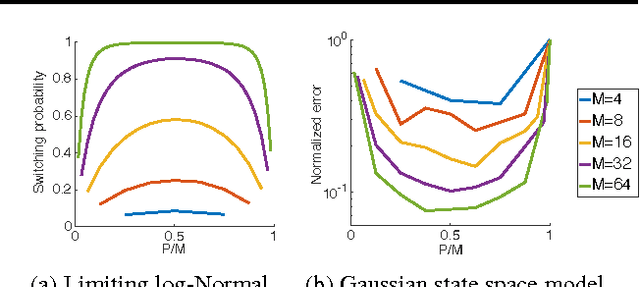

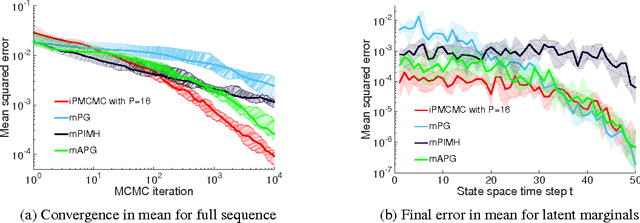

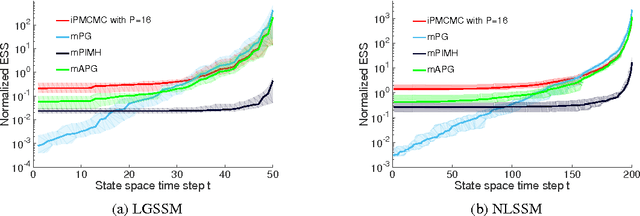

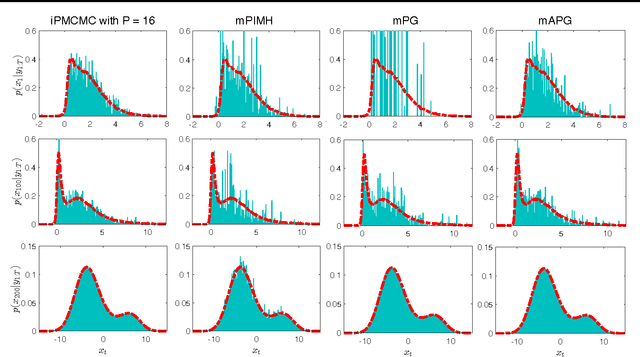

Abstract: We introduce interacting particle Markov chain Monte Carlo (iPMCMC), a PMCMC method based on an interacting pool of standard and conditional sequential Monte Carlo samplers. Like related methods, iPMCMC is a Markov chain Monte Carlo sampler on an extended space. We present empirical results that show significant improvements in mixing rates relative to both non-interacting PMCMC samplers, and a single PMCMC sampler with an equivalent memory and computational budget. An additional advantage of the iPMCMC method is that it is suitable for distributed and multi-core architectures.

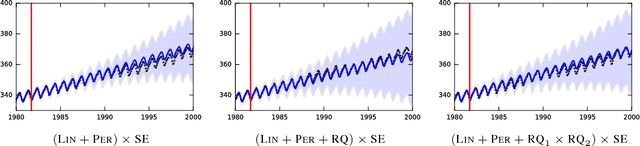

Probabilistic structure discovery in time series data

Nov 21, 2016

Abstract: Existing methods for structure discovery in time series data construct interpretable, compositional kernels for Gaussian process regression models. While the learned Gaussian process model provides posterior mean and variance estimates, typically the structure is learned via a greedy optimization procedure. This restricts the space of possible solutions and leads to over-confident uncertainty estimates. We introduce a fully Bayesian approach, inferring a full posterior over structures, which more reliably captures the uncertainty of the model.

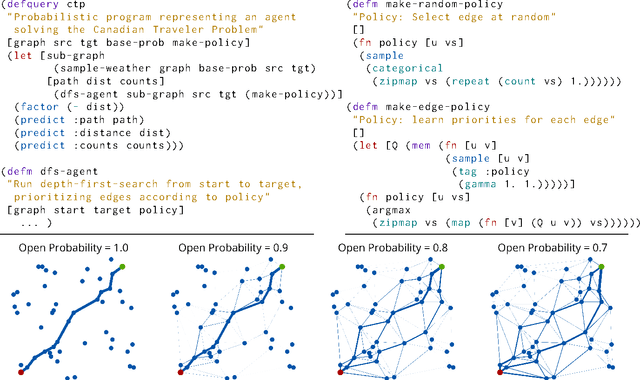

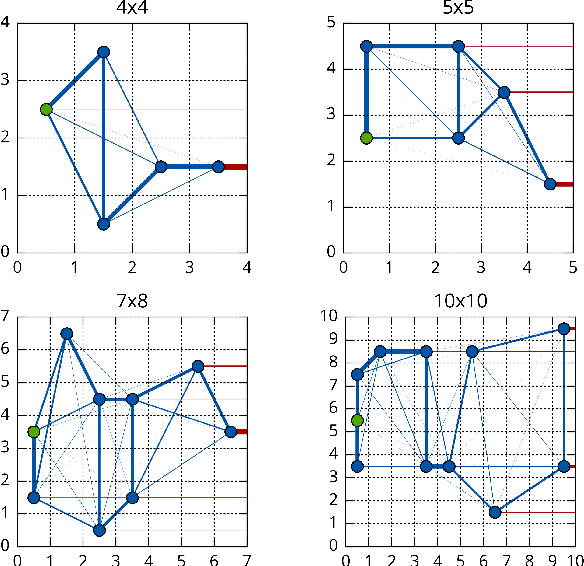

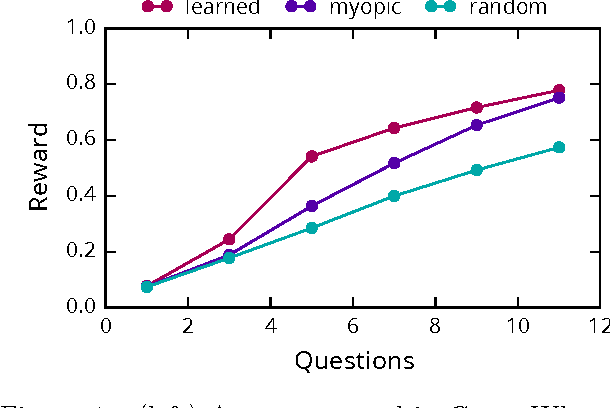

Black-Box Policy Search with Probabilistic Programs

Aug 04, 2016

Abstract: In this work, we explore how probabilistic programs can be used to represent policies in sequential decision problems. In this formulation, a probabilistic program is a black-box stochastic simulator for both the problem domain and the agent. We relate classic policy gradient techniques to recently introduced black-box variational methods which generalize to probabilistic program inference. We present case studies in the Canadian traveler problem, Rock Sample, and a benchmark for optimal diagnosis inspired by Guess Who. Each study illustrates how programs can efficiently represent policies using moderate numbers of parameters.

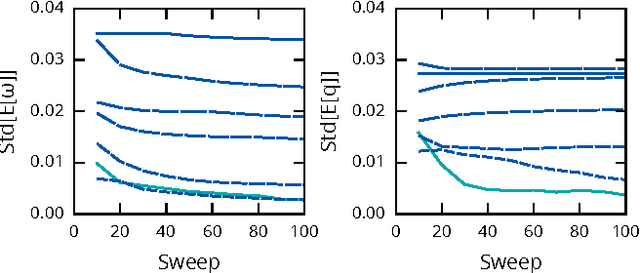

Particle Gibbs with Ancestor Sampling for Probabilistic Programs

Feb 09, 2015

Abstract: Particle Markov chain Monte Carlo techniques rank among current state-of-the-art methods for probabilistic program inference. A drawback of these techniques is that they rely on importance resampling, which results in degenerate particle trajectories and a low effective sample size for variables sampled early in a program. Here we develop a formalism to adapt ancestor resampling, a technique that mitigates particle degeneracy, to the probabilistic programming setting. We present empirical results that demonstrate nontrivial performance gains.

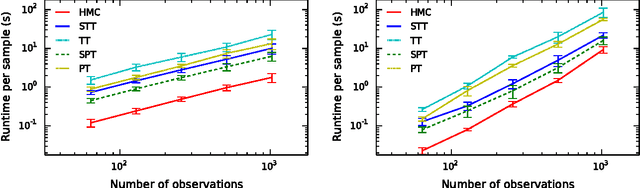

Tempering by Subsampling

Jan 28, 2014

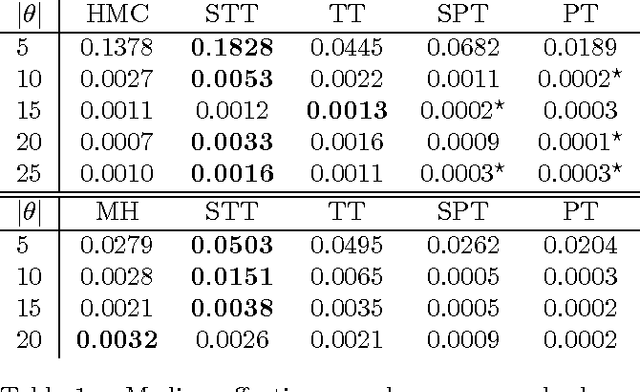

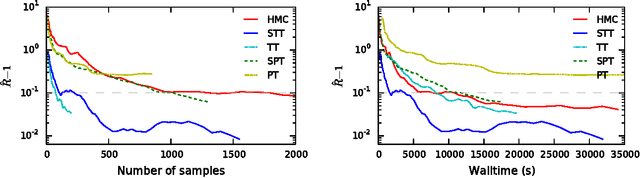

Abstract: In this paper we demonstrate that tempering Markov chain Monte Carlo samplers for Bayesian models by recursively subsampling observations without replacement can improve the performance of baseline samplers in terms of effective sample size per computation. We present two tempering by subsampling algorithms, subsampled parallel tempering and subsampled tempered transitions. We provide an asymptotic analysis of the computational cost of tempering by subsampling, verify that tempering by subsampling costs less than traditional tempering, and demonstrate both algorithms on Bayesian approaches to learning the mean of a high-dimensional multivariate Normal and estimating Gaussian process hyperparameters.

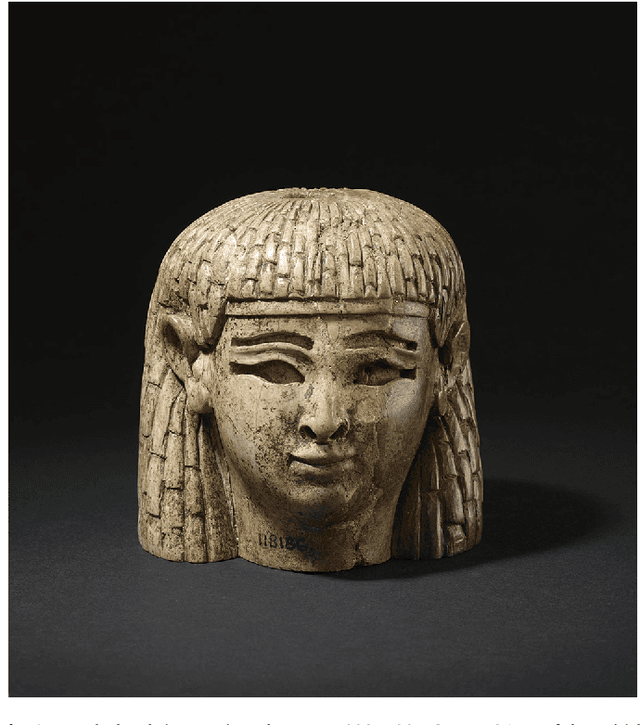

Stylistic Clusters and the Syrian/South Syrian Tradition of First-Millennium BCE Levantine Ivory Carving: A Machine Learning Approach

Jan 05, 2014

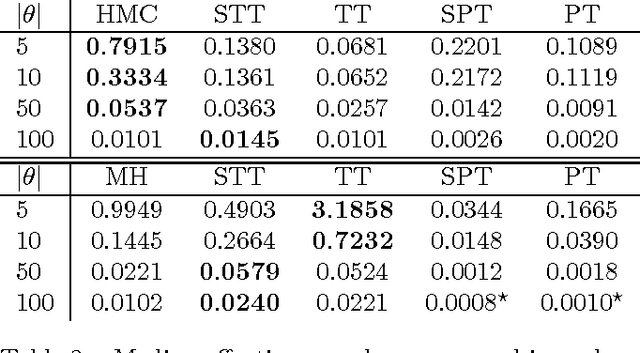

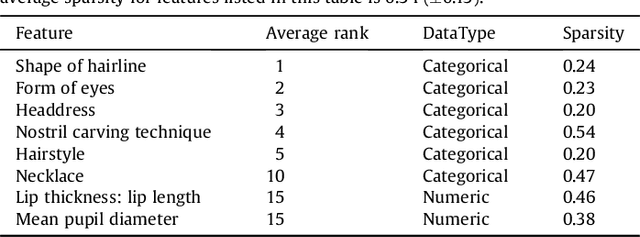

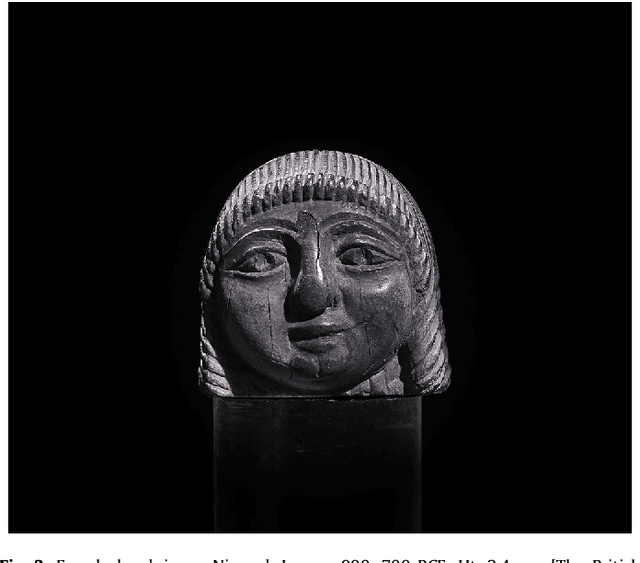

Abstract: Thousands of first-millennium BCE ivory carvings have been excavated from Neo-Assyrian sites in Mesopotamia (primarily Nimrud, Khorsabad, and Arslan Tash) hundreds of miles from their Levantine production contexts. At present, their specific manufacture dates and workshop localities are unknown. Relying on subjective, visual methods, scholars have grappled with their classification and regional attribution for over a century. This study combines visual approaches with machine-learning techniques to offer data-driven perspectives on the classification and attribution of this early Iron Age corpus. The study sample consisted of 162 sculptures of female figures. We have developed an algorithm that clusters the ivories based on a combination of descriptive and anthropometric data. The resulting categories, which are based on purely statistical criteria, show good agreement with conventional art historical classifications, while revealing new perspectives, especially with regard to the contested Syrian/South Syrian/Intermediate tradition. Specifically, we have identified that objects of the Syrian/South Syrian/Intermediate tradition may be more closely related to Phoenician objects than to North Syrian objects; we offer a reconsideration of a subset of Phoenician objects, and we confirm Syrian/South Syrian/Intermediate stylistic subgroups that might distinguish networks of acquisition among the sites of Nimrud, Khorsabad, Arslan Tash and the Levant. We have also identified which features are most significant in our cluster assignments and might thereby be most diagnostic of regional carving traditions. In short, our study both corroborates traditional visual classification methods and demonstrates how machine-learning techniques may be employed to reveal complementary information not accessible through the exclusively visual analysis of an archaeological corpus.

Hierarchically-coupled hidden Markov models for learning kinetic rates from single-molecule data

May 15, 2013

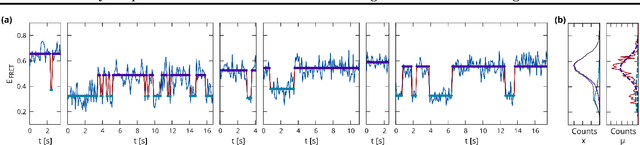

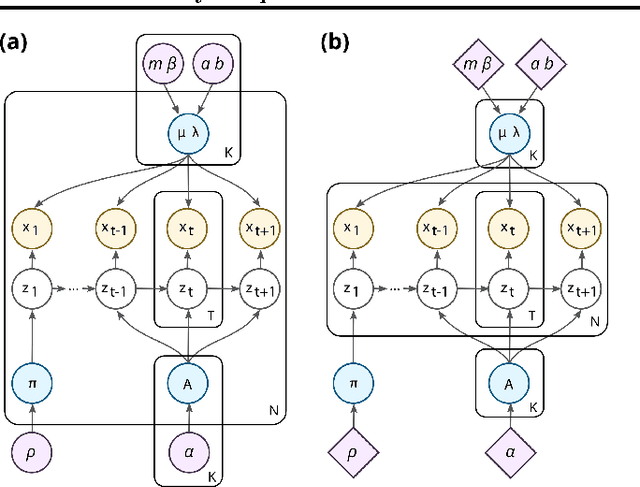

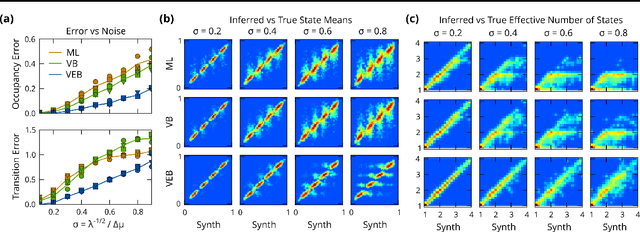

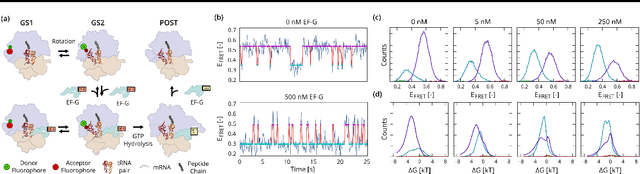

Abstract: We address the problem of analyzing sets of noisy time-varying signals that all report on the same process but confound straightforward analyses due to complex inter-signal heterogeneities and measurement artifacts. In particular we consider single-molecule experiments which indirectly measure the distinct steps in a biomolecular process via observations of noisy time-dependent signals such as a fluorescence intensity or bead position. Straightforward hidden Markov model (HMM) analyses attempt to characterize such processes in terms of a set of conformational states, the transitions that can occur between these states, and the associated rates at which those transitions occur, but require ad-hoc post-processing steps to combine multiple signals. Here we develop a hierarchically coupled HMM that allows experimentalists to deal with inter-signal variability in a principled and automatic way. Our approach is a generalized expectation maximization hyperparameter point estimation procedure with variational Bayes at the level of individual time series that learns a single interpretable representation of the overall data generating process.

* 9 pages, 5 figures
