Jan Peters

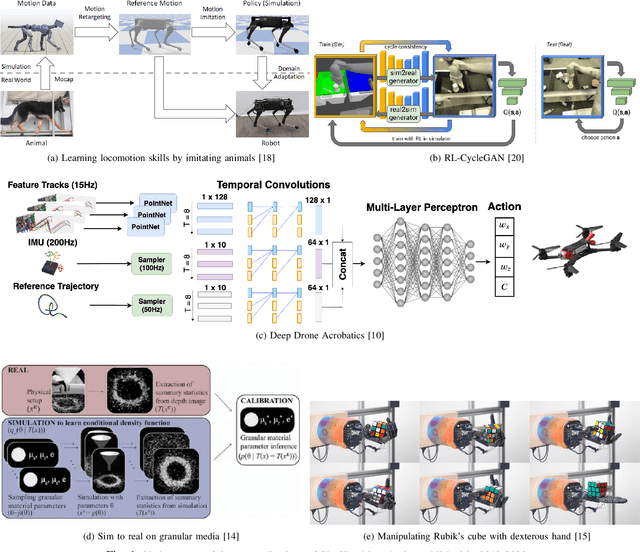

Perspectives on Sim2Real Transfer for Robotics: A Summary of the R:SS 2020 Workshop

Dec 07, 2020

Abstract: This report presents the debates, posters, and discussions of the Sim2Real workshop held in conjunction with the 2020 edition of the "Robotics: Science and Systems" conference. Twelve leaders of the field took competing debate positions on the definition, viability, and importance of transferring skills from simulation to the real world in the context of robotics problems. The debaters also joined a large panel discussion, answering audience questions and outlining the future of Sim2Real in robotics. Furthermore, we invited extended abstracts to this workshop, which are summarized in this report. Based on the workshop, this report concludes with directions for practitioners exploiting this technology and for researchers further exploring open problems in this area.

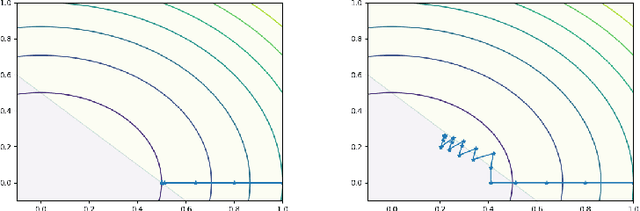

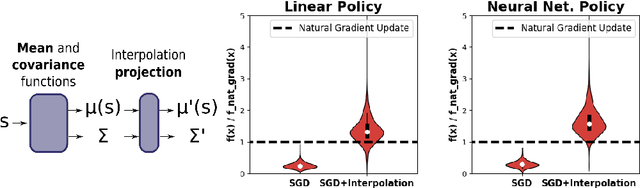

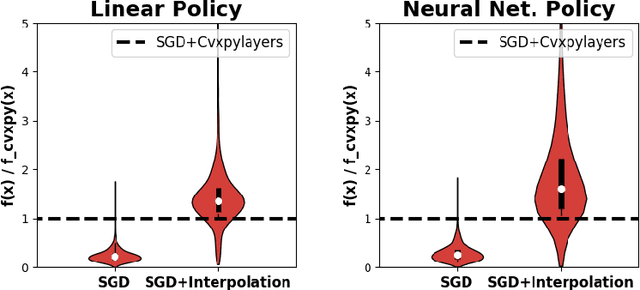

Convex Optimization with an Interpolation-based Projection and its Application to Deep Learning

Nov 13, 2020

Abstract: Convex optimizers have found many applications as differentiable layers within deep neural architectures. One application of these convex layers is to project points into a convex set. However, both forward and backward passes of these convex layers are significantly more expensive to compute than those of a typical neural network. We investigate in this paper whether an inexact, but cheaper, projection can drive a descent algorithm to an optimum. Specifically, we propose an interpolation-based projection that is cheap and easy to compute given a convex domain-defining function. We then propose an optimization algorithm that follows the gradient of the composition of the objective and the projection, and prove its convergence for linear objectives and arbitrary convex and Lipschitz domain-defining inequality constraints. In addition to the theoretical contributions, we demonstrate empirically the practical interest of the interpolation projection when used in conjunction with neural networks in a reinforcement learning and a supervised learning setting.
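
As a rough illustration of what an interpolation-based projection could look like, the sketch below maps an infeasible point onto the boundary of the set {z : g(z) <= 0} by moving along the segment toward a known strictly interior point. The function name `interpolation_projection`, the bisection search, and the requirement of a user-supplied interior point are assumptions made for this example; the abstract does not specify these details, and the paper's construction may differ.

```python
def interpolation_projection(g, x, x_interior, tol=1e-9, max_iter=200):
    """Map x into {z : g(z) <= 0} by interpolating toward x_interior.

    g          : convex domain-defining function (feasible iff g(z) <= 0)
    x          : point to map, as a list of floats
    x_interior : hypothetical strictly feasible point, g(x_interior) < 0
    """
    if g(x) <= 0.0:
        return list(x)  # already feasible, nothing to do
    if g(x_interior) >= 0.0:
        raise ValueError("x_interior must satisfy g(x_interior) < 0")

    # t = 0 is the interior point, t = 1 is x. Because g is convex, it
    # crosses zero exactly once on this segment, so bisection applies.
    lo, hi = 0.0, 1.0  # lo stays feasible, hi stays infeasible
    for _ in range(max_iter):
        if hi - lo < tol:
            break
        mid = 0.5 * (lo + hi)
        z = [a + mid * (b - a) for a, b in zip(x_interior, x)]
        if g(z) <= 0.0:
            lo = mid
        else:
            hi = mid
    # Return the feasible end of the bracket.
    return [a + lo * (b - a) for a, b in zip(x_interior, x)]


# Usage: map the point (3, 4) into the unit disk.
unit_disk = lambda z: z[0] ** 2 + z[1] ** 2 - 1.0
projected = interpolation_projection(unit_disk, [3.0, 4.0], [0.0, 0.0])
```

Each call only needs evaluations of g, which is what makes this kind of map cheap compared with solving a convex program; the price is that the result is generally not the closest feasible point.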

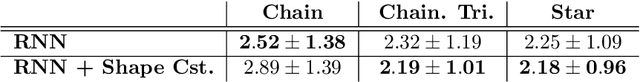

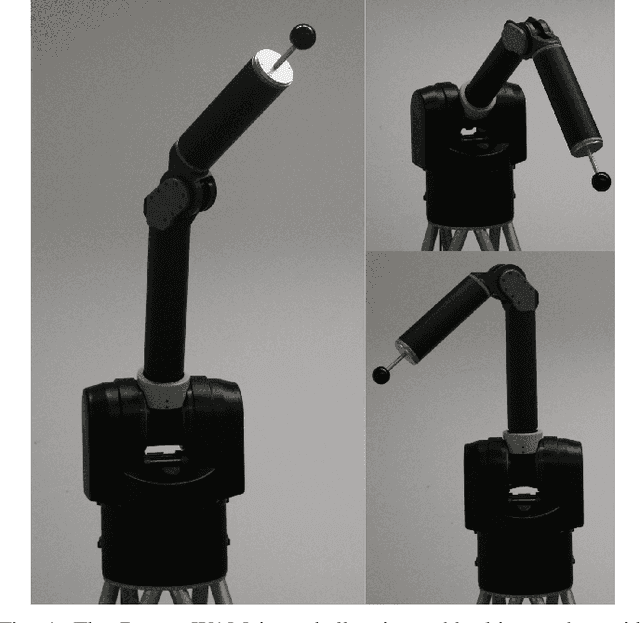

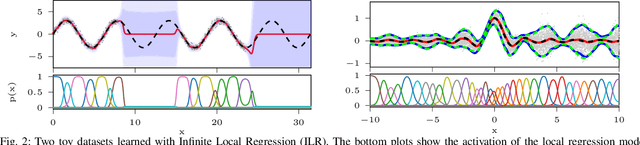

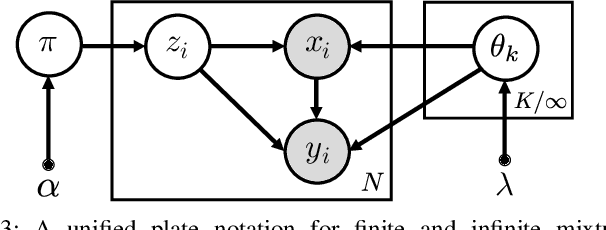

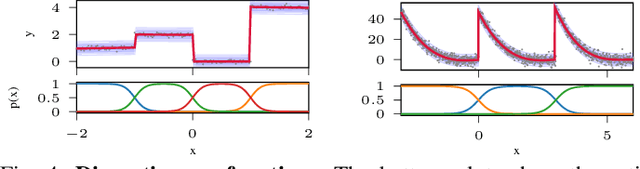

A Variational Infinite Mixture for Probabilistic Inverse Dynamics Learning

Nov 10, 2020

Abstract: Probabilistic regression techniques in control and robotics applications have to fulfill different criteria of data-driven adaptability, computational efficiency, scalability to high dimensions, and the capacity to deal with different modalities in the data. Classical regressors usually fulfill only a subset of these properties. In this work, we extend seminal work on Bayesian nonparametric mixtures and derive an efficient variational Bayes inference technique for infinite mixtures of probabilistic local polynomial models with well-calibrated certainty quantification. We highlight the model's power in combining data-driven complexity adaptation, fast prediction, and the ability to deal with discontinuous functions and heteroscedastic noise. We benchmark this technique on a range of large real inverse dynamics datasets, showing that the infinite mixture formulation is competitive with classical Local Learning methods and regularizes model complexity by adapting the number of components based on data and without relying on heuristics. Moreover, to showcase the practicality of the approach, we use the learned models for online inverse dynamics control of a Barrett WAM manipulator, significantly improving the trajectory tracking performance.

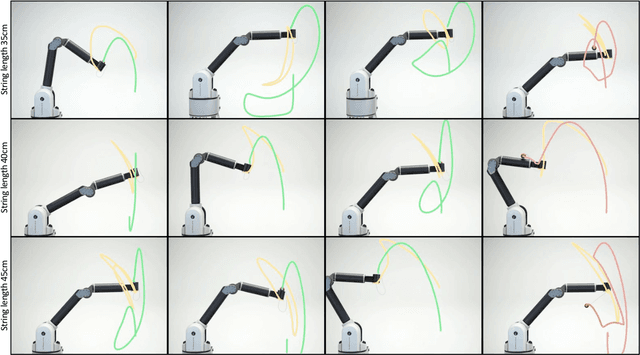

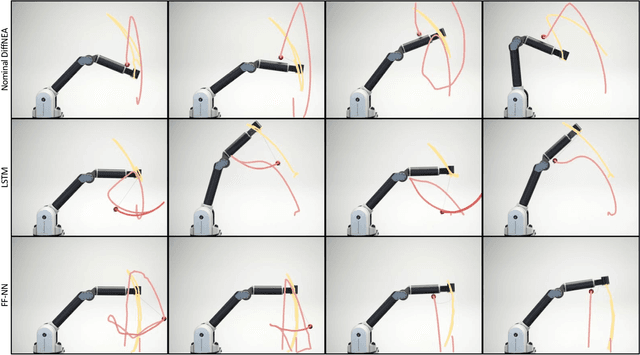

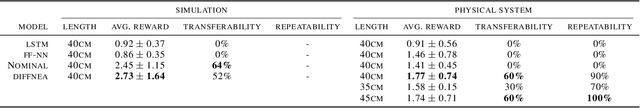

Differentiable Physics Models for Real-world Offline Model-based Reinforcement Learning

Nov 03, 2020

Abstract: A limitation of model-based reinforcement learning (MBRL) is the exploitation of errors in the learned models. Black-box models can fit complex dynamics with high fidelity, but their behavior is undefined outside of the data distribution. Physics-based models are better at extrapolating, due to the general validity of their informed structure, but underfit in the real world due to the presence of unmodeled phenomena. In this work, we demonstrate experimentally that for the offline model-based reinforcement learning setting, physics-based models can be beneficial compared to high-capacity function approximators if the mechanical structure is known. Physics-based models can learn to perform the ball in a cup (BiC) task on a physical manipulator from only 4 minutes of sampled data using offline MBRL. We find that black-box models consistently produce unviable policies for BiC, as all predicted trajectories diverge to physically impossible states, despite having access to more data than the physics-based model. In addition, we generalize the approach of physics parameter identification from modeling holonomic multi-body systems to systems with nonholonomic dynamics using end-to-end automatic differentiation. Videos: https://sites.google.com/view/ball-in-a-cup-in-4-minutes/

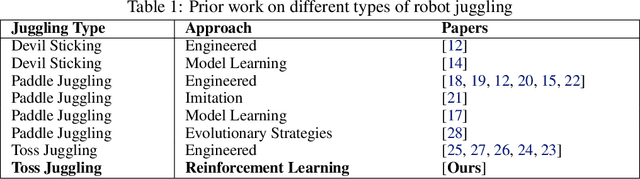

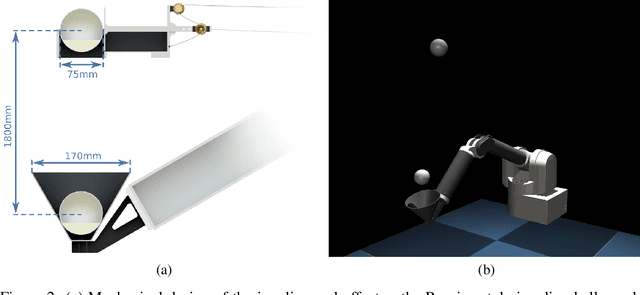

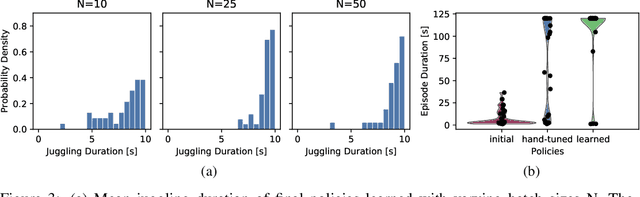

High Acceleration Reinforcement Learning for Real-World Juggling with Binary Rewards

Oct 31, 2020

Abstract: Robots that can learn in the physical world will be important to enable robots to escape their stiff and pre-programmed movements. For dynamic high-acceleration tasks, such as juggling, learning in the real world is particularly challenging as one must push the limits of the robot and its actuation without harming the system, amplifying the necessity of sample efficiency and safety for robot learning algorithms. In contrast to prior work, which mainly focuses on the learning algorithm, we propose a learning system that directly incorporates these requirements in the design of the policy representation, initialization, and optimization. We demonstrate that this system enables the high-speed Barrett WAM manipulator to learn juggling two balls from 56 minutes of experience with a binary reward signal. The final policy juggles continuously for up to 33 minutes or about 4500 repeated catches. The videos documenting the learning process and the evaluation can be found at https://sites.google.com/view/jugglingbot

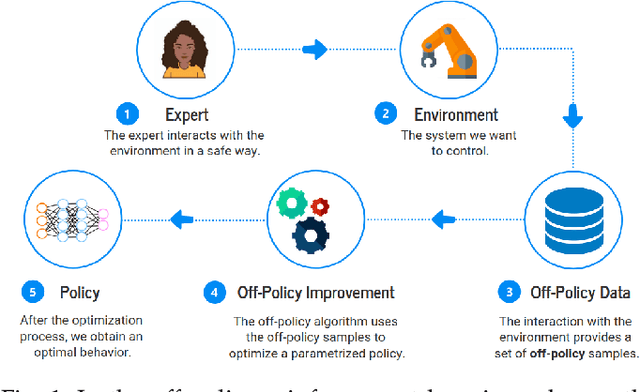

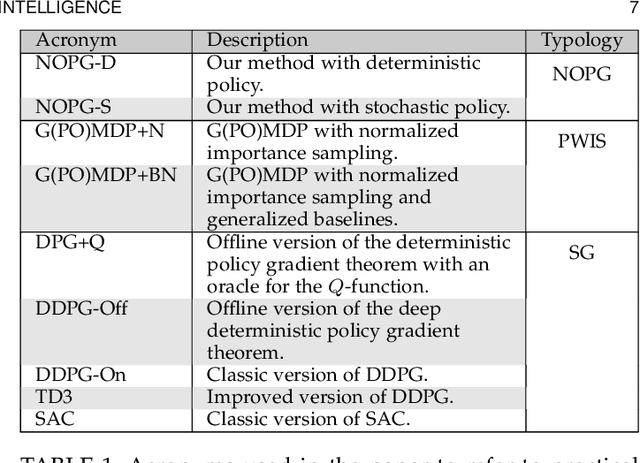

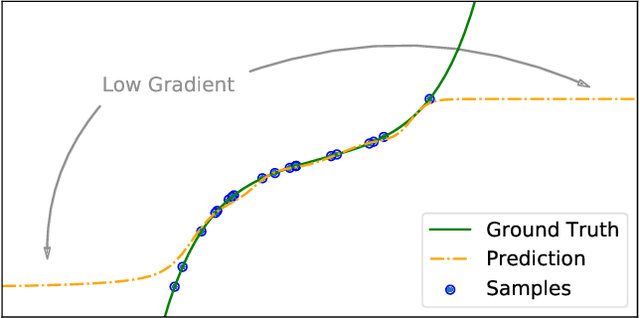

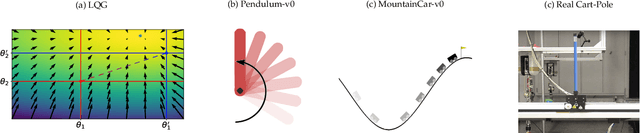

Batch Reinforcement Learning with a Nonparametric Off-Policy Policy Gradient

Oct 29, 2020

Abstract: Off-policy Reinforcement Learning (RL) holds the promise of better data efficiency as it allows sample reuse and potentially enables safe interaction with the environment. Current off-policy policy gradient methods suffer from either high bias or high variance, often delivering unreliable estimates. The price of inefficiency becomes evident in real-world scenarios such as interaction-driven robot learning, where the success of RL has been rather limited, and a very high sample cost hinders straightforward application. In this paper, we propose a nonparametric Bellman equation, which can be solved in closed form. The solution is differentiable w.r.t. the policy parameters and gives access to an estimation of the policy gradient. In this way, we avoid the high variance of importance sampling approaches and the high bias of semi-gradient methods. We empirically analyze the quality of our gradient estimate against state-of-the-art methods, and show that it outperforms the baselines in terms of sample efficiency on classical control tasks.

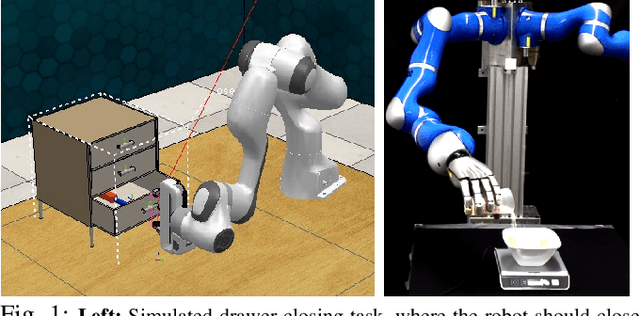

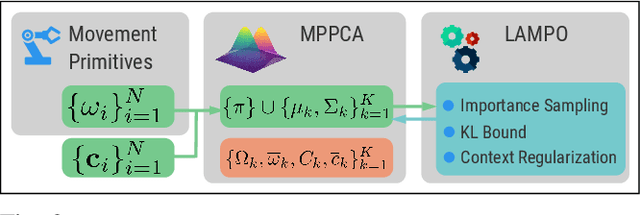

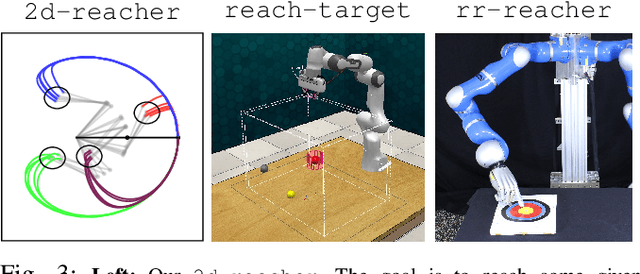

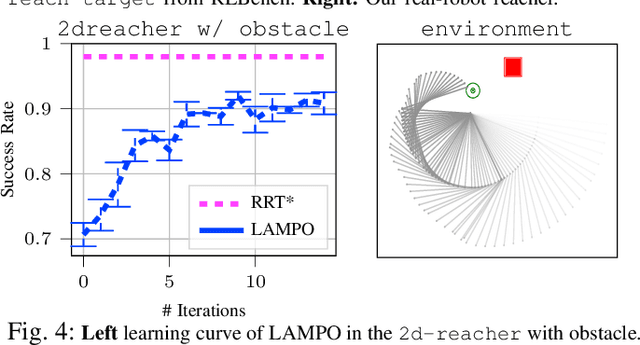

Contextual Latent-Movements Off-Policy Optimization for Robotic Manipulation Skills

Oct 26, 2020

Abstract: Parameterized movement primitives have been extensively used for imitation learning of robotic tasks. However, the high dimensionality of the parameter space hinders the improvement of such primitives in the reinforcement learning (RL) setting, especially for learning with physical robots. In this paper, we propose a novel view on handling the demonstrated trajectories for acquiring low-dimensional, non-linear latent dynamics, using mixtures of probabilistic principal component analyzers (MPPCA) on the movements' parameter space. Moreover, we introduce a new contextual off-policy RL algorithm, named LAtent-Movements Policy Optimization (LAMPO). LAMPO can provide gradient estimates from previous experience using self-normalized importance sampling, hence making full use of samples collected in previous learning iterations. These advantages combined provide a complete framework for sample-efficient off-policy optimization of movement primitives for robot learning of high-dimensional manipulation skills. Our experimental results, conducted both in simulation and on a real robot, show that LAMPO provides sample-efficient policies compared with common approaches in the literature.
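
For readers unfamiliar with self-normalized importance sampling, the following minimal sketch shows the generic estimator for an expected value under a target distribution using samples drawn from a different behavior distribution. It is not LAMPO itself: the function name `snis_estimate` and its arguments are hypothetical, and the abstract does not describe how the estimator is embedded in the gradient computation.

```python
import math


def snis_estimate(values, target_logp, behavior_logp):
    """Self-normalized importance sampling estimate of E_target[value].

    values        : observed quantities (e.g. returns) for each sample
    target_logp   : log-density of each sample under the target distribution
    behavior_logp : log-density of each sample under the sampling distribution
    """
    log_w = [t - b for t, b in zip(target_logp, behavior_logp)]
    shift = max(log_w)  # subtract the max for numerical stability
    w = [math.exp(lw - shift) for lw in log_w]
    total = sum(w)
    # Dividing by the sum of weights (rather than the sample count) is the
    # "self-normalized" part; the common shift cancels in the ratio.
    return sum(wi * vi for wi, vi in zip(w, values)) / total


# Usage: two old samples reweighted toward a new target distribution.
estimate = snis_estimate(
    values=[4.0, 0.0],
    target_logp=[math.log(0.5), math.log(0.5)],
    behavior_logp=[math.log(0.25), math.log(0.75)],
)
```

Normalizing by the sum of the weights introduces a small bias but typically lowers variance relative to plain importance sampling, which is one reason it is attractive when reusing samples from earlier iterations.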

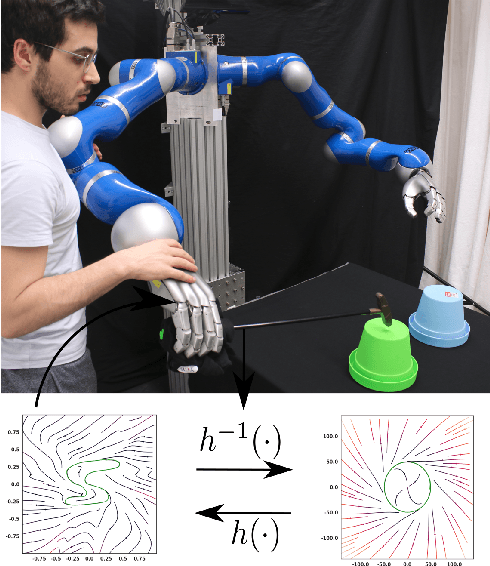

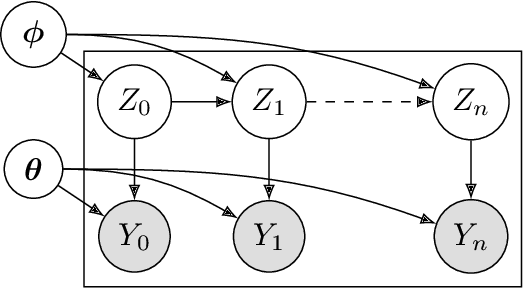

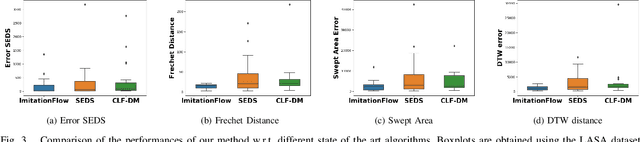

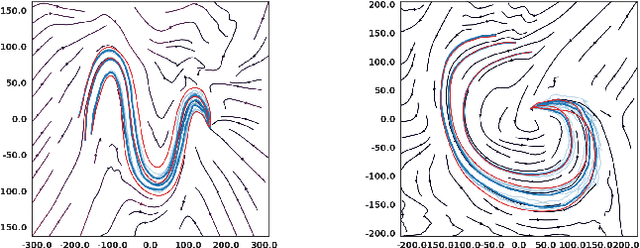

ImitationFlow: Learning Deep Stable Stochastic Dynamic Systems by Normalizing Flows

Oct 25, 2020

Abstract: We introduce ImitationFlow, a novel deep generative model that allows learning complex, globally stable, stochastic, nonlinear dynamics. Our approach extends the Normalizing Flows framework to learn stable Stochastic Differential Equations. We prove the Lyapunov stability for a class of Stochastic Differential Equations and we propose a learning algorithm to learn them from a set of demonstrated trajectories. Our model extends the set of stable dynamical systems that can be represented by state-of-the-art approaches, eliminates the Gaussian assumption on the demonstrations, and outperforms the previous algorithms in terms of representation accuracy. We show the effectiveness of our method with both standard datasets and a real robot experiment.

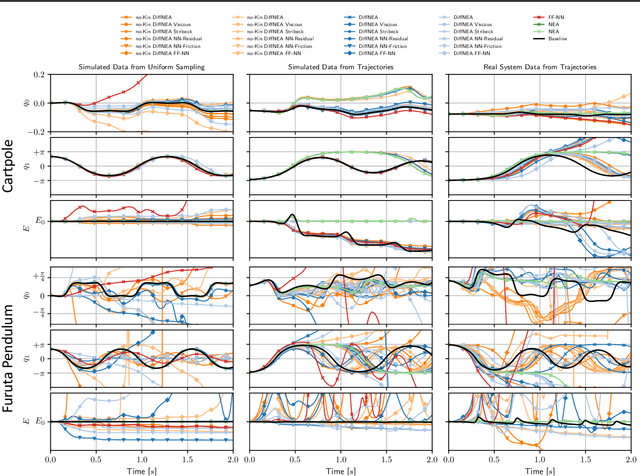

A Differentiable Newton Euler Algorithm for Multi-body Model Learning

Oct 19, 2020

Abstract: In this work, we examine a spectrum of hybrid models for the domain of multi-body robot dynamics. We motivate a computation graph architecture that embodies the Newton Euler equations, emphasizing the utility of the Lie Algebra form in translating the dynamical geometry into an efficient computational structure for learning. We describe the virtual parameters used to enable unconstrained, physically plausible dynamics, as well as the actuator models used. In the experiments, we define a family of 26 grey-box models and evaluate them for system identification of the simulated and physical Furuta Pendulum and Cartpole. The comparison shows that the kinematic parameters, required by previous white-box system identification methods, can be accurately inferred from data. Furthermore, we highlight that models with guaranteed bounded energy of the uncontrolled system generate non-divergent trajectories, while more general models have no such guarantee, so their performance strongly depends on the data distribution. Therefore, the main contribution of this work is the introduction of a white-box model that jointly learns dynamic and kinematic parameters and can be combined with black-box components. We then provide extensive empirical evaluation on challenging systems and different datasets that elucidates the comparative performance of our grey-box architecture with comparable white- and black-box models.

Differentiable Implicit Layers

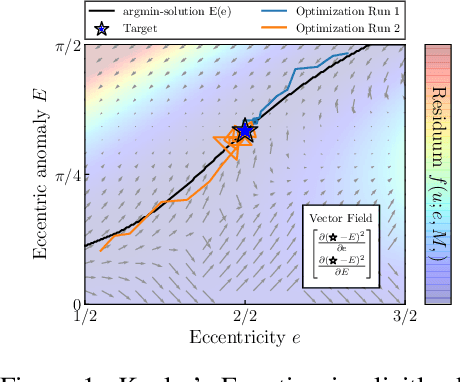

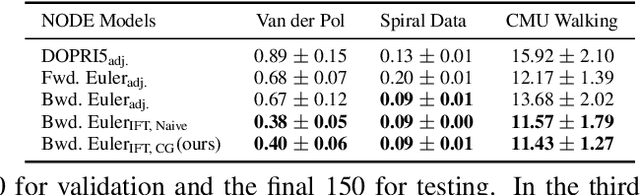

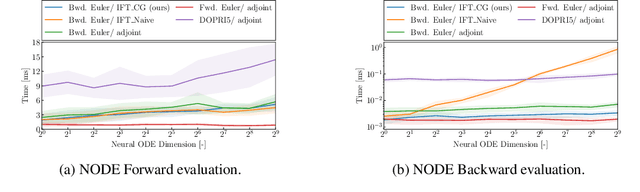

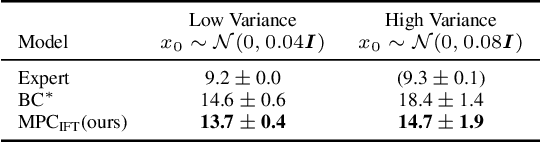

Oct 14, 2020

Abstract: In this paper, we introduce an efficient backpropagation scheme for non-constrained implicit functions. These functions are parametrized by a set of learnable weights and may optionally depend on some input, making them perfectly suitable as a learnable layer in a neural network. We demonstrate our scheme on different applications: (i) neural ODEs with the implicit Euler method, and (ii) system identification in model predictive control.
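
To make the idea of differentiating through an implicit function concrete, here is a minimal scalar sketch of one implicit Euler step whose gradient with respect to a parameter is obtained from the standard implicit function theorem. This is the textbook approach, not the paper's backpropagation scheme, which the abstract does not detail. The function names and the scalar dynamics dz/dt = f(z, theta) are assumptions chosen for illustration.

```python
def implicit_euler_step(f, df_dz, z, theta, h, tol=1e-12, max_iter=50):
    """Solve F(z_next) = z_next - z - h * f(z_next, theta) = 0 with Newton's method."""
    z_next = z  # initial guess: the current state
    for _ in range(max_iter):
        residual = z_next - z - h * f(z_next, theta)
        if abs(residual) < tol:
            break
        # dF/dz_next = 1 - h * df/dz
        z_next -= residual / (1.0 - h * df_dz(z_next, theta))
    return z_next


def step_gradient(df_dz, df_dtheta, z_next, theta, h):
    """d z_next / d theta from the implicit function theorem.

    With F(z_next, theta) = 0:
        dz_next/dtheta = -(dF/dz_next)^(-1) * dF/dtheta
                       = h * df/dtheta / (1 - h * df/dz)
    No differentiation through the Newton iterations is required.
    """
    return h * df_dtheta(z_next, theta) / (1.0 - h * df_dz(z_next, theta))


# Usage with linear decay dz/dt = -theta * z (hypothetical example dynamics).
f = lambda z, theta: -theta * z
df_dz = lambda z, theta: -theta
df_dtheta = lambda z, theta: -z

z1 = implicit_euler_step(f, df_dz, z=1.0, theta=2.0, h=0.1)
grad = step_gradient(df_dz, df_dtheta, z1, theta=2.0, h=0.1)
```

For this linear example the step has the closed form z / (1 + h * theta), so both the solution and its gradient can be checked by hand.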
