Jan Peters

Accelerating Integrated Task and Motion Planning with Neural Feasibility Checking

Mar 20, 2022

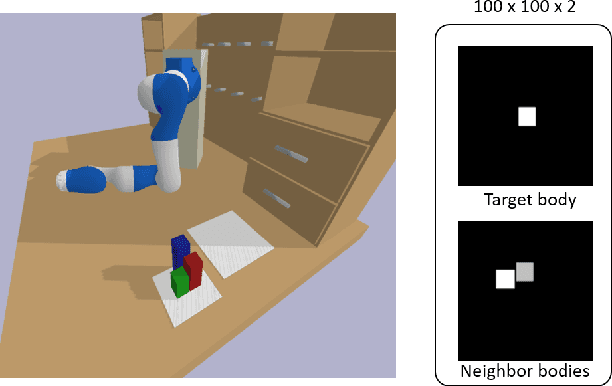

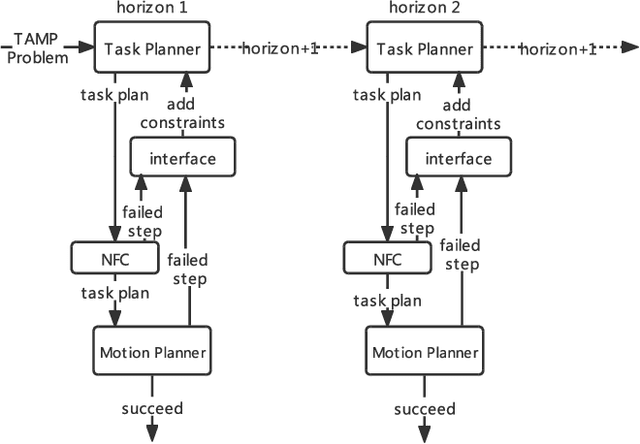

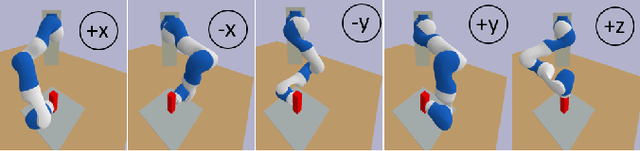

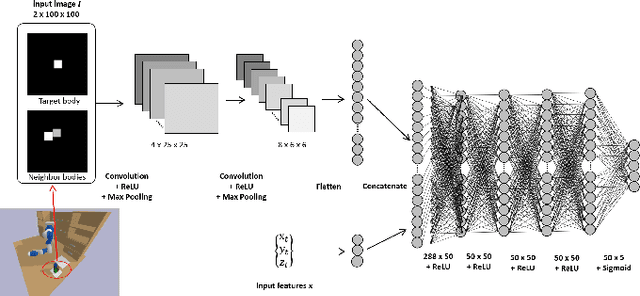

Abstract: As robots play an increasingly important role in industry, expectations about their application to everyday living tasks are rising. Robots need to perform long-horizon tasks that consist of several sub-tasks. Task and Motion Planning (TAMP) provides a hierarchical framework to handle the sequential nature of manipulation tasks by interleaving a symbolic task planner that generates a possible action sequence, with a motion planner that checks the kinematic feasibility in the geometric world, generating robot trajectories if several constraints are satisfied, e.g., a collision-free trajectory from one state to another. Hence, the reasoning about the task plan's geometric grounding is taken over by the motion planner. However, motion planning is computationally intensive, and its use as a feasibility checker renders TAMP methods inapplicable to real-world scenarios. In this paper, we introduce the neural feasibility classifier (NFC), a simple yet effective visual heuristic for classifying the feasibility of proposed actions in TAMP. Namely, NFC identifies infeasible actions of the task planner without the need for costly motion planning, hence reducing planning time in multi-step manipulation tasks. NFC encodes the image of the robot's workspace into a feature map using a convolutional neural network (CNN). We train NFC using simulated data from TAMP problems and label the instances based on IK feasibility checking. Our empirical results in different simulated manipulation tasks show that NFC generalizes to the entire robot workspace and has high prediction accuracy even in scenes with multiple obstructions. When combined with state-of-the-art integrated TAMP, NFC enhances its performance while reducing its planning time.
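The pruning idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: `filter_then_plan`, `toy_score`, `toy_planner`, and the 0.5 threshold are hypothetical names and values chosen for illustration, and the toy classifier is a hand-written rule rather than a CNN over workspace images.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Trajectory = List[float]


def filter_then_plan(
    actions: Sequence[float],
    feasibility_score: Callable[[float], float],
    motion_planner: Callable[[float], Optional[Trajectory]],
    threshold: float = 0.5,
) -> Tuple[List[Tuple[float, Trajectory]], int]:
    """Call the expensive planner only on actions the classifier keeps."""
    plans: List[Tuple[float, Trajectory]] = []
    planner_calls = 0
    for action in actions:
        if feasibility_score(action) < threshold:
            continue  # predicted infeasible: skip motion planning entirely
        planner_calls += 1
        trajectory = motion_planner(action)
        if trajectory is not None:  # the planner remains the final check
            plans.append((action, trajectory))
    return plans, planner_calls


# Toy stand-ins (assumptions): an action is a 1D target position.
def toy_score(x: float) -> float:
    return 1.0 if abs(x) <= 1.0 else 0.0  # crude reachability guess


def toy_planner(x: float) -> Optional[Trajectory]:
    return [0.0, x] if abs(x) <= 0.8 else None  # "true" feasibility


plans, planner_calls = filter_then_plan([0.5, 2.0, 0.9, -3.0], toy_score, toy_planner)
```

In this toy run the planner is called for two of the four candidates. The classifier still lets one infeasible action through, and the planner rejects it afterwards.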

Dimensionality Reduction and Prioritized Exploration for Policy Search

Mar 19, 2022

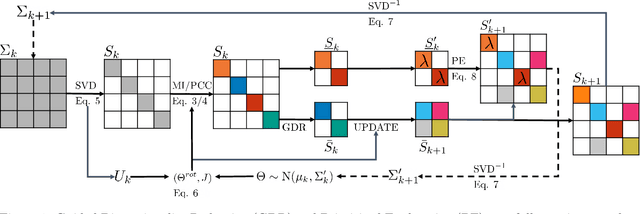

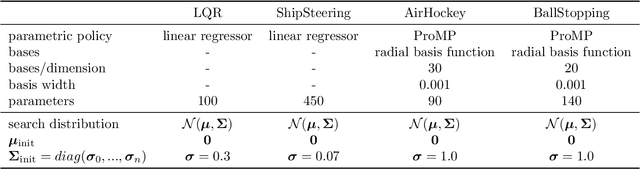

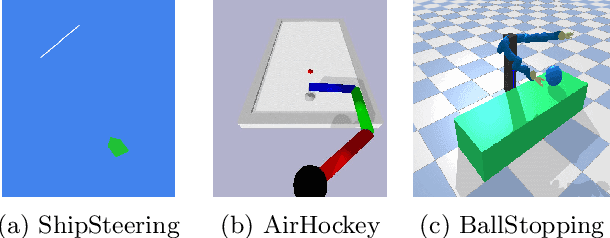

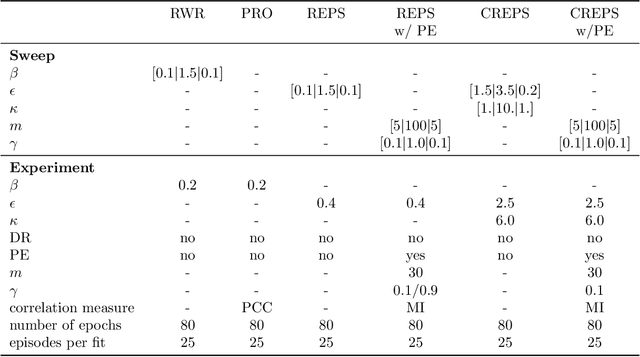

Abstract: Black-box policy optimization is a class of reinforcement learning algorithms that explores and updates the policies at the parameter level. This class of algorithms is widely applied in robotics with movement primitives or non-differentiable policies. Furthermore, these approaches are particularly relevant where exploration at the action level could cause actuator damage or other safety issues. However, black-box optimization does not scale well with the increasing dimensionality of the policy, leading to high demand for samples, which are expensive to obtain in real-world systems. In many practical applications, policy parameters do not contribute equally to the return. Identifying the most relevant parameters makes it possible to narrow down the exploration and speed up the learning. Furthermore, updating only the effective parameters requires fewer samples, improving the scalability of the method. We present a novel method to prioritize the exploration of effective parameters and cope with full covariance matrix updates. Our algorithm learns faster than recent approaches and requires fewer samples to achieve state-of-the-art results. To select the effective parameters, we consider both the Pearson correlation coefficient and the Mutual Information. We showcase the capabilities of our approach on the Relative Entropy Policy Search algorithm in several simulated environments, including robotics simulations. Code is available at https://git.ias.informatik.tu-darmstadt.de/ias\_code/aistats2022/dr-creps.
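As a rough illustration of the parameter-selection step, the sketch below ranks policy parameters by the absolute Pearson correlation between each parameter and the episode return. It is a simplified stand-in under stated assumptions, not the released code: the function name, the synthetic data, and the choice of `k` are hypothetical, and the mutual-information criterion mentioned in the abstract is not shown.

```python
import numpy as np


def rank_parameters_by_correlation(thetas, returns, k):
    """Return the indices of the k parameters with the largest |Pearson r|.

    thetas:  array of shape (num_samples, num_params), sampled policy parameters
    returns: array of shape (num_samples,), return observed for each sample
    """
    thetas = np.asarray(thetas, dtype=float)
    returns = np.asarray(returns, dtype=float)
    theta_centered = thetas - thetas.mean(axis=0)
    return_centered = returns - returns.mean()
    # Pearson r = covariance divided by the product of standard deviations;
    # the 1/n factors cancel, so centered dot products and norms are enough.
    numerator = theta_centered.T @ return_centered
    denominator = np.linalg.norm(theta_centered, axis=0) * np.linalg.norm(return_centered)
    r = numerator / denominator
    top_k = np.argsort(-np.abs(r))[:k]
    return top_k, r


# Synthetic data (assumption): only parameters 1 and 4 influence the return.
rng = np.random.default_rng(0)
thetas = rng.normal(size=(500, 6))
returns = 3.0 * thetas[:, 1] - 2.0 * thetas[:, 4] + 0.1 * rng.normal(size=500)
selected, r = rank_parameters_by_correlation(thetas, returns, k=2)
```

Pearson correlation only captures linear dependence between a parameter and the return, which is one reason a second criterion such as mutual information can be useful.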

Regularized Deep Signed Distance Fields for Reactive Motion Generation

Mar 09, 2022

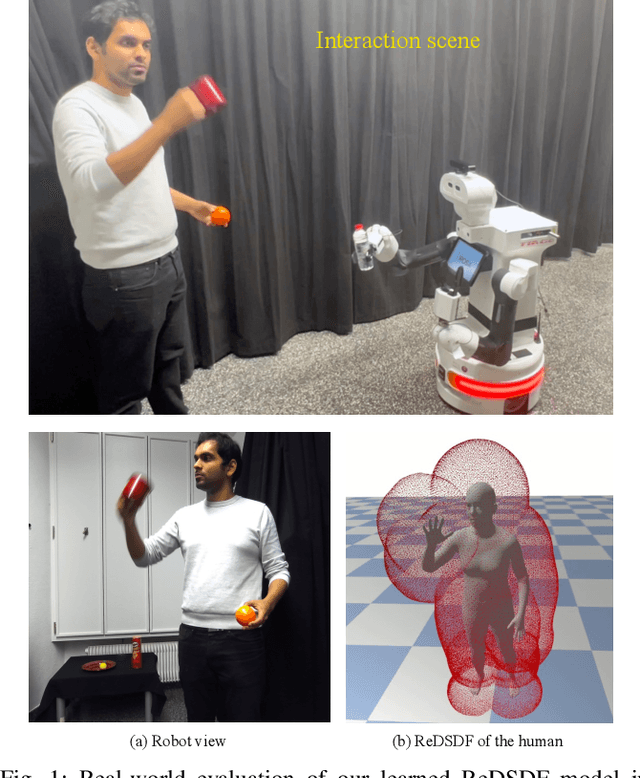

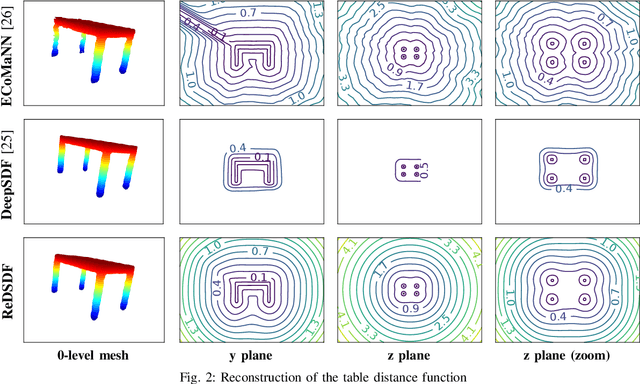

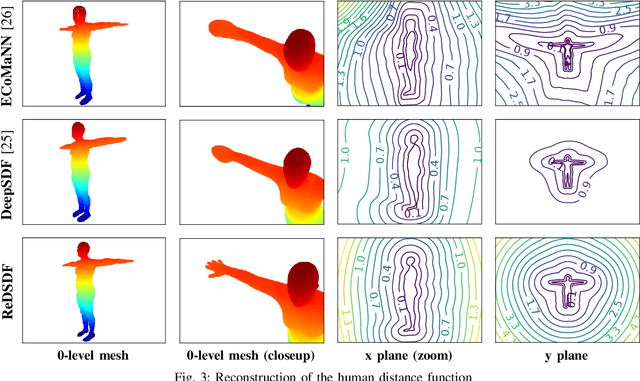

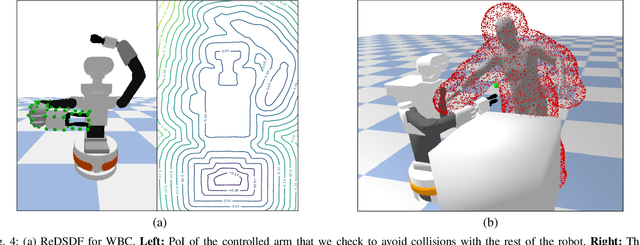

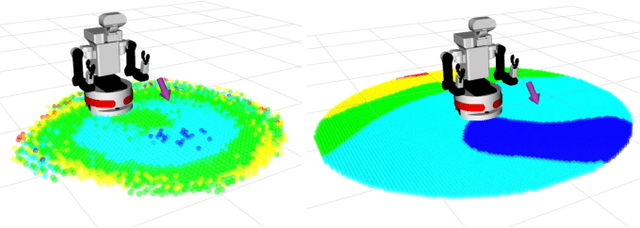

Abstract: Autonomous robots should operate in real-world dynamic environments and collaborate with humans in tight spaces. A key component for allowing robots to leave structured lab and manufacturing settings is their ability to evaluate collisions with the world around them online and in real time. Distance-based constraints are fundamental for enabling robots to plan their actions and act safely, protecting both humans and their hardware. However, different applications require different distance resolutions, leading to various heuristic approaches for measuring distance fields w.r.t. obstacles, which are computationally expensive and hinder their application in dynamic obstacle avoidance use-cases. We propose Regularized Deep Signed Distance Fields (ReDSDF), a single neural implicit function that can compute smooth distance fields at any scale, with fine-grained resolution over high-dimensional manifolds and articulated bodies like humans, thanks to our effective data generation and a simple inductive bias during training. We demonstrate the effectiveness of our approach in representative simulated tasks for whole-body control (WBC) and safe Human-Robot Interaction (HRI) in shared workspaces. Finally, we provide proof of concept of a real-world application in an HRI handover task with a mobile manipulator robot.

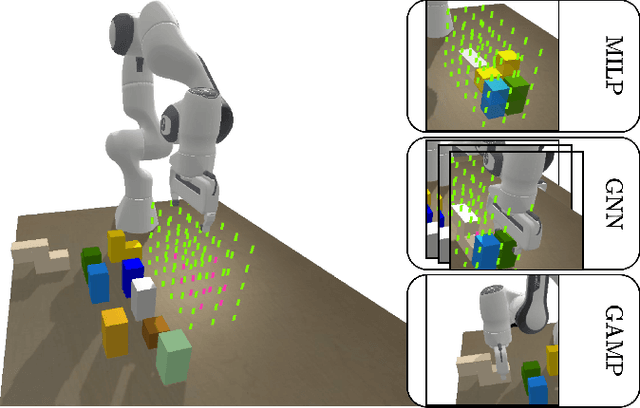

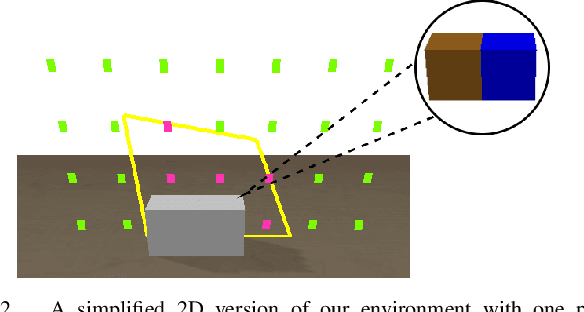

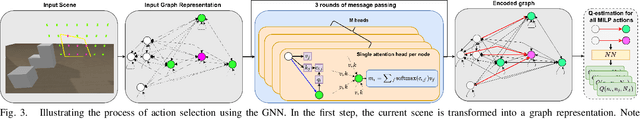

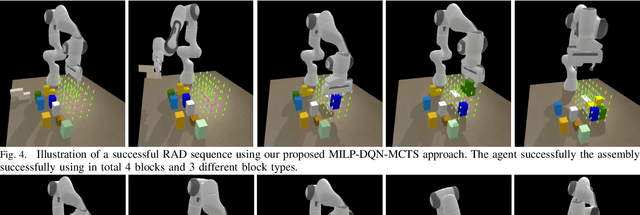

Graph-based Reinforcement Learning meets Mixed Integer Programs: An application to 3D robot assembly discovery

Mar 08, 2022

Abstract: Robot assembly discovery is a challenging problem that lives at the intersection of resource allocation and motion planning. The goal is to combine a predefined set of objects to form something new while considering task execution with the robot-in-the-loop. In this work, we tackle the problem of building arbitrary, predefined target structures entirely from scratch using a set of Tetris-like building blocks and a robotic manipulator. Our novel hierarchical approach aims at efficiently decomposing the overall task into three feasible levels that benefit mutually from each other. On the high level, we run a classical mixed-integer program for global optimization of block-type selection and the blocks' final poses to recreate the desired shape. Its output is then exploited to efficiently guide the exploration of an underlying reinforcement learning (RL) policy. This RL policy draws its generalization properties from a flexible graph-based representation that is learned through Q-learning and can be refined with search. Moreover, it accounts for the necessary conditions of structural stability and robotic feasibility that cannot be effectively reflected in the previous layer. Lastly, a grasp and motion planner transforms the desired assembly commands into robot joint movements. We demonstrate the performance of the proposed method on a set of competitive simulated robot assembly discovery environments and report performance and robustness gains compared to an unstructured end-to-end approach. Videos are available at https://sites.google.com/view/rl-meets-milp.

Robot Learning of Mobile Manipulation with Reachability Behavior Priors

Mar 08, 2022

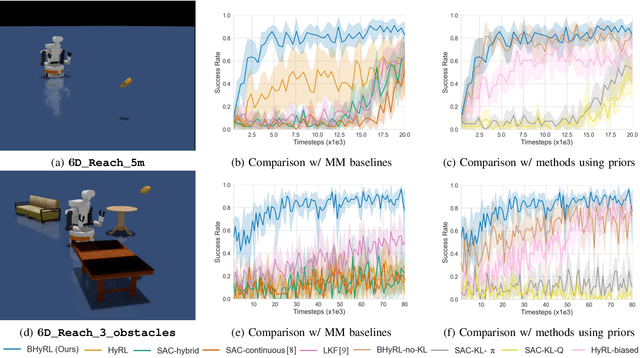

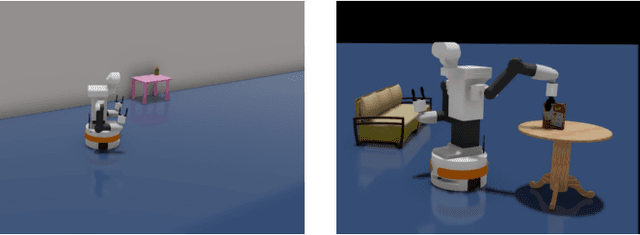

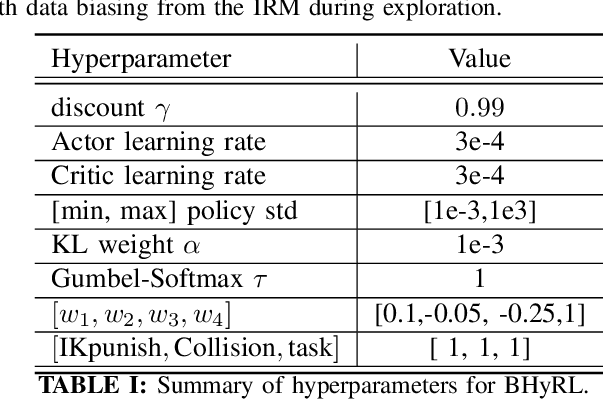

Abstract: Mobile Manipulation (MM) systems are ideal candidates for taking up the role of a personal assistant in unstructured real-world environments. Among other challenges, MM requires effective coordination of the robot's embodiments for executing tasks that require both mobility and manipulation. Reinforcement Learning (RL) holds the promise of endowing robots with adaptive behaviors, but most methods require prohibitively large amounts of data for learning a useful control policy. In this work, we study the integration of robotic reachability priors in actor-critic RL methods for accelerating the learning of MM for reaching and fetching tasks. Namely, we consider the problem of optimal base placement and the subsequent decision of whether to activate the arm for reaching a 6D target. For this, we devise a novel Hybrid RL method that handles discrete and continuous actions jointly, resorting to the Gumbel-Softmax reparameterization. Next, we train a reachability prior using data from the operational robot workspace, inspired by classical methods. Subsequently, we derive Boosted Hybrid RL (BHyRL), a novel algorithm for learning Q-functions by modeling them as a sum of residual approximators. Every time a new task needs to be learned, we can transfer our learned residuals and learn the component of the Q-function that is task-specific, hence maintaining the task structure from prior behaviors. Moreover, we find that regularizing the target policy with a prior policy yields more expressive behaviors. We evaluate our method in simulation in reaching and fetching tasks of increasing difficulty, and we show the superior performance of BHyRL against baseline methods. Finally, we zero-transfer our learned 6D fetching policy with BHyRL to our MM robot TIAGo++. For more details and code release, please refer to our project site: irosalab.com/rlmmbp
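For readers unfamiliar with the Gumbel-Softmax reparameterization mentioned above, here is a minimal NumPy sketch of the sampling step for a two-way discrete decision such as "activate the arm" versus "keep moving the base". The function name, the probabilities, and the temperature are illustrative assumptions; the paper's hybrid policy and its training loop are not reproduced here.

```python
import numpy as np


def gumbel_softmax_sample(logits, temperature, rng, num_samples=1):
    """Draw relaxed one-hot samples from a categorical distribution.

    Each sample is softmax((logits + g) / temperature) with g ~ Gumbel(0, 1).
    Lower temperatures push samples closer to exact one-hot vectors.
    """
    logits = np.asarray(logits, dtype=float)
    u = rng.uniform(low=1e-12, high=1.0, size=(num_samples, logits.shape[-1]))
    gumbel_noise = -np.log(-np.log(u))  # inverse-CDF sampling of Gumbel(0, 1)
    z = (logits + gumbel_noise) / temperature
    z -= z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    weights = np.exp(z)
    return weights / weights.sum(axis=-1, keepdims=True)


# Hypothetical discrete decision: index 0 = activate arm, index 1 = move base.
rng = np.random.default_rng(0)
logits = np.log([0.7, 0.3])
samples = gumbel_softmax_sample(logits, temperature=0.5, rng=rng, num_samples=20000)
arm_fraction = float(np.mean(samples.argmax(axis=-1) == 0))
```

The argmax of each relaxed sample follows the original categorical distribution (the Gumbel-max property), so `arm_fraction` should be close to 0.7. The relaxed sample itself is a smooth function of the logits, which lets gradients pass through the discrete choice.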

A Hierarchical Approach to Active Pose Estimation

Mar 08, 2022

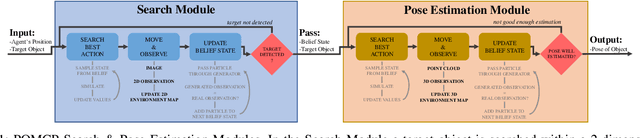

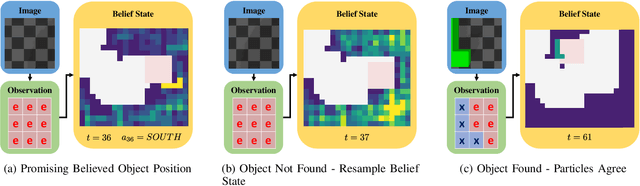

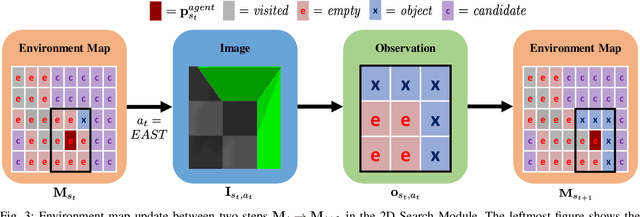

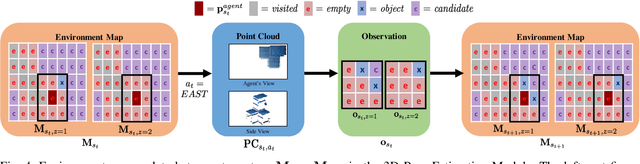

Abstract: Creating mobile robots which are able to find and manipulate objects in large environments is an active topic of research. These robots not only need to be capable of searching for specific objects but also of estimating their poses, often relying on environment observations, which is even more difficult in the presence of occlusions. Therefore, to tackle this problem, we propose a simple hierarchical approach to estimate the pose of a desired object. An Active Visual Search module operating with RGB images first obtains a rough estimation of the object 2D pose, followed by a more computationally expensive Active Pose Estimation module using point cloud data. We empirically show that processing image features to obtain a richer observation speeds up the search and pose estimation computations, in comparison to a binary decision that indicates whether or not the object is in the current image.

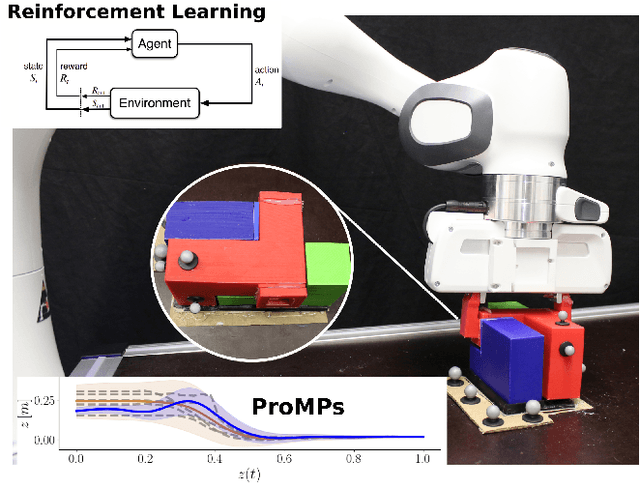

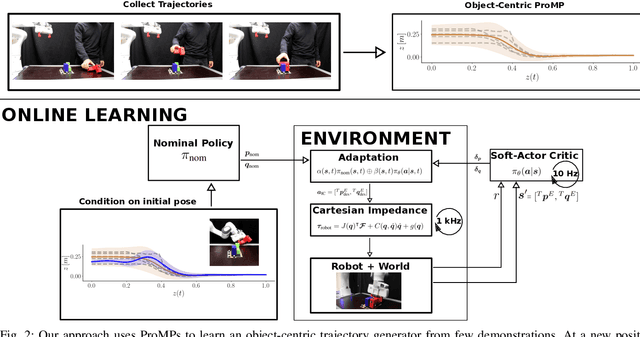

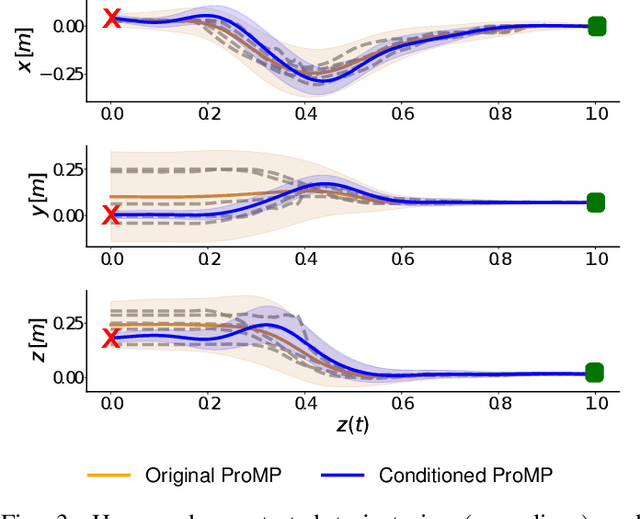

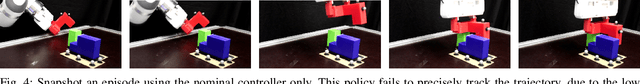

Residual Robot Learning for Object-Centric Probabilistic Movement Primitives

Mar 08, 2022

Abstract: It is desirable for future robots to quickly learn new tasks and adapt learned skills to constantly changing environments. To this end, Probabilistic Movement Primitives (ProMPs) have been shown to be a promising framework to learn generalizable trajectory generators from distributions over demonstrated trajectories. However, in practical applications that require high precision in the manipulation of objects, the accuracy of ProMPs is often insufficient, in particular when they are learned in Cartesian space from external observations and executed with limited controller gains. Therefore, we propose to combine ProMPs with the recently introduced Residual Reinforcement Learning (RRL) to account for corrections in both position and orientation during task execution. In particular, we learn a residual on top of a nominal ProMP trajectory with Soft Actor-Critic and incorporate the variability in the demonstrations as a decision variable to reduce the search space for RRL. As a proof of concept, we evaluate our proposed method on a 3D block insertion task with a 7-DoF Franka Emika Panda robot. Experimental results show that the robot successfully learns to complete the insertion, which was not possible using basic ProMPs alone.

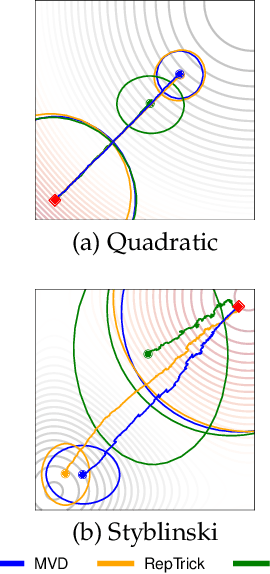

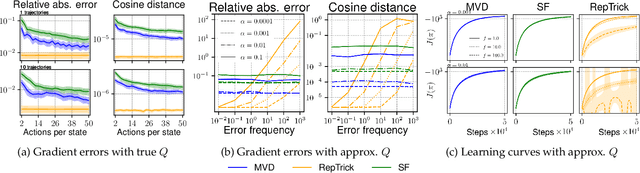

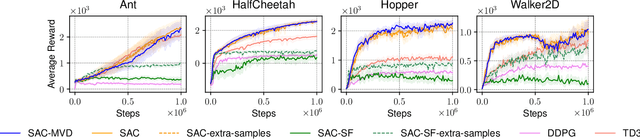

An Analysis of Measure-Valued Derivatives for Policy Gradients

Mar 08, 2022

Abstract: Reinforcement learning methods for robotics are increasingly successful due to the constant development of better policy gradient techniques. A precise (low variance) and accurate (low bias) gradient estimator is crucial to face increasingly complex tasks. Traditional policy gradient algorithms use the likelihood-ratio trick, which is known to produce unbiased but high variance estimates. More modern approaches exploit the reparametrization trick, which gives lower variance gradient estimates but requires differentiable value function approximators. In this work, we study a different type of stochastic gradient estimator: the Measure-Valued Derivative. This estimator is unbiased, has low variance, and can be used with differentiable and non-differentiable function approximators. We empirically evaluate this estimator in the actor-critic policy gradient setting and show that it can reach comparable performance with methods based on the likelihood-ratio or reparametrization tricks, both in low and high-dimensional action spaces. With this work, we want to show that the Measure-Valued Derivative estimator can be a useful alternative to other policy gradient estimators.
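The three estimators named in this abstract can be compared on a toy problem: estimating the derivative of E[f(x)] with respect to the mean of a one-dimensional Gaussian, x ~ N(mu, sigma^2). The sketch below is illustrative only and does not reflect the paper's actor-critic setup. The test function f(x) = x^2, the sample size, and the function names are assumptions. For the measure-valued derivative it uses the standard decomposition of the Gaussian mean derivative into two Rayleigh-shifted measures with constant 1 / (sigma * sqrt(2 * pi)), and it reuses the same Rayleigh sample for both branches (a coupling choice made here to reduce variance).

```python
import numpy as np


def f(x):
    return x ** 2


def f_prime(x):
    return 2.0 * x  # needed only by the reparametrization estimator


def likelihood_ratio_grad(mu, sigma, n, rng):
    """Score-function estimator: E[f(x) * d/dmu log N(x; mu, sigma^2)]."""
    x = rng.normal(mu, sigma, size=n)
    return float(np.mean(f(x) * (x - mu) / sigma ** 2))


def reparametrization_grad(mu, sigma, n, rng):
    """Pathwise estimator: E[f'(mu + sigma * eps)], eps ~ N(0, 1)."""
    eps = rng.standard_normal(n)
    return float(np.mean(f_prime(mu + sigma * eps)))


def measure_valued_grad(mu, sigma, n, rng):
    """Measure-valued derivative w.r.t. the mean; f need not be differentiable."""
    y = rng.rayleigh(scale=1.0, size=n)  # shared by the positive and negative parts
    constant = 1.0 / (sigma * np.sqrt(2.0 * np.pi))
    return float(constant * np.mean(f(mu + sigma * y) - f(mu - sigma * y)))


mu, sigma, n = 1.5, 0.7, 200_000
rng = np.random.default_rng(0)
lr_estimate = likelihood_ratio_grad(mu, sigma, n, rng)
rp_estimate = reparametrization_grad(mu, sigma, n, rng)
mvd_estimate = measure_valued_grad(mu, sigma, n, rng)
true_gradient = 2.0 * mu  # d/dmu E[x^2] = d/dmu (mu^2 + sigma^2)
```

All three estimates should land near the analytic value `2 * mu`. Only the reparametrization estimator calls `f_prime`, which mirrors the abstract's remark that it requires a differentiable function approximator.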

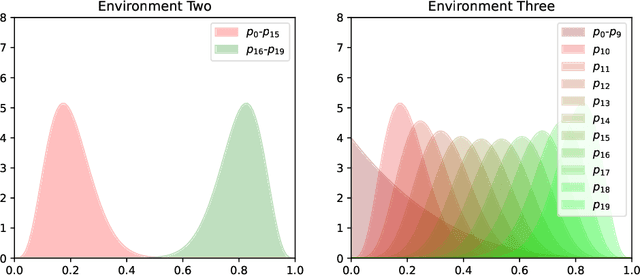

PAC-Bayesian Lifelong Learning For Multi-Armed Bandits

Mar 07, 2022

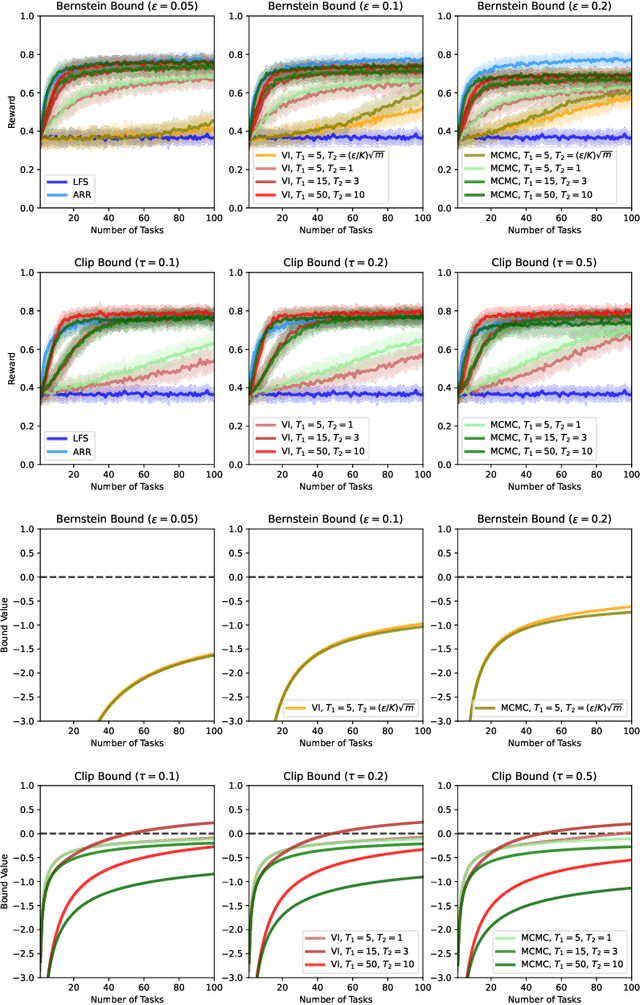

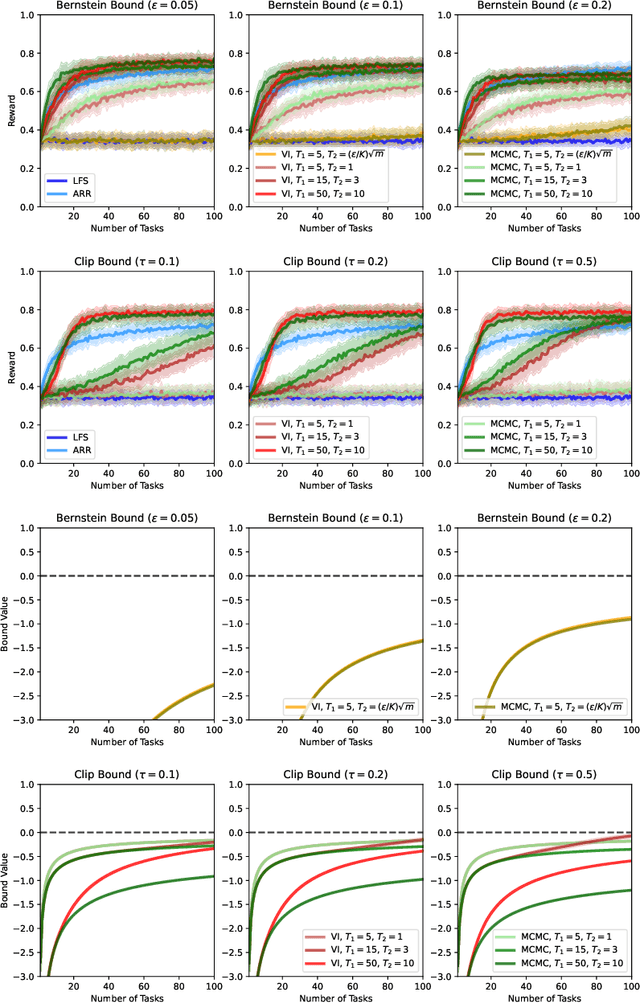

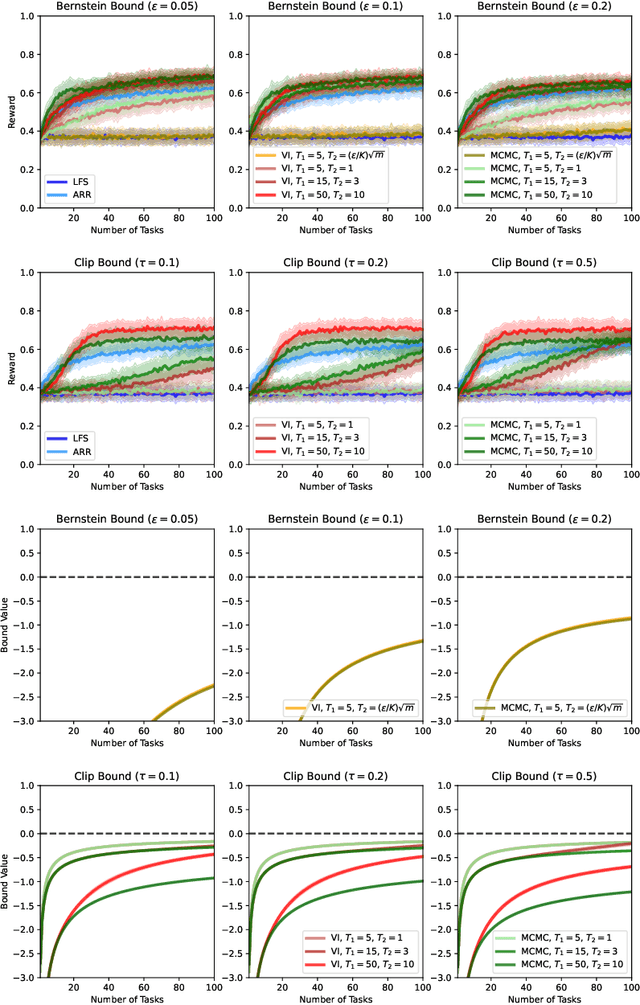

Abstract: We present a PAC-Bayesian analysis of lifelong learning. In the lifelong learning problem, a sequence of learning tasks is observed one-at-a-time, and the goal is to transfer information acquired from previous tasks to new learning tasks. We consider the case when each learning task is a multi-armed bandit problem. We derive lower bounds on the expected average reward that would be obtained if a given multi-armed bandit algorithm were run in a new task with a particular prior and for a set number of steps. We propose lifelong learning algorithms that use our new bounds as learning objectives. Our proposed algorithms are evaluated in several lifelong multi-armed bandit problems and are found to perform better than a baseline method that does not use generalisation bounds.

* 29 pages, 5 figures

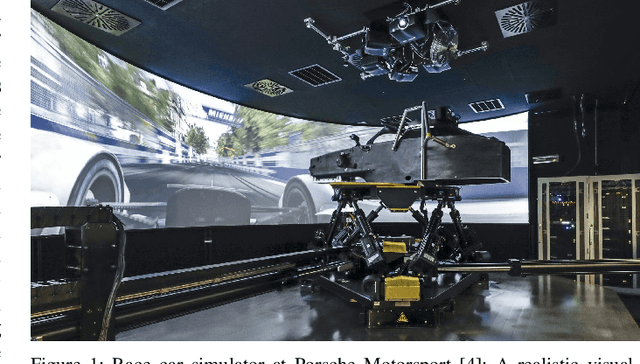

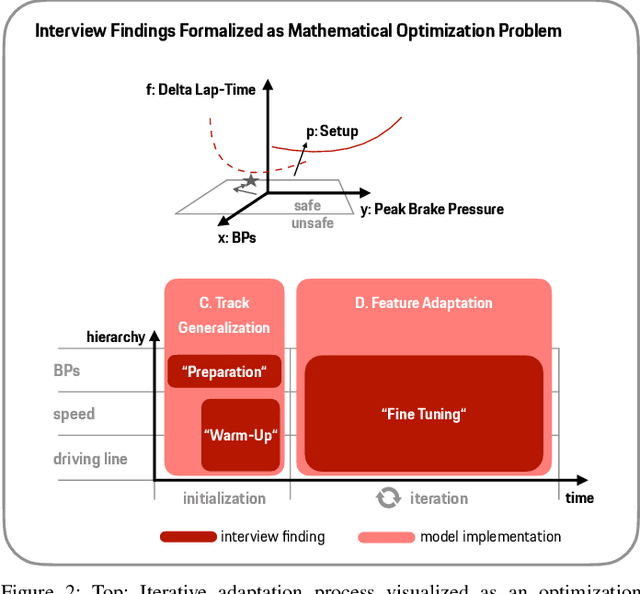

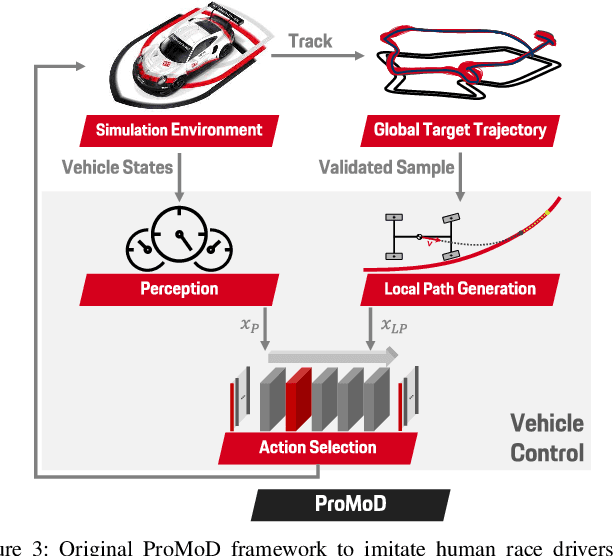

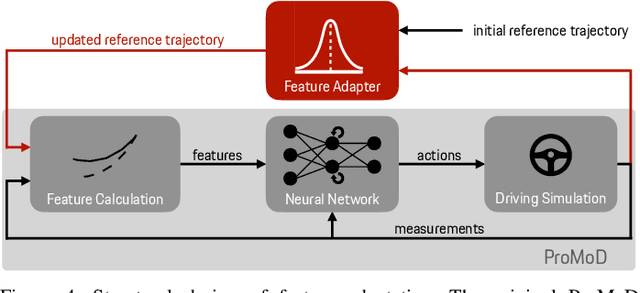

An Adaptive Human Driver Model for Realistic Race Car Simulations

Mar 03, 2022

Abstract: Engineering a high-performance race car requires a direct consideration of the human driver using real-world tests or Human-Driver-in-the-Loop simulations. Apart from that, offline simulations with human-like race driver models could make this vehicle development process more effective and efficient but are hard to obtain due to various challenges. With this work, we intend to provide a better understanding of race driver behavior and introduce an adaptive human race driver model based on imitation learning. Using existing findings and an interview with a professional race engineer, we identify fundamental adaptation mechanisms and how drivers learn to optimize lap time on a new track. Subsequently, we use these insights to develop generalization and adaptation techniques for a recently presented probabilistic driver modeling approach and evaluate it using data from professional race drivers and a state-of-the-art race car simulator. We show that our framework can create realistic driving line distributions on unseen race tracks with almost human-like performance. Moreover, our driver model optimizes its driving lap by lap, correcting driving errors from previous laps while achieving faster lap times. This work contributes to a better understanding and modeling of the human driver, aiming to expedite simulation methods in the modern vehicle development process and potentially supporting automated driving and racing technologies.
