Ilya Kolmanovsky

Safe Control and Learning Using Generalized Action Governor

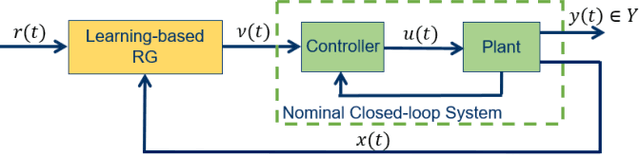

Nov 22, 2022

Abstract:This paper introduces the Generalized Action Governor, which is a supervisory scheme for augmenting a nominal closed-loop system with the capability of strictly handling constraints. After presenting its theory for general systems and introducing tailored design approaches for linear and discrete systems, we discuss its application to safe online learning, which aims to safely evolve control parameters using real-time data to improve performance for uncertain systems. In particular, we propose two safe learning algorithms based on integration of reinforcement learning/data-driven Koopman operator-based control with the generalized action governor. The developments are illustrated with a numerical example.

Robust Action Governor for Uncertain Piecewise Affine Systems with Non-convex Constraints and Safe Reinforcement Learning

Jul 17, 2022

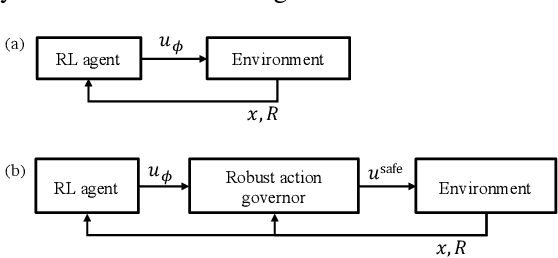

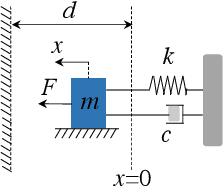

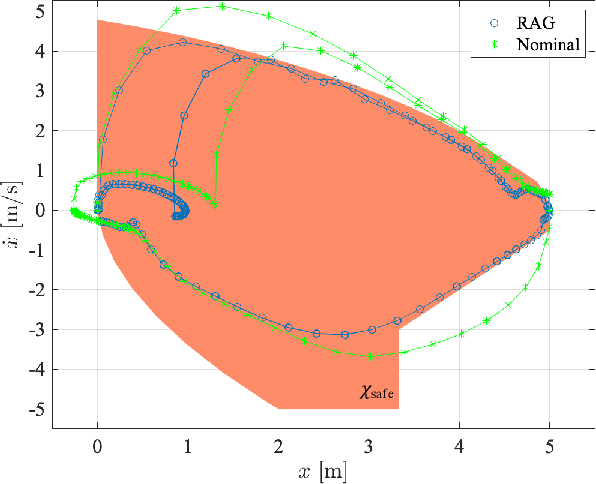

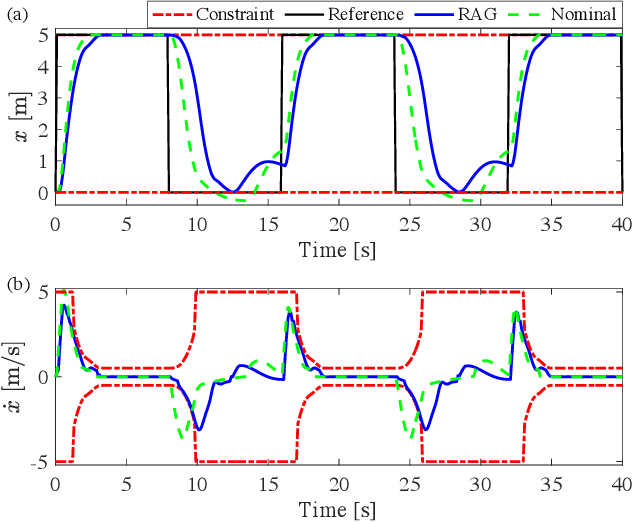

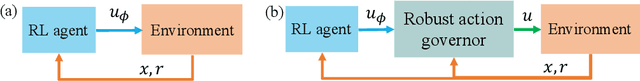

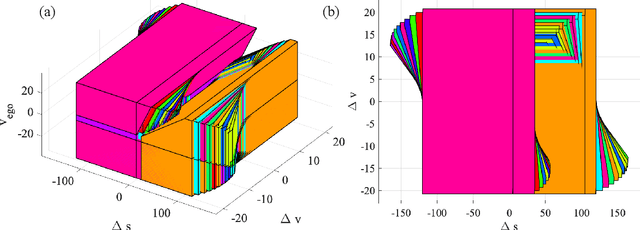

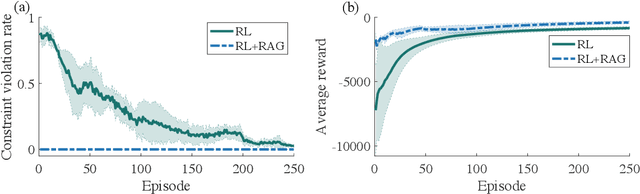

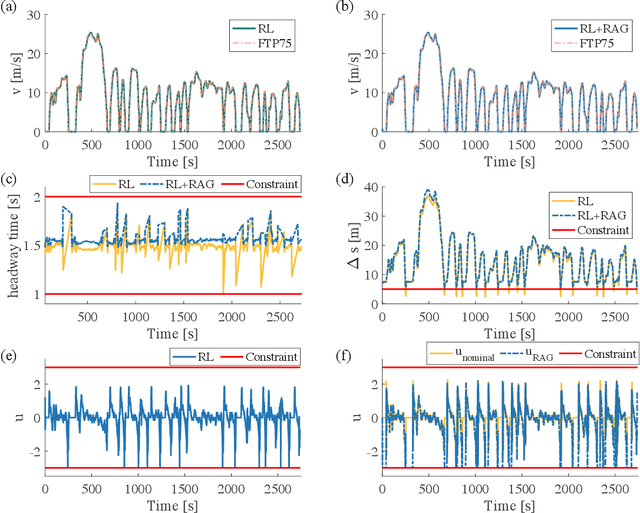

Abstract:The action governor is an add-on scheme to a nominal control loop that monitors and adjusts the control actions to enforce safety specifications expressed as pointwise-in-time state and control constraints. In this paper, we introduce the Robust Action Governor (RAG) for systems the dynamics of which can be represented using discrete-time Piecewise Affine (PWA) models with both parametric and additive uncertainties and subject to non-convex constraints. We develop the theoretical properties and computational approaches for the RAG. After that, we introduce the use of the RAG for realizing safe Reinforcement Learning (RL), i.e., ensuring all-time constraint satisfaction during online RL exploration-and-exploitation process. This development enables safe real-time evolution of the control policy and adaptation to changes in the operating environment and system parameters (due to aging, damage, etc.). We illustrate the effectiveness of the RAG in constraint enforcement and safe RL using the RAG by considering their applications to a soft-landing problem of a mass-spring-damper system.
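The action-governor idea described in this abstract (pass the nominal action through when it is safe, otherwise replace it with the closest safe one) can be illustrated with a toy sketch. Everything in it is an assumption made for illustration and is not taken from the paper: the scalar model `x_next = x + u + w`, the bounded disturbance `|w| <= w_max`, the interval constraint `|x| <= x_max`, the finite action set, and the function name `action_governor`. The paper itself treats piecewise affine models and non-convex constraints, which this sketch does not attempt.

```python
def action_governor(x, u_nom, actions, x_max, w_max):
    """Return the admissible action closest to the nominal action u_nom.

    Toy assumptions (not from the paper): scalar dynamics x_next = x + u + w,
    disturbance bounded by |w| <= w_max, and state constraint |x| <= x_max.
    """
    # An action is robustly safe if the next state satisfies the constraint
    # for every admissible disturbance, i.e. |x + u| <= x_max - w_max.
    margin = x_max - w_max
    safe_actions = [u for u in actions if abs(x + u) <= margin]
    if not safe_actions:
        raise ValueError("no safe action exists from this state")
    # Minimal modification: stay as close as possible to the nominal action.
    return min(safe_actions, key=lambda u: abs(u - u_nom))


if __name__ == "__main__":
    actions = [-0.5, 0.0, 0.5]
    # Nominal action is safe, so it passes through unchanged.
    print(action_governor(0.0, 0.5, actions, x_max=1.0, w_max=0.1))
    # Nominal action would violate the constraint, so it is overridden.
    print(action_governor(0.8, 0.5, actions, x_max=1.0, w_max=0.1))
```

In a safe RL loop of the kind the abstract mentions, a filter like this would sit between the learning agent and the plant, so that exploratory actions are corrected before they are applied.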

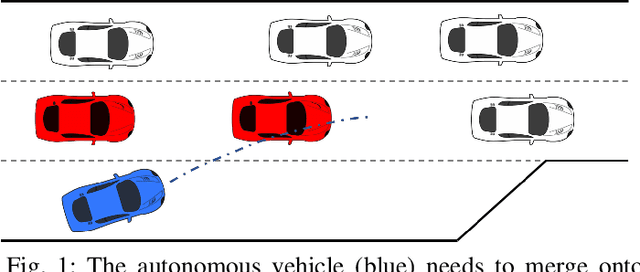

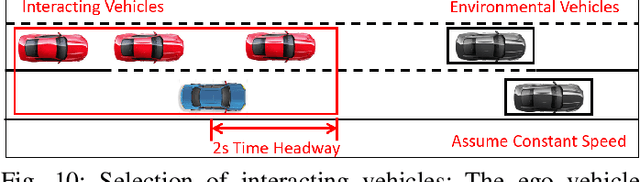

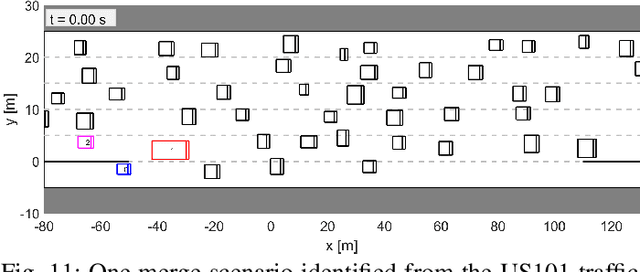

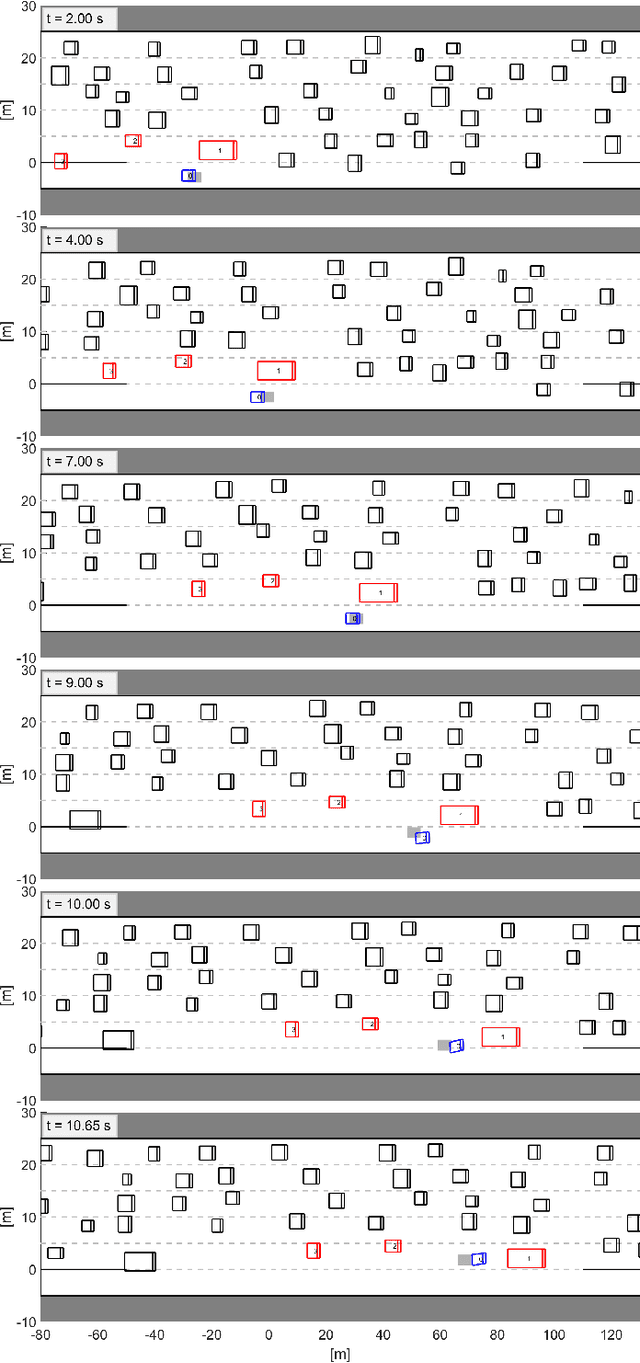

Interaction-Aware Trajectory Prediction and Planning for Autonomous Vehicles in Forced Merge Scenarios

Dec 14, 2021

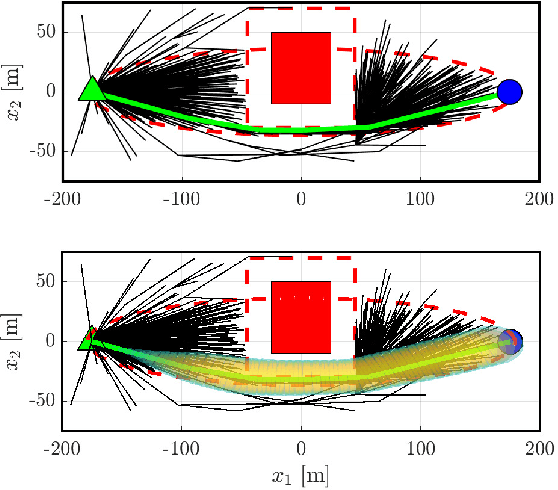

Abstract:Merging is, in general, a challenging task for both human drivers and autonomous vehicles, especially in dense traffic, because the merging vehicle typically needs to interact with other vehicles to identify or create a gap and safely merge into it. In this paper, we consider the problem of autonomous vehicle control for forced merge scenarios. We propose a novel game-theoretic controller, called the Leader-Follower Game Controller (LFGC), in which the interactions between the autonomous ego vehicle and other vehicles with a priori uncertain driving intentions are modeled as a partially observable leader-follower game. The LFGC estimates the other vehicles' intentions online based on observed trajectories, and then predicts their future trajectories and plans the ego vehicle's own trajectory using Model Predictive Control (MPC) to simultaneously achieve probabilistically guaranteed safety and merging objectives. To verify the performance of LFGC, we test it in simulations and with the NGSIM data, where the LFGC demonstrates a high success rate of 97.5% in merging.

Energy-Efficient Autonomous Driving Using Cognitive Driver Behavioral Models and Reinforcement Learning

Nov 27, 2021

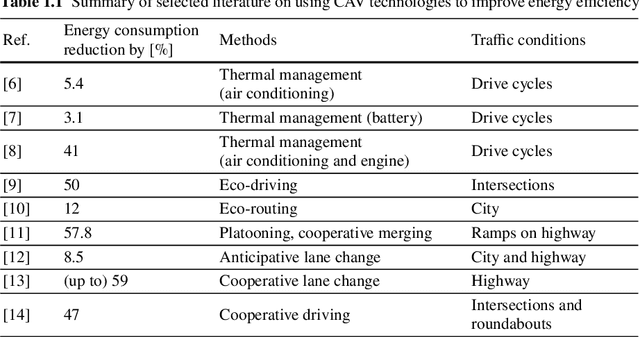

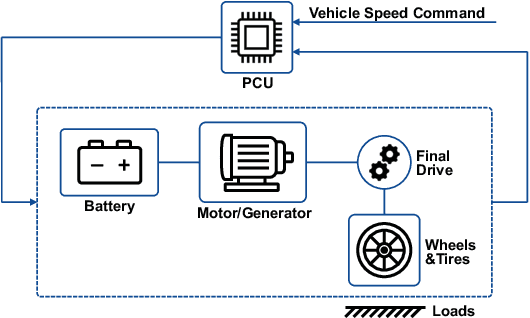

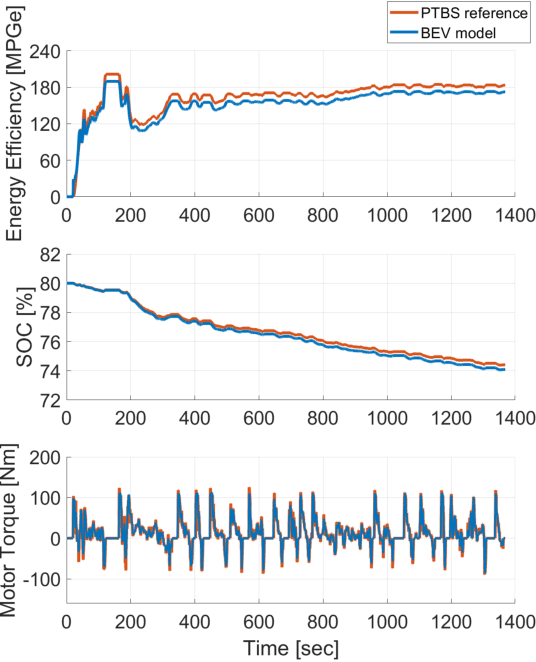

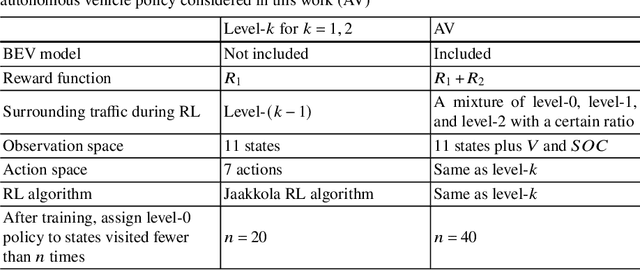

Abstract:Autonomous driving technologies are expected to not only improve mobility and road safety but also bring energy efficiency benefits. In the foreseeable future, autonomous vehicles (AVs) will operate on roads shared with human-driven vehicles. To maintain safety and liveness while simultaneously minimizing energy consumption, the AV planning and decision-making process should account for interactions between the autonomous ego vehicle and surrounding human-driven vehicles. In this chapter, we describe a framework for developing energy-efficient autonomous driving policies on shared roads by exploiting human-driver behavior modeling based on cognitive hierarchy theory and reinforcement learning.

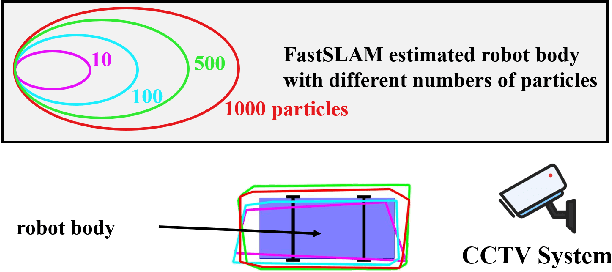

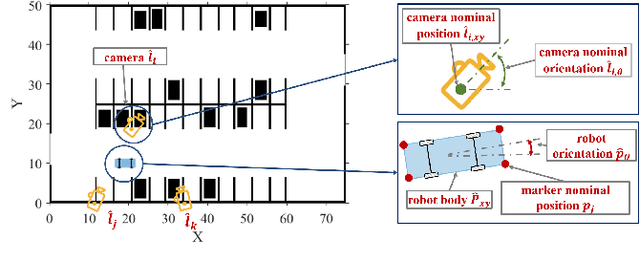

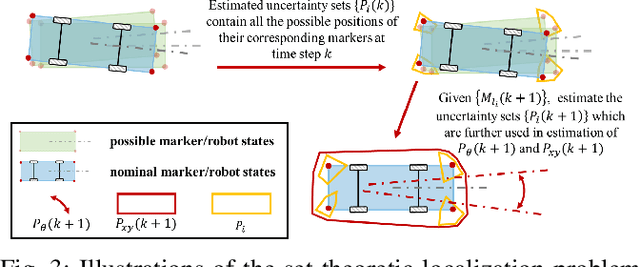

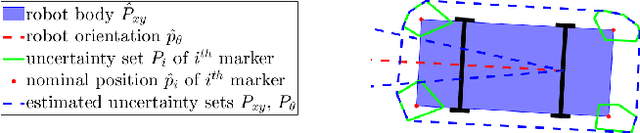

Set-theoretic Localization for Mobile Robots with Infrastructure-based Sensing

Oct 04, 2021

Abstract:In this paper, we introduce a set-theoretic approach for mobile robot localization with infrastructure-based sensing. The proposed method computes sets that over-bound the robot body and orientation under an assumption of known noise bounds on the sensor and robot motion model. We establish theoretical properties and computational approaches for this set-theoretic localization approach and illustrate its application to an automated valet parking example in simulations and to omnidirectional robot localization problems in real-world experiments. We demonstrate that the set-theoretic localization method can perform robustly against uncertainty set initialization and sensor noise compared to FastSLAM.

Development, Implementation, and Experimental Outdoor Evaluation of Quadcopter Controllers for Computationally Limited Embedded Systems

Jun 01, 2021

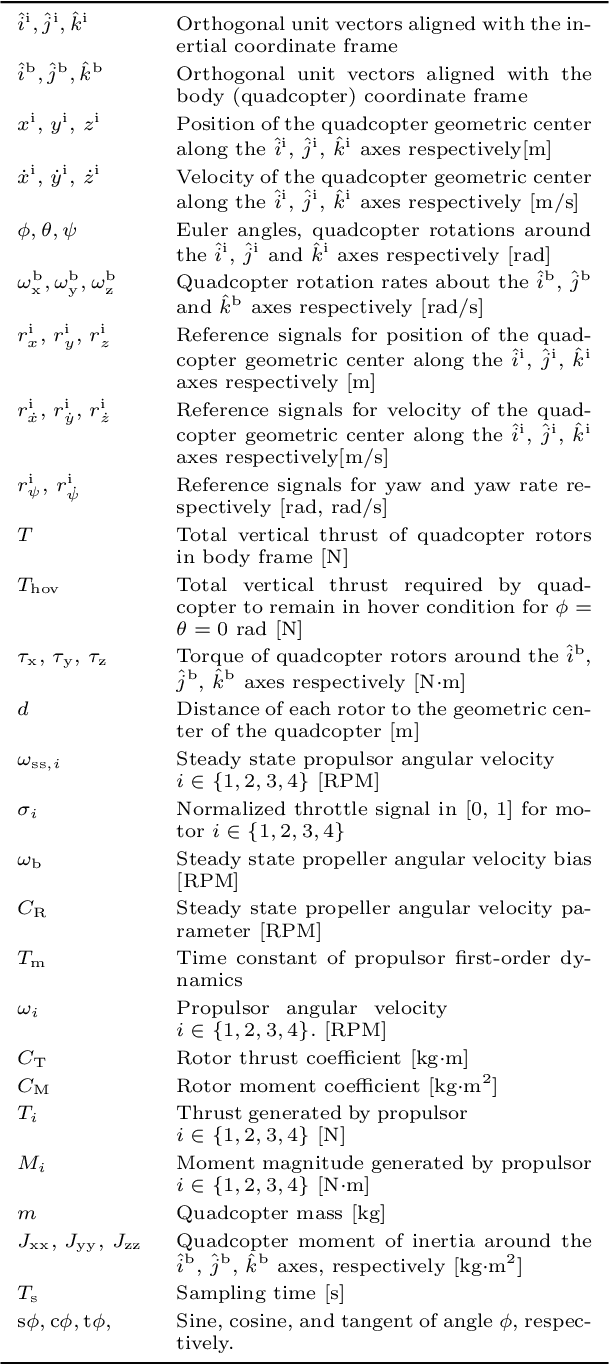

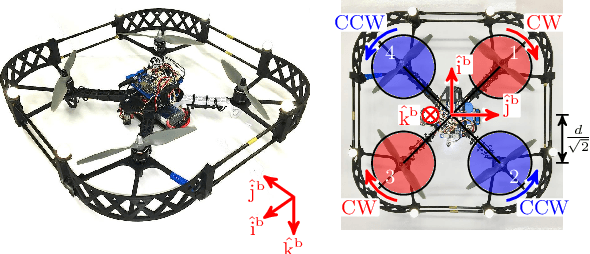

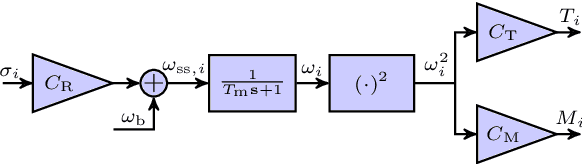

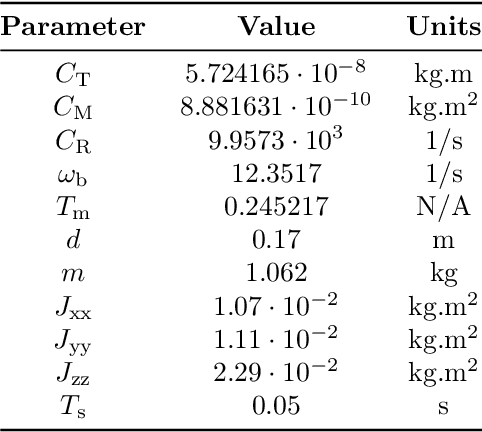

Abstract:Quadcopters are increasingly used for applications ranging from hobby to industrial products and services. This paper serves as a tutorial on the design, simulation, implementation, and experimental outdoor testing of digital quadcopter flight controllers, including Explicit Model Predictive Control, Linear Quadratic Regulator, and Proportional Integral Derivative. A quadcopter was flown in an outdoor testing facility and made to track an inclined, circular path at different tangential velocities under ambient wind conditions. Controller performance was evaluated via multiple metrics, such as position tracking error, velocity tracking error, and onboard computation time. Challenges related to the use of computationally limited embedded hardware and flight in an outdoor environment are addressed with proposed solutions.

Safe Reinforcement Learning Using Robust Action Governor

Feb 21, 2021

Abstract:Reinforcement Learning (RL) is essentially a trial-and-error learning procedure which may cause unsafe behavior during the exploration-and-exploitation process. This hinders the applications of RL to real-world control problems, especially to those for safety-critical systems. In this paper, we introduce a framework for safe RL that is based on integration of an RL algorithm with an add-on safety supervision module, called the Robust Action Governor (RAG), which exploits set-theoretic techniques and online optimization to manage safety-related requirements during learning. We illustrate this proposed safe RL framework through an application to automotive adaptive cruise control.

Safe Learning Reference Governor for Constrained Systems with Application to Fuel Truck Rollover Avoidance

Jan 22, 2021

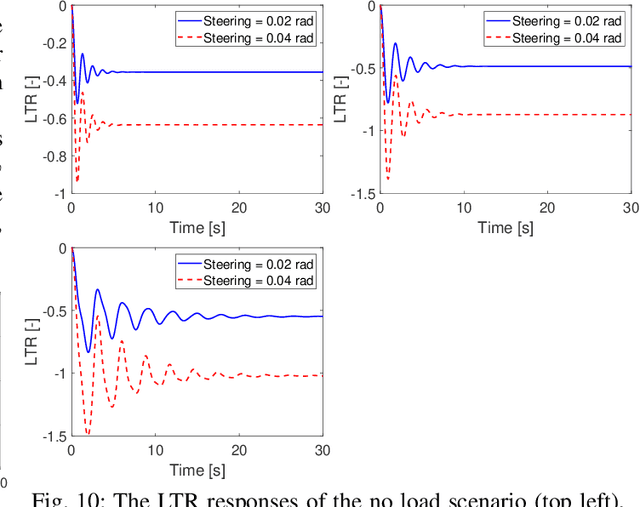

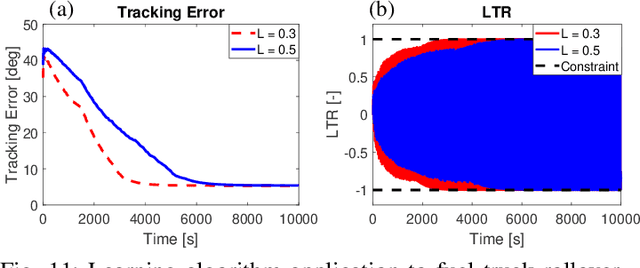

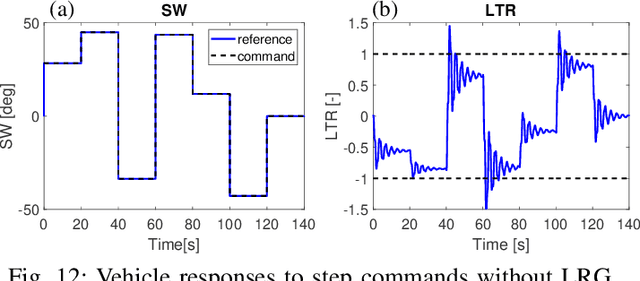

Abstract:This paper proposes a learning reference governor (LRG) approach to enforce state and control constraints in systems for which an accurate model is unavailable. The approach enables the reference governor to gradually improve command tracking performance through learning while enforcing the constraints both during learning and after learning is completed. The learning can be performed either on a black-box type model of the system or directly on the hardware. After introducing the LRG algorithm and outlining its theoretical properties, this paper investigates LRG application to fuel truck rollover avoidance. Through simulations based on a fuel truck model that accounts for liquid fuel sloshing effects, we show that the proposed LRG can effectively protect fuel trucks from rollover accidents under various operating conditions.

A Game Theoretic Approach for Parking Spot Search with Limited Parking Lot Information

May 11, 2020

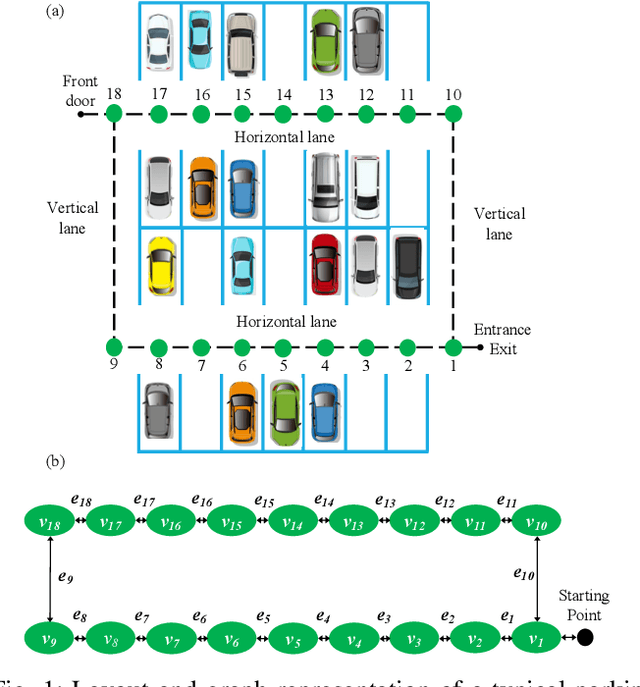

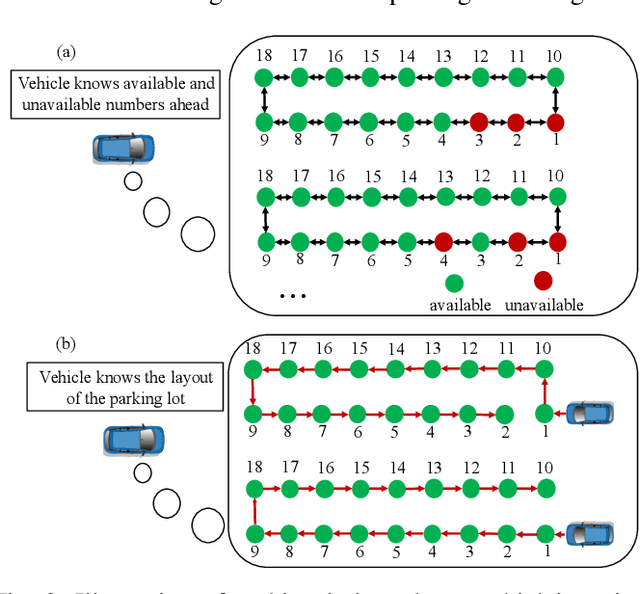

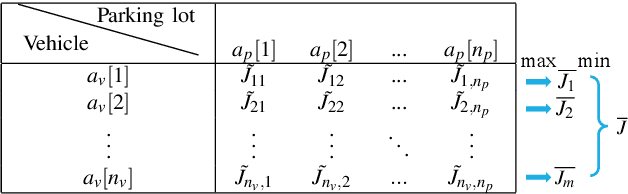

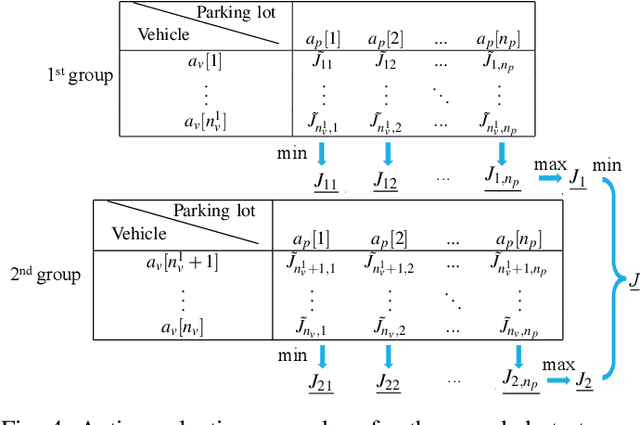

Abstract:We propose a game theoretic approach to address the problem of searching for available parking spots in a parking lot and picking the "optimal" one to park. The approach exploits limited information provided by the parking lot, i.e., its layout and the current number of cars in it. Considering the fact that such information is or can be easily made available for many structured parking lots, the proposed approach can be applicable without requiring major updates to existing parking facilities. For large parking lots, a sampling-based strategy is integrated with the proposed approach to overcome the associated computational challenge. The proposed approach is compared against a state-of-the-art heuristic-based parking spot search strategy in the literature through simulation studies and demonstrates its advantage in terms of achieving lower cost function values.

Rapid Uncertainty Propagation and Chance-Constrained Path Planning for Small Unmanned Aerial Vehicles

Nov 06, 2019

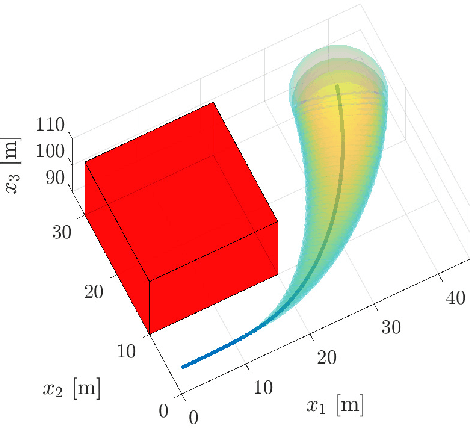

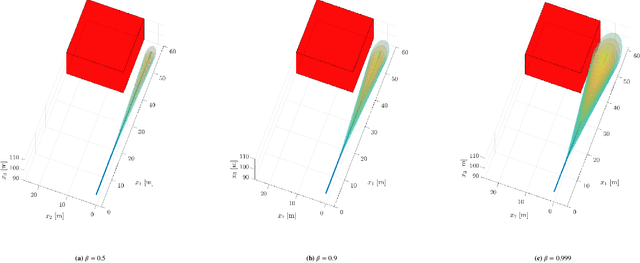

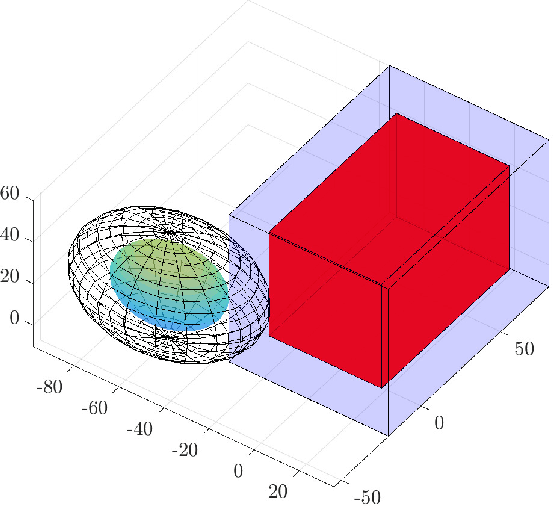

Abstract:With the number of small Unmanned Aircraft Systems (sUAS) in the national airspace projected to increase in the next few years, there is growing interest in a traffic management system capable of handling the demands of this aviation sector. It is expected that such a system will involve trajectory prediction, uncertainty propagation, and path planning algorithms. In this work, we use linear covariance propagation in combination with a quadratic programming-based collision detection algorithm to rapidly validate declared flight plans. Additionally, these algorithms are combined with a Dynamic, Informed RRT* algorithm, resulting in a computationally efficient algorithm for chance-constrained path planning. Detailed numerical examples for both fixed-wing and quadrotor sUAS models are presented.
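Linear covariance propagation, mentioned in this abstract, is commonly written for a discrete-time linear model as P_{k+1} = A P_k A^T + Q. The sketch below shows that recursion in plain Python. It is a generic textbook form under assumed inputs, not the paper's implementation: the matrices `a`, `p0`, and `q`, the double-integrator example, and the function names are all hypothetical.

```python
def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    """Transpose a matrix given as nested lists."""
    return [list(row) for row in zip(*a)]


def propagate_covariance(p0, a, q, steps):
    """Apply P_{k+1} = A P_k A^T + Q for the given number of steps.

    Returns the list [P_0, P_1, ..., P_steps]. The model matrices are
    illustrative assumptions, not values from the paper.
    """
    history = [p0]
    p = p0
    for _ in range(steps):
        apat = matmul(matmul(a, p), transpose(a))
        p = [[apat[i][j] + q[i][j] for j in range(len(q[0]))]
             for i in range(len(q))]
        history.append(p)
    return history


if __name__ == "__main__":
    # Hypothetical double integrator with unit time step: state = [position, velocity].
    a = [[1, 1], [0, 1]]
    p0 = [[1, 0], [0, 1]]
    q = [[0, 0], [0, 0]]
    for k, p in enumerate(propagate_covariance(p0, a, q, steps=2)):
        print(k, p)
```

The appeal of this recursion for rapid flight plan validation is that it propagates uncertainty with a few matrix products per step rather than with Monte Carlo sampling. How the resulting covariances feed the collision detection step is specific to the paper and is not reproduced here.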
