Heinrich Dinkel

Focus on the Sound around You: Monaural Target Speaker Extraction via Distance and Speaker Information

Jun 28, 2023

Abstract:Target Speaker Extraction (TSE) has previously yielded outstanding performance in certain application scenarios for speech enhancement and source separation. However, obtaining auxiliary speaker-related information is still challenging in noisy environments with significant reverberation. Inspired by the recently proposed distance-based sound separation, we propose the near sound (NS) extractor, which leverages distance information for TSE to reliably extract speaker information without requiring prior speaker enrollment, a mechanism called speaker embedding self-enrollment (SESE). Full- and sub-band modeling is introduced to enhance our NS-Extractor's adaptability towards environments with significant reverberation. Experimental results in several cross-dataset settings demonstrate the effectiveness of our improvements and the excellent performance of our proposed NS-Extractor in different application scenarios.

AV-SepFormer: Cross-Attention SepFormer for Audio-Visual Target Speaker Extraction

Jun 25, 2023

Abstract:Visual information can serve as an effective cue for target speaker extraction (TSE) and is vital to improving extraction performance. In this paper, we propose AV-SepFormer, a SepFormer-based dual-scale attention model that utilizes cross- and self-attention to fuse and model audio and visual features. AV-SepFormer splits the audio feature into a number of chunks equal to the length of the visual feature. Self- and cross-attention are then employed to model and fuse the multi-modal features. Furthermore, we use a novel 2D positional encoding that introduces positional information between and within chunks and provides significant gains over the traditional positional encoding. Our model has two key advantages: first, the time granularity of the chunked audio feature is synchronized to the visual feature, which alleviates the harm caused by the mismatch between audio and video sampling rates; second, by combining self- and cross-attention, the feature fusion and speech extraction processes are unified within an attention paradigm. The experimental results show that AV-SepFormer significantly outperforms other existing methods.
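The chunking idea described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `chunk_audio_frames` and `positions_2d`, the zero-padding of the tail, and the representation of a 2D position as a plain (chunk index, index within chunk) pair are all assumptions made for illustration only.

```python
from typing import List, Sequence, Tuple

Frame = List[float]


def chunk_audio_frames(audio_frames: Sequence[Frame], num_visual_frames: int) -> List[List[Frame]]:
    """Split audio frames into one equal-length chunk per visual frame.

    The tail is zero-padded so every chunk has the same length
    (the padding strategy is an assumption of this sketch).
    """
    if num_visual_frames <= 0 or not audio_frames:
        raise ValueError("need at least one audio frame and one visual frame")
    feat_dim = len(audio_frames[0])
    chunk_len = -(-len(audio_frames) // num_visual_frames)  # ceiling division
    pad = chunk_len * num_visual_frames - len(audio_frames)
    padded = [list(f) for f in audio_frames] + [[0.0] * feat_dim for _ in range(pad)]
    return [padded[i * chunk_len:(i + 1) * chunk_len] for i in range(num_visual_frames)]


def positions_2d(num_chunks: int, chunk_len: int) -> List[Tuple[int, int]]:
    """Return (chunk index, index within chunk) for every audio frame."""
    return [(c, t) for c in range(num_chunks) for t in range(chunk_len)]


# 10 audio frames (dimension 2) aligned to 4 visual frames: 4 chunks of 3 frames each.
audio = [[float(i), float(i)] for i in range(10)]
chunks = chunk_audio_frames(audio, num_visual_frames=4)
positions = positions_2d(len(chunks), len(chunks[0]))
```

After chunking, each chunk lines up with exactly one visual frame, so a cross-attention layer could pair them one to one; the two position indices are the kind of information a 2D positional encoding would need to embed.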

Understanding temporally weakly supervised training: A case study for keyword spotting

May 30, 2023

Abstract:The currently most prominent algorithm to train keyword spotting (KWS) models with deep neural networks (DNNs) requires strong supervision, i.e., precise knowledge of the spoken keyword location in time. Thus, most KWS approaches treat the presence of redundant data, such as noise, within their training set as an obstacle. A common training paradigm to deal with data redundancies is to use temporally weakly supervised learning, which only requires providing labels on a coarse scale. This study explores the limits of DNN training using temporally weak labeling with applications in KWS. We train a simple end-to-end classifier on the common Google Speech Commands dataset with increased difficulty by randomly appending and adding noise to the training dataset. Our results indicate that temporally weak labeling can achieve comparable results to strongly supervised baselines while having a less stringent labeling requirement. In the presence of noise, weakly supervised models are able to localize and extract target keywords without explicit supervision, leading to a performance increase compared to strongly supervised approaches.
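A common way to train with clip-level (temporally weak) labels is to pool frame-level probabilities into a single clip-level probability and compute the loss only on that pooled value. The sketch below assumes this setup; the abstract does not state which pooling function the study uses, so both `max_pool` and `linear_softmax_pool` are illustrative choices, and all function names are hypothetical.

```python
import math
from typing import Sequence


def max_pool(frame_probs: Sequence[float]) -> float:
    """Clip probability = highest frame probability."""
    return max(frame_probs)


def linear_softmax_pool(frame_probs: Sequence[float]) -> float:
    """Clip probability = sum(p^2) / sum(p), which weights confident frames more."""
    total = sum(frame_probs)
    return sum(p * p for p in frame_probs) / total if total > 0 else 0.0


def clip_bce(clip_prob: float, clip_label: float, eps: float = 1e-7) -> float:
    """Binary cross-entropy between the pooled probability and the weak label."""
    p = min(max(clip_prob, eps), 1.0 - eps)
    return -(clip_label * math.log(p) + (1.0 - clip_label) * math.log(1.0 - p))


def peak_frame(frame_probs: Sequence[float]) -> int:
    """Index of the most confident frame, a crude keyword localization."""
    return max(range(len(frame_probs)), key=lambda i: frame_probs[i])


# Frame-level keyword probabilities for one clip that is weakly labeled as positive.
frame_probs = [0.1, 0.2, 0.9, 0.1]
loss = clip_bce(linear_softmax_pool(frame_probs), clip_label=1.0)
```

Because the loss only sees the pooled value, the model is free to decide which frames carry the keyword, which is how localization can emerge without frame-level labels.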

Streaming Audio Transformers for Online Audio Tagging

May 29, 2023

Abstract:Transformers have emerged as a prominent model framework for audio tagging (AT), boasting state-of-the-art (SOTA) performance on the widely-used Audioset dataset. However, their impressive performance often comes at the cost of high memory usage, slow inference speed, and considerable model delay, rendering them impractical for real-world AT applications. In this study, we introduce streaming audio transformers (SAT) that combine the vision transformer (ViT) architecture with Transformer-XL-like chunk processing, enabling efficient processing of long-range audio signals. Our proposed SAT is benchmarked against other transformer-based SOTA methods, achieving significant improvements in terms of mean average precision (mAP) at a delay of 2s and 1s, while also exhibiting significantly lower memory usage and computational overhead. Checkpoints are publicly available at https://github.com/RicherMans/SAT.
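The chunk-processing idea can be sketched as a streaming loop that hands the model one short chunk at a time together with a bounded memory of earlier context. This is only an illustration under assumptions: `stream_chunks`, `chunk_len`, and `memory_len` are hypothetical names, and the memory here holds raw input frames as a stand-in, whereas a Transformer-XL-style model would cache hidden states from previous chunks.

```python
from typing import Iterator, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def stream_chunks(frames: Sequence[T], chunk_len: int, memory_len: int) -> Iterator[Tuple[List[T], List[T]]]:
    """Yield (memory, chunk) pairs; memory is the last `memory_len` frames seen so far."""
    if chunk_len <= 0 or memory_len < 0:
        raise ValueError("chunk_len must be positive and memory_len non-negative")
    memory: List[T] = []
    for start in range(0, len(frames), chunk_len):
        chunk = list(frames[start:start + chunk_len])
        yield memory, chunk
        # Build a new list so previously yielded memories are never mutated.
        memory = (memory + chunk)[-memory_len:] if memory_len > 0 else []


# Seven frames processed in chunks of three with two frames of memory.
steps = list(stream_chunks(list(range(7)), chunk_len=3, memory_len=2))
```

The delay of such a system is bounded by the chunk length, and its memory cost by `chunk_len + memory_len`, rather than by the length of the full recording.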

Unified Keyword Spotting and Audio Tagging on Mobile Devices with Transformers

Mar 03, 2023

Abstract:Keyword spotting (KWS) is a core human-machine-interaction front-end task for most modern intelligent assistants. Recently, a unified keyword spotting and audio tagging (UniKW-AT) framework has been proposed that adds capabilities in the form of audio tagging (AT) to a KWS model. However, previous work did not consider the real-world deployment of a UniKW-AT model, where factors such as model size and inference speed are more important than performance alone. This work introduces three mobile-device deployable models named Unified Transformers (UiT). Our best model achieves an mAP of 34.09 on Audioset, and an accuracy of 97.76 on the public Google Speech Commands V1 dataset. Further, we benchmark our proposed approaches on four mobile platforms, revealing that the proposed UiT models can achieve a speedup of 2 to 6 times over a competitive MobileNetV2.

An empirical study of weakly supervised audio tagging embeddings for general audio representations

Sep 30, 2022

Abstract:We study the usability of pre-trained weakly supervised audio tagging (AT) models as feature extractors for general audio representations. We mainly analyze the feasibility of transferring those embeddings to other tasks within the speech and sound domains. Specifically, we benchmark weakly supervised pre-trained models (MobileNetV2 and EfficientNet-B0) against modern self-supervised learning methods (BYOL-A) as feature extractors. Fourteen downstream tasks are used for evaluation ranging from music instrument classification to language classification. Our results indicate that AT pre-trained models are an excellent transfer learning choice for music, event, and emotion recognition tasks. Further, finetuning AT models can also benefit speech-related tasks such as keyword spotting and intent classification.

UniKW-AT: Unified Keyword Spotting and Audio Tagging

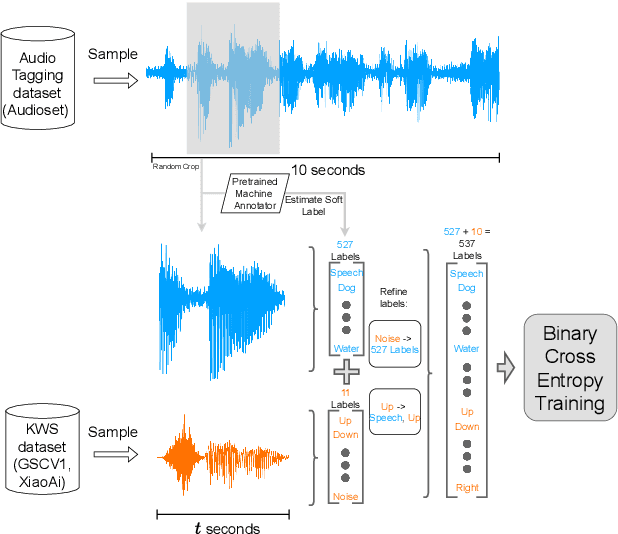

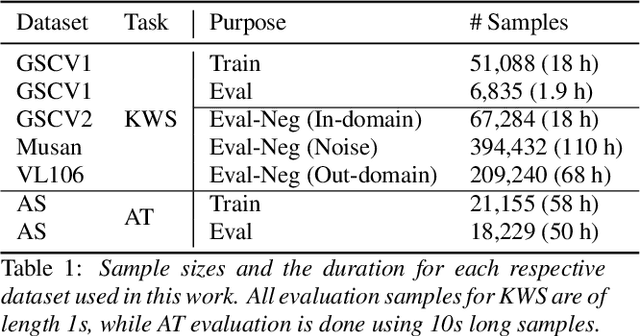

Sep 23, 2022

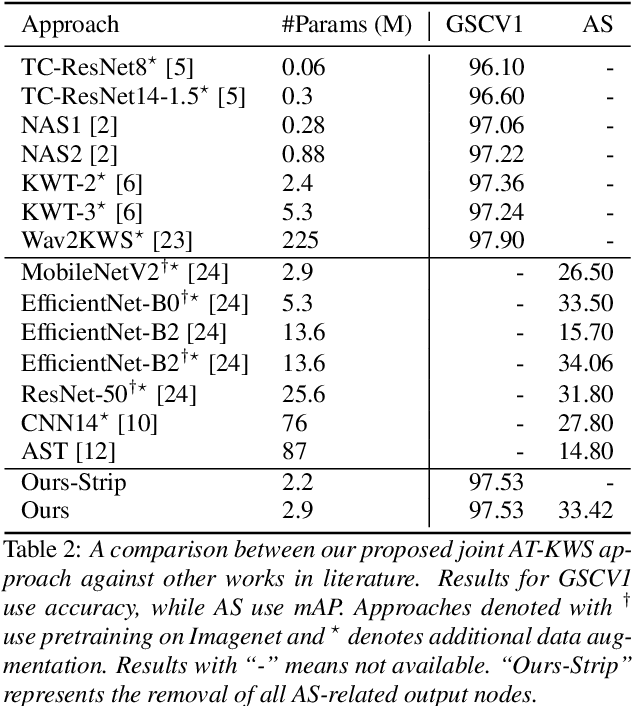

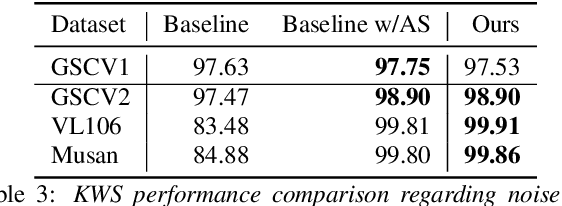

Abstract:Within the audio research community and the industry, keyword spotting (KWS) and audio tagging (AT) are seen as two distinct tasks and research fields. However, from a technical point of view, both of these tasks are identical: they predict a label (keyword in KWS, sound event in AT) for some fixed-sized input audio segment. This work proposes UniKW-AT: An initial approach for jointly training both KWS and AT. UniKW-AT enhances the noise-robustness for KWS, while also being able to predict specific sound events and enabling conditional wake-ups on sound events. Our approach extends the AT pipeline with additional labels describing the presence of a keyword. Experiments are conducted on the Google Speech Commands V1 (GSCV1) and the balanced Audioset (AS) datasets. The proposed MobileNetV2 model achieves an accuracy of 97.53% on the GSCV1 dataset and an mAP of 33.4 on the AS evaluation set. Further, we show that significant noise-robustness gains can be observed on a real-world KWS dataset, greatly outperforming standard KWS approaches. Our study shows that KWS and AT can be merged into a single framework without significant performance degradation.
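Extending an audio tagging label set with keyword labels can be sketched as building one multi-hot target vector over the concatenated class lists. The class names below and the function `build_joint_label` are hypothetical, and whether a keyword clip also receives a sound-event label such as speech is an assumption left to the caller.

```python
from typing import List, Optional, Sequence


def build_joint_label(
    at_events: Sequence[str],
    keyword: Optional[str],
    at_classes: Sequence[str],
    kw_classes: Sequence[str],
) -> List[float]:
    """Return a multi-hot target over AT classes followed by keyword classes."""
    index = {name: i for i, name in enumerate(list(at_classes) + list(kw_classes))}
    target = [0.0] * len(index)
    for event in at_events:
        target[index[event]] = 1.0
    if keyword is not None:
        target[index[keyword]] = 1.0
    return target


# Hypothetical class lists; real systems use the full Audioset and GSCV1 label sets.
AT_CLASSES = ["Speech", "Dog", "Music"]
KW_CLASSES = ["yes", "no"]

audioset_clip = build_joint_label(["Dog", "Music"], None, AT_CLASSES, KW_CLASSES)
keyword_clip = build_joint_label([], "yes", AT_CLASSES, KW_CLASSES)
```

With a shared target layout like this, a single classifier with one sigmoid output per class can be trained on both datasets at once.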

Pseudo strong labels for large scale weakly supervised audio tagging

Apr 28, 2022

Abstract:Large-scale audio tagging datasets inevitably contain imperfect labels, such as clip-wise annotated (temporally weak) tags with no exact on- and offsets, due to a high manual labeling cost. This work proposes pseudo strong labels (PSL), a simple label augmentation framework that enhances the supervision quality for large-scale weakly supervised audio tagging. A machine annotator is first trained on a large weakly supervised dataset, which then provides finer supervision for a student model. Using PSL, we achieve an mAP of 35.95 with the balanced train subset of Audioset and a MobileNetV2 back-end, significantly outperforming approaches without PSL. An analysis is provided which reveals that PSL mitigates missing labels. Lastly, we show that models trained with PSL also generalize better to the Freesound datasets (FSD) than their weakly trained counterparts.
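The teacher-to-student step can be sketched as turning per-segment teacher scores into soft training targets for the student. The abstract does not specify the segment length or whether teacher scores are restricted to the clip's weak labels, so the optional masking below, along with the name `pseudo_strong_labels`, is an assumption for illustration.

```python
from typing import List, Optional, Sequence


def pseudo_strong_labels(
    teacher_scores: Sequence[Sequence[float]],
    weak_label: Optional[Sequence[float]] = None,
) -> List[List[float]]:
    """Build per-segment soft targets from teacher scores.

    teacher_scores[s][c] is the teacher probability of class c in segment s.
    If weak_label is given (one 0/1 entry per class), classes absent from the
    clip-level annotation are zeroed; leaving it as None lets the teacher add
    classes that the original annotation missed.
    """
    if weak_label is None:
        return [list(segment) for segment in teacher_scores]
    return [[score * present for score, present in zip(segment, weak_label)]
            for segment in teacher_scores]


# Two segments, two classes; the clip is weakly labeled with class 0 only.
scores = [[0.9, 0.2], [0.1, 0.7]]
unmasked = pseudo_strong_labels(scores)
masked = pseudo_strong_labels(scores, weak_label=[1.0, 0.0])
```

In the unmasked case the second segment keeps a high score for class 1 even though the clip-level annotation omits it, which shows how segment-level teacher targets could compensate for missing labels.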

Voice activity detection in the wild: A data-driven approach using teacher-student training

May 10, 2021

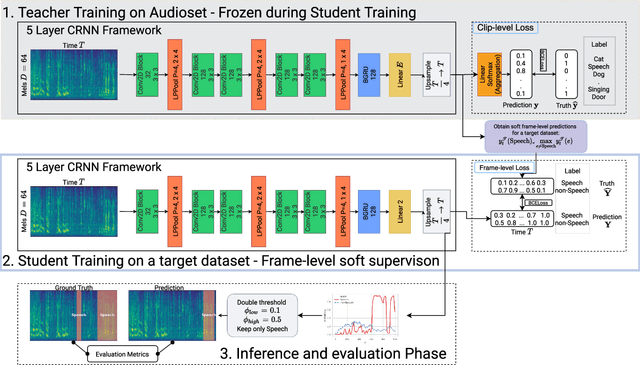

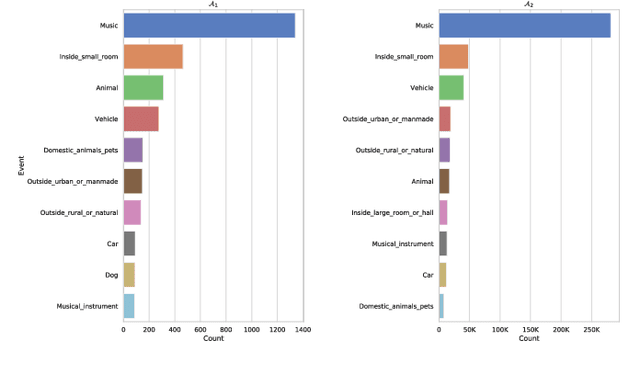

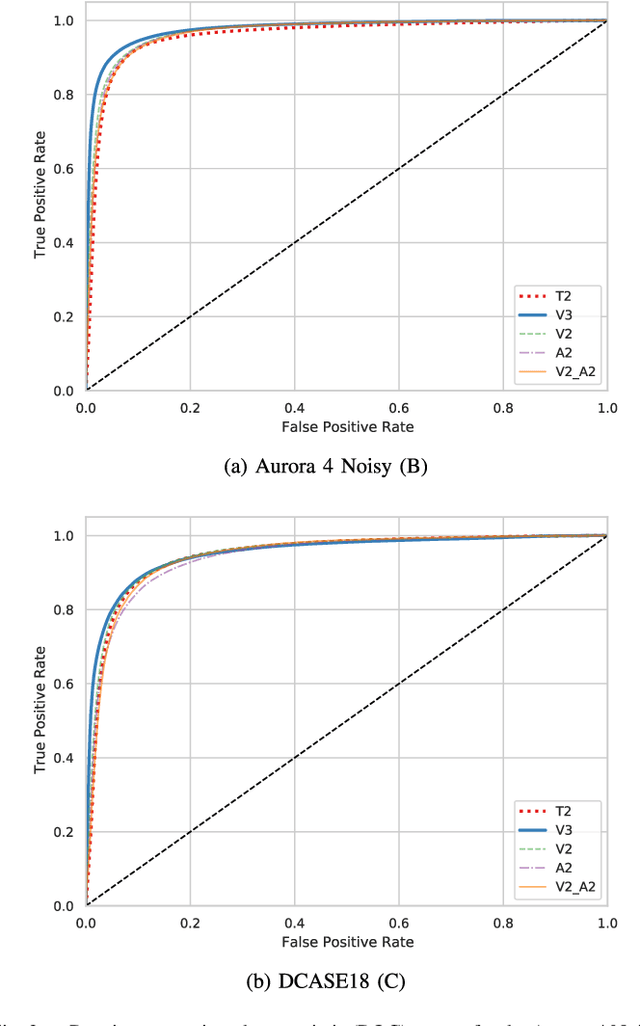

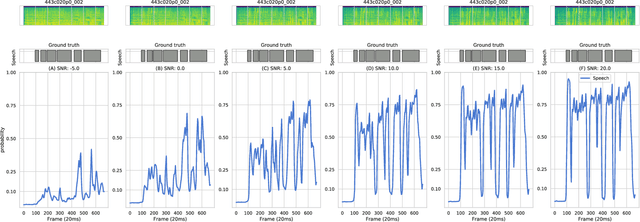

Abstract:Voice activity detection (VAD) is an essential pre-processing component for speech-related tasks such as automatic speech recognition (ASR). Traditional supervised VAD systems obtain frame-level labels from an ASR pipeline by using, e.g., a Hidden Markov model. These ASR models are commonly trained on clean and fully transcribed data, limiting VAD systems to training on clean or synthetically noised datasets. Therefore, a major challenge for supervised VAD systems is their generalization towards noisy, real-world data. This work proposes a data-driven teacher-student approach for VAD, which utilizes vast and unconstrained audio data for training. Unlike previous approaches, only weak labels during teacher training are required, enabling the utilization of any real-world, potentially noisy dataset. Our approach first trains a teacher model on a source dataset (Audioset) using clip-level supervision. After training, the teacher provides frame-level guidance to a student model on an unlabeled target dataset. A multitude of student models trained on mid- to large-sized datasets are investigated (Audioset, Voxceleb, NIST SRE). Our approach is then evaluated on clean, artificially noised, and real-world data. We observe significant performance gains in artificially noised and real-world scenarios. Lastly, we compare our approach against other unsupervised and supervised VAD methods, demonstrating our method's superiority.
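One plausible form of the frame-level guidance is to binarize the teacher's per-frame speech probabilities and use the result as student targets. The sketch below assumes a single fixed threshold; the abstract does not describe the actual post-processing, and the names `frame_targets` and `frames_to_segments` are hypothetical.

```python
from typing import List, Sequence, Tuple


def frame_targets(speech_probs: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Binarize teacher speech probabilities into per-frame student targets."""
    return [1 if p >= threshold else 0 for p in speech_probs]


def frames_to_segments(speech_probs: Sequence[float], threshold: float = 0.5) -> List[Tuple[int, int]]:
    """Group consecutive speech frames into (start, end) index pairs, end exclusive."""
    segments: List[Tuple[int, int]] = []
    start = None
    for i, p in enumerate(speech_probs):
        if p >= threshold and start is None:
            start = i  # speech begins
        elif p < threshold and start is not None:
            segments.append((start, i))  # speech ends
            start = None
    if start is not None:
        segments.append((start, len(speech_probs)))
    return segments


# Teacher output for five frames of an unlabeled clip.
teacher_probs = [0.1, 0.8, 0.9, 0.2, 0.7]
targets = frame_targets(teacher_probs)
segments = frames_to_segments(teacher_probs)
```

Multiplying the frame indices by the frame shift of the feature extractor would convert these segments into timestamps.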

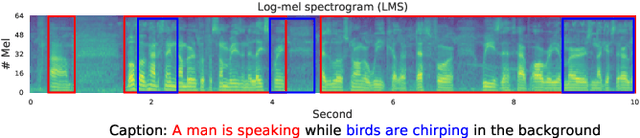

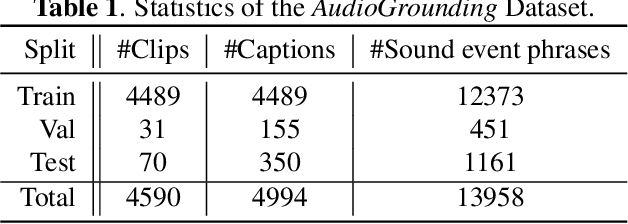

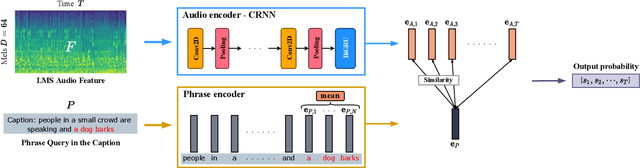

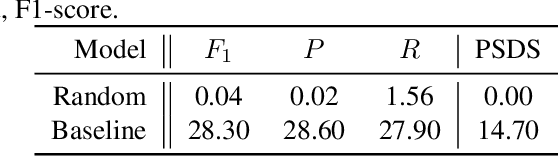

Text-to-Audio Grounding: Building Correspondence Between Captions and Sound Events

Feb 23, 2021

Abstract:Automated Audio Captioning is a cross-modal task that generates natural language descriptions summarizing the sound events in audio clips. However, grounding the actual sound events in the given audio based on its corresponding caption has not been investigated. This paper contributes an AudioGrounding dataset, which provides the correspondence between sound events and the captions provided in Audiocaps, along with the location (timestamps) of each present sound event. Based on this dataset, we propose the text-to-audio grounding (TAG) task, which interactively considers the relationship between audio processing and language understanding. A baseline approach is provided, resulting in an event-F1 score of 28.3% and a Polyphonic Sound Detection Score (PSDS) of 14.7%.
